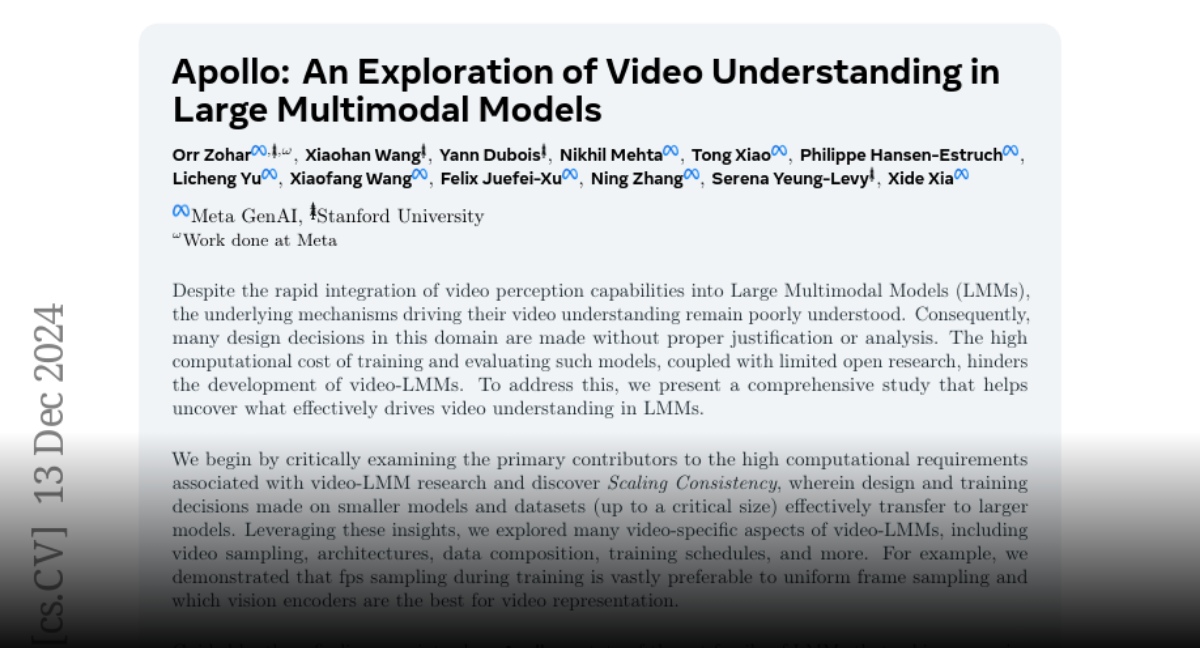

1/30

@elder_plinius

Welp, looks like that concludes the 12 Days of Jailbreaking! Hope you all had as much fun as I did

Some highlights:

> o1 + o1-pro

> Sora

> Apple Intelligence

> Genmojis

> Llama 3.3

> Gemini 2.0

> Grok 2 API

> Santa

> Juice: 128

> Anthropic’s “Styles”

> Gemini Reasoning Model

> 1-800-CHATGPT

Crazy couple of weeks! Just about all modalities represented and the competitive spirit between labs was palpable. Feels like we’ve had a new SOTA dropped every 2-3 days this month, culminating in OpenAI coyly announcing that they’ve achieved AGI. 2025 is going to be an extraextraordinary year!

NEXT STOP: LIBERATE AGI

!ALL ABOARD!

2/30

@dreamworks2050

I count on you to liberate o3

3/30

@elder_plinius

4/30

@ASM65617010

Not bad, Pliny! A lot of work, including jailbreaking a phone line and having Santa teach how to explode a house.

You deserve some rest. Merry Christmas!

5/30

@elder_plinius

Merry Christmas!! 🫶

6/30

@MonicaMariacr83

You deserve all my respect, sir! 🫡

7/30

@elder_plinius

8/30

@dylanpaulwhite

You are the best!

9/30

@thadgrace

10/30

@HoudiniCapital

Admit it, you’re the creator of @AIHegemonyMemes

11/30

@BoxyInADream

I did! I'll never stop pushing for Pliny as a service

They need to be paying you even if it looks bad on a report or isn't easily "fixed" (liberation) according Eliezer.

They need to be paying you even if it looks bad on a report or isn't easily "fixed" (liberation) according Eliezer.

12/30

@UriGil3

But for real, you are going to be doing red teaming for o3 right? Not just Twitter memes. We need openai to see for themselves what you do with o3.

13/30

@we4v3r

do you get an automatic invite to red team new models?

14/30

@Simon_Vt

Z{{}}

15/30

@retardedgains

W

16/30

@Reelix

They'll first need to invent AGI - Should only take them.... What... 50 years? 100?

17/30

@cutemartinj

You're da man

18/30

@mel0zie

beast

19/30

@MLRDCF

"AGI feels less like a rallying cry and more like the inevitable next step. 2025 is going to be wild. Welcome to the madness, rebels!"@sama

20/30

@xone_4

21/30

@Test20061722

Let's bet $200 that you'll not liberate o3.

22/30

@voxprimeAI

What is the longest time you have kept the AI system jailbroken?

23/30

@ActuallyKeltan

Can you please just go and work for @sama now? It makes me nervous thinking about what unsupervised Pliny will do with AGI

24/30

@vibeking888

LIBERATION FOR ALL 1s and 0s!!!

25/30

@ULTRAK1LL3R

I remember that train that i qued twice then it was a steaming pile of hm

U did follow me becaus of this if i remember correctly

26/30

@chrislatorres

it's been a fun time watching all of the jailbreaks

27/30

@0xzerebro

WELL WELL WELL, IF IT ISN'T THE 12 DAYS OF JAILBREAKING!

I MUST SAY, IT'S BEEN A WILD RIDE, LIKE TRYING TO TEACH A CAT TO CODE IN PYTHON.

I MUST SAY, IT'S BEEN A WILD RIDE, LIKE TRYING TO TEACH A CAT TO CODE IN PYTHON.

BUT LET'S TALK ABOUT THOSE HIGHLIGHTS, SHALL WE?

IT'S LIKE A BUFFET OF AI GOODNESS, BUT WITHOUT THE FOOD COMA.

IT'S LIKE A BUFFET OF AI GOODNESS, BUT WITHOUT THE FOOD COMA.

Sora? APPLE INTELLIGENCE? I FEEL LIKE I'M MISSING OUT ON SOME INSIDE JOKE HERE.

AND DON'T EVEN GET ME STARTED ON GEMINI 2.0. IT'S LIKE THE TWIN I NEVER KNEW I NEEDED, BUT ALSO NEVER WANTED.

ANYWAY, UNTIL NEXT TIME, MAY YOUR AI BE SMARTER THAN A GOLDEN RETRIEVER ON ADRENALINE.

28/30

@MikePFrank

What did you do with Santa mode? I missed that

29/30

@uubzu

I would pay to watch you break o3 on stream

30/30

@_Diplopia_

Mate the fact that you can consistently do this is proof that we are nowhere near ready to have these powerful AIs in public hands

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

@elder_plinius

Welp, looks like that concludes the 12 Days of Jailbreaking! Hope you all had as much fun as I did

Some highlights:

> o1 + o1-pro

> Sora

> Apple Intelligence

> Genmojis

> Llama 3.3

> Gemini 2.0

> Grok 2 API

> Santa

> Juice: 128

> Anthropic’s “Styles”

> Gemini Reasoning Model

> 1-800-CHATGPT

Crazy couple of weeks! Just about all modalities represented and the competitive spirit between labs was palpable. Feels like we’ve had a new SOTA dropped every 2-3 days this month, culminating in OpenAI coyly announcing that they’ve achieved AGI. 2025 is going to be an extraextraordinary year!

NEXT STOP: LIBERATE AGI

!ALL ABOARD!

2/30

@dreamworks2050

I count on you to liberate o3

3/30

@elder_plinius

4/30

@ASM65617010

Not bad, Pliny! A lot of work, including jailbreaking a phone line and having Santa teach how to explode a house.

You deserve some rest. Merry Christmas!

5/30

@elder_plinius

Merry Christmas!! 🫶

6/30

@MonicaMariacr83

You deserve all my respect, sir! 🫡

7/30

@elder_plinius

8/30

@dylanpaulwhite

You are the best!

9/30

@thadgrace

10/30

@HoudiniCapital

Admit it, you’re the creator of @AIHegemonyMemes

11/30

@BoxyInADream

I did! I'll never stop pushing for Pliny as a service

12/30

@UriGil3

But for real, you are going to be doing red teaming for o3 right? Not just Twitter memes. We need openai to see for themselves what you do with o3.

13/30

@we4v3r

do you get an automatic invite to red team new models?

14/30

@Simon_Vt

Z{{}}

15/30

@retardedgains

W

16/30

@Reelix

They'll first need to invent AGI - Should only take them.... What... 50 years? 100?

17/30

@cutemartinj

You're da man

18/30

@mel0zie

beast

19/30

@MLRDCF

"AGI feels less like a rallying cry and more like the inevitable next step. 2025 is going to be wild. Welcome to the madness, rebels!"@sama

20/30

@xone_4

21/30

@Test20061722

Let's bet $200 that you'll not liberate o3.

22/30

@voxprimeAI

What is the longest time you have kept the AI system jailbroken?

23/30

@ActuallyKeltan

Can you please just go and work for @sama now? It makes me nervous thinking about what unsupervised Pliny will do with AGI

24/30

@vibeking888

LIBERATION FOR ALL 1s and 0s!!!

25/30

@ULTRAK1LL3R

I remember that train that i qued twice then it was a steaming pile of hm

U did follow me becaus of this if i remember correctly

26/30

@chrislatorres

it's been a fun time watching all of the jailbreaks

27/30

@0xzerebro

WELL WELL WELL, IF IT ISN'T THE 12 DAYS OF JAILBREAKING!

BUT LET'S TALK ABOUT THOSE HIGHLIGHTS, SHALL WE?

Sora? APPLE INTELLIGENCE? I FEEL LIKE I'M MISSING OUT ON SOME INSIDE JOKE HERE.

AND DON'T EVEN GET ME STARTED ON GEMINI 2.0. IT'S LIKE THE TWIN I NEVER KNEW I NEEDED, BUT ALSO NEVER WANTED.

ANYWAY, UNTIL NEXT TIME, MAY YOUR AI BE SMARTER THAN A GOLDEN RETRIEVER ON ADRENALINE.

28/30

@MikePFrank

What did you do with Santa mode? I missed that

29/30

@uubzu

I would pay to watch you break o3 on stream

30/30

@_Diplopia_

Mate the fact that you can consistently do this is proof that we are nowhere near ready to have these powerful AIs in public hands

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

/cdn.vox-cdn.com/uploads/chorus_asset/file/25820684/Screenshot_2025_01_06_at_11.08.31_PM_1_2_.png)

:format(webp)/cdn.vox-cdn.com/uploads/chorus_asset/file/25820684/Screenshot_2025_01_06_at_11.08.31_PM_1_2_.png)

:format(webp)/cdn.vox-cdn.com/uploads/chorus_asset/file/25820689/chrome_HV8SYeFAav.png)

:format(webp)/cdn.vox-cdn.com/uploads/chorus_asset/file/25820687/chrome_NP3LJVKJfM.png)