Ultimately, the DisTrO method could open the door to many more people being able to train massively powerful AI models.

venturebeat.com

‘This could change everything!’ Nous Research unveils new tool to train powerful AI models with 10,000x efficiency

Carl Franzen (@carlfranzen)

August 27, 2024 10:22 AM

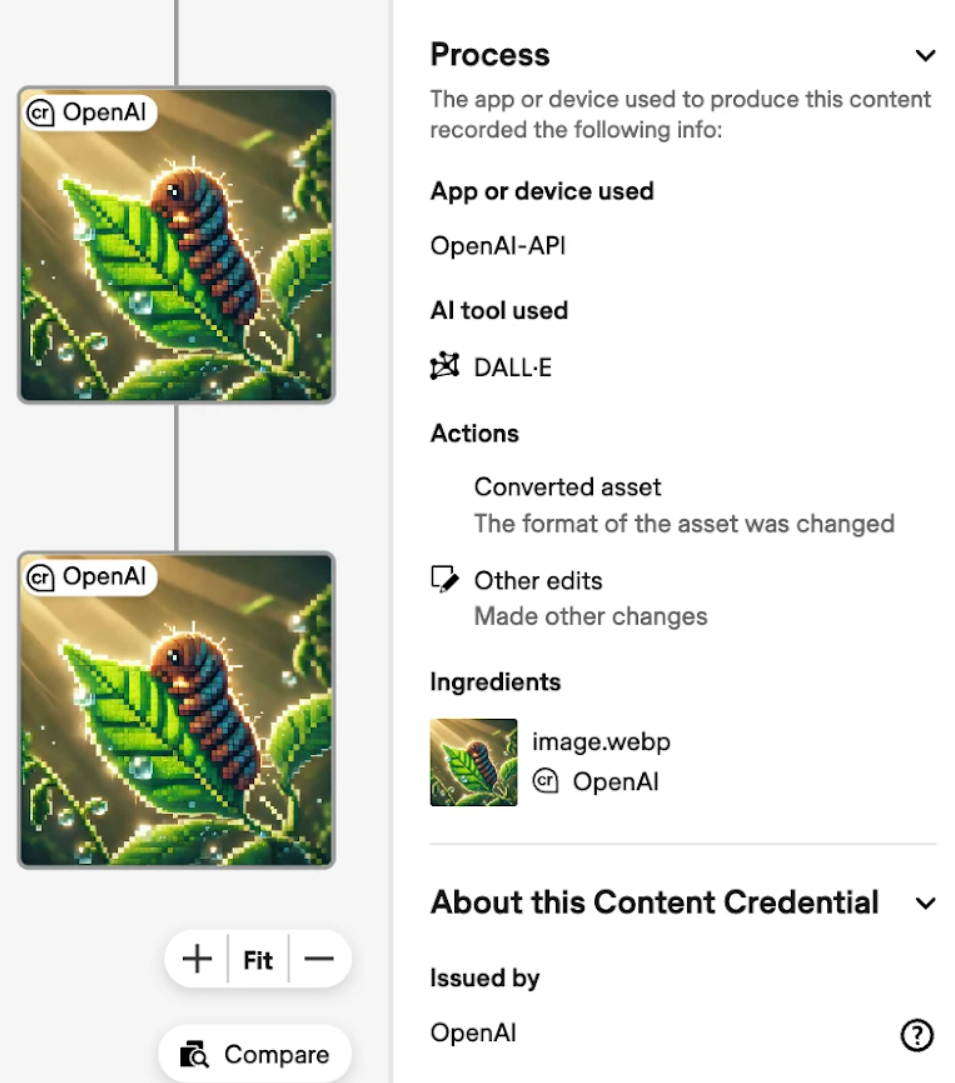

Credit: VentureBeat made with ChatGPT

Nous Research turned heads earlier this month with the release of its permissive, open-source Llama 3.1 variant Hermes 3.

Now, the small research team dedicated to making “personalized, unrestricted AI” models has announced another seemingly massive breakthrough:

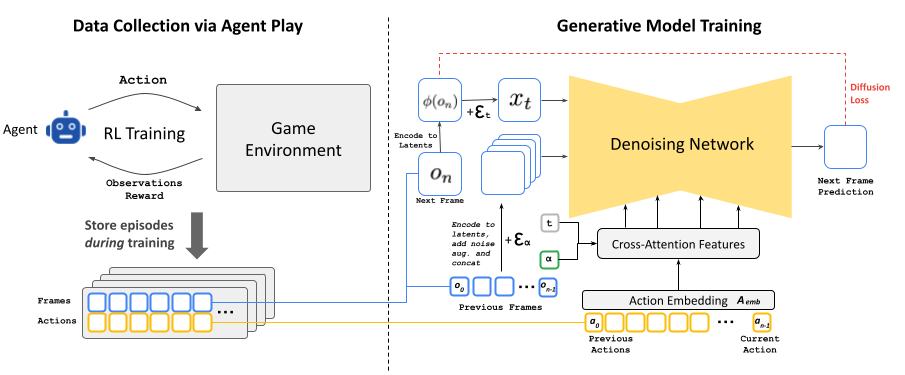

DisTrO (Distributed Training Over-the-Internet), a new optimizer that reduces the amount of information that must be sent between various GPUs (graphics processing units) during each step of training an AI model.

Nous’s DisTrO optimizer means powerful AI models can now be trained outside of big companies, across the open web on consumer-grade connections, potentially by individuals or institutions working together from around the world.

DisTrO has already been tested, and a Nous Research technical paper shows it cutting the amount of information transmitted during each step of the training process by a factor of 857 (86.8 megabytes versus 74.4 gigabytes) compared with a popular existing approach that synchronizes GPUs with All-Reduce, while suffering only a slight loss in overall performance. See the results in the table below from the Nous Research technical paper:

Ultimately, the DisTrO method could open the door to many more people being able to train massively powerful AI models as they see fit.

As the firm wrote in a post on X yesterday: “Without relying on a single company to manage and control the training process, researchers and institutions can have more freedom to collaborate and experiment with new techniques, algorithms, and models. This increased competition fosters innovation, drives progress, and ultimately benefits society as a whole.”

What if you could use all the computing power in the world to train a shared, open source AI model?

Preliminary report: DisTrO/A_Preliminary_Report_on_DisTrO.pdf at main · NousResearch/DisTrO

Nous Research (@NousResearch) on X, August 26, 2024

The problem with AI training: steep hardware requirements

As covered on VentureBeat previously, Nvidia’s GPUs in particular are in high demand in the generative AI era, as the expensive graphics cards’ powerful parallel processing capabilities are needed to train AI models efficiently and (relatively) quickly. This blog post at APNIC describes the process well.

A big part of the AI training process relies on GPU clusters — multiple GPUs — exchanging information with one another about the model and the information “learned” within training data sets.

However, this “inter-GPU communication” requires that GPU clusters be architected, or set up, in a precise way under controlled conditions, minimizing latency and maximizing throughput. That is why companies such as Elon Musk’s Tesla are investing heavily in physical “superclusters” with many thousands (or hundreds of thousands) of GPUs sitting side by side in the same location, typically a massive, airplane-hangar-sized warehouse or facility.

Because of these requirements, training generative AI — especially the largest and most powerful models — is typically an extremely capital-heavy endeavor, one that only some of the most well-funded companies can engage in, such as Tesla, Meta, OpenAI, Microsoft, Google, and Anthropic.

The training process at each of these companies looks a little different, of course, but they all follow the same basic steps and use the same basic hardware components. Each company tightly controls its own AI model training process, and it can be difficult for would-be competitors, much less laypeople, to even think of training their own models of similar size (measured in parameters, the settings under the hood).

But Nous Research, whose whole approach is essentially the opposite — making the most powerful and capable AI it can on the cheap, openly, freely, for anyone to use and customize as they see fit without many guardrails — has found an alternative.

What DisTrO does differently

While traditional methods of AI training require synchronizing full gradients across all GPUs and rely on extremely high-bandwidth connections, DisTrO reduces this communication overhead by four to five orders of magnitude.
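For readers unfamiliar with the conventional approach, the sketch below simulates what "synchronizing full gradients" means in data-parallel training: every worker contributes its entire gradient and every worker receives the element-wise average back, which is the semantics of an All-Reduce. It is a toy, in-process illustration of the baseline, not of DisTrO, whose algorithm the authors have not fully disclosed. The function names, the 1.2-billion-parameter count, and the 4-byte (fp32) gradient values in the size estimate are illustrative assumptions.

```python
from typing import List


def all_reduce_mean(worker_grads: List[List[float]]) -> List[List[float]]:
    """Simulate an All-Reduce that averages gradients across workers.

    Every worker contributes its full local gradient, and every worker
    receives the same element-wise mean back. Real systems do this over
    a network interconnect; here it happens in a single process.
    """
    n_workers = len(worker_grads)
    n_params = len(worker_grads[0])
    mean = [sum(g[i] for g in worker_grads) / n_workers for i in range(n_params)]
    # Each worker ends up holding its own copy of the identical averaged gradient.
    return [list(mean) for _ in range(n_workers)]


def full_sync_bytes(n_params: int, bytes_per_value: int = 4) -> int:
    """Size of one worker's full gradient (4 bytes per value assumes fp32)."""
    return n_params * bytes_per_value


grads = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]  # three hypothetical workers
print(all_reduce_mean(grads)[0])  # [3.0, 4.0]
print(full_sync_bytes(1_200_000_000) / 1e9, "GB")  # 4.8 GB
```

The size estimate shows why full synchronization gets expensive: the payload grows linearly with the parameter count, and it has to move on every training step.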

The paper authors haven’t fully revealed how their algorithms reduce the amount of information at each step of training while retaining overall model performance, but plan to release more on this soon.

The reduction was achieved without relying on amortized analysis or compromising the convergence rate of training, allowing large-scale models to be trained over much slower internet connections: 100 Mbps download and 10 Mbps upload, speeds available to many consumers around the world.
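To put those link speeds in perspective, here is a back-of-the-envelope calculation using the per-step figures the article reports (74.4 GB for All-Reduce, 86.8 MB for DisTrO). It assumes decimal units, that a participant must upload the whole per-step payload over a 10 Mbps link, and an idealized link with no latency or protocol overhead; the article does not say whether the reported figures are per GPU or in aggregate. The constant and function names are hypothetical.

```python
# Per-step payloads as reported in the article.
# Assumes decimal units (1 GB = 1,000 MB = 10**9 bytes).
ALL_REDUCE_BYTES = 74.4e9
DISTRO_BYTES = 86.8e6
UPLOAD_BPS = 10e6  # 10 Mbps consumer upload link


def transfer_seconds(payload_bytes: float, link_bps: float) -> float:
    """Idealized time to push a payload over a link (no latency or overhead)."""
    return payload_bytes * 8 / link_bps


ratio = ALL_REDUCE_BYTES / DISTRO_BYTES
print(f"reduction:  {ratio:.0f}x")  # reduction:  857x
print(f"DisTrO:     {transfer_seconds(DISTRO_BYTES, UPLOAD_BPS):.1f} s/step")  # 69.4
print(f"All-Reduce: {transfer_seconds(ALL_REDUCE_BYTES, UPLOAD_BPS) / 3600:.1f} h/step")  # 16.5
```

Under these assumptions, the DisTrO payload takes roughly a minute per step on a consumer upload link, while the All-Reduce payload would take more than 16 hours, which is why the reduction matters for training over the internet.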

The authors tested DisTrO by training a 1.2-billion-parameter large language model (LLM) based on Meta’s Llama 2 architecture, and achieved training performance comparable to conventional methods with significantly less communication overhead.

They note that this is the smallest-size model that worked well with the DisTrO method, and they “do not yet know whether the ratio of bandwidth reduction scales up, down, or stays constant as model size increases.”

Yet the authors also say that “our preliminary tests indicate that it is possible to get a bandwidth requirements reduction of up to 1000x to 3000x during the pre-training” phase of LLMs, and that “for post-training and fine-tuning, we can achieve up to 10000x without any noticeable degradation in loss.”

They further hypothesize that the research, while initially conducted on LLMs, could be used to train large diffusion models (LDMs) as well: think the Stable Diffusion open source image generation model and popular image generation services derived from it, such as Midjourney.

Still need good GPUs

To be clear: DisTrO still relies on GPUs — only instead of clustering them all together in the same location, now they can be spread out across the world and communicate over the consumer internet.

Specifically, DisTrO was evaluated on 32 Nvidia H100 GPUs operating under the Distributed Data Parallelism (DDP) strategy, where each GPU had the entire model loaded in VRAM.
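Because DDP keeps a full replica on every GPU, a rough memory estimate helps show why a 1.2-billion-parameter model fits comfortably on each card. This sketch assumes fp32 (4-byte) weights, gradients, and AdamW's two moment buffers, and ignores activations and framework overhead; the article does not state the precision actually used, and the function name is hypothetical.

```python
def ddp_replica_gb(n_params: int, bytes_per_param: int = 4, optimizer_slots: int = 2) -> float:
    """Rough per-GPU memory (decimal GB) for one full model replica under DDP.

    Counts weights, gradients and the optimizer's per-parameter state
    (AdamW keeps two moment buffers, hence optimizer_slots=2), all stored
    at the same precision. Ignores activations, communication buffers
    and framework overhead.
    """
    copies = 1 + 1 + optimizer_slots  # weights + gradients + optimizer state
    return n_params * bytes_per_param * copies / 1e9


print(ddp_replica_gb(1_200_000_000))  # 19.2
```

Under those assumptions the replica needs about 19.2 GB, well within the 80 GB of memory on common H100 variants; activations for realistic batch sizes would add to this.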

This setup allowed the team to rigorously test DisTrO’s capabilities and demonstrate that it can match the convergence rates of AdamW+All-Reduce despite drastically reduced communication requirements.
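The baseline in that comparison pairs the AdamW optimizer with All-Reduce gradient averaging. As a minimal sketch, assuming the standard AdamW formulation with decoupled weight decay and illustrative default hyperparameters, one update on a flat list of parameters looks like this; the gradient passed in stands for the already-averaged output of the All-Reduce. It illustrates the baseline only, not DisTrO itself, and `adamw_step` is a hypothetical helper rather than any library's API.

```python
import math
from typing import List, Tuple


def adamw_step(
    params: List[float], grads: List[float], m: List[float], v: List[float], t: int,
    lr: float = 1e-3, beta1: float = 0.9, beta2: float = 0.999,
    eps: float = 1e-8, weight_decay: float = 0.01,
) -> Tuple[List[float], List[float], List[float]]:
    """One AdamW update (decoupled weight decay) on a flat parameter list.

    In an AdamW+All-Reduce setup, `grads` would be the gradient already
    averaged across all GPUs, so every GPU applies this identical update.
    `t` is the 1-based step count used for bias correction.
    """
    new_p, new_m, new_v = [], [], []
    for p, g, mi, vi in zip(params, grads, m, v):
        mi = beta1 * mi + (1 - beta1) * g          # first-moment estimate
        vi = beta2 * vi + (1 - beta2) * g * g      # second-moment estimate
        m_hat = mi / (1 - beta1 ** t)              # bias corrections
        v_hat = vi / (1 - beta2 ** t)
        # Adam step plus weight decay applied directly to the weight.
        p = p - lr * (m_hat / (math.sqrt(v_hat) + eps) + weight_decay * p)
        new_p.append(p)
        new_m.append(mi)
        new_v.append(vi)
    return new_p, new_m, new_v


p, m, v = adamw_step([1.0], [0.5], [0.0], [0.0], t=1)
print(p)  # about [0.99899]
```

Applying the same averaged gradient everywhere is what keeps the replicas identical in this baseline, and it is also why each step needs a full gradient exchange.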

This result suggests that DisTrO can potentially replace existing training methods without sacrificing model quality, offering a scalable and efficient solution for large-scale distributed training.

By reducing the need for high-speed interconnects, DisTrO could enable collaborative model training across decentralized networks, even with participants using consumer-grade internet connections.

The report also explores the implications of DisTrO for various applications, including federated learning and decentralized training.

Additionally, DisTrO’s efficiency could help mitigate the environmental impact of AI training by optimizing the use of existing infrastructure and reducing the need for massive data centers.

Moreover, the breakthroughs could lead to a shift in how large-scale models are trained, moving away from centralized, resource-intensive data centers towards more distributed, collaborative approaches that leverage diverse and geographically dispersed computing resources.

What’s next for the Nous Research team and DisTrO?

The research team invites others to join them in exploring the potential of DisTrO. The preliminary report and supporting materials are available on GitHub, and the team is actively seeking collaborators to help refine and expand this groundbreaking technology.

Already, some AI influencers such as @kimmonismus on X (aka Chubby) have praised the research as a huge breakthrough in the field, writing, “This could change everything!”

Wow, amazing! This could change everything!

Chubby (@kimmonismus) on X, August 27, 2024

With DisTrO, Nous Research is not only advancing the technical capabilities of AI training but also promoting a more inclusive and resilient research ecosystem that has the potential to unlock unprecedented advancements in AI.
