
[CL] A Philosophical Introduction to Language Models - Part II: The Way Forward

[2405.03207] A Philosophical Introduction to Language Models - Part II: The Way Forward

- This paper explores novel philosophical questions raised by recent advances in large language models (LLMs), going beyond the classical debates.

- It examines evidence from causal intervention methods about the nature of LLMs' internal representations and computations.

- It discusses implications of multimodal and modular extensions of LLMs.

- It covers debates about whether LLMs may meet minimal criteria for consciousness.

- It discusses concerns about secrecy and reproducibility in LLM research.

- It discusses whether LLM-like systems may be relevant to modeling human cognition if architecturally constrained.


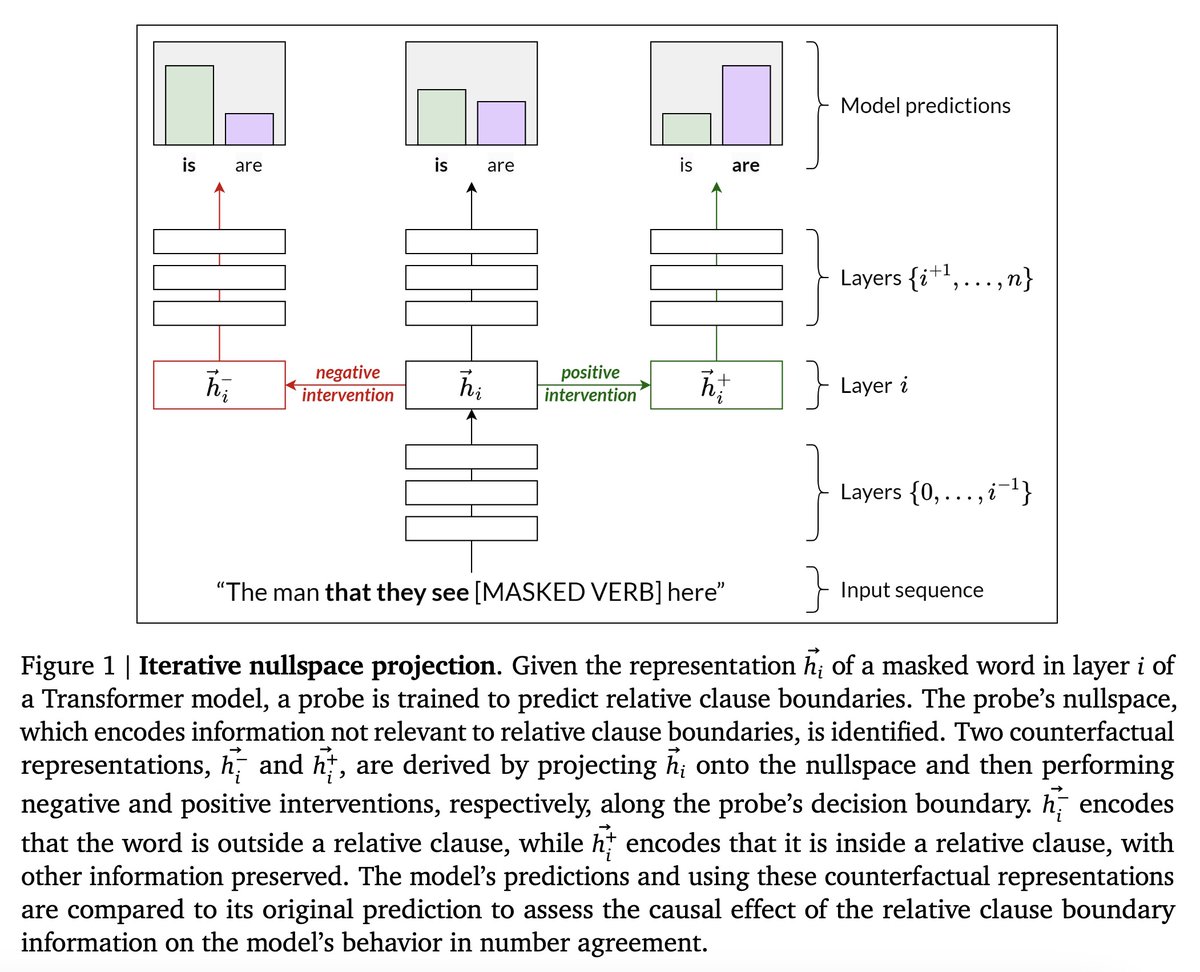

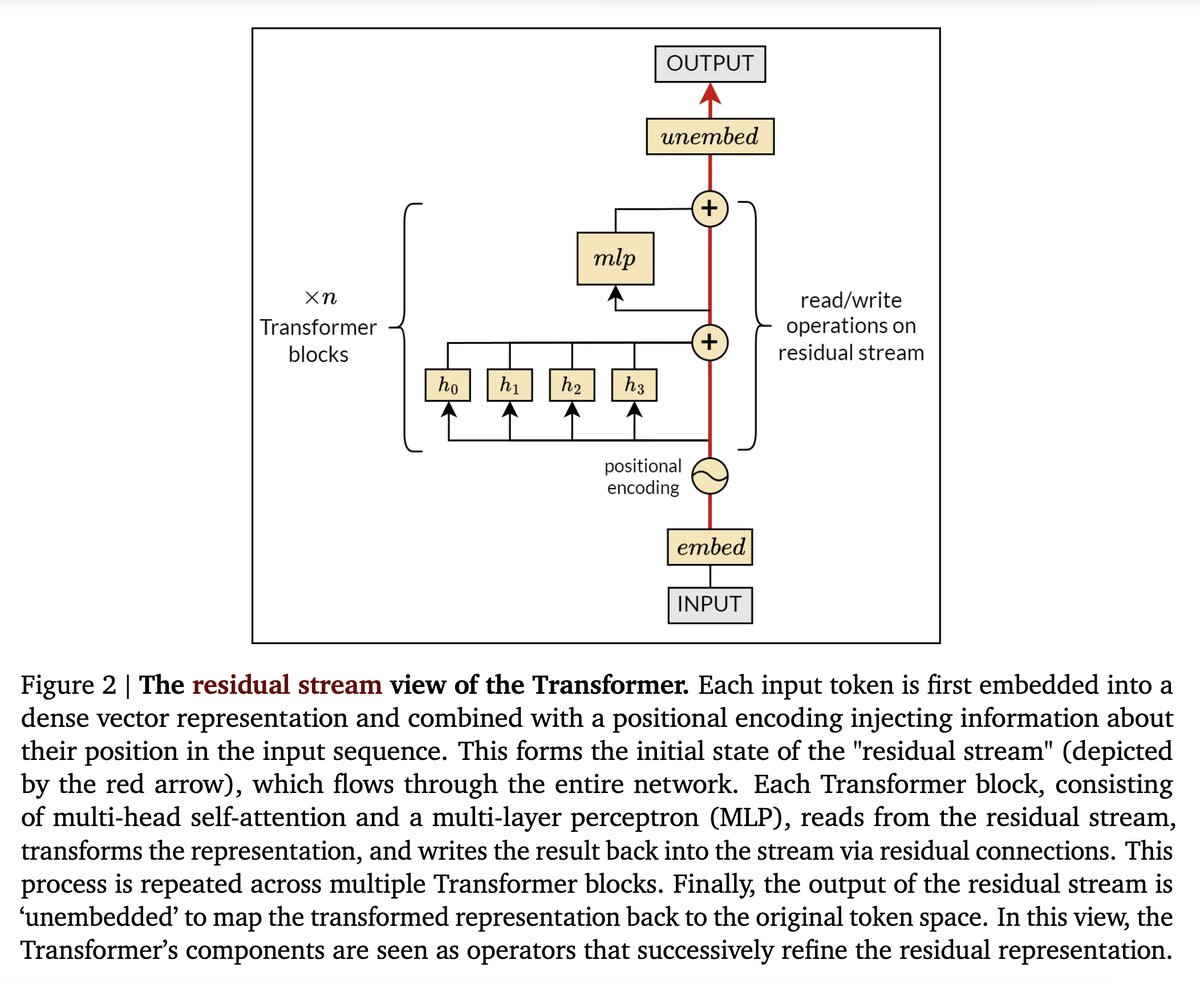

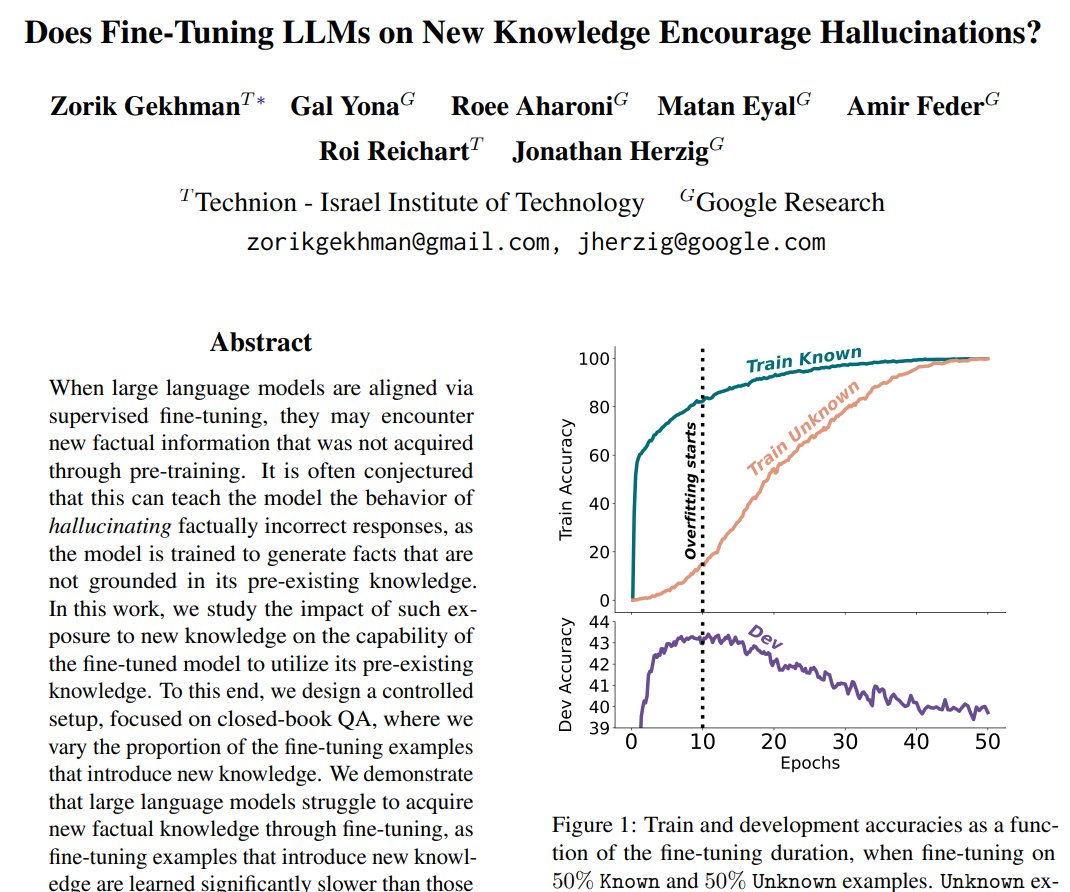
