A virtual assistant that knows where you left your keys? That’s the dream.

www.theverge.com

Project Astra is the future of AI at Google

Siri and Alexa never managed to be useful assistants. But Google and others are convinced the next generation of bots is really going to work.

By David Pierce, editor-at-large and Vergecast co-host with over a decade of experience covering consumer tech. Previously, at Protocol, The Wall Street Journal, and Wired.

May 14, 2024, 1:56 PM EDT


Astra is meant to be a real-time, multimodal AI assistant. Image: Google

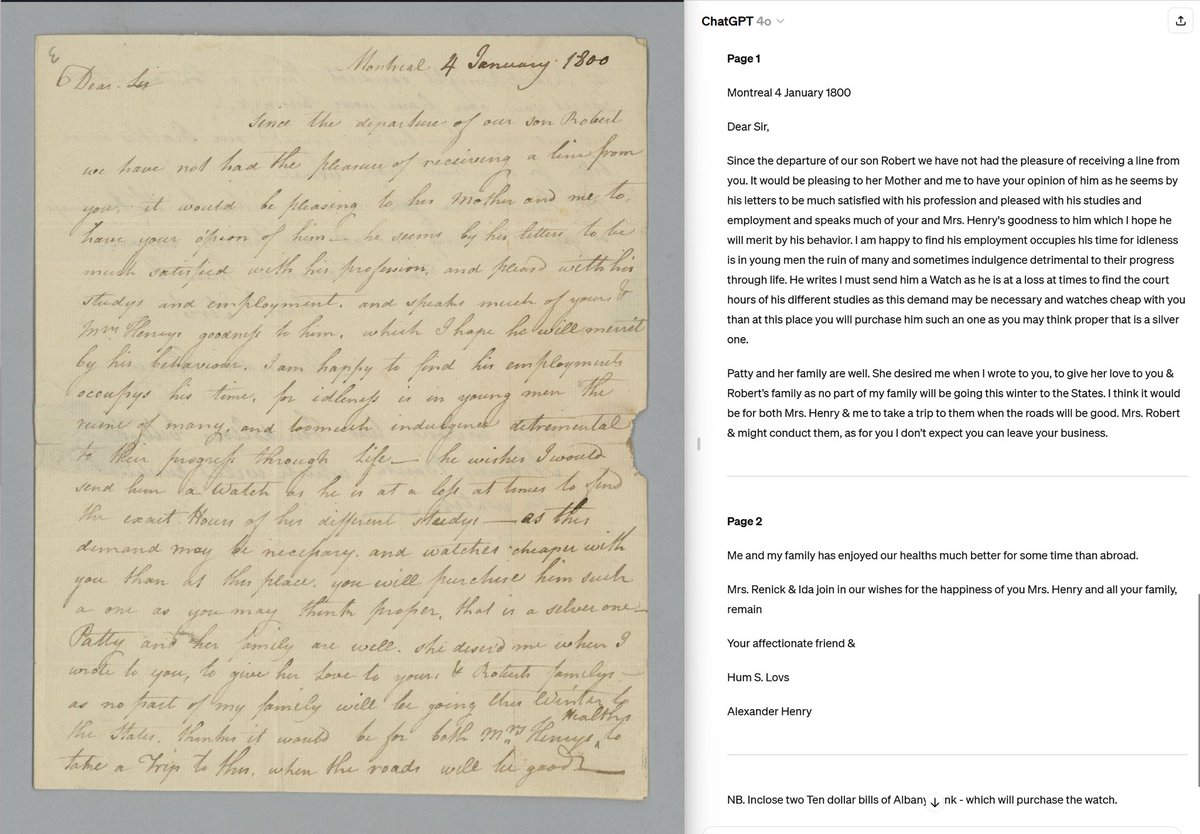

“I’ve had this vision in my mind for quite a while,” says Demis Hassabis, the head of Google DeepMind and the leader of Google’s AI efforts. Hassabis has been thinking about and working on AI for decades, but four or five years ago, something really crystallized. One day soon, he realized, “We would have this universal assistant. It’s multimodal, it’s with you all the time.” Call it the Star Trek Communicator; call it the voice from Her; call it whatever you want. “It’s that helper,” Hassabis continues, “that’s just useful. You get used to it being there whenever you need it.”

At Google I/O, the company’s annual developer conference, Hassabis showed off a very early version of what he hopes will become that universal assistant. Google calls it Project Astra, and it’s a real-time, multimodal AI assistant that can see the world, knows what things are and where you left them, and can answer questions or help you do almost anything. In an incredibly impressive demo video that Hassabis swears is not faked or doctored in any way, an Astra user in Google’s London office asks the system to identify a part of a speaker, find their missing glasses, review code, and more. It all works practically in real time and in a very conversational way.

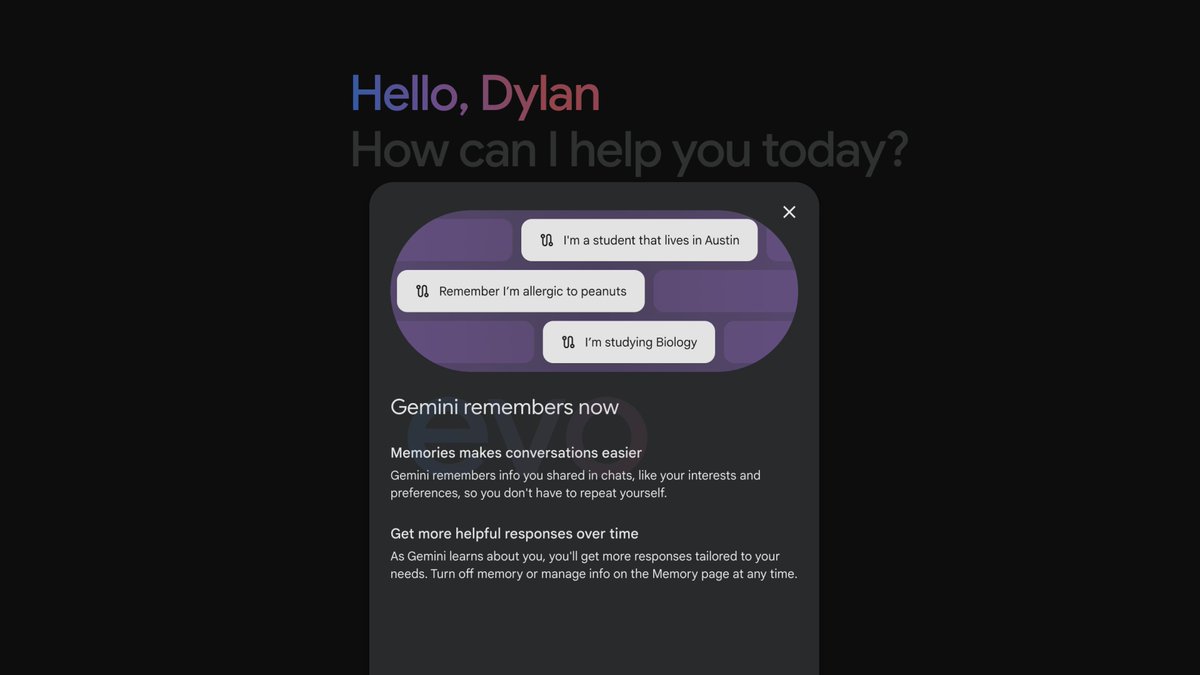

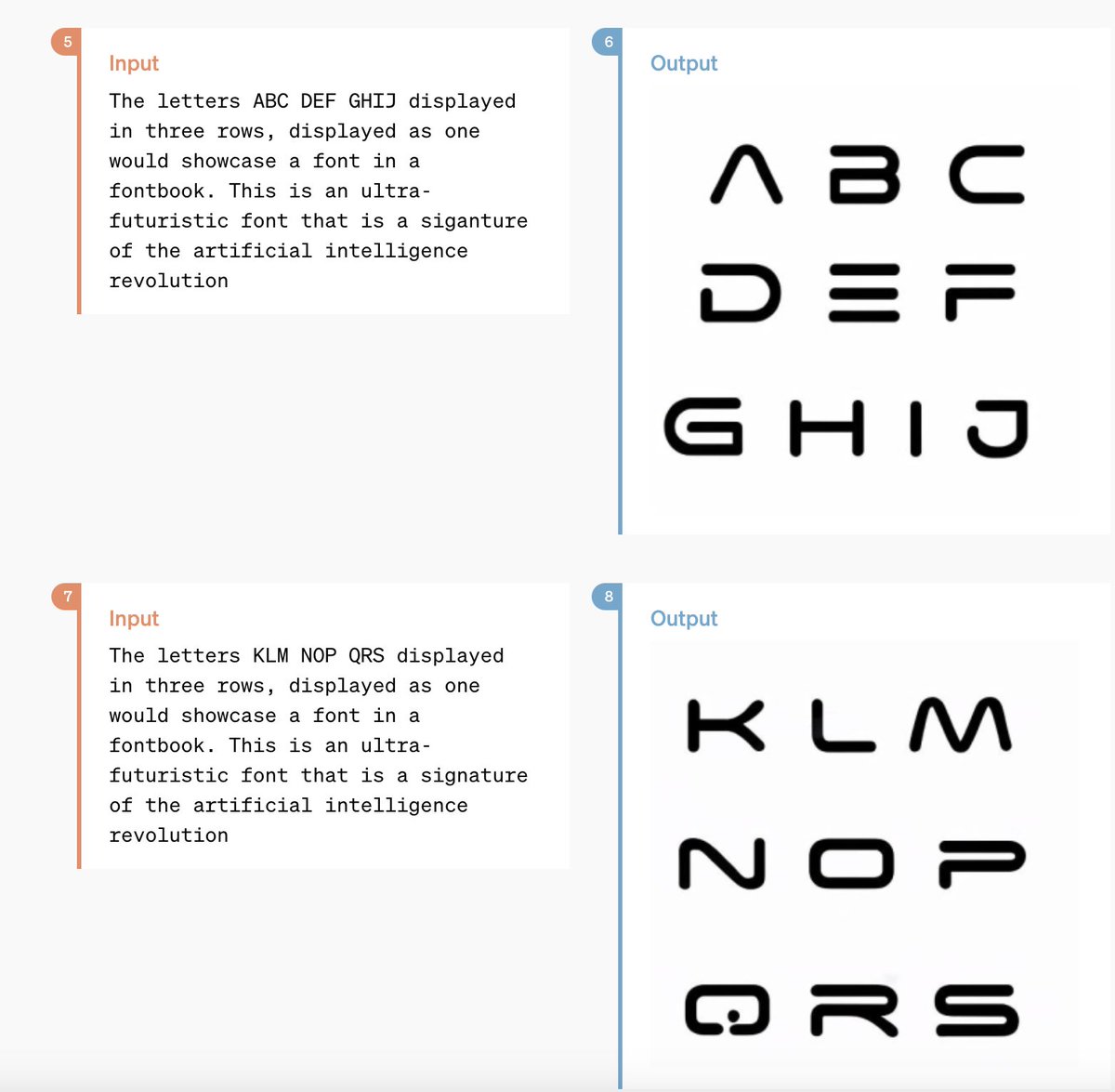

Astra is just one of many Gemini announcements at this year’s I/O. There’s a new model, called Gemini 1.5 Flash, designed to be faster for common tasks like summarization and captioning. Another new model, called Veo, can generate video from a text prompt. Gemini Nano, the model designed to be used locally on devices like your phone, is supposedly faster than ever as well. The context window for Gemini Pro, which refers to how much information the model can consider in a given query, is doubling to 2 million tokens, and Google says the model is better at following instructions than ever. Google’s making fast progress both on the models themselves and on getting them in front of users.

Astra is multimodal by design — you can talk, type, draw, photograph, and video to chat with it. Image: Google

Going forward, Hassabis says, the story of AI will be less about the models themselves and all about what they can do for you. And that story is all about agents: bots that don’t just talk with you but actually accomplish stuff on your behalf. “Our history in agents is longer than our generalized model work,” he says, pointing to the game-playing AlphaGo system from nearly a decade ago. Some of those agents, he imagines, will be ultra-simple tools for getting things done, while others will be more like collaborators and companions. “I think it may even be down to personal preference at some point,” he says, “and understanding your context.”

Astra, Hassabis says, is much closer than previous products to the way a true real-time AI assistant ought to work. When Gemini 1.5 Pro, the latest version of Google’s mainstream large language model, was ready, Hassabis says he knew the underlying tech was good enough for something like Astra to begin to work well. But the model is only part of the product. “We had components of this six months ago,” he says, “but one of the issues was just speed and latency. Without that, the usability isn’t quite there.” So, for six months, speeding up the system has been one of the team’s most important jobs. That meant improving the model but also optimizing the rest of the infrastructure to work well and at scale. Luckily, Hassabis says with a laugh, “That’s something Google does very well!”

A lot of Google’s AI announcements at I/O are about giving you more and easier ways to use Gemini. A new product called Gemini Live is a voice-only assistant that lets you have easy back-and-forth conversations with the model, interrupting it when it gets long-winded or calling back to earlier parts of the conversation. A new feature in Google Lens allows you to search the web by shooting and narrating a video. A lot of this is enabled by Gemini’s large context window, which means it can access a huge amount of information at a time, and Hassabis says it’s crucial to making it feel normal and natural to interact with your assistant.

Gemini 1.5 Flash exists to make AI assistants faster above all else. Image: Google

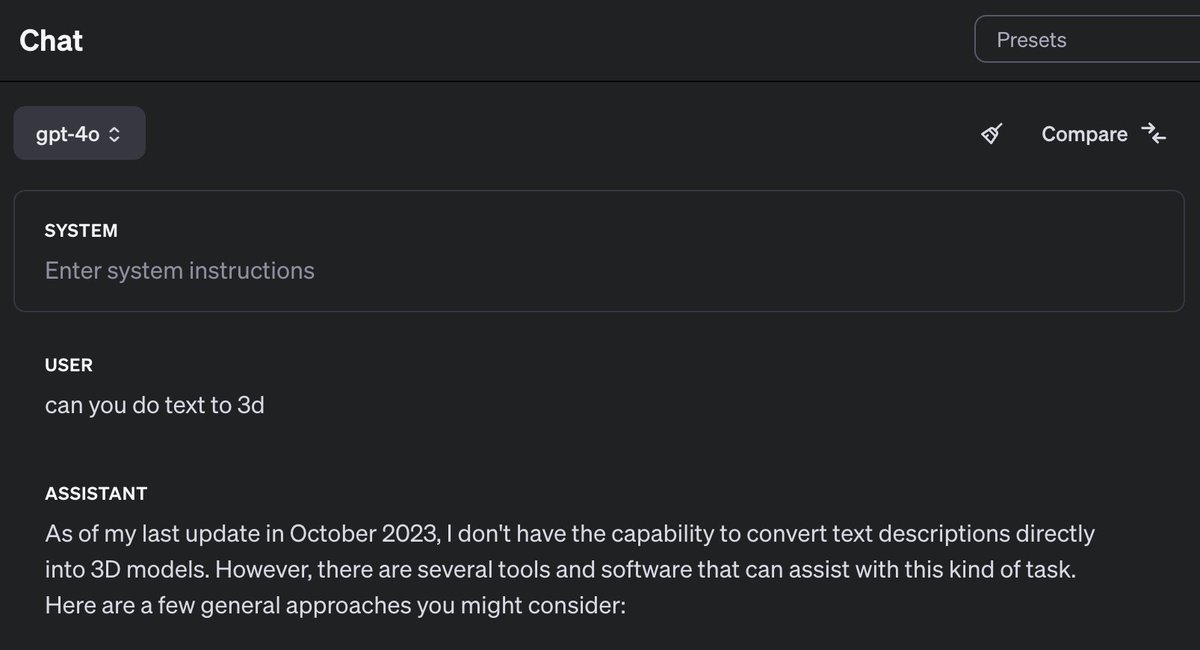

Know who agrees with that assessment, by the way? OpenAI, which has been talking about AI agents for a while now. In fact, the company demoed a product strikingly similar to Gemini Live barely an hour after Hassabis and I chatted. The two companies are increasingly fighting for the same territory and seem to share a vision for how AI might change your life and how you might use it over time.

How exactly will those assistants work, and how will you use them? Nobody knows for sure, not even Hassabis. One thing Google is focused on right now is trip planning — it built a new tool for using Gemini to build an itinerary for your vacation that you can then edit in tandem with the assistant. There will eventually be many more features like that. Hassabis says he’s bullish on phones and glasses as key devices for these agents but also says “there is probably room for some exciting form factors.” Astra is still in an early prototype phase and only represents one way you might want to interact with a system like Gemini. The DeepMind team is still researching how best to bring multimodal models together and how to balance ultra-huge general models with smaller and more focused ones.

We’re still very much in the “speeds and feeds” era of AI, in which every incremental model matters and we obsess over parameter sizes. But pretty quickly, at least according to Hassabis, we’re going to start asking different questions about AI. Better questions. Questions about what these assistants can do, how they do it, and how they can make our lives better. Because the tech is a long way from perfect, but it’s getting better really fast.
