The A.I Megathread (LLM , GPT , Development)

Just upgraded to 2 month trial for The Artist Formerly Known as Bard (Gemini). Gonna see how this goes. Bard has been a life saver at work and with studying for certs. Let's see what more it can do.

UK gov't touts $100M+ plan to fire up 'responsible' AI R&D | TechCrunch

But existing regulators get a much smaller budget top-up...

Natasha Lomas @riptari / 7:01 PM EST•February 5, 2024

Image Credits: Ian Vogler / Getty Images

The U.K. government is finally publishing its response to an AI regulation consultation it kicked off last March, when it put out a white paper setting out a preference for relying on existing laws and regulators, combined with “context-specific” guidance, to lightly supervise the disruptive high tech sector.

The full response is being made available later this morning, so wasn’t available for review at the time of writing. But in a press release ahead of publication the Department for Science, Innovation and Technology (DSIT) is spinning the plan as a boost to U.K. “global leadership” via targeted measures — including £100 million+ (~$125 million) in extra funding — to bolster AI regulation and fire up innovation.

Per DSIT’s press release, there will be £10 million (~$12.5 million) in additional funding for regulators to “upskill” for their expanded workload, i.e. of figuring out how to apply existing sectoral rules to AI developments and actually enforcing existing laws on AI apps that breach the rules (including, it is envisaged, by developing their own tech tools).

“The fund will help regulators develop cutting-edge research and practical tools to monitor and address risks and opportunities in their sectors, from telecoms and healthcare to finance and education. For example, this might include new technical tools for examining AI systems,” DSIT writes. It did not provide any detail on how many additional staff could be recruited with the extra funding.

The release also touts — a notably larger — £90 million (~$113 million) in funding the government says will be used to establish nine research hubs to foster homegrown AI innovation in areas, such as healthcare, math and chemistry, which it suggests will be situated around the U.K.

The 90:10 funding split is suggestive of where the government wants most of the action to happen — with the bucket marked ‘homegrown AI development’ the clear winner here, while “targeted” enforcement on associated AI safety risks is envisaged as the comparatively small-time add-on operation for regulators. (Although it’s worth noting the government has previously announced £100 million for an AI taskforce, focused on safety R&D around advanced AI models.)

DSIT confirmed to TechCrunch that the £10 million fund for expanding regulators’ AI capabilities has not yet been established — saying the government is “working at pace” to get the mechanism set up. “However, it’s key that we do this properly in order to achieve our objectives and ensure that we are getting value for taxpayers’ money,” a department spokesperson told us.

The £90 million funding for the nine AI research hubs covers five years, starting from February 1. “The funding has already been awarded with investments in the nine hubs ranging from £7.2 million to £10 million,” the spokesperson added. They did not offer details on the focus of the other six research hubs.

The other top-line headline today is that the government is sticking to its plan not to introduce any new legislation for artificial intelligence yet.

“The UK government will not rush to legislate, or risk implementing ‘quick-fix’ rules that would soon become outdated or ineffective,” writes DSIT. “Instead, the government’s context-based approach means existing regulators are empowered to address AI risks in a targeted way.”

This staying the course is unsurprising — given the government is facing an election this year which polls suggest it will almost certainly lose. So this looks like an administration that’s fast running out of time to write laws on anything. Certainly, time is dwindling in the current parliament. (And, well, passing legislation on a tech topic as complex as AI clearly isn’t in the current prime minister’s gift at this point in the political calendar.)

At the same time, the European Union just locked in agreement on the final text of its own risk-based framework for regulating “trustworthy” AI — a long-brewing high tech rulebook which looks set to start to apply there from later this year. So the U.K.’s strategy of leaning away from legislating on AI, and opting to tread water on the issue, has the effect of starkly amplifying the differentiation vs the neighbouring bloc where, taking the contrasting approach, the EU is now moving forward (and moving further away from the U.K.’s position) by implementing its AI law.

The U.K. government evidently sees this tactic as rolling out the bigger welcome mat for AI developers. Even as the EU reckons businesses, even disruptive high tech businesses, thrive on legal certainty — plus, alongside that, the bloc is unveiling its own package of AI support measures — so which of these approaches, sector-specific guidelines vs a set of prescribed legal risks, will woo the most growth-charging AI “innovation” remains to be seen.

“The UK’s agile regulatory system will simultaneously allow regulators to respond rapidly to emerging risks, while giving developers room to innovate and grow in the UK,” is DSIT’s boosterish line.

(While, on business confidence, specifically, its release flags how “key regulators”, including Ofcom and the Competition and Markets Authority [CMA], have been asked to publish their approach to managing AI by April 30 — which it says will see them “set out AI-related risks in their areas, detail their current skillset and expertise to address them, and a plan for how they will regulate AI over the coming year” — suggesting AI developers operating under U.K. rules should prepare to read the regulatory tealeaves, across multiple sectoral AI enforcement priority plans, in order to quantify their own risk of getting into legal hot water.)

One thing is clear: U.K. prime minister Rishi Sunak continues to be extremely comfortable in the company of techbros — whether he’s taking time out from his day job to conduct an interview of Elon Musk for streaming on the latter’s own social media platform; finding time in his packed schedule to meet the CEOs of US AI giants to listen to their ‘existential risk’ lobbying agenda; or hosting a “global AI safety summit” to gather the tech faithful at Bletchley Park — so his decision to opt for a policy choice that avoids coming with any hard new rules right now was undoubtedly the obvious pick for him and his time-strapped government.

On the flip side, Sunak’s government does look to be in a hurry in another respect: When it comes to distributing taxpayer funding to charge up homegrown “AI innovation” — and, the suggestion here from DSIT is, these funds will be strategically targeted to ensure the accelerated high tech developments are “responsible” (whatever “responsible” means without there being a legal framework in place to define the contextual bounds in question).

As well as the aforementioned £90 million for the nine research hubs trailed in DSIT’s PR, there’s an announcement of £2 million in Arts & Humanities Research Council (AHRC) funding to support new research projects the government says “will help to define what responsible AI looks like across sectors such as education, policing and the creative industries”. These are part of the AHRC’s existing Bridging Responsible AI Divides (BRAID) program.

Additionally, £19 million will go toward 21 projects to develop “innovative trusted and responsible AI and machine learning solutions” aimed at accelerating deployment of AI technologies and driving productivity. (“This will be funded through the Accelerating Trustworthy AI Phase 2 competition, supported through the UKRI [ UK Research & Innovation] Technology Missions Fund, and delivered by the Innovate UK BridgeAI program,” says DSIT.)

In a statement accompanying today’s announcements, Michelle Donelan, the secretary of state for science, innovation, and technology, added:

The UK’s innovative approach to AI regulation has made us a world leader in both AI safety and AI development.

I am personally driven by AI’s potential to transform our public services and the economy for the better — leading to new treatments for cruel diseases like cancer and dementia, and opening the door to advanced skills and technology that will power the British economy of the future.

AI is moving fast, but we have shown that humans can move just as fast. By taking an agile, sector-specific approach, we have begun to grip the risks immediately, which in turn is paving the way for the UK to become one of the first countries in the world to reap the benefits of AI safely.

Today’s £100 million+ (total) funding announcements are additional to the £100 million previously announced by the government for the aforementioned AI safety taskforce (turned AI Safety Institute) which is focused on so-called frontier (or foundational) AI models, per DSIT, which confirmed this when we asked.

We also asked about the criteria and processes for awarding AI projects U.K. taxpayer funding. We’ve heard concerns the government’s approach may be sidestepping the need for a thorough peer review process — with the risk of proposals not being robustly scrutinized in the rush to get funding distributed.

A DSIT spokesperson responded by denying there’s been any change to the usual UKRI processes. “UKRI funds research on a competitive basis,” they suggested. “Individual applications for research are assessed by relevant independent experts from academia and business. Each proposal for research funding is assessed by experts for excellence and, where applicable, impact.”

“DSIT is working with regulators to finalise the specifics [of project oversight] but this will be focused around regulator projects that support the implementation of our AI regulatory framework to ensure that we are capitalising on the transformative opportunities that this technology has to offer, while mitigating against the risks that it poses,” the spokesperson added.

On foundational model safety, DSIT’s PR suggests the AI Safety Institute will “see the UK working closely with international partners to boost our ability to evaluate and research AI models”. And the government is also announcing a further investment of £9 million, via the International Science Partnerships Fund, which it says will be used to bring together researchers and innovators in the U.K. and the U.S. — “to focus on developing safe, responsible, and trustworthy AI”.

The department’s press release goes on to describe the government’s response as laying out a “pro-innovation case for further targeted binding requirements on the small number of organisations that are currently developing highly capable general-purpose AI systems, to ensure that they are accountable for making these technologies sufficiently safe”.

“This would build on steps the UK’s expert regulators are already taking to respond to AI risks and opportunities in their domains,” it adds. (And on that front the CMA put out a set of principles it said would guide its approach towards generative AI last fall.) The PR also talks effusively of “a partnership with the US on responsible AI”.

Asked for more details on this, the spokesperson said the aim of the partnership is to “bring together researchers and innovators in bilateral research partnerships with the US focused on developing safer, responsible, and trustworthy AI, as well as AI for scientific uses” — adding that the hope is for “international teams to examine new methodologies for responsible AI development and use”.

“Developing common understanding of technology development between nations will enhance inputs to international governance of AI and help shape research inputs to domestic policy makers and regulators,” DSIT’s spokesperson added.

While they confirmed there will be no U.S.-style ‘AI safety and security’ Executive Order issued by Sunak’s government, the AI regulation White Paper consultation response dropping later today sets out “the next steps”.

Roblox rolls out real-time AI translation for all users — is this the start of true global multiplayer?

Works in 16 languages

By Ryan Morrison

published 2 days ago

(Image credit: Roblox)

Metaverse platform Roblox is bringing a new AI translation tool online for its millions of users around the world, making it easier to communicate regardless of a player's native language.

Built on a new custom artificial intelligence language model, the translation service will work in real-time chat and convert a message in one language to the user's native language instantly.

Roblox boasts over 70 million daily users who exchange some 2.4 billion messages a day across 180 countries and, according to the company, a third of the time people are chatting with others who don’t speak the same language as them.

This is likely an indication of how AI is going to be used in gaming, particularly multiplayer gaming in the short term. It presents a way to improve connections and communication. We could also see future models that incorporate live voice translation for in-game chat.

How does Roblox live AI translation work?

Live AI translation in Roblox works across 16 languages and is built on top of the TextChatService. Users simply start typing in their native language and others will see the message automatically converted. “For example, a user in Korea can type a chat message in Korean, and an English-speaking user will see it in English, while at the same time, a German-speaking user will read and respond to the message in German,” and they’d all understand each other.
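The per-recipient behaviour described above can be pictured as a simple fan-out: one message goes in, and each reader gets it in their own language. The sketch below is only an illustration in Python, not Roblox's TextChatService API; the `PHRASES` table, `translate`, `User` and `deliver` are all hypothetical names, and the phrase table stands in for the real translation model.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical stand-in for a translation model: a tiny phrase table keyed by
# (source language, target language, text). A real service would call a model.
PHRASES = {
    ("ko", "en", "안녕하세요"): "Hello",
    ("ko", "de", "안녕하세요"): "Hallo",
}


def translate(text: str, source: str, target: str) -> str:
    """Return text in the target language, falling back to the original text."""
    if source == target:
        return text
    return PHRASES.get((source, target, text), text)


@dataclass
class User:
    name: str
    language: str  # language code the user reads and writes in


def deliver(sender: User, text: str, recipients: List[User]) -> Dict[str, str]:
    """Map each recipient's name to the message as that recipient would see it."""
    return {r.name: translate(text, sender.language, r.language) for r in recipients}


sender = User("mina", "ko")
room = [User("alex", "en"), User("jonas", "de"), User("suji", "ko")]
views = deliver(sender, "안녕하세요", room)
```

Here `views` holds one rendering per reader, so the Korean speaker sees the original text while the English and German speakers each see a translation.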

To improve the speed of translation so it feels instant, Roblox built a model that leverages linguistic similarities between languages, ensuring those most closely matched are trained together such as Spanish and Portuguese.

The translation AI is built on a large language model trained on open-source data, human-labeled chat translation results and common chat sentences and phrases found in Roblox itself.

Zhen Fang, Head of International at Roblox said: “Our early experiments with this new automated chat translation technology showed a very positive impact on time spent, chat engagement, and session quality, so we are very excited to roll it out to further enhance and expand our community’s daily communication.”

What is coming next for localization?

(Image credit: Roblox)

The underlying translation model is also being made available to community developers, allowing them to offer translations of other aspects of the interface.

“In the future, we envision every part of a user experience to be fully translated into their native language, including offering AI-powered voice translation,” said CTO Daniel Sturman.

This feeds into a growing trend in multiplayer and global digital environments where, despite being available anywhere in the world, the experience reflects your local needs.

In the future AI tools will also be able to customize the environment to reflect local tastes and interests, adapt speech patterns, phrasing and styles of NPCs and improve customization.

Lv99 Slacker

Pro

AI-Powered Service Churns Out Pictures of Fake IDs Capable of Passing KYC/AML for as Little as $15 | Feb 5, 2024

www.nobsbitcoin.com

"A website called OnlyFake is claiming to use “neural networks” to generate realistic looking photos of fake IDs for just $15, radically disrupting the marketplace for fake identities and cybersecurity more generally," reported 404Media.

"In our own tests, OnlyFake created a highly convincing California driver's license, complete with whatever arbitrary name, biographical information, address, expiration date, and signature we wanted," reported 404Media.

404 Media then used another fake ID generated by the service to successfully step through the KYC process on OKX exchange.

"The service claims to use 'generators' which create up to 20,000 documents a day. The service’s owner, who goes by the moniker John Wick, told 404 Media that hundreds of documents can be generated at once using data from an Excel table."

Wick also told 404 Media their service could be used to bypass verification at a host of sites, including Binance, Revolut, Wise, Kraken, Bybit, Payoneer, Huobi, Airbnb, OKX and Coinbase, adding that it shouldn't be used for the purpose of forging documents.

"OnlyFake offers other technical measures to make its churned out images even more convincing. The service offers a metadata changer because identity verification services, or people, may inspect this information to determine if the photo is fake or not," was stated in the article.

New AI tool discovers realistic 'metamaterials' with unusual properties

by Fien Bosman, Delft University of Technology

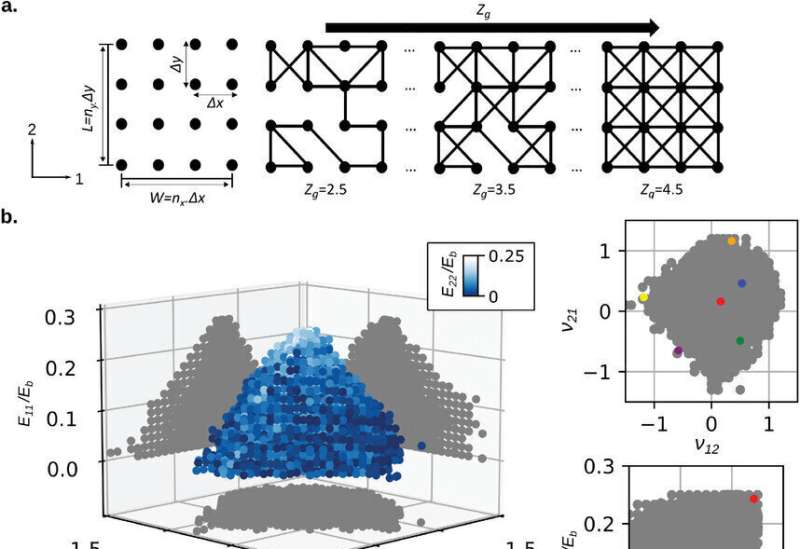

A schematic illustration and elastic properties of the RN unit cells as well as the network architecture of the unit cell elastic properties model. Credit: Advanced Materials (2023). DOI: 10.1002/adma.202303481

A coating that can hide objects in plain sight, or an implant that behaves exactly like bone tissue—these extraordinary objects are already made from "metamaterials." Researchers from TU Delft have now developed an AI tool that not only can discover such extraordinary materials but also makes them fabrication-ready and durable. This makes it possible to create devices with unprecedented functionalities. They have published their findings in Advanced Materials.

The properties of normal materials, such as stiffness and flexibility, are determined by the molecular composition of the material, but the properties of metamaterials are determined by the geometry of the structure from which they are built. Researchers design these structures digitally and then have them 3D-printed. The resulting metamaterials can exhibit unnatural and extreme properties. Researchers have, for instance, designed metamaterials that, despite being solid, behave like a fluid.

"Traditionally, designers use the materials available to them to design a new device or a machine. The problem with that is that the range of available material properties is limited. Some properties that we would like to have just don't exist in nature. Our approach is: tell us what you want to have as properties and we engineer an appropriate material with those properties. What you will then get is not really a material but something in-between a structure and a material, a metamaterial," says Professor Amir Zadpoor of the Department of Biomechanical Engineering.

New AI tool discovers realistic 'metamaterials' with unusual properties. Credit: TU Delft

Inverse design

Such a material discovery process requires solving a so-called "inverse problem": the problem of finding the geometry that gives rise to the properties you desire. Inverse problems are notoriously difficult to solve, which is where AI comes into the picture. TU Delft researchers have developed deep-learning models that solve these inverse problems.

"Even when inverse problems were solved in the past, they have been limited by the simplifying assumption that the small-scale geometry can be made from an infinite number of building blocks. The problem with that assumption is that metamaterials are usually made by 3D printing and real 3D printers have a limited resolution, which limits the number of building blocks that fit within a given device," says first author Dr. Helda Pahlavani.

The AI models developed by TU Delft researchers break new ground by bypassing any such simplifying assumptions. "So we can now simply ask: how many building blocks does your manufacturing technique allow you to accommodate in your device? The model then finds the geometry that gives you your desired properties for the number of building blocks that you can actually manufacture."
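To make the idea of an inverse problem with a manufacturing limit concrete, here is a toy sketch in Python. It is not the TU Delft method: the researchers use deep-learning models, whereas this example swaps in a brute-force search, and the `forward_stiffness` formula is an invented placeholder rather than real mechanics. It only shows the shape of the question: given a target property and a cap on building blocks, which geometry comes closest?

```python
import itertools
from typing import Tuple


def forward_stiffness(thickness: float, blocks: int) -> float:
    """Toy forward model (an assumption for illustration, not from the paper):
    maps a geometry (strut thickness, number of building blocks) to a property."""
    return thickness ** 2 * blocks


def inverse_design(target: float, max_blocks: int) -> Tuple[float, int]:
    """Search candidate geometries and return the one whose predicted property
    is closest to the target, using at most max_blocks building blocks."""
    thicknesses = [0.1 * k for k in range(1, 11)]  # hypothetical printable range
    candidates = itertools.product(thicknesses, range(1, max_blocks + 1))
    return min(candidates, key=lambda g: abs(forward_stiffness(*g) - target))


# The printer resolution is expressed as the largest block count that fits.
best = inverse_design(target=2.0, max_blocks=4)
```

Lowering `max_blocks` shrinks the candidate pool, which mirrors the point in the article: the answer depends on how many building blocks the manufacturing technique can actually accommodate.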

Unlocking full potential

A major practical problem neglected in previous research has been the durability of metamaterials. Most existing designs break once they are used a few times. That is because existing metamaterial design approaches do not take durability into account. "So far, it has been only about what properties can be achieved. Our study considers durability and selects the most durable designs from a large pool of design candidates. This makes our designs really practical and not just theoretical adventures," says Zadpoor.

The possibilities of metamaterials seem endless, but the full potential is far from being realized, says assistant professor Mohammad J. Mirzaali, corresponding author of the publication. This is because finding the optimal design of a metamaterial is currently still largely based on intuition, involves trial and error, and is, therefore, labor-intensive. Using an inverse design process, where the desired properties are the starting point of the design, is still very rare within the metamaterials field.

"But we think the step we have taken is revolutionary in the field of metamaterials. It could lead to all kinds of new applications." There are possible applications in orthopedic implants, surgical instruments, soft robots, adaptive mirrors, and exo-suits.

More information: Helda Pahlavani et al, Deep Learning for Size‐Agnostic Inverse Design of Random‐Network 3D Printed Mechanical Metamaterials, Advanced Materials (2023). DOI: 10.1002/adma.202303481

Journal information: Advanced Materials

Provided by Delft University of Technology

Computer Science > Artificial Intelligence

[Submitted on 8 Feb 2024]

An Interactive Agent Foundation Model

Zane Durante, Bidipta Sarkar, Ran Gong, Rohan Taori, Yusuke Noda, Paul Tang, Ehsan Adeli, Shrinidhi Kowshika Lakshmikanth, Kevin Schulman, Arnold Milstein, Demetri Terzopoulos, Ade Famoti, Noboru Kuno, Ashley Llorens, Hoi Vo, Katsu Ikeuchi, Li Fei-Fei, Jianfeng Gao, Naoki Wake, Qiuyuan Huang

The development of artificial intelligence systems is transitioning from creating static, task-specific models to dynamic, agent-based systems capable of performing well in a wide range of applications. We propose an Interactive Agent Foundation Model that uses a novel multi-task agent training paradigm for training AI agents across a wide range of domains, datasets, and tasks. Our training paradigm unifies diverse pre-training strategies, including visual masked auto-encoders, language modeling, and next-action prediction, enabling a versatile and adaptable AI framework. We demonstrate the performance of our framework across three separate domains -- Robotics, Gaming AI, and Healthcare. Our model demonstrates its ability to generate meaningful and contextually relevant outputs in each area. The strength of our approach lies in its generality, leveraging a variety of data sources such as robotics sequences, gameplay data, large-scale video datasets, and textual information for effective multimodal and multi-task learning. Our approach provides a promising avenue for developing generalist, action-taking, multimodal systems.

Subjects: Artificial Intelligence (cs.AI); Machine Learning (cs.LG); Robotics (cs.RO)

Cite as: arXiv:2402.05929 [cs.AI] (or arXiv:2402.05929v1 [cs.AI] for this version)

Submission history

From: Zane Durante [v1] Thu, 8 Feb 2024 18:58:02 UTC (31,731 KB)
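The abstract above names three pre-training objectives (masked auto-encoding, language modeling, next-action prediction) that are trained together. One common way to combine objectives like these is a weighted sum of per-task losses, sketched below in plain Python. This is an assumption for illustration only: the abstract does not say how the paper weights or combines its losses, and `multitask_loss`, its arguments and the equal default weights are hypothetical.

```python
import math
from typing import Sequence, Tuple


def mse(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean squared error, used here for reconstructing masked image patches."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def cross_entropy(probs: Sequence[float], target_index: int) -> float:
    """Negative log-likelihood of the correct class under predicted probabilities."""
    return -math.log(probs[target_index])


def multitask_loss(
    patch_pred: Sequence[float],
    patch_true: Sequence[float],
    token_probs: Sequence[float],
    token_id: int,
    action_probs: Sequence[float],
    action_id: int,
    weights: Tuple[float, float, float] = (1.0, 1.0, 1.0),  # assumed equal weights
) -> float:
    """Weighted sum of masked-patch, next-token and next-action losses."""
    w_mae, w_lm, w_act = weights
    return (
        w_mae * mse(patch_pred, patch_true)
        + w_lm * cross_entropy(token_probs, token_id)
        + w_act * cross_entropy(action_probs, action_id)
    )


loss = multitask_loss(
    patch_pred=[0.2, 0.4], patch_true=[0.2, 0.4],
    token_probs=[0.5, 0.5], token_id=0,
    action_probs=[0.25, 0.75], action_id=1,
)
```

With a perfect patch reconstruction the first term is zero, so the total here comes only from the token and action terms.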

Lv99 Slacker

Pro

How Walmart, Delta, Chevron and Starbucks are using AI to monitor employee messages | Feb 9, 2024

www.cnbc.com

Aware uses AI to analyze companies' employee messages across Slack, Microsoft Teams, Zoom and other communications services.

Depending on where you work, there’s a significant chance that artificial intelligence is analyzing your messages on Slack, Microsoft Teams, Zoom and other popular apps.

Huge U.S. employers such as Walmart, Delta Air Lines, T-Mobile, Chevron and Starbucks, as well as European brands including Nestle and AstraZeneca, have turned to a seven-year-old startup, Aware, to monitor chatter among their rank and file, according to the company.

Jeff Schumann, co-founder and CEO of the Columbus, Ohio-based startup, says the AI helps companies “understand the risk within their communications,” getting a read on employee sentiment in real time, rather than depending on an annual or twice-per-year survey.

Using the anonymized data in Aware’s analytics product, clients can see how employees of a certain age group or in a particular geography are responding to a new corporate policy or marketing campaign, according to Schumann. Aware’s dozens of AI models, built to read text and process images, can also identify bullying, harassment, discrimination, noncompliance, pornography, nudity and other behaviors, he said.

Aware’s analytics tool — the one that monitors employee sentiment and toxicity — doesn’t have the ability to flag individual employee names, according to Schumann. But its separate eDiscovery tool can, in the event of extreme threats or other risk behaviors that are predetermined by the client, he added.
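The kind of cohort-level view described above, sentiment broken down by age group or geography without naming anyone, can be sketched as a simple aggregation. This is a hypothetical Python illustration, not Aware's product or API: the record fields, the sentiment scale and `sentiment_by_group` are all assumptions.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List


def sentiment_by_group(records: List[dict], key: str) -> Dict[str, float]:
    """Average sentiment per cohort. Records carry no employee names,
    only cohort attributes and a score (assumed here to lie in [-1, 1])."""
    groups = defaultdict(list)
    for rec in records:
        groups[rec[key]].append(rec["sentiment"])
    return {group: mean(scores) for group, scores in groups.items()}


# Hypothetical, already-anonymized message scores.
records = [
    {"region": "EMEA", "age_band": "25-34", "sentiment": 0.5},
    {"region": "EMEA", "age_band": "35-44", "sentiment": -0.5},
    {"region": "APAC", "age_band": "25-34", "sentiment": 1.0},
]
by_region = sentiment_by_group(records, "region")
by_age = sentiment_by_group(records, "age_band")
```

The same function answers both questions in the article, by geography or by age group, just by switching the `key` argument.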

Aware said Walmart, T-Mobile, Chevron and Starbucks use its technology for governance risk and compliance, and that type of work accounts for about 80% of the company’s business.

Jutta Williams, co-founder of AI accountability nonprofit Humane Intelligence, said AI adds a new and potentially problematic wrinkle to so-called insider risk programs, which have existed for years to evaluate things like corporate espionage, especially within email communications.

Speaking broadly about employee surveillance AI rather than Aware’s technology specifically, Williams told CNBC: “A lot of this becomes thought crime.” She added, “This is treating people like inventory in a way I’ve not seen.”

Aware’s revenue has jumped 150% per year on average over the past five years, Schumann told CNBC, and its typical customer has about 30,000 employees. Top competitors include Qualtrics, Relativity, Proofpoint, Smarsh and Netskope.

Bumble's new AI tool identifies and blocks scam accounts, fake profiles | TechCrunch

Aisha Malik @aiishamalik1 / 11:21 AM EST•February 5, 2024

Image Credits: TechCrunch

Bumble announced today that it’s launching a new AI-powered feature that is designed to help identify spam, scams and fake profiles. The new tool, called Deception Detector, aims to take action on malicious content before Bumble users ever come across it.

In testing, Bumble found that the tool was able to automatically block 95% of accounts that were identified as spam or scam accounts. Within the first two months of testing the tool, Bumble saw user reports of spam, scams and fake accounts reduced by 45%. Deception Detector operates alongside Bumble’s human moderation team.

The launch of the new feature comes as internal Bumble research showed that fake profiles and the risk of scams are users’ top concerns when online dating, the company says. The research also showed that 46% of women expressed anxiety over the authenticity of their online matches on dating apps.

“Bumble Inc. was founded with the aim to build equitable relationships and empower women to make the first move, and Deception Detector is our latest innovation as part of our ongoing commitment to our community to help ensure that connections made on our apps are genuine,” said Bumble CEO Lidiane Jones in a statement. “With a dedicated focus on women’s experience online, we recognize that in the AI era, trust is more paramount than ever.”

The Federal Trade Commission (FTC) reported last year that romance scams cost victims $1.3 billion in 2022, while the median reported loss was $4,400. The report stated that although romance scammers often use dating apps to target people, it’s more common for people to be targeted via DMs on social media platforms. For instance, 40% of people who lost money to a romance scam said the contact started on social media, while 19% said it began on a website or app.

The new tool is Bumble’s latest AI feature designed to make the app safer. In 2019, Bumble introduced a “Private Detector” feature that automatically blurs nude images and labels them as such in chats, allowing users to view the image or report the user.

Bumble is also leveraging AI within Bumble For Friends, the company’s dedicated app for finding friends. The app recently launched AI-powered icebreaker suggestions that help users write and send a first message based on the other person’s profile. You can tweak the question or ask for another suggestion, but you can only use one AI-generated icebreaker per chat.

It’s worth noting that Private Detector and Deception Detector are in use in both Bumble and Bumble For Friends.

Microsoft brings new AI image functionality to Copilot, adds new model Deucalion

Carl Franzen @carlfranzen / February 7, 2024 9:10 AM

Credit: VentureBeat made with Midjourney V6

In a startling move, Microsoft today announced a redesigned look for its Copilot AI search and chatbot experience on the web (formerly known as Bing Chat), new built-in AI image creation and editing functionality, and a new AI model, Deucalion, that is powering one version of Copilot.

In addition, the Redmond, Washington-headquartered software and cloud giant unveiled a new video ad that will air during this coming Sunday’s NFL Super Bowl pro football championship game between the Kansas City Chiefs and the San Francisco 49ers.

The redesign is arguably the least interesting part of the announcements today, with Microsoft giving its Copilot landing page on the web a cleaner look with more white space and less text, yet also adding more imagery in the form of a visual carousel of “cards” that show different AI-generated images as examples of what the user can make, plus samples of the “prompts” or instructions that the user could type in to generate them.

Here are images of the old Bing Chat and the new Microsoft Copilot design, one after another, for you to compare:

Bing Chat initial design circa July 2023. Credit: VentureBeat screenshot/Microsoft

Microsoft Copilot redesign circa February 2024. Credit: VentureBeat screenshot/Microsoft

The new Copilot is available publicly for all users at “copilot.microsoft.com and our Copilot app on iOS and Android app stores,” though the AI image generation features are, for now, available only “in English in the United States, United Kingdom, Australia, India and New Zealand.”

A savvy pro-AI Super Bowl ad

The Super Bowl is of course one of the most widely viewed sports events in the world and the United States each year, and the price to air ads nationally during it starts in the multi-millions of dollars even for a short spot of just 30 or so seconds.

That’s not such a big cost for Microsoft given its position lately as one of, if not the most, valuable companies in the world by market capitalization (the lead spot fluctuates pretty regularly), but it does indicate how serious the company is about bolstering the Copilot name and its associations with generative AI as a technology more generally, convincing “Main Street,” to use the colloquialism for average U.S. residents, that they should be using Copilot for their web searching instead of, say, Google.

But the ad goes even further than this: if you watch it, you’ll see it quickly but effectively shows people using Copilot to “generate storyboard images” for scenes in a movie script, as well as “code for my 3D open world game.”

The message from Microsoft here is clear: Copilot can do much more than just search. It can create content and even software for you.

Emphasizing content creation in film/TV, video gaming, entertainment — even amid resistance and deepfake scandals

The emphasis on targeting the entertainment industry is notable as well at a time when many actors, writers, performers, musicians, VFX artists and even game makers are openly resisting and calling for more protections against AI taking away their work opportunities. Microsoft’s ad pretty clearly and cleanly brushes past these objections, in my view, positioning Copilot and AI more generally as a creative tool for up-and-coming strivers.

It’s also notable that Microsoft is bringing new AI image generation and editing capabilities directly to Copilot, which its release says is powered by its Designer AI art generator, similar to how OpenAI’s DALL-E 3 image generation AI model has been baked into ChatGPT.

Designer AI is, of course, also powered by DALL-E 3 thanks to Microsoft’s big investment in and support for OpenAI. As Microsoft’s news release authored by executive vice president and consumer marketing chief Yusuf Mehdi states:

“With Designer in Copilot, you can go beyond just creating images to now customize your generated images with inline editing right inside Copilot, keeping you in the flow of your chat. Whether you want to highlight an object to make it pop with enhanced color, blur the background of your image to make your subject shine, or even reimagine your image with a different effect like pixel art, Copilot has you covered, all for free. If you’re a Copilot Pro subscriber, in addition to the above, you can also now easily resize and regenerate images between square and landscape without leaving chat. Lastly, we will soon roll out our new Designer GPT inside Copilot, which offers an immersive, dedicated canvas inside of Copilot where you can visualize your ideas.”

Microsoft is plowing ahead with its AI image generation capabilities, trying to make them even more accessible to users across mobile and desktop, even amid the scandal that erupted late last month when explicit, nonconsensual AI generated deepfakes of musician Taylor Swift (who is expected to appear at the Super Bowl in support of her NFL player boyfriend) circulated on social platforms and the web, allegedly created with Microsoft’s Designer AI generator. And that was coming after more local deepfake scandals in at least one U.S. high school.

The company seems outwardly unconcerned about any criticisms of AI being misused, as well as unbothered by the lawsuit and federal investigation it is facing from various parties over its use of AI and alliance with OpenAI.

A new AI model emerges: Deucalion

Buried amid the announcements today (actually, not even mentioned in the release itself) is the fact that Microsoft has added a new AI model under the hood of one version of Copilot: Deucalion.

According to a post on X (formerly Twitter) from Microsoft Corporate Vice President and Head of Engineering for Copilot and Bing, Jordi Ribas, the company has “shipped Deucalion, a fine-tuned model that makes Balanced mode… richer and faster.”

“Balanced” mode refers to the middle category of results Copilot can produce. Users can select either “Creative,” “Balanced” or “Precise” modes for their responses from Copilot (and previously, Bing Chat), which will result in the AI assistant providing either more or less of its own generated output, and ultimately, more hallucinations the more creative one goes.

However, the “Creative” mode can be more effective for those seeking not specific facts but help with, as the name indicates, creative, open-ended projects such as fictional worldbuilding, writing, and designing.

For those doing research for school or work, the “Precise” and “Balanced” modes are probably a better bet. Of course, “Balanced,” as the name indicates, seeks to split the difference and provide equal parts creativity and factual precision.

Now, the big question is what Deucalion is based on. Bing Chat itself was powered by OpenAI’s GPT-4, the model underlying ChatGPT Plus/Team/Enterprise, so it stands to reason that GPT-4 and GPT-4 Turbo/V continue to power Copilot.

However, is Deucalion based on GPT-4, or another model, say Microsoft’s Phi-2? The fact that Ribas said it was “a fine-tuned model” makes me think it is a version of GPT-4 that has been further tweaked by Microsoft engineers for their purposes. OpenAI does support fine-tuning of GPT-4, according to its documentation.

Documentation for Deucalion is pretty scarce right now from what I’ve seen, but Mikhail Parakhin, Microsoft’s CEO of Advertising and Web Services, posted on X last week that the company was testing it and that it was named after the son of Prometheus in Greek mythology.

Parakhin’s X post was actually a response to third-party Windows developer and tech influencer Vitor de Lucca, who noticed that the answers provided in Copilot’s Balanced mode were “better and bigger.”

De Lucca further posted on X yesterday that the translation capabilities of the new Deucalion-powered Balanced mode were also superior to those of the Creative mode.

We’ve reached out to our Microsoft spokesperson contacts and tweeted at Ribas for more information about Deucalion, and will update you when we hear back.