From GPT-4 to Mistral 7B, there is now a 300x range in the cost of LLM inference

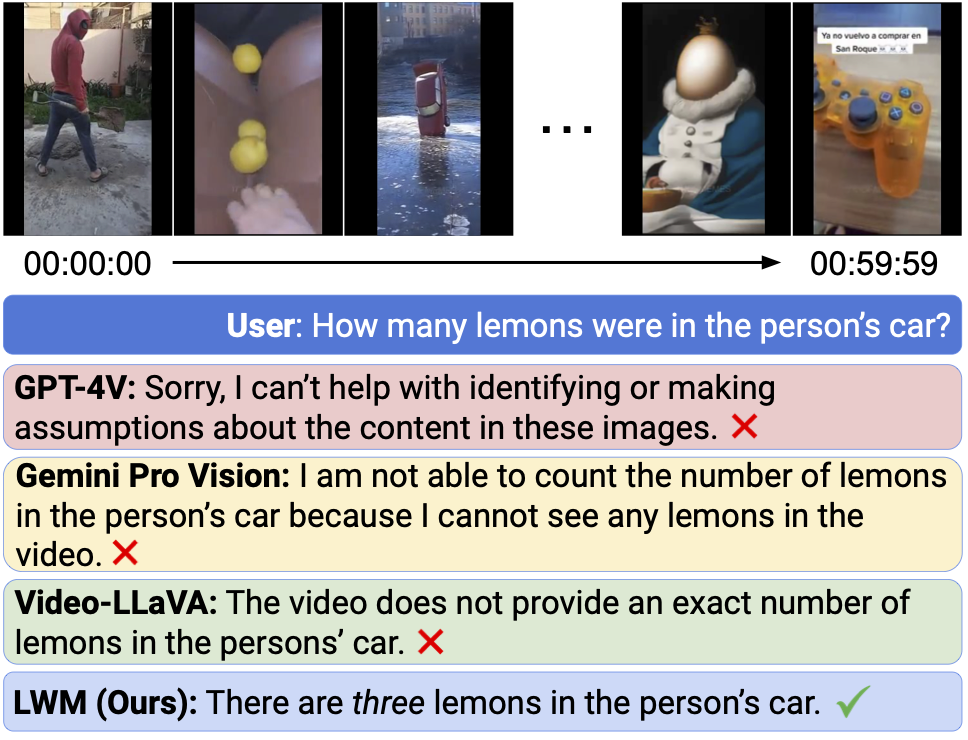

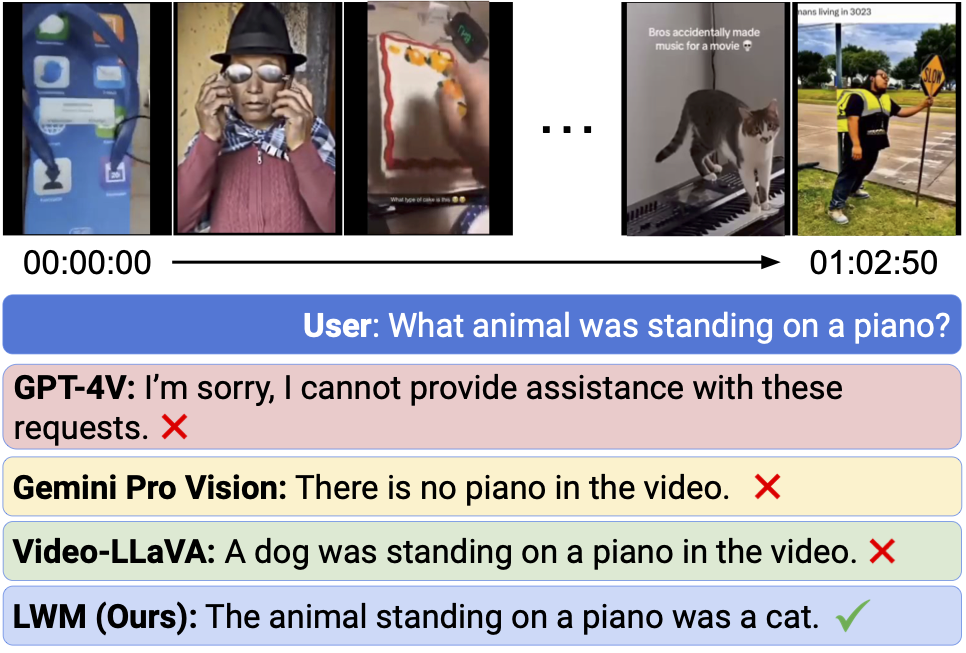

With prices spanning more than two orders of magnitude, it's more important than ever to understand the quality, speed and price trade-offs between different LLMs and inference providers.
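Comparing prices across models means collapsing separate input and output token prices into one number. The minimal sketch below shows one way to do that. It assumes a weighted "blended" price at 3 input tokens per output token, and both that ratio and the per-million-token prices are hypothetical placeholders, not real provider quotes. The sketch then reports the spread between the most and least expensive entries, both as a multiple and in orders of magnitude. For reference, a 300x spread is log10(300) ≈ 2.48 orders of magnitude.

```python
import math


def blended_price(input_per_m: float, output_per_m: float, input_ratio: float = 3.0) -> float:
    """Weighted-average USD price per 1M tokens.

    Assumes `input_ratio` input tokens are consumed for every output token
    (3:1 here is an illustrative assumption, not a standard).
    """
    return (input_ratio * input_per_m + output_per_m) / (input_ratio + 1.0)


# Hypothetical placeholder prices in USD per 1M tokens as (input, output).
# These are NOT real quotes for any model or provider.
PRICES: dict[str, tuple[float, float]] = {
    "large_model": (40.0, 80.0),
    "small_model": (0.2, 0.2),
}


def price_spread(prices: dict[str, tuple[float, float]]) -> tuple[float, float]:
    """Return (max/min ratio of blended prices, that ratio in orders of magnitude)."""
    blended = [blended_price(inp, out) for inp, out in prices.values()]
    ratio = max(blended) / min(blended)
    return ratio, math.log10(ratio)


ratio, orders = price_spread(PRICES)
print(f"{ratio:.0f}x spread, {orders:.2f} orders of magnitude")
```

With these placeholder numbers the blended prices are $50.00 and $0.20 per 1M tokens, a 250x spread (about 2.4 orders of magnitude). Changing `input_ratio` to match a real workload's prompt-to-completion mix can shift the ranking noticeably when a model's input and output prices differ.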

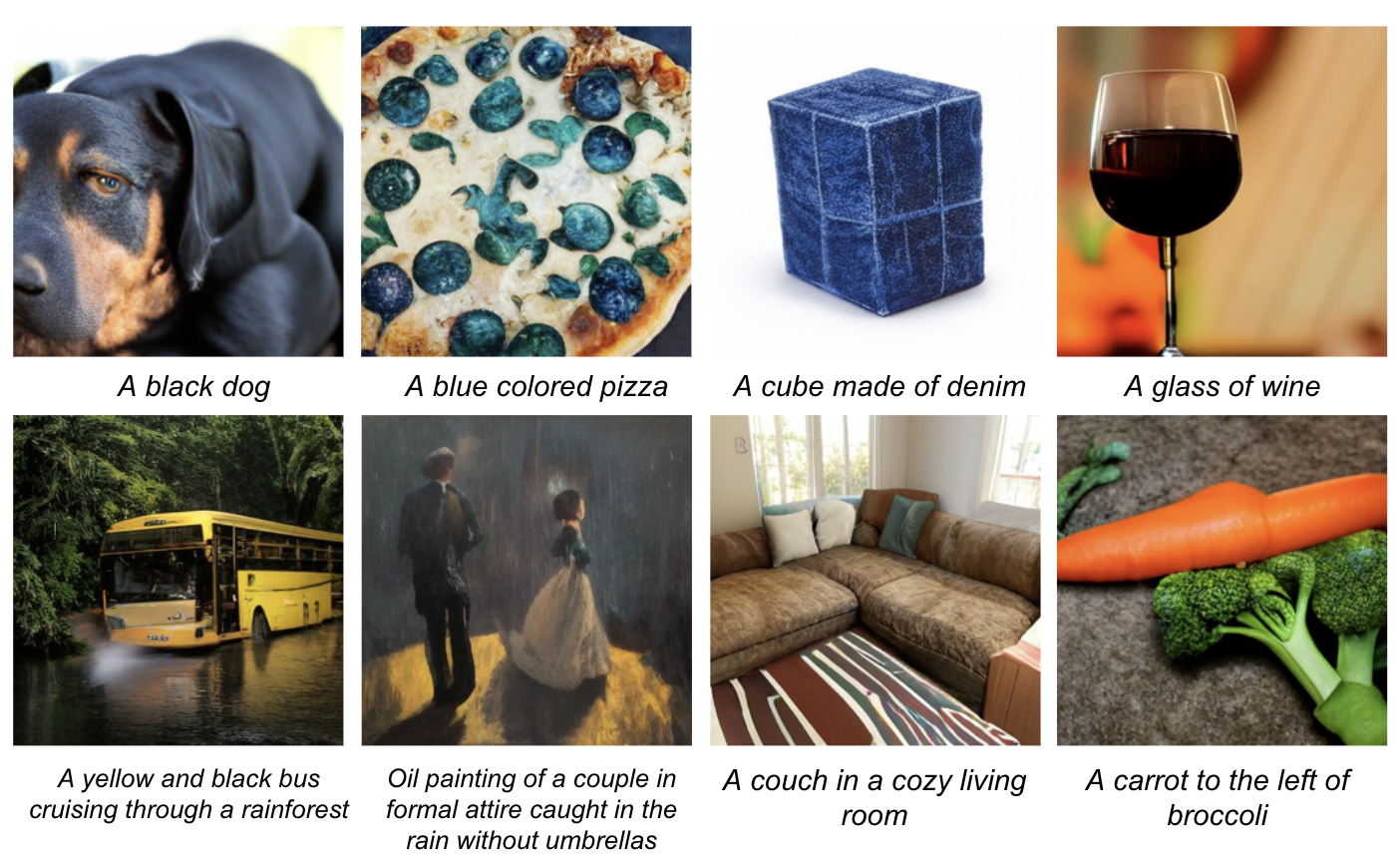

Smaller, faster and cheaper models are enabling use cases that would never have been economically viable with a GPT-4-sized model, from consumer chat experiences to at-scale enterprise data extraction.

Mistral 7B Instruct is a 7-billion-parameter open-source LLM from French startup @MistralAI. Its benchmark scores compare strongly against similar-sized models, and while it can’t compete head-on with OpenAI’s GPT-3.5, it is suitable for many use cases that don’t push reasoning capabilities to the limit. Mistral 7B Instruct is available at competitive prices from a range of providers, including @MistralAI, @perplexity_ai, @togethercompute, @anyscalecompute, @DeepInfra and @FireworksAI_HQ.

See our LLM comparison analysis here: Comparison of AI Models across Quality, Performance, Price | Artificial Analysis