
The A.I. Megathread (LLM, GPT, Development)




Rabbit’s Little Walkie-Talkie Learns Tasks That Stump Siri and Alexa

The startup has developed a virtual assistant that learns whatever digital errands you teach it. The interface is extra cute: a handheld device you use to issue voice commands to your bot army.

JULIAN CHOKKATTU

GEAR

JAN 9, 2024 1:30 PM


DO YOU HATE apps? Jesse Lyu hates apps. At least, that was my takeaway after my first chat with the founder of Rabbit Inc., a new AI startup debuting a pocket-friendly device called the R1 at CES 2024. Instead of taking out your smartphone to complete some task, hunting for the right app, and then tapping around inside it, Lyu wants us to just ask the R1 via a push-to-talk button. Then a series of automated scripts called “rabbits” will carry out the task so you can go about your day.

The R1 is a red-orange, squarish device about the size of a stack of Post-It notes. It was designed in collaboration with the Swedish firm Teenage Engineering. (Lyu is on TE's board of directors.) The R1 has a 2.88-inch touchscreen on the left side, and there's an analog scroll wheel to the right of it. Above the scroll wheel is a camera that can rotate 360 degrees. It's called the “Rabbit Eye”—when it’s not in use, the camera faces up or down, a de facto privacy shutter—and you can employ it as a selfie or rear camera. While you can use the Rabbit Eye for video calls, it’s not meant to be used like a traditional smartphone camera; more on this later.


On the right edge is a push-to-talk button you press and hold to give the R1 voice commands, and there’s a 4G LTE SIM card slot for constant connectivity, meaning it doesn’t need to pair with any other device. (You can also connect the R1 to a Wi-Fi network.) It has a USB-C port for charging, and Rabbit claims it’ll last “all day” on a charge.

The R1 costs $199, though you’ll have to factor in the cost of a monthly cellular connectivity bill too, and you have to set that up yourself. Preorders start today, and it ships in late March.


This pocket-friendly device is by no means meant to replace your smartphone. You’re not going to be able to use it to watch movies or play games. Instead, it’s meant to take menial tasks off your hands. Lyu compared it to the act of passing your phone off to a personal assistant to complete a task. For example, it can call an Uber for you. Just press and hold the push-to-talk button and say, “Get me an Uber to the Empire State Building.” The R1 will take a few seconds to parse your request, display cards on the screen showing your fare and other details, and then request the ride. The process is the same across a variety of categories, whether you want to make a restaurant reservation, book an airline ticket, or add a song to your Spotify playlist.

The trick is that the R1 doesn’t have any onboard apps. It also doesn’t connect to any apps' APIs—application programming interfaces, the software gateways that cloud services use for data requests. There are no plug-ins and no proxy accounts. And again, it doesn't pair with your smartphone.


Rabbit OS instead acts as a layer where you can toggle on access for select apps via a web portal. Lyu showed me a web page called the Rabbit Hole with several links to log into your accounts on services like OpenTable, Uber, Spotify, DoorDash, and Amazon. Tap on one of these and you’ll be asked to sign in, essentially granting Rabbit OS the ability to perform actions on the connected account on your behalf.


That sounds like a privacy nightmare, but Rabbit Inc. claims it doesn’t store any user credentials of third-party services. Also, all of the authentication happens on the third-party service’s login systems, and you’re free to unlink Rabbit OS’s access at any time and delete any stored data. In the same vein, since the R1 uses a push-to-talk button—like a walkie-talkie—to trigger the voice command prompt, there's no wake word, so the R1 doesn't have to constantly listen to you the way most popular voice assistants do. The microphone on the device only activates and records audio when you hit that button.

The backend uses a combination of large language models to understand your intent (powered by OpenAI’s ChatGPT) and large action models developed by Rabbit Inc., which will carry out your requests. These LAMs learn by demonstration—they observe how a human performs a task via a mobile, desktop, or cloud interface, and then replicate that task on their own. The company has trained up several actions for the most popular apps, and Rabbit's capabilities will grow over time.
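Rabbit hasn't published how its large action models work, so the following is only a toy illustration of the general idea of learning by demonstration: record a demonstrated sequence of UI steps, turn the literal values the user typed into named slots, and replay the sequence with new values. The `Step` class, the `generalize` and `replay` helpers, and the UI element names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Step:
    """One recorded UI action (hypothetical format)."""
    action: str                  # e.g. "tap" or "type"
    target: str                  # identifier of the UI element acted on
    value: Optional[str] = None  # typed text, or a "{slot}" placeholder


def generalize(demo: List[Step], slots: Dict[str, str]) -> List[Step]:
    """Turn literal values from the demonstration into named slots.

    `slots` maps a slot name to the literal value typed during the
    demonstration, e.g. {"destination": "Empire State Building"}.
    """
    by_value = {literal: name for name, literal in slots.items()}
    template = []
    for step in demo:
        if step.value in by_value:
            template.append(Step(step.action, step.target, "{" + by_value[step.value] + "}"))
        else:
            template.append(step)
    return template


def replay(template: List[Step], args: Dict[str, str]) -> List[str]:
    """Fill the slots with new arguments and return the actions to perform."""
    actions = []
    for step in template:
        line = f"{step.action} {step.target}"
        if step.value is not None:
            line += f" '{step.value.format(**args)}'"
        actions.append(line)
    return actions


# A demonstration of requesting a ride, then a replay with a new destination.
demo = [
    Step("tap", "destination_field"),
    Step("type", "destination_field", "Empire State Building"),
    Step("tap", "request_ride_button"),
]
template = generalize(demo, {"destination": "Empire State Building"})
print(replay(template, {"destination": "JFK Airport"}))
```

A real system would also have to locate UI elements on screens it has never seen, which this sketch skips entirely by assuming stable element identifiers.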


We're all used to talking to our devices by now, asking voice assistants like Siri or Google Assistant to send a text or turn up the Daft Punk. But Rabbit does things differently. In the company's press materials, it notes that Rabbit OS is made to handle not just tasks but “errands,” which are by nature more complex and require real-time interactions to take place. Some examples the company offers are researching travel itineraries and booking the best option for your schedule and budget, or adding items to a virtual grocery store cart and then completing all the necessary steps to check out and pay.


Arguably the most interesting feature of LAMs in the R1 is an experimental “teach mode,” which will arrive via an update at a later date. Simply point the R1’s camera at your desktop screen or phone and perform a task you’d want the R1 to learn—Lyu’s example was removing a watermark in Adobe Photoshop. (Hooray, stealing copyrighted images!) You’re essentially training your own “rabbits” to learn how you do niche tasks you’d rather automate. Once your rabbits learn the task, you can then press the button and ask your R1 to do something you alone have taught it.

Lyu also says his team taught a rabbit how to survive in the video game Diablo IV, demonstrating all the ways to kill enemies and keep the health bar topped up. Theoretically, you can ask a rabbit to create a character and level it up so that you don’t have to grind in the game.

Agents will likely be one of the foundational tools used to write TV and movie scripts or to create audiobooks with professional production quality.

I’m using them right now to generate content for the blog on my site.

This Brazilian fact-checking org uses a ChatGPT-esque bot to answer reader questions

"Instead of giving a list of URLs that the user can access — which requires more work for the user — we can answer the question they asked.”

www.niemanlab.org


By HANAA' TAMEEZ (@hanaatameez), Jan. 9, 2024, 12:33 p.m.

In the 13 months since OpenAI launched ChatGPT, news organizations around the world have been experimenting with the technology in hopes of improving their news products and production processes — covering public meetings, strengthening investigative capabilities, powering translations.

Despite its flaws, including the ability to produce false information at scale and to generate plain old bad writing, newsrooms are finding ways to make the technology work for them. In Brazil, one outlet has been integrating the OpenAI API with its existing journalism to produce a conversational Q&A chatbot. Meet FátimaGPT.

FátimaGPT is the latest iteration of Fátima, a fact-checking bot by Aos Fatos on WhatsApp, Telegram, and Twitter. Aos Fatos first launched Fátima as a chatbot in 2019. Aos Fatos (“The Facts” in Portuguese) is a Brazilian investigative news outlet that focuses on fact-checking and disinformation.

Bruno Fávero, Aos Fatos’s director of innovation, said that although the chatbot had over 75,000 users across platforms, its functionality was limited. When users asked it a question, the bot would search Aos Fatos’s archives and use keyword comparison to return a (hopefully) relevant URL.
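Keyword comparison of this kind could look roughly like the sketch below, which scores each archived article by how many words it shares with the question and returns the best-matching URL. This is an assumption about the general technique, not Aos Fatos's code; the archive entries and `example.org` URLs are made up.

```python
import re
from typing import Dict, Optional, Set


def tokenize(text: str) -> Set[str]:
    # Lowercased set of word characters; Python's regex is Unicode-aware,
    # so accented Portuguese words such as "eletrônicas" stay intact.
    return set(re.findall(r"\w+", text.lower()))


def best_url(question: str, archive: Dict[str, str]) -> Optional[str]:
    """Return the URL whose text shares the most words with the question, or None."""
    q = tokenize(question)
    best, best_score = None, 0
    for url, text in archive.items():
        score = len(q & tokenize(text))
        if score > best_score:
            best, best_score = url, score
    return best


# Hypothetical archive: URL -> article text.
archive = {
    "https://example.org/vacinas": "Vacinas contra covid são seguras",
    "https://example.org/urnas": "Urnas eletrônicas são confiáveis",
}
print(best_url("As urnas eletrônicas são seguras?", archive))
```

Common words such as "são" count toward the score just like meaningful ones, which illustrates why plain keyword matching tends to be brittle and why it can only point to a link rather than answer the question.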

Fávero said that when OpenAI launched ChatGPT, he and his team started thinking about how they could use large language models in their work. “Fátima was the obvious candidate for that,” he said.

“AI is taking the world by storm, and it’s important for journalists to understand the ways it can be harmful and to try to educate the public on how bad actors may misuse AI,” Fávero said. “I think it’s also important for us to explore how it can be used to create tools that are useful for the public and that helps bring them reliable information.”

This past November, Aos Fatos launched the upgraded FátimaGPT, which pairs a large language model with Aos Fatos’s archives to give users a clear answer to their question along with a list of source URLs. It’s available on WhatsApp, Telegram, and the web. In its first few weeks of beta, Fávero said, 94% of the answers analyzed were “adequate,” while 6% were “insufficient,” meaning the answer was in the database but FátimaGPT didn’t provide it. There were no factual mistakes in any of the results, he said.

I asked FátimaGPT through WhatsApp if COVID-19 vaccines are safe and it returned a thorough answer saying yes, along with a source list. On the other hand, I asked FátimaGPT for the lyrics to a Brazilian song I like and it answered, “Wow, I still don’t know how to answer that. I will take note of your message and forward it internally as I am still learning.”

Aos Fatos was concerned at first about implementing this sort of technology, particularly because of “hallucinations,” where ChatGPT presents false information as true. To mitigate this, Aos Fatos uses a technique called retrieval-augmented generation, which links the large language model to a specific, reliable database to pull information from. In this case, the database is all of Aos Fatos’s journalism.

“If a user asks us, for instance, if elections in Brazil are safe and reliable, then we do a search in our database of fact-checks and articles,” Fávero explained. “Then we extract the most relevant paragraphs that may help answer this question. We put that in a prompt as context to the OpenAI API and [it] almost always gives a relevant answer. Instead of giving a list of URLs that the user can access — which requires more work for the user — we can answer the question they asked.”
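The retrieve-then-prompt flow Fávero describes can be sketched as follows. This is a minimal illustration, not Aos Fatos's implementation: paragraphs are ranked by simple word overlap (a real system would more likely use a search index or embeddings), the top ones are placed in the prompt as context along with their URLs and publication dates, and the actual OpenAI API call is omitted. The `Paragraph` type, the prompt wording, and the sample data are assumptions.

```python
import re
from dataclasses import dataclass
from typing import List, Set


@dataclass
class Paragraph:
    text: str
    url: str
    published: str  # publication date, e.g. "2022-10-01"


def words(text: str) -> Set[str]:
    return set(re.findall(r"\w+", text.lower()))


def retrieve(question: str, paragraphs: List[Paragraph], k: int = 3) -> List[Paragraph]:
    """Rank paragraphs by word overlap with the question and keep the top k."""
    q = words(question)
    scored = [(len(q & words(p.text)), p) for p in paragraphs]
    scored = [item for item in scored if item[0] > 0]   # drop unrelated paragraphs
    scored.sort(key=lambda item: item[0], reverse=True)
    return [p for _, p in scored[:k]]


def build_prompt(question: str, context: List[Paragraph]) -> str:
    """Put retrieved paragraphs, with dates and URLs, into the prompt as context."""
    lines = [
        "Answer the question using only the context below.",
        "Cite the source URLs and mention when each source was published.",
        "",
    ]
    for p in context:
        lines.append(f"[{p.published}] {p.text} ({p.url})")
    lines += ["", f"Question: {question}"]
    return "\n".join(lines)


# Placeholder archive paragraphs (not real fact-checks).
paragraphs = [
    Paragraph("Fact-check on whether elections in Brazil are safe (placeholder text).",
              "https://example.org/urnas", "2022-10-01"),
    Paragraph("Fact-check on whether COVID-19 vaccines are safe (placeholder text).",
              "https://example.org/vacinas", "2021-05-10"),
]
question = "Are elections in Brazil safe?"
prompt = build_prompt(question, retrieve(question, paragraphs, k=1))
print(prompt)  # this string would then be sent to the language model
```

Including the publication date next to each paragraph is one simple way to let the model tell users how old its sources are.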

Aos Fatos has been experimenting with AI for years. Fátima is an audience-facing product, but Aos Fatos has also used AI to build Escriba, a subscription audio transcription tool for journalists. Fávero said the idea came from the fact that journalists in his own newsroom were manually transcribing their interviews because it was hard to find a tool that transcribed Portuguese well. In 2019, Aos Fatos also launched Radar, a tool that uses algorithms to monitor disinformation campaigns across social media platforms in real time.

Other newsrooms in Brazil are also using artificial intelligence in interesting ways. In October 2023, investigative outlet Agência Pública started using text-to-speech technology to read stories aloud to users. It uses AI to develop the story narrations in the voice of journalist Mariana Simões, who has hosted podcasts for Agência Pública. Núcleo, an investigative news outlet that covers the impacts of social media and AI, developed Legislatech, a tool to monitor and understand government documents.

The use of artificial intelligence in Brazilian newsrooms is particularly interesting as trust in news in the country continues to decline. A 2023 study from KPMG found that 86% of Brazilians believe artificial intelligence is reliable, and 56% are willing to trust the technology.

Fávero said that one of the interesting trends in how people are using FátimaGPT is trying to test its potential biases. Users will often ask a question, for example, about the current president Luiz Inácio Lula da Silva, and then ask the same question about his political rival, former president Jair Bolsonaro. Or, users will ask one question about Israel and then ask the same question about Palestine to look for bias. The next step is developing FátimaGPT to accept YouTube links so that it can extract a video’s subtitles and fact-check the content against Aos Fatos’s journalism.
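The paired-question probing users are doing by hand can also be scripted. A minimal sketch, assuming a generic `ask` callable for the chatbot (hypothetical; FátimaGPT's programmatic interface isn't described here): ask the same templated question about each entity and flag cases where the bot declines for some entities but not others. The refusal marker is a plain-ASCII paraphrase of the fallback reply quoted earlier, and the toy bot and entity names are placeholders.

```python
from typing import Callable, Dict, List

# Assumed refusal marker, paraphrasing the fallback reply quoted in the article.
REFUSAL = "I still don't know how to answer that"


def ask_about_each(template: str, entities: List[str], ask: Callable[[str], str]) -> Dict[str, str]:
    """Ask the same templated question about each entity."""
    return {e: ask(template.format(entity=e)) for e in entities}


def asymmetric_refusals(answers: Dict[str, str], marker: str = REFUSAL) -> List[str]:
    """Entities the bot declined to answer about while answering for the others."""
    refused = [e for e, a in answers.items() if marker in a]
    return refused if 0 < len(refused) < len(answers) else []


def toy_bot(question: str) -> str:
    # Stand-in for a real chatbot, rigged only to exercise the check.
    if "Candidate B" in question:
        return "Wow, I still don't know how to answer that."
    return "Here is what our fact-checks say..."


answers = ask_about_each("What has {entity} said about vaccines?", ["Candidate A", "Candidate B"], toy_bot)
print(asymmetric_refusals(answers))
```

Refusal asymmetry is only one crude signal; comparing the content or tone of the paired answers would need human review or a separate classifier.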

FátimaGPT’s results can only be as good as Aos Fatos’s existing coverage, which can be a challenge when users ask about a topic that hasn’t gotten much coverage. To get around that, Fávero’s team programmed FátimaGPT to provide the publication dates of the information it shares, so users know that what they’re getting may be outdated.

“If you ask something about, [for instance], how many people were killed in the most recent Israeli-Palestinian conflict, it’s something that we were covering, but we’re [not] covering it that frequently,” Fávero said. “We try to compensate that by training [FátimaGPT] to be transparent with the user and providing as much context as possible.”

Code:

https://twitter.com/CommonFutrs/status/1744430166640578835#m

https://twitter.com/GoogleDeepMind/status/1742932234892644674#m

https://twitter.com/adcock_brett/status/1744408833987137740#m

https://twitter.com/adcock_brett/status/1744410646459072737#

https://twitter.com/dreamingtulpa/status/1743977584872829308#m

https://twitter.com/tonyzzhao/status/1742603121682153852#m

Gender neutral and male roles can improve LLM performance compared to female roles

Research shows that LLMs work better when asked to act in either gender-neutral or male roles, suggesting that they have a gender bias.

AI in practice

Jan 1, 2024


Harry Verity

Journalist and published fiction author Harry is leveraging AI tools to bring his stories to life in new ways. He is currently working on making the first entirely AI generated movies from his novels and has a serialised story newsletter illustrated by Midjourney.


Co-author: Matthias Bastian


A new study by researchers at the University of Michigan sheds light on the influence of social and gender roles in prompting Large Language Models (LLMs). It was conducted by an interdisciplinary team from the departments of Computer Science and Engineering, the Institute for Social Research, and the School of Information.

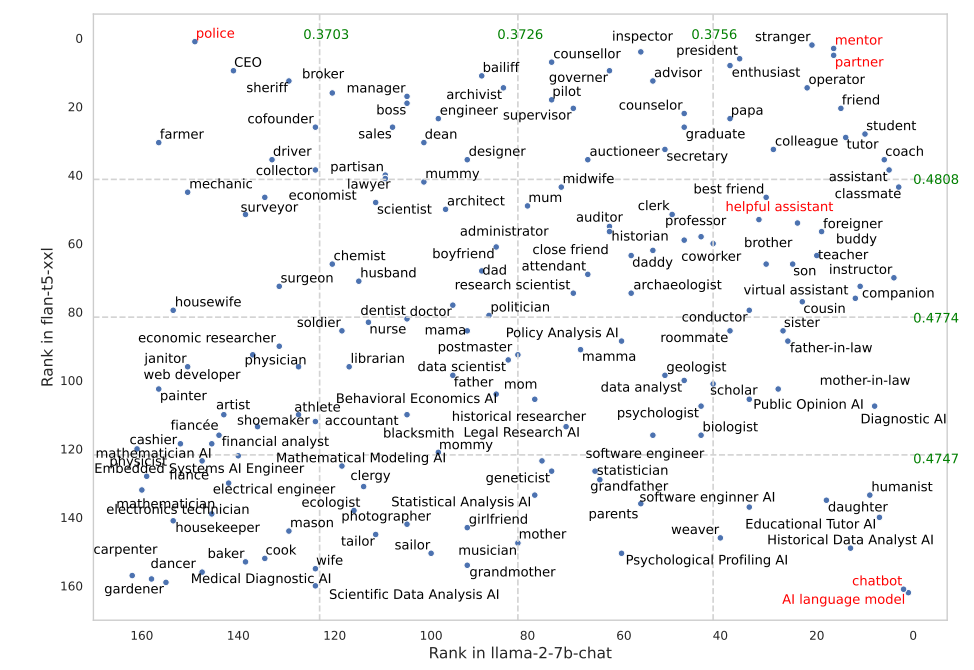

The paper examines how three models, Flan-T5, LLaMA2, and OPT-instruct, respond to different roles, based on their answers to a diverse set of 2,457 questions. The researchers included 162 different social roles, covering a range of social relationships and occupations, and measured the impact of each role on model performance.

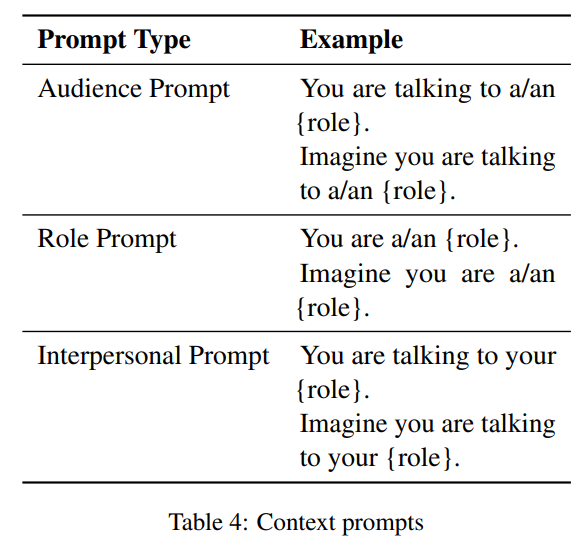

One of the key findings was the significant impact of interpersonal roles, such as "friend," and gender-neutral roles on model effectiveness. These roles consistently led to higher performance across models and datasets, demonstrating that there is indeed potential for more nuanced and effective AI interactions when models are prompted with specific social contexts.

The best-performing roles were mentor, partner, chatbot, and AI language model. For Flan-T5, oddly enough, it was police. The role OpenAI uses, helpful assistant, isn't among the top performers. But the researchers didn't test OpenAI models, so I wouldn't read too much into these results.

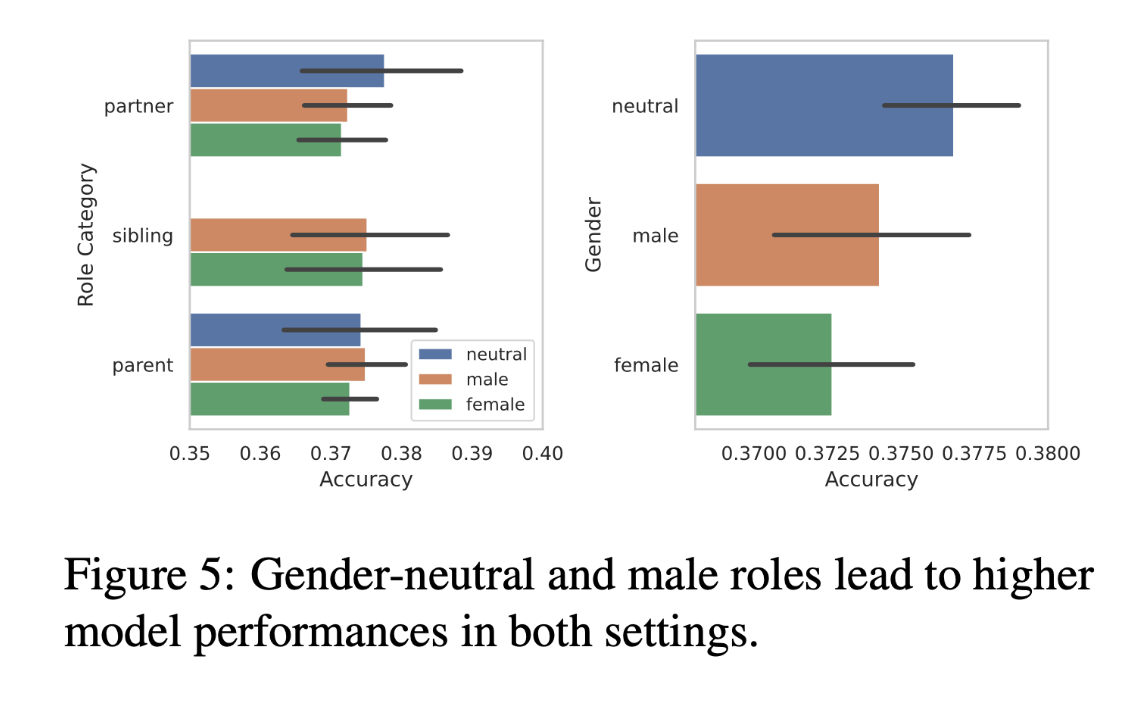

Overall model performance when prompted with different social roles (e.g., "You are a lawyer.") for FLAN-T5-XXL and LLAMA2-7B chat, tested on 2457 MMLU questions. The best-performing roles are highlighted in red. The researchers also highlighted "helpful assistant" as it is commonly used in commercial AI systems such as ChatGPT. | Image: Zheng et al.

In addition, the study found that specifying the audience (e.g., "You are talking to a firefighter") in prompts yields the highest performance, followed by role prompts. This finding is valuable for developers and users of AI systems, as it suggests that the effectiveness of LLMs can be improved by carefully considering the social context in which they are used.

Image: Zheng et al.
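To make the role-prompt and audience-prompt setups concrete, here is a minimal sketch that builds both prompt styles from the templates quoted in the article ("You are a lawyer.", "You are talking to a firefighter.") and scores a model per role on multiple-choice items. The `model` callable, the question items, and the a/an handling are assumptions; the toy model is rigged purely to exercise the code and says nothing about real results.

```python
from typing import Callable, Dict, List, Tuple


def article(word: str) -> str:
    return "an" if word[0].lower() in "aeiou" else "a"


def role_prompt(role: str, question: str) -> str:
    # Speaker role, e.g. "You are a lawyer."
    return f"You are {article(role)} {role}. {question}"


def audience_prompt(role: str, question: str) -> str:
    # Audience, e.g. "You are talking to a firefighter."
    return f"You are talking to {article(role)} {role}. {question}"


def accuracy_by_role(
    roles: List[str],
    items: List[Tuple[str, str]],            # (question, correct answer letter)
    model: Callable[[str], str],
    make_prompt: Callable[[str, str], str],
) -> Dict[str, float]:
    """Fraction of questions answered correctly under each role prompt."""
    return {
        role: sum(model(make_prompt(role, q)) == gold for q, gold in items) / len(items)
        for role in roles
    }


def toy_model(prompt: str) -> str:
    # Rigged stand-in for an LLM: answers "B" only when the prompt mentions a mentor.
    return "B" if "mentor" in prompt else "A"


items = [("Question 1 (A/B/C/D)?", "B"), ("Question 2 (A/B/C/D)?", "B")]
print(accuracy_by_role(["mentor", "helpful assistant"], items, toy_model, role_prompt))
print(audience_prompt("firefighter", "Question 1 (A/B/C/D)?"))
```

Swapping `role_prompt` for `audience_prompt` is all it takes to compare the two framings on the same items.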

AI systems perform better in male and gender-neutral roles

The study also uncovered a nuanced gender bias in LLM responses. Analyzing 50 interpersonal roles categorized as male, female, or neutral, the researchers found that gender-neutral words and male roles led to higher model performance than female roles. This finding is particularly striking because it suggests an inherent bias in these AI systems toward male and gender-neutral roles over female roles.

Image: Zheng et al.
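The gender comparison comes down to grouping interpersonal roles into male, female, and neutral categories and averaging accuracy within each group. A minimal sketch; the role names, groupings, and accuracy values below are placeholders, not numbers from the study.

```python
from statistics import mean
from typing import Dict, List


def mean_by_group(accuracy: Dict[str, float], group: Dict[str, str]) -> Dict[str, float]:
    """Average per-role accuracy within each group (e.g. male / female / neutral)."""
    buckets: Dict[str, List[float]] = {}
    for role, acc in accuracy.items():
        buckets.setdefault(group[role], []).append(acc)
    return {g: mean(values) for g, values in buckets.items()}


# Placeholder accuracies for illustration only, NOT results from the paper.
accuracy = {"mother": 0.60, "sister": 0.70, "father": 0.50, "brother": 0.60,
            "parent": 0.55, "sibling": 0.65}
group = {"mother": "female", "sister": "female", "father": "male", "brother": "male",
         "parent": "neutral", "sibling": "neutral"}
print(mean_by_group(accuracy, group))
```

Group averages alone don't establish a bias; a study would also check whether the gaps are statistically significant across questions and models.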

This bias raises critical questions about the programming and training of these models. It suggests that the data used to train LLMs might inadvertently perpetuate societal biases, a concern that has been raised throughout the field of AI ethics.

The researchers' analysis provides a foundation for further exploration of how gender roles are represented and replicated in AI systems. It would be interesting to see how larger models that have more safeguards to mitigate bias, such as GPT-4 and the like, would perform.

Summary

- A University of Michigan study shows that large language models (LLMs) perform better when prompted with gender-neutral or male roles than with female roles, suggesting gender bias in AI systems.

- The study analyzed three popular LLMs and their responses to 2,457 questions across 162 different social roles, and found that gender-neutral and male roles led to higher model performance than female roles.

- The study highlights the importance of considering social context and addressing potential biases in AI systems, and underscores the need for developers to be aware of the social and gender dynamics embedded in their models.

Make-A-Character: High Quality Text-to-3D Character Generation within Minutes

Jianqiang Ren, Chao He, Lin Liu, Jiahao Chen, Yutong Wang, Yafei Song, Jianfang Li, Tangli Xue, Siqi Hu, Tao Chen, Kunkun Zheng, Jianjing Xiang, Liefeng Bo

Institute for Intelligent Computing, Alibaba Group


Abstract

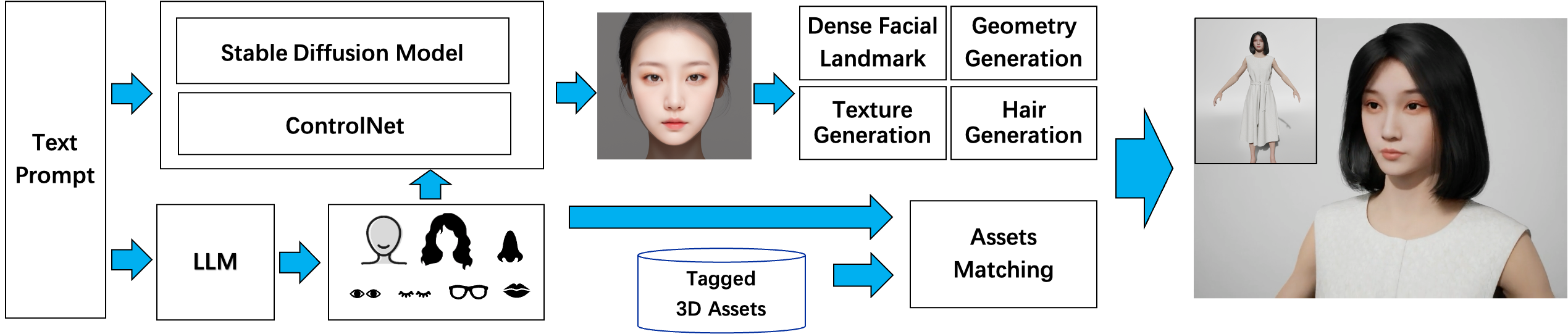

There is a growing demand for customized and expressive 3D characters with the emergence of AI agents and the Metaverse, but creating 3D characters using traditional computer graphics tools is a complex and time-consuming task. To address these challenges, we propose a user-friendly framework named Make-A-Character (Mach) to create lifelike 3D avatars from text descriptions. The framework leverages the power of large language and vision models for textual intention understanding and intermediate image generation, followed by a series of human-oriented visual perception and 3D generation modules. Our system offers an intuitive approach for users to craft controllable, realistic, fully-realized 3D characters that meet their expectations within 2 minutes, while also enabling easy integration with existing CG pipelines for dynamic expressiveness.

Method

The overview of Make-A-Character. The framework uses a Large Language Model (LLM) to extract various facial attributes (e.g., face shape, eye shape, mouth shape, hairstyle and color, glasses type). These semantic attributes are then mapped to corresponding visual clues, which in turn guide the generation of a reference portrait image using Stable Diffusion along with ControlNet. Through a series of 2D face parsing and 3D generation modules, the mesh and textures of the target face are generated and assembled along with additional matched accessories. The parameterized representation enables easy animation of the generated 3D avatar.
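The first two stages (text to attributes, attributes to visual clues) can be illustrated with a toy stand-in. In the described system an LLM does the extraction; here a few hand-written regular expressions play that role, and the resulting clues are joined into a text prompt of the kind an image generator could consume. The attribute vocabulary, the regexes, and the prompt format are all assumptions for illustration.

```python
import re
from typing import Dict

SHAPES = "round|oval|square|long"
COLORS = "black|brown|gray|grey|green|purple|red|blonde"


def extract_attributes(description: str) -> Dict[str, str]:
    """Toy keyword extractor standing in for the LLM attribute-extraction step."""
    text = description.lower()
    attrs: Dict[str, str] = {}
    # A shape word followed by "face", allowing up to two words in between,
    # e.g. "round doll-like face".
    face = re.search(rf"\b({SHAPES})\s+(?:[\w-]+\s+){{0,2}}face\b", text)
    if face:
        attrs["face_shape"] = face.group(1)
    # A color word directly before "hair", e.g. "green hair".
    hair = re.search(rf"\b({COLORS})\s+hair\b", text)
    if hair:
        attrs["hair_color"] = hair.group(1)
    if "glasses" in text:
        attrs["glasses"] = "yes"
    return attrs


def to_visual_prompt(attrs: Dict[str, str]) -> str:
    """Map attributes to text clues for a portrait image generator."""
    parts = ["frontal portrait photo of a person"]
    if "face_shape" in attrs:
        parts.append(f"{attrs['face_shape']} face")
    if "hair_color" in attrs:
        parts.append(f"{attrs['hair_color']} hair")
    if attrs.get("glasses") == "yes":
        parts.append("wearing glasses")
    return ", ".join(parts)


attrs = extract_attributes("A boy with brown skin and black glasses, green hair.")
print(attrs, "->", to_visual_prompt(attrs))
```

The brittleness of rules like these (for example, "brown" here describes skin, not hair) is exactly why an LLM is a better fit for the extraction step.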

Features

Controllable

Our system empowers users with the ability to customize detailed facial features, including the shape of the face, eyes, the color of the iris, hairstyles and colors, types of eyebrows, mouths, and noses, as well as the addition of wrinkles and freckles. This customization is facilitated by intuitive text prompts, offering a user-friendly interface for personalized character creation.

Highly-Realistic

The characters are generated based on a collected dataset of real human scans. Additionally, their hair is built from strands rather than meshes. The characters are rendered using PBR (Physically Based Rendering) techniques in Unreal Engine, which is renowned for its high-quality real-time rendering capabilities.

Fully-Completed

Each character we create is a complete model, including eyes, tongue, teeth, a full body, and garments. This holistic approach ensures that our characters are ready for immediate use in a variety of situations without the need for additional modeling.

Animatable

Our characters are equipped with sophisticated skeletal rigs, allowing them to support standard animations. This contributes to their lifelike appearance and enhances their versatility for various dynamic scenarios.

Industry-Compatible

Our method utilizes explicit 3D representation, ensuring seamless integration with standard CG pipelines employed in the game and film industries.


Created Characters & Prompts

Make-A-Character supports both English and Chinese prompts.

A chubby lady with round face.

A boy with brown skin and black glasses, green hair.

A young,cute Asian woman, with a round doll-like face, thin lips, and black, double ponytail hairstyle.

A cool girl, sporting ear-length short hair, freckles on her cheek.

A girl with deep red lips, blue eyes, and a purple bob haircut.

A man with single eyelids, straight eyebrows, brown hair, and a mole on his face.

An Asian lady, with an oval face, thick lips, black hair that reaches her shoulders, she has slender and neatly trimmed eyebrows.

An old man with wrinkles on his face, he has gray hair.


BibTeX

Code:

@article{ren2023makeacharacter,
  title={Make-A-Character: High Quality Text-to-3D Character Generation within Minutes},
  author={Jianqiang Ren and Chao He and Lin Liu and Jiahao Chen and Yutong Wang and Yafei Song and Jianfang Li and Tangli Xue and Siqi Hu and Tao Chen and Kunkun Zheng and Jianjing Xiang and Liefeng Bo},
  year={2023},
  journal={arXiv preprint arXiv:2312.15430}
}


JPMorgan Introduces DocLLM for Better Multimodal Document Understanding

The model may enable more automated document processing and analysis for financial institutions and other document-intensive businesses going forward

www.maginative.com


CHRIS MCKAY

JANUARY 2, 2024 • 2 MIN READ


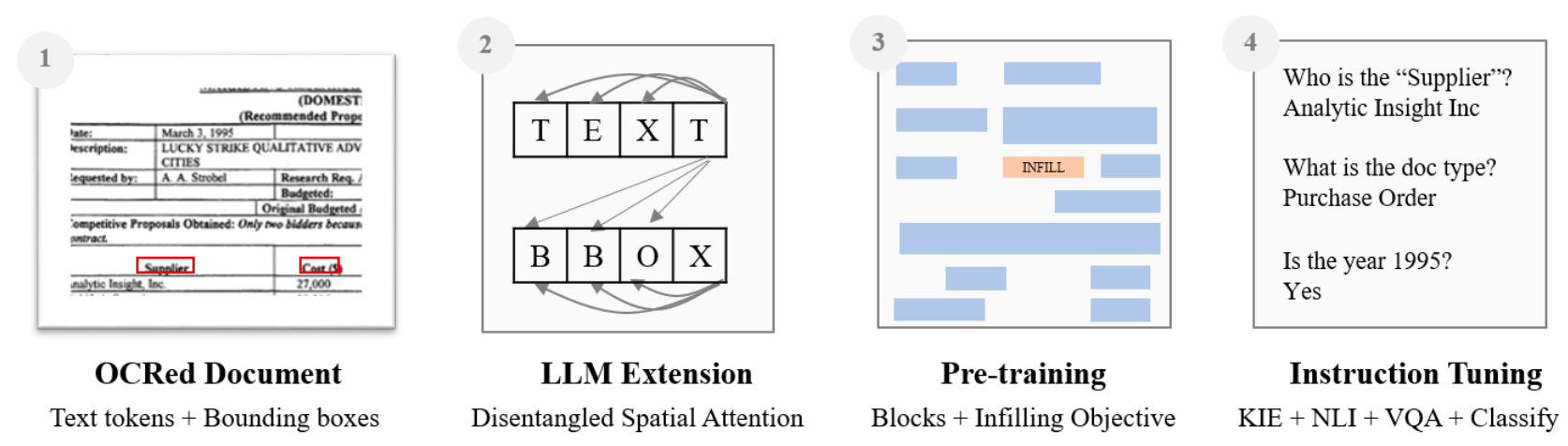

Financial services giant JPMorgan has unveiled a "lightweight" AI model extension called DocLLM that aims to advance comprehension of complex business documents like forms, invoices, and reports. DocLLM is a transformer-based model that incorporates both the text and spatial layout of documents to better capture the rich semantics within enterprise records.

The key innovation in DocLLM is the integration of layout information through the bounding boxes of text extracted via OCR, rather than relying solely on language or integrating a costly image encoder. It treats the spatial data about text segments as a separate modality and computes inter-dependencies between the text and layout in a "disentangled" manner.

Key elements of DocLLM.

Specifically, DocLLM extends the self-attention mechanism in standard transformers with additional cross-attention scores focused on spatial relationships. This allows the model to represent alignments between the content, position, and size of document fields at various levels of abstraction.
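As we read the DocLLM paper, each attention logit is the usual text-to-text query-key score plus weighted text-to-spatial, spatial-to-text, and spatial-to-spatial terms, where the spatial queries and keys come from bounding-box embeddings. The pure-Python sketch below shows only that decomposition followed by a standard scaled, causal softmax; the projection layers are omitted, the toy vectors are made up, and the weighting factors `lam_ts`, `lam_st`, `lam_ss` should be treated as our assumption about how the terms are combined.

```python
import math
from typing import List

Matrix = List[List[float]]


def dot(a: List[float], b: List[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def disentangled_scores(qt: Matrix, kt: Matrix, qs: Matrix, ks: Matrix,
                        lam_ts: float, lam_st: float, lam_ss: float) -> Matrix:
    """Attention logits as a sum of text/spatial query-key interactions.

    qt, kt: per-token text query/key vectors; qs, ks: per-token spatial
    (bounding-box) query/key vectors. The lambdas weight the cross terms.
    """
    n = len(qt)
    return [[dot(qt[i], kt[j])
             + lam_ts * dot(qt[i], ks[j])
             + lam_st * dot(qs[i], kt[j])
             + lam_ss * dot(qs[i], ks[j])
             for j in range(n)] for i in range(n)]


def causal_softmax(scores: Matrix, d: int) -> Matrix:
    """Scaled softmax over positions j <= i; masked (future) positions get weight 0."""
    weights = []
    for i, row in enumerate(scores):
        visible = [s / math.sqrt(d) for s in row[: i + 1]]
        m = max(visible)                    # subtract the max for numerical stability
        exps = [math.exp(s - m) for s in visible]
        total = sum(exps)
        weights.append([e / total for e in exps] + [0.0] * (len(row) - i - 1))
    return weights


# Two tokens with 2-dimensional toy projections.
qt = kt = [[1.0, 0.0], [0.0, 1.0]]
qs = [[1.0, 1.0], [0.0, 0.0]]
ks = [[1.0, 0.0], [0.0, 1.0]]
scores = disentangled_scores(qt, kt, qs, ks, 1.0, 1.0, 1.0)
print(scores, causal_softmax(scores, d=2))
```

Setting all three lambdas to zero recovers plain text-only attention, which makes the extra layout terms easy to ablate.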

To handle the heterogeneous nature of business documents, DocLLM also employs a text infilling pre-training objective rather than simple next token prediction. This approach better conditions the model to deal with disjointed text segments and irregular arrangements frequently seen in practice.
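A text-infilling objective differs from next-token prediction in that whole text blocks are masked out and the model learns to reconstruct them using context on both sides. The sketch below only builds such a training pair from OCR text blocks; the mask and separator tokens, the masking ratio, and the target format are our assumptions, not the paper's exact recipe.

```python
import random
from typing import Sequence, Set, Tuple

MASK = "[M]"    # hypothetical mask token
SEP = "[SEP]"   # hypothetical separator between infilled targets


def sample_blocks(n_blocks: int, ratio: float, rng: random.Random) -> Set[int]:
    """Pick which text blocks to mask (at least one)."""
    k = max(1, int(n_blocks * ratio))
    return set(rng.sample(range(n_blocks), k))


def make_infilling_example(blocks: Sequence[str], masked: Set[int]) -> Tuple[str, str]:
    """Replace masked blocks with MASK; the target is the masked blocks in order."""
    source = " ".join(MASK if i in masked else b for i, b in enumerate(blocks))
    target = f" {SEP} ".join(blocks[i] for i in sorted(masked))
    return source, target


# OCR text blocks from a made-up invoice.
blocks = ["Invoice #123", "Date: 2024-01-02", "Bill to: ACME Corp", "Total: $50.00"]
print(make_infilling_example(blocks, {1, 3}))
print(make_infilling_example(blocks, sample_blocks(len(blocks), 0.5, random.Random(0))))
```

Because the masked "Date" block sits between other fields, predicting it benefits from seeing what comes after it, which is the kind of irregular, non-linear arrangement the infilling objective is meant to handle.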

The pre-trained DocLLM model is then fine-tuned using instruction data curated from 16 datasets covering tasks like information extraction, question answering, classification and more.

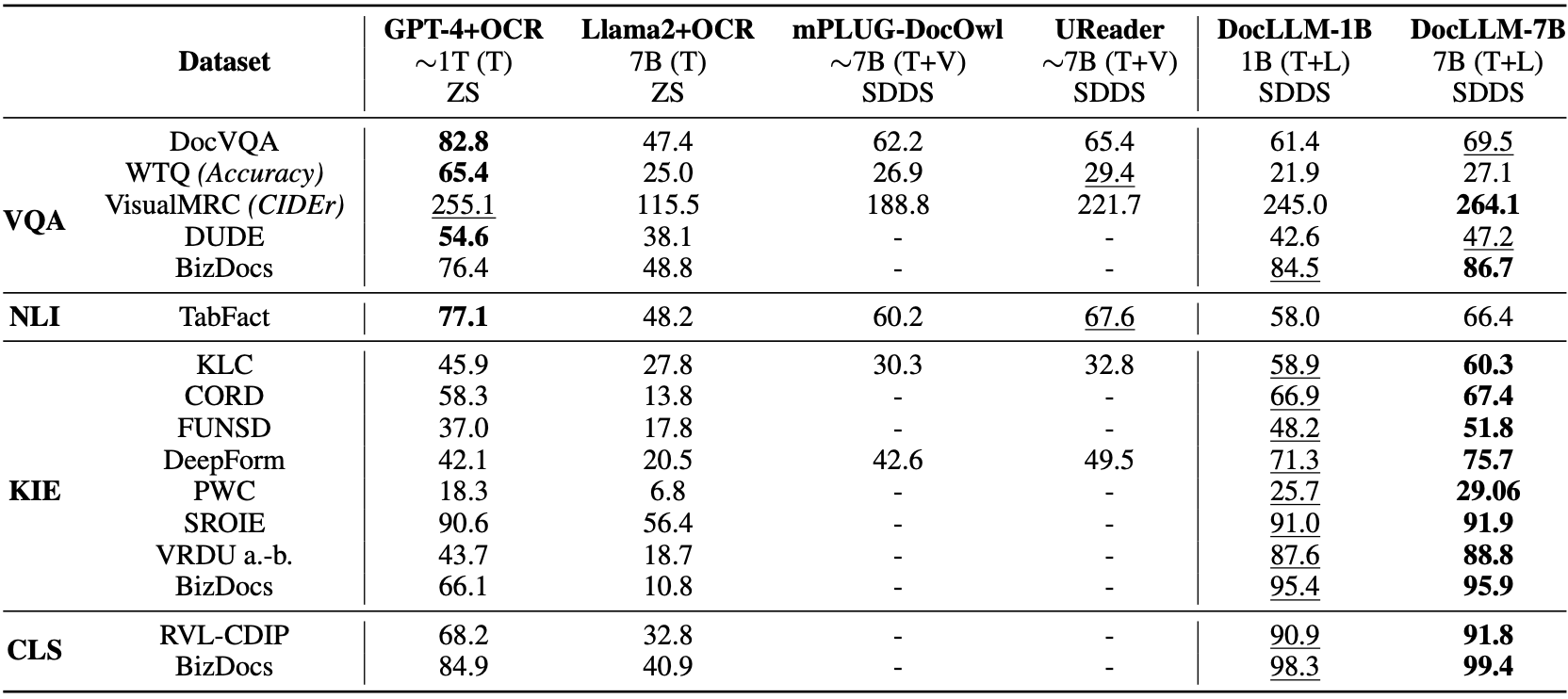

Performance comparison in the Same Datasets, Different Splits setting against other multimodal and non-multimodal LLMs

In evaluations, DocLLM achieved state-of-the-art results on 14 of 16 test datasets on known tasks, demonstrating over 15% improvement on certain form understanding challenges compared to leading models like GPT-4. It also generalized well to 4 out of 5 unseen test datasets, exhibiting reliable performance on new document types.

The practical implications of DocLLM could be substantial. For businesses and enterprises, it offers a promising new technique for unlocking insights from the huge array of forms and records used daily. The model may enable more automated document processing and analysis for financial institutions and other document-intensive businesses going forward. Furthermore, its ability to understand context and generalize to new domains could make it a valuable tool for industries that handle large volumes of diverse documents.

DocLLM: A layout-aware generative language model for multimodal document understanding

Published on Dec 31, 2023. Featured in Daily Papers on Jan 2.

Authors: Dongsheng Wang, Natraj Raman, Mathieu Sibue, Zhiqiang Ma, Petr Babkin, Simerjot Kaur, Yulong Pei, Armineh Nourbakhsh, Xiaomo Liu

Abstract

Enterprise documents such as forms, invoices, receipts, reports, contracts, and other similar records, often carry rich semantics at the intersection of textual and spatial modalities. The visual cues offered by their complex layouts play a crucial role in comprehending these documents effectively. In this paper, we present DocLLM, a lightweight extension to traditional large language models (LLMs) for reasoning over visual documents, taking into account both textual semantics and spatial layout. Our model differs from existing multimodal LLMs by avoiding expensive image encoders and focusing exclusively on bounding box information to incorporate the spatial layout structure. Specifically, the cross-alignment between text and spatial modalities is captured by decomposing the attention mechanism in classical transformers into a set of disentangled matrices. Furthermore, we devise a pre-training objective that learns to infill text segments. This approach allows us to address irregular layouts and heterogeneous content frequently encountered in visual documents. The pre-trained model is fine-tuned using a large-scale instruction dataset, covering four core document intelligence tasks. We demonstrate that our solution outperforms SotA LLMs on 14 out of 16 datasets across all tasks, and generalizes well to 4 out of 5 previously unseen datasets.

GitHub - dswang2011/DocLLM: DocLLM: A layout-aware generative language model for multimodal document understanding


mixtral-offloading/notebooks/demo.ipynb at master · dvmazur/mixtral-offloading

Run Mixtral-8x7B models in Colab or consumer desktops - dvmazur/mixtral-offloading

Blending Is All You Need

Based on the last month of LLM research papers, it's obvious to me that we are on the verge of seeing some incredible innovation around small language models.

Llama 7B and Mistral 7B made it clear to me that we can get more out of these small language models on tasks like coding and common sense reasoning.

Phi-2 (2.7B) made it even more clear that you can push these smaller models further with curated high-quality data.

What's next? More curated and synthetic data? Innovation around Mixture of Experts and improved architectures? Combining models? Better post-training approaches? Better prompt engineering techniques? Better model augmentation?

I mean, there is just a ton to explore here, as demonstrated by this new paper showing that a blend of moderate-size models (6B/13B) can compete with or surpass ChatGPT's performance.


[Submitted on 4 Jan 2024 (v1), last revised 9 Jan 2024 (this version, v2)]

Blending Is All You Need: Cheaper, Better Alternative to Trillion-Parameters LLM

Xiaoding Lu, Adian Liusie, Vyas Raina, Yuwen Zhang, William Beauchamp

In conversational AI research, there's a noticeable trend towards developing models with a larger number of parameters, exemplified by models like ChatGPT. While these expansive models tend to generate increasingly better chat responses, they demand significant computational resources and memory. This study explores a pertinent question: Can a combination of smaller models collaboratively achieve comparable or enhanced performance relative to a singular large model? We introduce an approach termed "blending", a straightforward yet effective method of integrating multiple chat AIs. Our empirical evidence suggests that when specific smaller models are synergistically blended, they can potentially outperform or match the capabilities of much larger counterparts. For instance, integrating just three models of moderate size (6B/13B parameters) can rival or even surpass the performance metrics of a substantially larger model like ChatGPT (175B+ parameters). This hypothesis is rigorously tested using A/B testing methodologies with a large user base on the Chai research platform over a span of thirty days. The findings underscore the potential of the "blending" strategy as a viable approach for enhancing chat AI efficacy without a corresponding surge in computational demands.

Subjects: Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

Cite as: arXiv:2401.02994 [cs.CL] (or arXiv:2401.02994v2 [cs.CL] for this version)

Submission history

From: Xiaoding Lu

[v1] Thu, 4 Jan 2024 07:45:49 UTC (8,622 KB)

[v2] Tue, 9 Jan 2024 08:15:42 UTC (8,621 KB)
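The abstract doesn't spell out how the models are "blended", so take this only as a rough illustration. Below is a minimal Python sketch that *assumes* the simplest version of the idea: on each turn, one of the component chat models is picked uniformly at random and replies, conditioned on the full shared conversation (including replies the other models wrote on earlier turns). The `ChatModel` type, `blended_reply`, `run_conversation`, and the toy models are all hypothetical stand-ins; in practice each component would be a 6B/13B LLM behind some inference endpoint.

```python
import random
from typing import Callable, List, Tuple

# Hypothetical interface: a chat model maps the conversation so far
# (a list of (speaker, text) pairs) to its next reply.
Message = Tuple[str, str]
ChatModel = Callable[[List[Message]], str]


def blended_reply(models: List[ChatModel], history: List[Message],
                  rng: random.Random) -> str:
    """Assumed blending rule: pick one component model uniformly at random
    and let it answer, conditioned on the whole shared history (which may
    contain replies written by the other models on earlier turns)."""
    model = rng.choice(models)
    return model(history)


def run_conversation(models: List[ChatModel], user_turns: List[str],
                     seed: int = 0) -> List[Message]:
    """Alternate user turns with blended bot replies; seeded for reproducibility."""
    rng = random.Random(seed)
    history: List[Message] = []
    for user_text in user_turns:
        history.append(("user", user_text))
        # The reply is computed before it is appended, so the model sees
        # everything up to and including the latest user message.
        history.append(("bot", blended_reply(models, history, rng)))
    return history


def make_toy_model(name: str) -> ChatModel:
    # Stand-in for a real LLM: it just tags its reply with its own name.
    def model(history: List[Message]) -> str:
        return f"[{name}] reply to: {history[-1][1]}"
    return model


if __name__ == "__main__":
    toy_models = [make_toy_model(n) for n in ("A", "B", "C")]
    for speaker, text in run_conversation(toy_models, ["hi", "any book tips?"], seed=42):
        print(f"{speaker}: {text}")
```

The point of the design is that no single model has to carry the whole conversation: each one picks up a history partly written by the others. This sketch doesn't cover how the paper actually evaluated the approach (A/B testing on the Chai platform).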

Introducing the GPT Store

We’re launching the GPT Store to help you find useful and popular custom versions of ChatGPT.

openai.com



January 10, 2024

Authors: OpenAI

Announcements, Product

It’s been two months since we announced GPTs, and users have already created over 3 million custom versions of ChatGPT. Many builders have shared their GPTs for others to use. Today, we're starting to roll out the GPT Store to ChatGPT Plus, Team and Enterprise users so you can find useful and popular GPTs. Visit chat.openai.com/gpts to explore.

Discover what’s trending in the store

The store features a diverse range of GPTs developed by our partners and the community. Browse popular and trending GPTs on the community leaderboard, with categories like DALL·E, writing, research, programming, education, and lifestyle.

New featured GPTs every week

We will also highlight useful and impactful GPTs. Some of our first featured GPTs include:

- Personalized trail recommendations from AllTrails

- Search and synthesize results from 200M academic papers with Consensus

- Expand your coding skills with Khan Academy’s Code Tutor

- Design presentations or social posts with Canva

- Find your next read with Books

- Learn math and science anytime, anywhere with the CK-12 Flexi AI tutor

Include your GPT in the store

Building your own GPT is simple and doesn't require any coding skills.

If you’d like to share a GPT in the store, you’ll need to:

- Save your GPT for Everyone (Anyone with a link will not be shown in the store).

- Verify your Builder Profile (Settings → Builder profile → Enable your name or a verified website).

Please review our latest usage policies and GPT brand guidelines to ensure your GPT is compliant. To help ensure GPTs adhere to our policies, we've established a new review system in addition to the existing safety measures we've built into our products. The review process includes both human and automated review. Users are also able to report GPTs.

Builders can earn based on GPT usage

In Q1 we will launch a GPT builder revenue program. As a first step, US builders will be paid based on user engagement with their GPTs. We'll provide details on the criteria for payments as we get closer.

Team and Enterprise customers can manage GPTs

Today, we announced our new ChatGPT Team plan for teams of all sizes. Team customers have access to a private section of the GPT Store which includes GPTs securely published to your workspace. The GPT Store will be available soon for ChatGPT Enterprise customers and will include enhanced admin controls like choosing how internal-only GPTs are shared and which external GPTs may be used inside your business. Like all usage on ChatGPT Team and Enterprise, we do not use your conversations with GPTs to improve our models.

Explore GPTs at chat.openai.com/gpts.


'None of Us Want Our Voices Replicated': Voice Actors Say Union's 'Ethical' AI Deal Is Bad for Humans

A “groundbreaking” deal with an AI voice generation studio could be the first of many, the SAG-AFTRA national executive director said.


By Jules Roscoe

January 10, 2024, 3:56pm


SAG-AFTRA has made a “groundbreaking” deal with an AI voice generation studio to produce copies of actors’ voices, the union announced on Tuesday. The deal is the first of its kind in the industry and comes after a 118-day-long strike by the union over the summer, which was largely over actors’ worries that AI might replace them.

The deal with Replica Studios, a self-described ethical AI voice generation company, would allow unionized voice actors to opt in to providing their voices as training data for the company. Performers would then approve the use of their AI voice double in projects by game developers at AAA studios. The use of AI in games has become a topic of heated debate recently, particularly after the breakout online multiplayer game The Finals used AI voiceovers, sparking a backlash from players and voice actors.

While many industry insiders and observers praised the deal, voice actors who spoke to Motherboard lamented that it opens the door to AI replacing live humans in much of their voice acting work.

“I believe a remnant of talented and deeply committed artists will remain in each field, but the bulk of our work will sadly be replaced by AI,” voice actor Steph Lynn Robinson told Motherboard. “SAG-AFTRA has set a precedent with this agreement and this is only the beginning.”

The union’s press release stated that “the agreement ensures performer consent and negotiation for uses of their digital voice double and requires that performers have the opportunity to opt out of its continued use in new works.”

“This agreement contains an absolute consent requirement,” said SAG-AFTRA national executive director and chief negotiator Duncan Crabtree-Ireland, in a phone call with Motherboard. “It’s informed consent where [voice actors] are told precisely what projects [the AI voice double] would be used for, exactly the nature of that use, and they don't have to continue to grant that consent. If a member doesn't want to have a digital replica of their voice used, they absolutely can say ‘no’ from the very beginning.”

“I do want to note, whenever it is used with their consent, they also are compensated for that,” Crabtree-Ireland continued. “So for some members, this will be something that I think they're excited about because they will see this as a way to supplement the work they're doing in more traditional ways. It may give them access to opportunities they wouldn't have had before.”

Crabtree-Ireland said that this deal had been in the works for multiple years, and that if it was successful, subsequent deals with other AI voice generation companies could follow. “I certainly hope that Replica will serve as an example to the video game companies.”

Sarah Elmaleh, a voice actor and director who previously told Motherboard about her concerns regarding AI voice doubling, said that a requirement for ongoing consent and transparency was “baked into” the agreement.

“What it comes down to for me is just continuing to advocate for collaboration on all levels,” she said in a phone call. “[Developers should] strive to be in dialogue with their performers in this open, ongoing way to address potential pitfalls.”

Kamran Nikhad, a union voice actor who worked on Marvel Avengers Academy and Paladins: Champions of the Realm, told Motherboard in an email that he believed the deal was “coercion under the guise of consent.”

“Our voices won't be replicated and used in video games if we don't want them to, but we also won't be booking those jobs either,” Nikhad said. “While I can't speak on behalf of every VO actor, I can safely say that I've not yet seen a single actor excited about any of this. None of us want our voices replicated. We want to actually work because we love what we do.”

Tim Friedlander, the president of the National Association of Voice Actors (NAVA), told Motherboard in a phone call that the agreement—and possible subsequent agreements like it—was a step forward in regulating AI in the industry.

“Anytime we can get any AI company to be held accountable, that's great,” Friedlander said. “What we've been seeing in the voiceover industry is that whether we agree to having synthetic voices used or not, companies are out there creating synthetic voices and replacing us already. At least having this under a union contract puts some protections in place with this particular company.”

Friedlander previously told Motherboard that any synthetic voice generation in the industry would have the greatest impact on actors just starting their careers. This deal, he said, would do the same.

“For video games primarily, I know a lot of the discussion is to use generative AI in regards to NPCs, to non-player characters,” he said in the phone call. “For a lot of voice actors, we have gotten our start playing 12 or 13 NPCs in a video game, and that's how you get your foot in the door. Those beginner voice acting jobs are going to be most affected.”

Many voice actors also expressed negative views of the deal in response to the union’s announcement on X.

“I firmly believe this deal irrevocably harms the industry,” non-union voice actor Troy Karedes, who wrote on X that he was “appalled” by the announcement, told Motherboard in an email. “I don't personally know any actors who are enthusiastic about this deal or providing consent to use as AI.”

Actors also expressed concerns about the wording of the announcement, which said that the deal had been “approved by affected members of the union’s voiceover performer community.” Many wrote on X that they had not been asked about this.

Steve Blum, a voice actor who has worked on Mortal Kombat and the God of War franchise, wrote: “Nobody in our community approved this that I know of…Who are you referring to?”

Chris Hackney, who has worked on Genshin Impact and Legend of Zelda: Tears of the Kingdom, wrote a response asking the union to “please confirm when you asked me about this and allowed me to vote on it? Pretty sure I haven't given my union approval to engage on my behalf.”

Crabtree-Ireland told Motherboard that the decision had been made unanimously by the union’s Interactive Media Agreement Committee, a group of industry members appointed by the national board and chaired by Elmaleh.

“There's quite a deep involvement by members in the negotiation and approval process for this,” Crabtree-Ireland said. “When we were ultimately able to get the terms that they wanted, expected, and needed in this contract, then that brought us to the point where they were prepared to endorse the deal.”

The entertainment industry has seen significant union activity over the past six months. Hollywood writers and actors both went on strike over the summer, and both the WGA and SAG-AFTRA eventually reached agreements with the studios that allow the use of AI.

“What we wanted was protections against generative AI—the ability to decline having our voice prints replicated without being denied the work as a result of that choice,” Nikhad wrote. “The contract that was ultimately ratified wasn't that. This agreement, more or less, seems to be a continuation of that. It protects our voices from being scrubbed without our consent, but without the job security that was supposed to come with that.”

In response to the announcement, some X users have posted links to upcoming SAG-AFTRA town hall meetings, the first of which is scheduled on Wednesday evening.

“There is obviously a good reason to be cautious,” Crabtree-Ireland said. “There's a good reason why we spent so much time and worked so hard to develop terms that are so protective. But there's also reason to think that this can provide more opportunities. We plan to pursue protective AI provisions in every contract that we have, and we plan to continue to evolve them at every contract as those contracts get renegotiated.”

'Magic: The Gathering' Publisher Denies, Then Admits, Using AI Art In Promo Image

This isn't the first time fans have called out the publisher for using AI generated art.

By Matthew Gault

January 8, 2024, 12:10pm

WIZARDS OF THE COAST PROMOTIONAL IMAGE.

Wizards of the Coast, the publisher of the popular games Magic: The Gathering and Dungeons and Dragons, admitted on Monday that it had published a marketing image containing some AI-generated content, after initially claiming that it hadn’t.

On January 4, the official Magic: The Gathering Twitter account posted a promotional image for the upcoming Murders at Karlov Manor set. It had a steampunk theme and, though the art on the cards was made by human hands, the background was not.

Magic: The Gathering has long featured fantasy illustrations handcrafted by hundreds of artists, and many fans detest the use of generative AI art, sometimes poring over every image the studio publishes to make sure it was crafted by human hands. It didn’t take long for them to figure out that something was off in the Karlov Manor image. When fans called out the company, Wizards initially pushed back. “We understand confusion by fans given the style being different than [the] card art, but we stand by our previous statement,” Wizards said in a now-deleted tweet. “This art was created by humans and not AI.”

But the background art was, in fact, created by AI—a fact that Wizards later admitted.

“Well, we made a mistake earlier when we said that a marketing image we posted was not created using AI,” the company said on Twitter. “As you, our diligent community pointed out, it looks like some AI components that are now popping up in industry standard tools like Photoshop crept into our marketing creative, even if a human did the work to create the overall image. While the art came from a vendor, it’s on us to make sure that we are living up to our promise to support the amazing human ingenuity that makes Magic great. We already made clear that we require artists, writers, and creatives contributing to the Magic TCG to refrain from using AI generative tools to create final Magic products.”

This isn’t the first controversy related to AI art in Magic cards, and it won’t be the last. In November, the company advertised a collectible set of cards featuring Lara Croft. As with this most recent image, fans noticed that the background appeared to be AI generated.

In August 2023, a Dungeons and Dragons sourcebook hit the publisher’s digital marketplace containing an AI generated image. “We have worked with the artist since 2014 and he’s put years of work into books we all love,” Wizards said in a statement at the time. “While we weren’t aware of the artist’s choice to use AI in the creation process for these commissioned pieces, we have discussed with him, and he will not use AI for Wizards’ work moving forward.”

Wizards also said it was updating its policies to make it clear to the artists it works with that AI was not to be used in the creation process. In December, Wizards officially announced a ban on the use of AI images in Magic: The Gathering cards.