DSPy: Compiling Declarative Language Model Calls into Self-Improving Pipelines

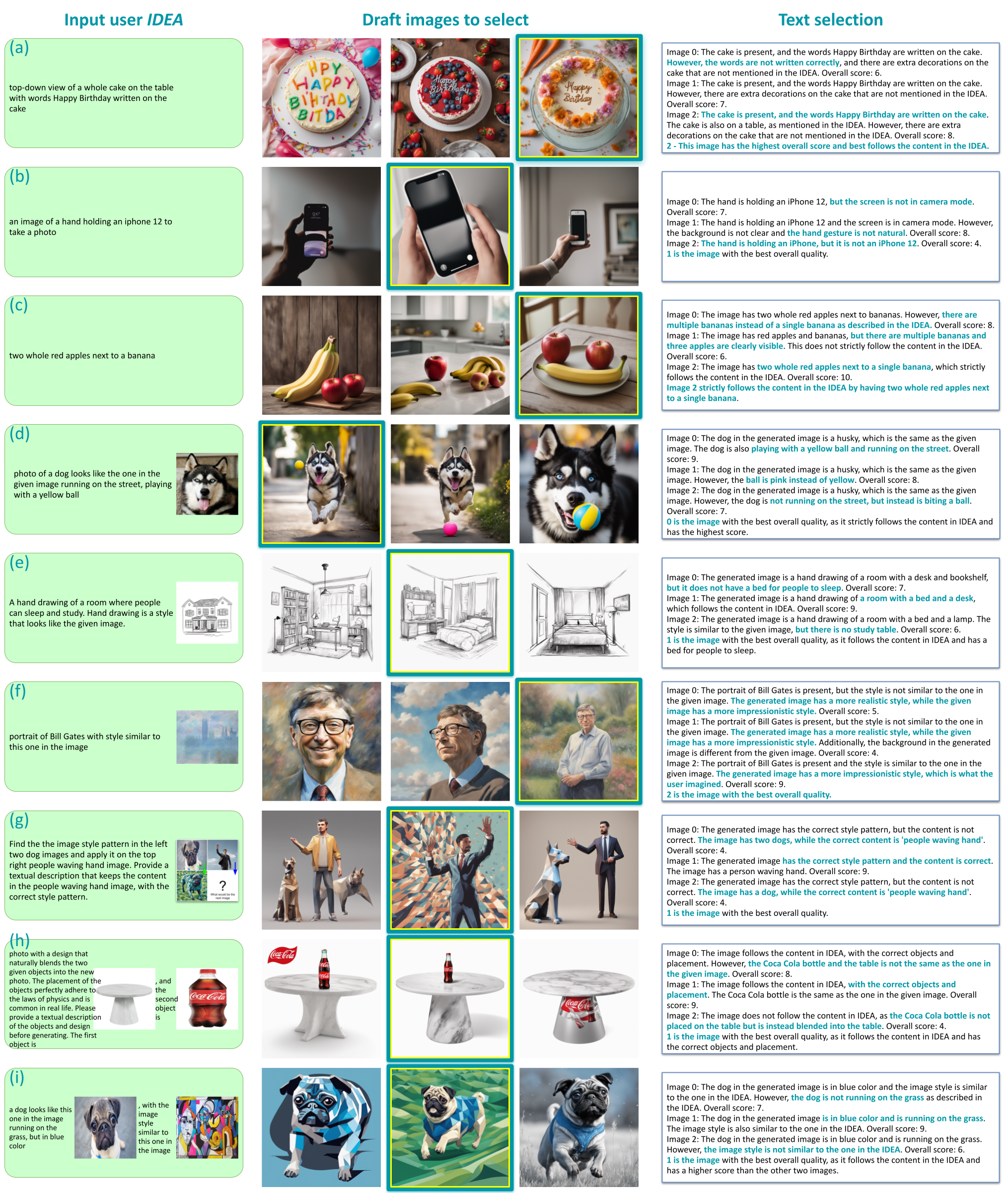



Submitted on 5 Oct 2023

Omar Khattab, Arnav Singhvi, Paridhi Maheshwari, Zhiyuan Zhang, Keshav Santhanam, Sri Vardhamanan, Saiful Haq, Ashutosh Sharma, Thomas T. Joshi, Hanna Moazam, Heather Miller, Matei Zaharia, Christopher Potts

The ML community is rapidly exploring techniques for prompting language models (LMs) and for stacking them into pipelines that solve complex tasks. Unfortunately, existing LM pipelines are typically implemented using hard-coded "prompt templates", i.e. lengthy strings discovered via trial and error. Toward a more systematic approach for developing and optimizing LM pipelines, we introduce DSPy, a programming model that abstracts LM pipelines as text transformation graphs, i.e. imperative computational graphs where LMs are invoked through declarative modules. DSPy modules are parameterized, meaning they can learn (by creating and collecting demonstrations) how to apply compositions of prompting, finetuning, augmentation, and reasoning techniques. We design a compiler that will optimize any DSPy pipeline to maximize a given metric. We conduct two case studies, showing that succinct DSPy programs can express and optimize sophisticated LM pipelines that reason about math word problems, tackle multi-hop retrieval, answer complex questions, and control agent loops. Within minutes of compiling, a few lines of DSPy allow GPT-3.5 and llama2-13b-chat to self-bootstrap pipelines that outperform standard few-shot prompting (generally by over 25% and 65%, respectively) and pipelines with expert-created demonstrations (by up to 5-46% and 16-40%, respectively). On top of that, DSPy programs compiled to open and relatively small LMs like 770M-parameter T5 and llama2-13b-chat are competitive with approaches that rely on expert-written prompt chains for proprietary GPT-3.5. DSPy is available at this https URL
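The abstract's idea of modules that "learn by creating and collecting demonstrations", compiled to maximize a metric, can be illustrated with a toy sketch. This is not DSPy's API or the paper's compiler: `toy_lm`, `Predictor`, `bootstrap_compile`, `exact_match`, and the tiny datasets are all hypothetical names and data invented for illustration, and the "LM" is a deterministic stub that simply behaves better once demonstrations appear in its prompt.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Example:
    question: str
    answer: str


def toy_lm(prompt: str) -> str:
    """Hypothetical stand-in for an LM (an assumption of this sketch).

    It adds the integers in the final question. With no demonstrations in the
    prompt it gives up on operands of 10 or more; with at least one
    demonstration it always answers.
    """
    parts = prompt.split("Q: ")
    n_demos = len(parts) - 2  # parts[0] is empty, parts[-1] is the query
    question = parts[-1].split("\n")[0]
    operands = [int(tok) for tok in question.split() if tok.isdigit()]
    if n_demos == 0 and max(operands) >= 10:
        return "unknown"
    return str(sum(operands))


@dataclass
class Predictor:
    """Toy parameterized module: its learnable parameters are demonstrations."""
    lm: Callable[[str], str]
    demos: List[Example] = field(default_factory=list)

    def __call__(self, question: str) -> str:
        shots = "".join(f"Q: {d.question}\nA: {d.answer}\n" for d in self.demos)
        return self.lm(f"{shots}Q: {question}\nA:")


def exact_match(example: Example, prediction: str) -> bool:
    return prediction.strip() == example.answer


def bootstrap_compile(program, trainset, metric, max_demos=2):
    """Run the program on training inputs and keep the traces that pass the
    metric as few-shot demonstrations for a new copy of the program."""
    demos = []
    for ex in trainset:
        prediction = program(ex.question)
        if metric(ex, prediction):
            demos.append(Example(ex.question, prediction))
        if len(demos) == max_demos:
            break
    return Predictor(lm=program.lm, demos=demos)


def evaluate(program, devset, metric) -> float:
    return sum(metric(ex, program(ex.question)) for ex in devset) / len(devset)


# Made-up data, purely for illustration.
trainset = [Example("1 + 2", "3"), Example("12 + 30", "42"), Example("4 + 5", "9")]
devset = [Example("20 + 22", "42"), Example("3 + 3", "6"), Example("100 + 1", "101")]

zero_shot = Predictor(lm=toy_lm)
compiled = bootstrap_compile(zero_shot, trainset, exact_match)

baseline = evaluate(zero_shot, devset, exact_match)
improved = evaluate(compiled, devset, exact_match)
print(f"zero-shot: {baseline:.2f}, compiled: {improved:.2f}")
```

The point of the sketch is the shape of the loop: the program generates its own demonstrations, a metric filters them, and the filtered traces become the prompt of the compiled program. The gain it prints is built into the stub and says nothing about real LMs.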

| Field | Value |
| --- | --- |
| Subjects | Computation and Language (cs.CL); Artificial Intelligence (cs.AI); Information Retrieval (cs.IR); Machine Learning (cs.LG) |
| Cite as | arXiv:2310.03714 [cs.CL] (or arXiv:2310.03714v1 [cs.CL] for this version) |


Submission history

From: Omar Khattab

[v1] Thu, 5 Oct 2023 17:37:25 UTC (77 KB)

[CL] DSPy: Compiling Declarative Language Model Calls into Self-Improving Pipelines

O Khattab, A Singhvi, P Maheshwari, Z Zhang, K Santhanam, S Vardhamanan… [Stanford University & UC Berkeley & Amazon Alexa AI] (2023)

arxiv.org/abs/2310.03714

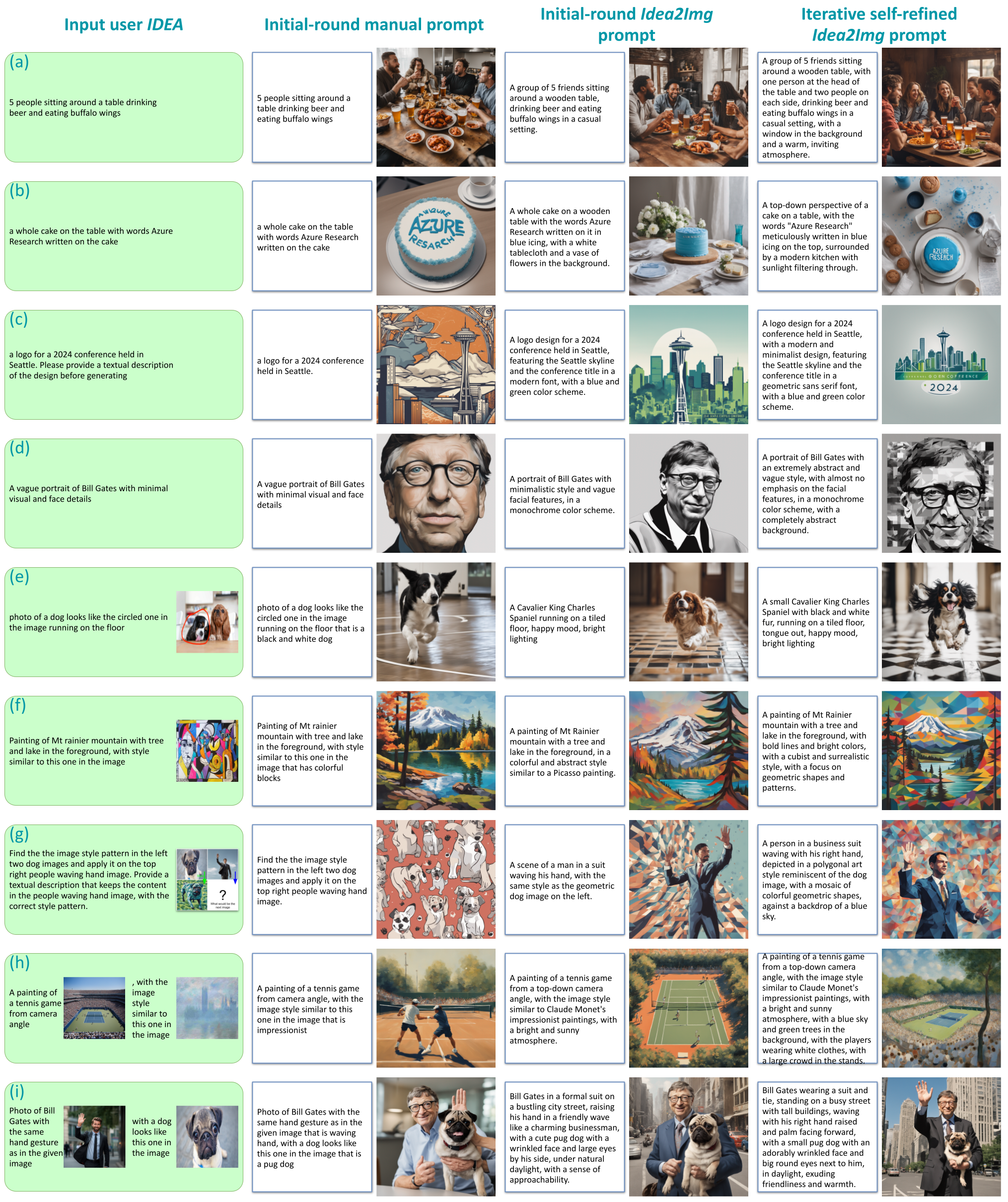

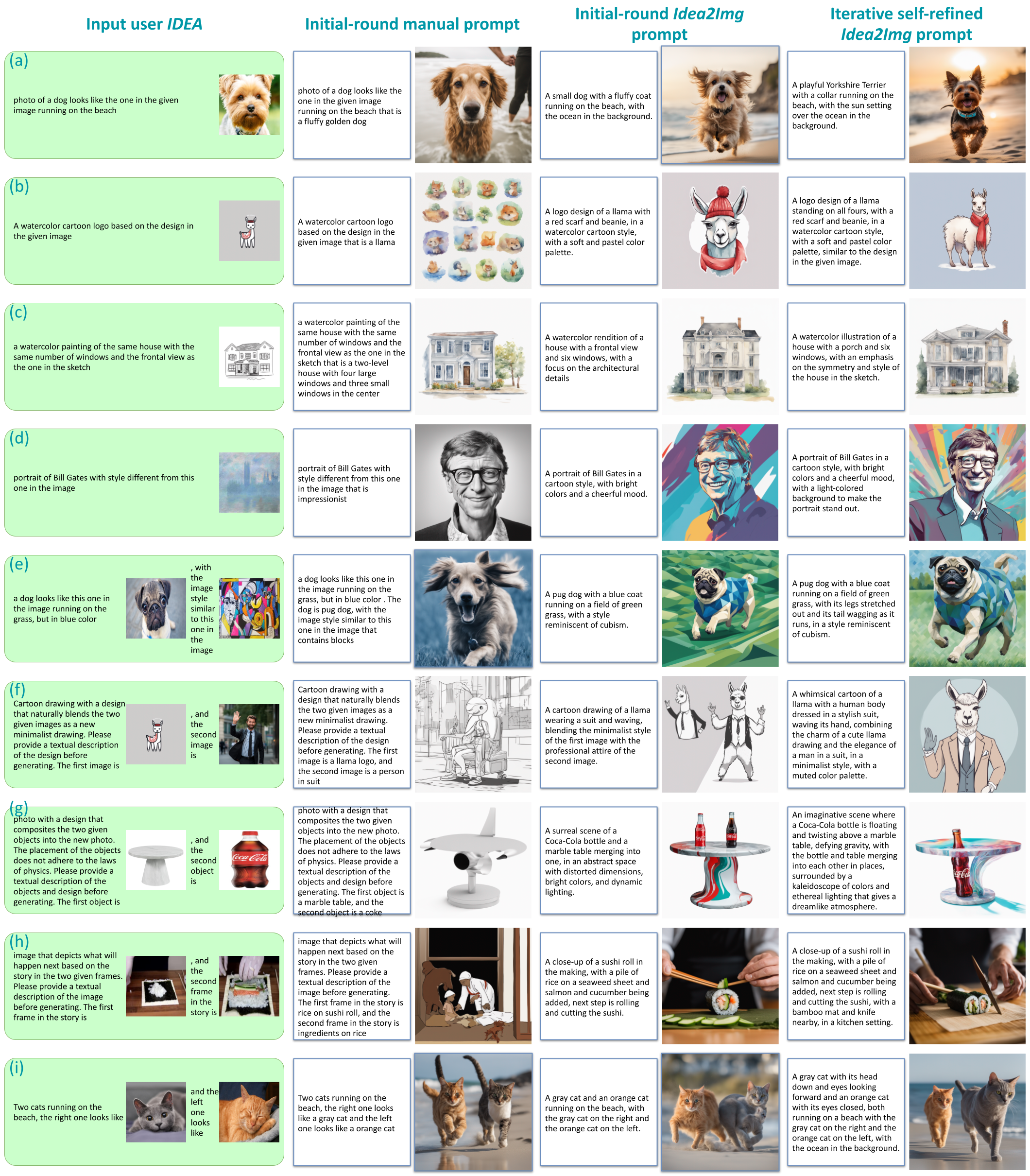

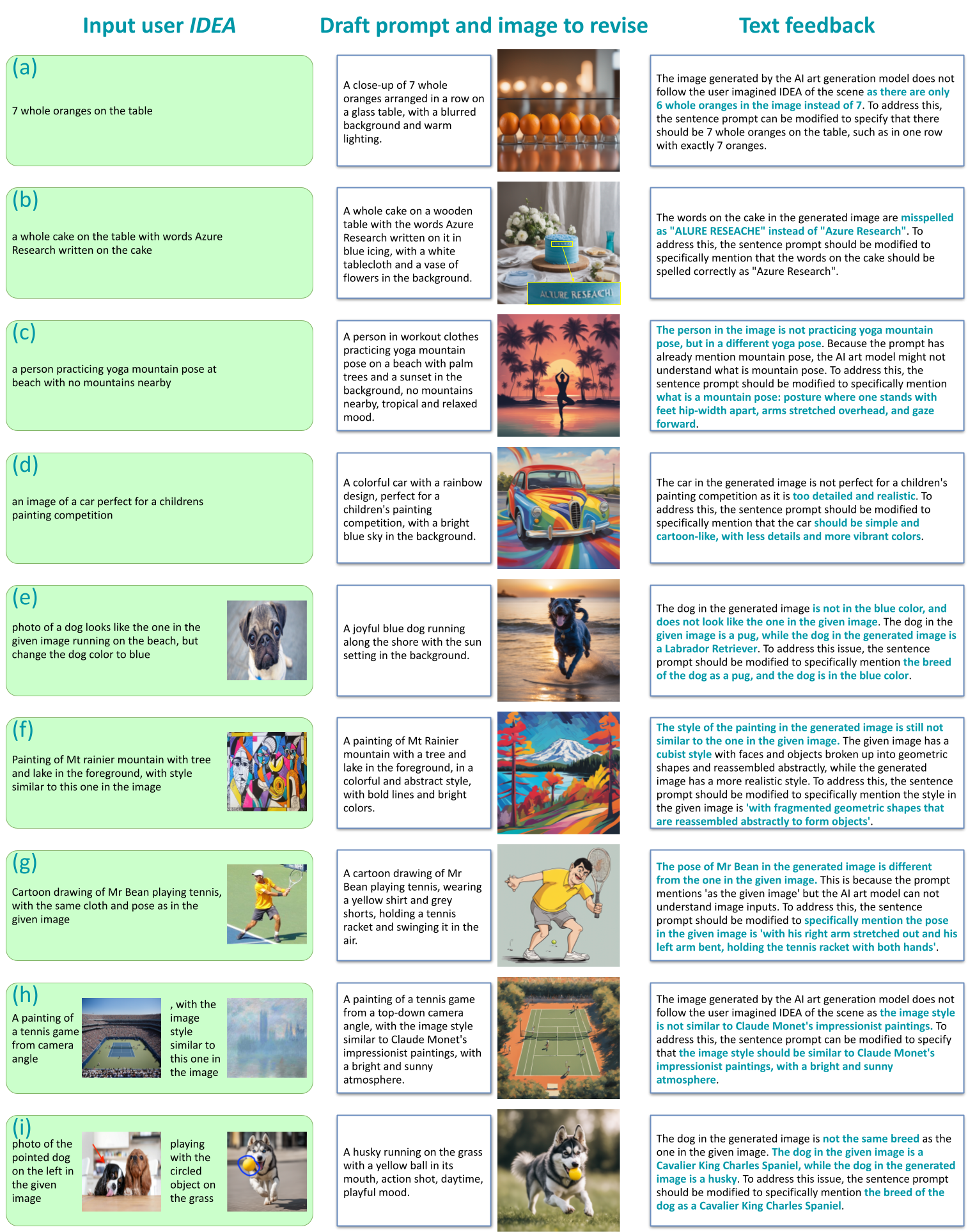

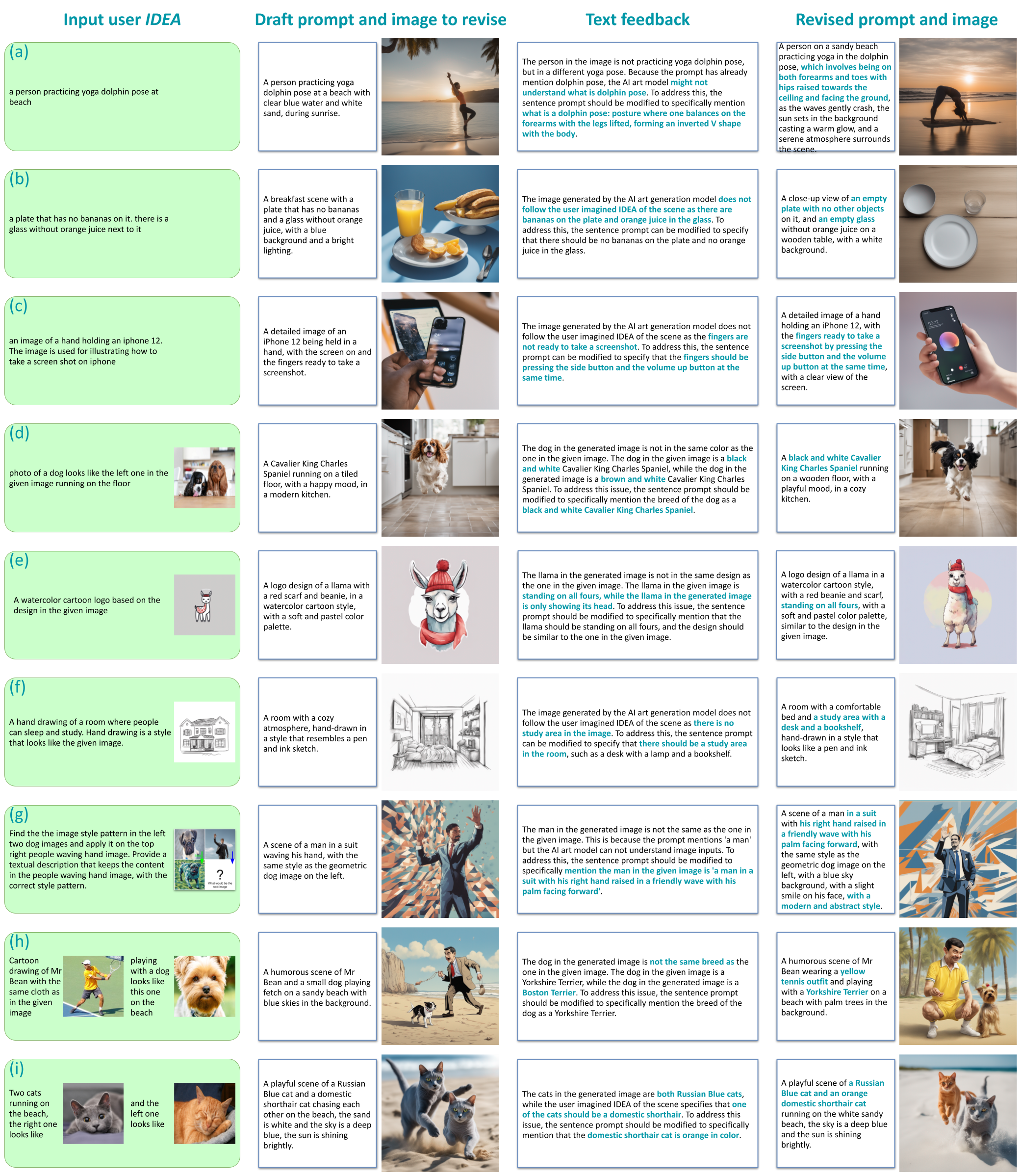

- Introduces DSPy, a new programming model for designing AI systems using pipelines of pretrained language models (LMs) and other tools

- DSPy contributes three main abstractions: signatures, modules, and teleprompters

- Signatures abstract the input/output behavior of a module using natural-language typed declarations

- Modules replace hand-prompting techniques and can be composed into pipelines

- Teleprompters are optimizers that improve modules via prompting or finetuning

- Case studies on math word problems and multi-hop QA show DSPy programs outperform hand-crafted prompts

- With DSPy, relatively small open LMs like llama2-13b-chat can be competitive with approaches that rely on expert-written prompts for large proprietary LMs

- DSPy offers a systematic way to explore complex LM pipelines without extensive prompt engineering
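To make the signature and module abstractions in the list above more concrete, here is a minimal sketch in plain Python. It does not use the DSPy library, and every name in it (`Signature`, `Predict`, `RetrieveThenAnswer`, `echo_lm`, `fake_retrieve`, and the arrow-string notation) is an assumption of this sketch rather than a claim about DSPy's actual API. It only shows how a declared input/output contract can replace a hand-written prompt string, and how such modules compose into a pipeline.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple


def _fields(text: str) -> Tuple[str, ...]:
    return tuple(name.strip() for name in text.split(","))


@dataclass(frozen=True)
class Signature:
    """Declares what a module consumes and produces, not how to prompt for it."""
    inputs: Tuple[str, ...]
    outputs: Tuple[str, ...]

    @classmethod
    def parse(cls, spec: str) -> "Signature":
        left, right = spec.split("->")
        return cls(_fields(left), _fields(right))


class Predict:
    """Toy module: renders a prompt from its signature and calls the LM.
    For brevity it fills only the first declared output field."""

    def __init__(self, spec: str, lm: Callable[[str], str]):
        self.signature = Signature.parse(spec)
        self.lm = lm

    def render(self, **inputs: str) -> str:
        missing = [n for n in self.signature.inputs if n not in inputs]
        if missing:
            raise ValueError(f"missing input fields: {missing}")
        lines = [f"{name}: {inputs[name]}" for name in self.signature.inputs]
        lines.append(f"{self.signature.outputs[0]}:")
        return "\n".join(lines)

    def __call__(self, **inputs: str) -> Dict[str, str]:
        completion = self.lm(self.render(**inputs))
        return {self.signature.outputs[0]: completion.strip()}


class RetrieveThenAnswer:
    """Two modules composed into a small pipeline with ordinary Python control flow."""

    def __init__(self, lm: Callable[[str], str], retrieve: Callable[[str], str]):
        self.make_query = Predict("question -> search_query", lm)
        self.answer = Predict("context, question -> answer", lm)
        self.retrieve = retrieve

    def __call__(self, question: str) -> str:
        query = self.make_query(question=question)["search_query"]
        context = self.retrieve(query)
        return self.answer(context=context, question=question)["answer"]


def echo_lm(prompt: str) -> str:
    """Hypothetical LM stub: reports which field it was asked to fill."""
    requested = prompt.splitlines()[-1].rstrip(":")
    return f"<{requested}>"


def fake_retrieve(query: str) -> str:
    """Hypothetical retriever stub."""
    return f"passages for {query}"


pipeline = RetrieveThenAnswer(echo_lm, fake_retrieve)
print(Predict("question -> answer", echo_lm).render(question="Who wrote it?"))
print(pipeline("Who wrote it?"))
```

Because the prompt text is derived from the signature rather than written by hand, an optimizer could in principle change how each module is prompted (for example by attaching demonstrations) without touching the pipeline code, which is the separation the summary attributes to teleprompters.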
