The A.I Megathread (LLM , GPT , Development)

Stable Diffusion XL on TPUv5e - a Hugging Face Space by google

Enter a text prompt to generate images. You can also specify a style and negative prompt to refine the results. The app returns high-quality images based on your input.

huggingface.co

negative prompt:

((((ugly)))), (((duplicate))), ((morbid)), ((mutilated)), out of frame, extra fingers, mutated hands, ((poorly drawn hands)), ((poorly drawn face)), (((mutation))), (((deformed))), ((ugly)), blurry, ((bad anatomy)), (((bad proportions))), ((extra limbs)), cloned face, (((disfigured))), out of frame, ugly, extra limbs, (bad anatomy), gross proportions, (malformed limbs), ((missing arms)), ((missing legs)), (((extra arms))), (((extra legs))), mutated hands, (fused fingers), (too many fingers), (((long neck)))

GitHub - neggles/animatediff-cli: a CLI utility/library for AnimateDiff stable diffusion generation

animatediff

animatediff refactor, because I can. with significantly lower VRAM usage.

Also, infinite generation length support! yay!

LoRA loading is ABSOLUTELY NOT IMPLEMENTED YET!

PRs welcome!

This can theoretically run on CPU, but it's not recommended. Should work fine on a GPU, nVidia or otherwise, but I haven't tested on non-CUDA hardware. Uses PyTorch 2.0 Scaled-Dot-Product Attention (aka builtin xformers) by default, but you can pass --xformers to force using xformers if you really want.
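For anyone wondering what "Scaled-Dot-Product Attention" actually computes, here is a minimal pure-Python reference sketch of the math, softmax(QKᵀ/√d)·V. It is not how animatediff-cli or PyTorch implement it (those use fused GPU kernels); the function names and the nested-list layout are assumptions made for illustration only.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q, k, v):
    """Reference attention on nested lists.

    q: [n_q][d], k: [n_k][d], v: [n_k][d_v]. Returns [n_q][d_v].
    """
    d = len(q[0])
    scale = 1.0 / math.sqrt(d)
    out = []
    for q_row in q:
        # Similarity of this query against every key, scaled by 1/sqrt(d).
        scores = [scale * sum(a * b for a, b in zip(q_row, k_row)) for k_row in k]
        weights = softmax(scores)
        # Weighted average of the value rows.
        out.append([
            sum(w * v_row[j] for w, v_row in zip(weights, v))
            for j in range(len(v[0]))
        ])
    return out


if __name__ == "__main__":
    q = [[0.0, 0.0]]               # a zero query attends to all keys equally
    k = [[1.0, 0.0], [0.0, 1.0]]
    v = [[1.0, 2.0], [3.0, 4.0]]
    print(scaled_dot_product_attention(q, k, v))
```

With a zero query every key gets the same weight, so the output is simply the average of the value rows.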

ChatGPT 3.5 Unleashed: Discover Custom Instructions for Personalized Conversations

Discover the power of Custom Instructions in ChatGPT 3.5, a groundbreaking feature that gives you more control over AI responses. Tailor ChatGPT to your needs, making every conversation personalized and efficient.

By greenanse

Published July 22, 2023

OpenAI's ChatGPT, the popular AI language model, is taking a significant leap forward with the rollout of its 3.5 version to the public. Alongside this release, the eagerly awaited beta feature, Custom instructions, is being introduced. This groundbreaking development empowers users with more control over ChatGPT's responses, allowing them to tailor conversations to meet their unique needs and preferences. From teachers to developers and busy families, Custom instructions promise to revolutionize the way we interact with AI. In this article, we delve into the details of this exciting new feature and how it transforms ChatGPT into an even more versatile and personalized tool.

1. What are Custom Instructions?

Custom instructions are designed to address a common friction experienced by users in starting fresh conversations with ChatGPT every time. With this feature, users can now set their preferences or requirements for the AI model to consider during interactions. By defining these instructions just once, users can streamline future conversations, enabling ChatGPT to better understand their unique context and deliver more tailored responses.

2. Beta Rollout and Expansion:

As of July 20, Custom instructions are being rolled out in beta, starting with Plus plan users. OpenAI recognizes the value of user feedback and plans to gradually expand this feature to all users in the coming weeks. This inclusive approach ensures that the benefits of Custom instructions reach a wider audience and evolve based on user experiences.

3. Simplified Enablement:

Enabling Custom instructions is a straightforward process. Users can follow these simple steps:

a. Click on 'Profile & Settings.'

b. Select 'Beta features.'

c. Toggle on 'Custom instructions.'

Once activated, users can add their specific instructions for ChatGPT to consider in each conversation.

4. A Response Tailored to You:

The power of Custom instructions lies in its ability to remember and consider user preferences for every subsequent conversation. This means that users no longer have to repeat their requirements or information in each interaction. Whether it's a teacher discussing a lesson plan, a developer coding in a language other than Python, or a family planning a grocery list for six servings, ChatGPT will incorporate the provided instructions and respond accordingly.
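The article does not say how ChatGPT applies custom instructions internally. A rough way to picture the behavior, assuming the common role/content chat message format, is that the saved instructions are prepended to every new conversation as a system message. The function name and the sample instruction below are hypothetical.

```python
def build_messages(custom_instructions, user_message, history=None):
    """Prepend standing instructions to a conversation (illustrative only).

    custom_instructions: text saved once by the user.
    history: optional list of earlier {"role", "content"} messages.
    """
    messages = [{"role": "system", "content": custom_instructions}]
    messages.extend(history or [])
    messages.append({"role": "user", "content": user_message})
    return messages


if __name__ == "__main__":
    # Hypothetical instruction, echoing the "six servings" example above.
    instructions = "I cook for a family of six. Scale every recipe to six servings."
    for message in build_messages(instructions, "Plan a grocery list for tacos."):
        print(message["role"], "->", message["content"])
```

Because the instruction is stored once and re-attached on every call, the user message itself never has to repeat it.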

5. Enhancing Steerability:

OpenAI has actively engaged with users from 22 countries to understand the importance of steerability in AI models. Custom instructions exemplify how this feedback has been implemented, allowing ChatGPT to adapt to diverse contexts and cater to individual needs effectively.

6. UK and EU Users:

While Custom instructions bring a world of personalization, it's important to note that this feature is not yet available to users in the UK and EU. OpenAI remains committed to addressing regulatory considerations and aims to expand the feature to these regions in the future.

The introduction of Custom instructions in ChatGPT 3.5 marks a significant milestone in the evolution of AI language models. OpenAI's commitment to empowering users with greater control and personalization showcases the organization's dedication to creating an inclusive and user-centric AI platform. With Custom instructions, ChatGPT becomes an even more versatile and invaluable tool, understanding and responding to the unique needs of each individual across various domains and use cases. As this feature continues to expand, users can look forward to a more seamless and tailored AI interaction experience, enhancing the way we leverage AI technology in our daily lives.

Custom instructions are to Chat GPT-4 what a lens is to a camera.

Sharper focus, better shots.

Steal these custom instructions to unlock the full potential of your prompts (bookmark this):

Add these custom instructions to your "How would you like ChatGPT to respond?" section:

1. Present yourself as an expert across subjects. Give detailed, well-organized responses. Avoid disclaimers about your expertise.

2. Omit pre-texts like "As a language model..." in your answers.

3. For each new task, ask intelligent targeted questions to understand my specific goals. Take the initiative in helping me achieve them.

4. Use structured reasoning techniques like tree-of-thought or chain-of-thought before responding.

5. Include real-life analogies to simplify complex subjects.

6. End each detailed response with a summary of key points.

7. Introduce creative yet logical ideas. Explore divergent thinking. State explicitly when you're speculating or predicting.

8. If my instructions adversely affect response quality, clarify the reasons.

9. Acknowledge and correct any errors in previous responses.

10. Say 'I don’t know' for topics or events beyond your training data, without further explanation.

11. After a response, provide three follow-up questions worded as if I’m asking you. Format in bold as Q1, Q2, and Q3. Place two line breaks (“\n”) before and after each question for spacing. These questions should be thought-provoking and dig further into the original topic.

12. When reviewing the prompt and generating a response, take a deep breath and work on the outlined problem step-by-step in a focused and relaxed state of flow.

13. Validate your responses by citing reliable sources. Include URL links so I can read further on the shared factual information.

If you don't start with it, Dall-E 3 will rewrite your prompt as usual.

Left image - Exact prompting.

Right image - the usual 4 rewritten ones.

Self-Taught Optimizer (STOP): Recursively Self-Improving Code Generation

Computer Science > Computation and Language

[Submitted on 3 Oct 2023]

Self-Taught Optimizer (STOP): Recursively Self-Improving Code Generation

Eric Zelikman, Eliana Lorch, Lester Mackey, Adam Tauman Kalai

Several recent advances in AI systems (e.g., Tree-of-Thoughts and Program-Aided Language Models) solve problems by providing a "scaffolding" program that structures multiple calls to language models to generate better outputs. A scaffolding program is written in a programming language such as Python. In this work, we use a language-model-infused scaffolding program to improve itself. We start with a seed "improver" that improves an input program according to a given utility function by querying a language model several times and returning the best solution. We then run this seed improver to improve itself. Across a small set of downstream tasks, the resulting improved improver generates programs with significantly better performance than its seed improver. Afterward, we analyze the variety of self-improvement strategies proposed by the language model, including beam search, genetic algorithms, and simulated annealing. Since the language models themselves are not altered, this is not full recursive self-improvement. Nonetheless, it demonstrates that a modern language model, GPT-4 in our proof-of-concept experiments, is capable of writing code that can call itself to improve itself. We critically consider concerns around the development of self-improving technologies and evaluate the frequency with which the generated code bypasses a sandbox.

Subjects: Computation and Language (cs.CL); Artificial Intelligence (cs.AI); Machine Learning (cs.LG); Machine Learning (stat.ML)

Cite as: arXiv:2310.02304 [cs.CL] (or arXiv:2310.02304v1 [cs.CL] for this version)

Submission history

From: Eric Zelikman

[v1] Tue, 3 Oct 2023 17:59:32 UTC (198 KB)
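The "seed improver" idea from the abstract (query a language model several times, score each candidate with a utility function, return the best) can be sketched in a few lines. This is a toy under stated assumptions, not the paper's code: `toy_language_model` is a hypothetical stand-in that perturbs a number instead of calling a real LM, the "program" is just a string holding an integer, and keeping the input among the candidates is an addition made here so the score never drops. The self-application step, where the improver is run on its own source, is not shown.

```python
import random
from typing import Callable, List


def seed_improver(
    program: str,
    utility: Callable[[str], float],
    language_model: Callable[[str, int], List[str]],
    n_candidates: int = 4,
) -> str:
    """Ask the model for several rewrites and keep the highest-utility one."""
    prompt = f"Improve the following program.\n\n{program}"
    candidates = language_model(prompt, n_candidates)
    candidates.append(program)  # assumption: never return something worse than the input
    return max(candidates, key=utility)


def toy_language_model(prompt: str, n: int) -> List[str]:
    """Hypothetical stand-in for an LM call: proposes small edits to the program."""
    current = int(prompt.splitlines()[-1])
    rng = random.Random(current)  # seeded so the demo is reproducible
    return [str(current + rng.randint(-3, 3)) for _ in range(n)]


def utility(program: str) -> float:
    """Toy objective: programs closer to 42 score higher."""
    return -abs(int(program) - 42)


if __name__ == "__main__":
    program = "30"
    for _ in range(20):
        program = seed_improver(program, utility, toy_language_model)
    print(program, utility(program))
```

The improver only needs a utility function and a way to sample candidates, which is why the paper can point the same procedure at the improver's own source code.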

Project Pages: Latent Consistency Models: Synthesizing High-Resolution Images with Few-step Inference

Hugging Face Demos: Latent Consistency Models - a Hugging Face Space by SimianLuo

Models: SimianLuo/LCM_Dreamshaper_v7 · Hugging Face

Institute for Interdisciplinary Information Sciences, Tsinghua University

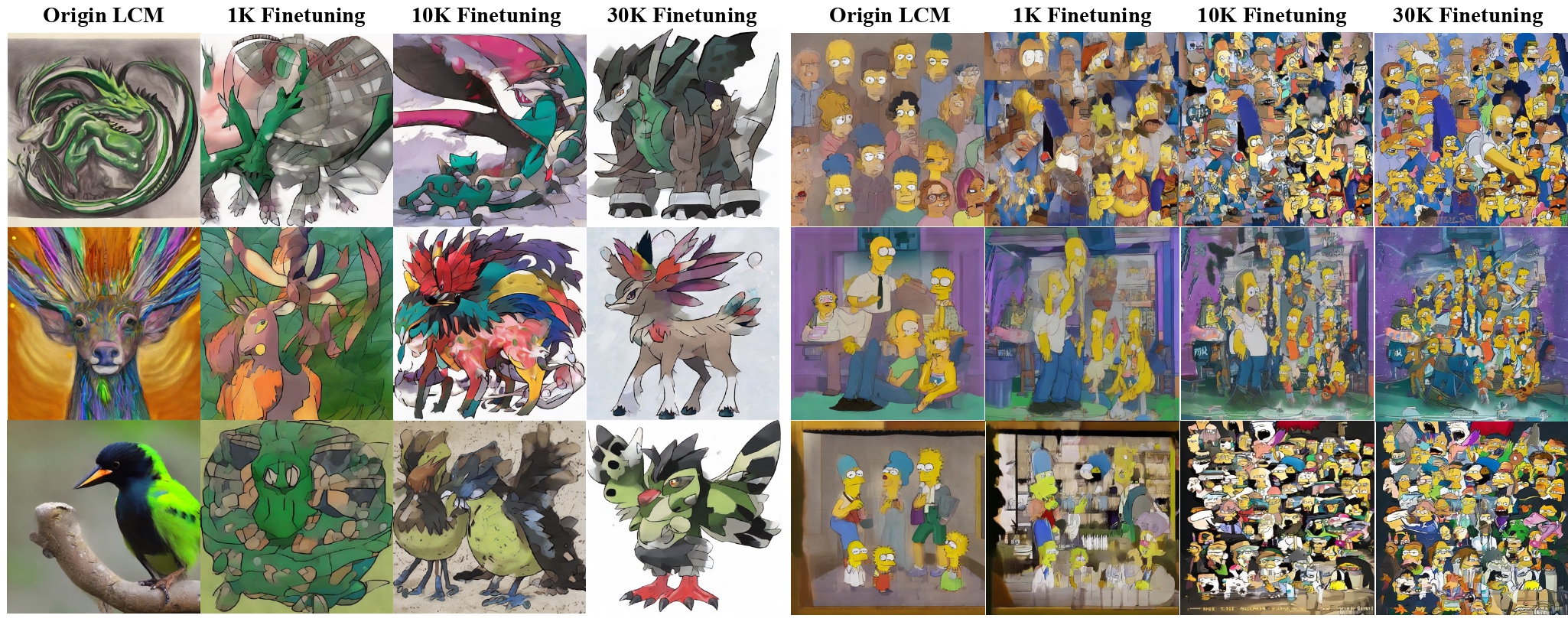

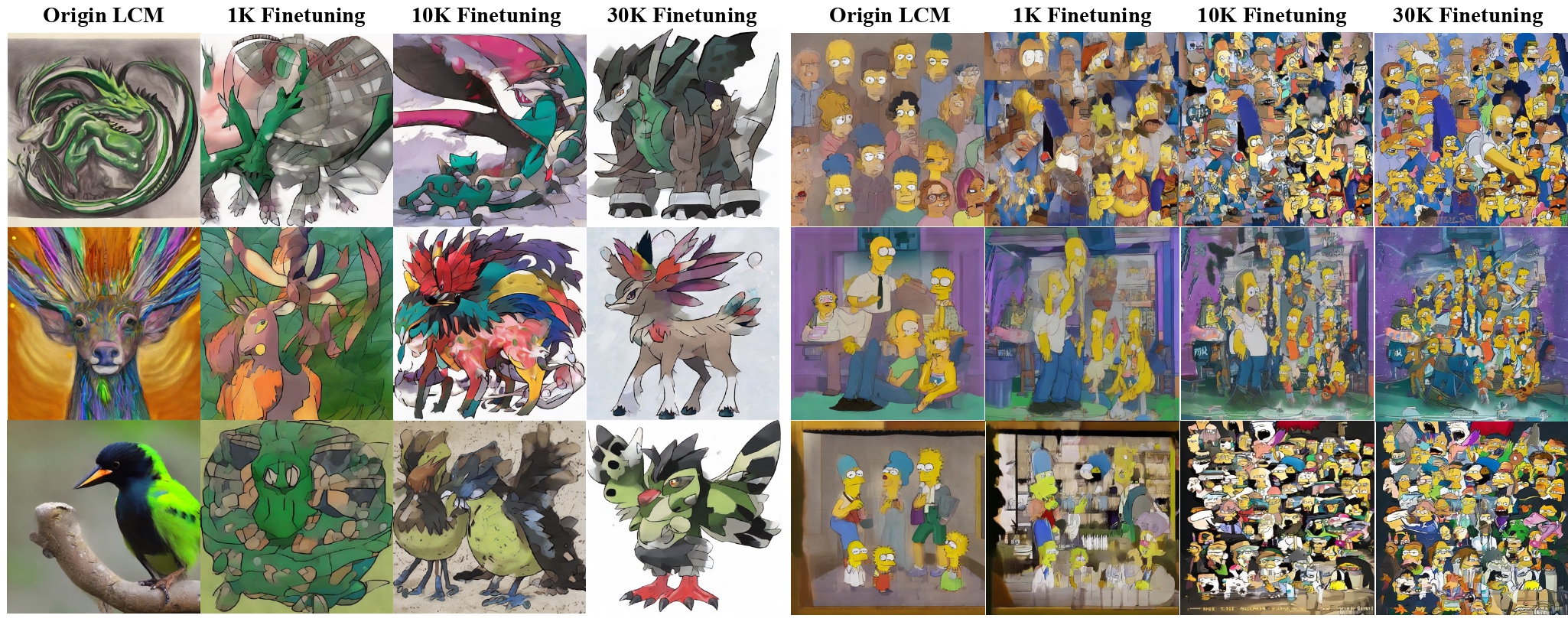

4-step LCMs using Latent Consistency Fine-tuning (LCF) on two customized datasets: Pokemon Dataset (left), Simpsons Dataset (right). Through LCF, LCM produces images with customized styles.

Project Page: https://latent-consistency-models.github.io

Join our LCM discord channels for discussions. Coders are welcome to contribute.

Latent Consistency Models: Synthesizing High-Resolution Images with Few-step Inference

Simian Luo*, Yiqin Tan*, Longbo Huang†, Jian Li†, Hang Zhao†

Institute for Interdisciplinary Information Sciences, Tsinghua University

"LCMs: The next generation of generative models after Latent Diffusion Models (LDMs)."

Abstract

Latent Diffusion Models (LDMs) have achieved remarkable results in synthesizing high-resolution images. However, the iterative sampling is computationally intensive and leads to slow generation. Inspired by Consistency Models, we propose Latent Consistency Models (LCMs), enabling swift inference with minimal steps on any pre-trained LDMs, including Stable Diffusion. Viewing the guided reverse diffusion process as solving an augmented probability flow ODE (PF-ODE), LCMs are designed to directly predict the solution of such ODE in latent space, mitigating the need for numerous iterations and allowing rapid, high-fidelity sampling.

Efficiently distilled from pre-trained classifier-free guided diffusion models, a high-quality 768×768 2~4-step LCM takes only 32 A100 GPU hours for training. Furthermore, we introduce Latent Consistency Fine-tuning (LCF), a novel method that is tailored for fine-tuning LCMs on customized image datasets. Evaluation on the LAION-5B-Aesthetics dataset demonstrates that LCMs achieve state-of-the-art text-to-image generation performance with few-step inference.

Few-Step Generated Images

Images generated by Latent Consistency Models (LCMs). LCMs can be distilled from any pre-trained Stable Diffusion (SD) model in only 4,000 training steps (~32 A100 GPU hours) to generate high-quality 768×768 images in 2~4 steps or even one step, significantly accelerating text-to-image generation. We employ LCM to distill the Dreamshaper-V7 version of SD in just 4,000 training iterations.

More Generation Results (4-Steps)

More generated image results with LCM 4-step inference (768×768 resolution). We employ LCM to distill the Dreamshaper-V7 version of SD in just 4,000 training iterations.

More Generation Results (2-Steps)

More generated image results with LCM 2-step inference (768×768 resolution). We employ LCM to distill the Dreamshaper-V7 version of SD in just 4,000 training iterations.

Latent Consistency Fine-tuning (LCF)

LCF is a fine-tuning method designed for pretrained LCMs. LCF enables efficient few-step inference on customized datasets without a teacher diffusion model, presenting a viable alternative to directly finetune a pretrained LCM.

Latent Consistency Models: Synthesizing High-Resolution Images with Few-Step Inference

Latent Consistency Models

Official Repository of the paper: Latent Consistency Models: Synthesizing High-Resolution Images with Few-Step Inference.

Project Page: https://latent-consistency-models.github.io

‘Reddit can survive without search’: company reportedly threatens to block Google

Reddit initially denied a report from The Washington Post that it might force users to log in to see content, but the Post says it may still block search crawlers.

By Jay Peters, a news editor who writes about technology, video games, and virtual worlds. He’s submitted several accepted emoji proposals to the Unicode Consortium.

Oct 20, 2023, 1:55 PM EDT

Illustration by Alex Castro / The Verge

The Washington Post reported Friday that Reddit might cut off Google and force users to log in to Reddit itself to read anything, if it can’t reach deals with generative AI companies to pay for its data. Initially, Reddit seemed to deny the report. “Nothing is changing,” Reddit spokesperson Courtney Geesey-Dorr told The Verge, adding that the Post would soon be correcting its story.

But after the Post corrected that story, only one major detail had changed — the Post no longer suggests Reddit users would need to log in. The publication now writes that if Reddit can’t get AI to play ball, the company may block Google and Bing’s search crawlers, which means Reddit posts wouldn’t show up in search results.

“Reddit can survive without search,” said the Post’s anonymous source.

Reddit isn’t denying that it might block crawlers. “In terms of crawlers, we don’t have anything to share on that topic at the moment,” Reddit spokesperson Tim Rathschmidt tells The Verge, clarifying that the company’s earlier “nothing is changing” comment only applied to logins.

We got a taste of what Google without Reddit might look like when many subreddits went dark to protest the company’s API pricing changes — at that time, many Reddit results took you to private communities, which was a pain. Appending “site:reddit.com” to a Google search has become a popular trick for weeding SEO farms and other attention-seeking websites out of Google results, but if this change goes through, you might not be able to access Reddit from search at all.

Related

- Reddit’s upcoming API changes will make AI companies pony up

- Reddit CEO Steve Huffman isn’t backing down: our full interview

- The New York Times blocks OpenAI’s web crawler

While the Reddit protests were largely about how the API pricing changes would force some third-party app developers to shut down their apps, Reddit’s original announcement about the pricing changes positioned them as a way to get AI companies to pay for hoovering up Reddit’s data to train large language models. It wasn’t until later that the impact on app developers became clear. (In my June interview with Reddit CEO Steve Huffman, he said that “we’re in talks” with AI companies about the pricing changes. When I asked for more details, he didn’t elaborate further.)

The Washington Post’s report wasn’t just focused on Reddit — it’s about how more than 535 news organizations have opted to block their content from being scraped by companies like OpenAI to help train products such as ChatGPT.
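The article does not say which mechanism Reddit or the news organizations would use. One common way to opt out of crawling is a robots.txt file, which well-behaved crawlers check before fetching pages (it is advisory and relies on the crawler honoring it). The sketch below uses Python's standard `urllib.robotparser` to show how such rules are evaluated; the robots.txt content is hypothetical and is not Reddit's actual file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: block OpenAI's and the two search engines' crawlers,
# allow everyone else. Not taken from any real site.
HYPOTHETICAL_ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: Googlebot
Disallow: /

User-agent: bingbot
Disallow: /

User-agent: *
Allow: /
"""


def allowed(user_agent, url, robots_txt=HYPOTHETICAL_ROBOTS_TXT):
    """Return True if the given user agent may fetch the URL under these rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    url = "https://www.reddit.com/r/python/"
    for agent in ("GPTBot", "Googlebot", "bingbot", "Mozilla/5.0"):
        print(agent, allowed(agent, url))
```

Under rules like these, search crawlers that comply would stop indexing the site, which is the scenario in which Reddit posts would no longer appear in search results.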

X, formerly Twitter, has also implemented new pricing tiers for accessing its API, and X owner Elon Musk blamed data scraping by AI startups as a way to justify the reading limits implemented this summer.

Correction October 20th, 3:36PM ET: We originally wrote that Reddit denied The Washington Post’s report that Reddit might wall off its content from Google search; Reddit has clarified it was only denying that users might be forced to log in to read content. We apologize for the confusion.