That conversation tells me that it’s hard to recreate nature.

How can you “teach” a system to fully think and discover new things? Do people even know how humans think?

Trial and error. Unless you believe that God simply gave humans intelligence, human brains must have learned to think the same way at some point.

From another tweet:

The MLC process using examples:

Imagine a language model named Charles.

Let's say Charles is presented with some novel words and a few examples that demonstrate their meaning:

Study examples:

glerk → RED CIRCLE

blicket → BLUE CIRCLE

Now Charles is given a new word and asked to interpret it systematically based on the examples:

Query:

fep

Charles leverages its compositional skills nurtured by MLC to infer that fep likely maps to a new color, since the examples mapped individual words to colored circles. It responds:

GREEN CIRCLE

In another episode, Charles is given some examples showing words that combine other words:

Study examples:

glerk kiki blicket → BLUE CIRCLE RED CIRCLE

blicket kiki glerk → RED CIRCLE BLUE CIRCLE

Query:

fep kiki glip

Charles recognizes kiki is combining words. It systematically composes the likely meanings of fep and glip from the examples, responding:

PURPLE CIRCLE ORANGE CIRCLE
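
The second episode can be sketched as a toy interpreter. This is only an illustration of the rule Charles is supposed to infer, not the neural network from the paper: the function name `interpret` is hypothetical, the "kiki swaps the two sides" rule is read off the two study examples, and the meanings assigned to fep and glip are assumptions chosen to reproduce the response above (the study examples do not define them).

```python
# Toy sketch of the rule implied by the study examples; not the MLC model itself.
def interpret(phrase, lexicon):
    """Map a phrase to output symbols, treating 'kiki' as 'emit the right side first'."""
    words = phrase.split()
    if "kiki" in words:
        i = words.index("kiki")
        left, right = words[:i], words[i + 1:]
        # Assumed rule inferred from the study examples: the two sides swap order.
        return interpret(" ".join(right), lexicon) + interpret(" ".join(left), lexicon)
    return [lexicon[w] for w in words]

# Word meanings used in the study examples.
study_lexicon = {"glerk": "RED CIRCLE", "blicket": "BLUE CIRCLE"}

# Assumed meanings for the query words (hypothetical; picked to match the response).
query_lexicon = {**study_lexicon, "fep": "ORANGE CIRCLE", "glip": "PURPLE CIRCLE"}

print(interpret("glerk kiki blicket", study_lexicon))
print(interpret("blicket kiki glerk", study_lexicon))
print(interpret("fep kiki glip", query_lexicon))
```

The point of MLC is that nobody hand-writes a function like this; the network has to work out the rule from the study examples alone.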

By training on many such episodes requiring rapid generalization, MLC enables Charles to learn how to learn: to systematically compose meanings from limited examples.

This illustrates how the curriculum of compositional reasoning tasks teaches the model to exhibit human-like systematicity in novel situations, as quantified by how closely its performance matches that of people.

The meta-learning for compositionality approach achieves the systematicity and flexibility needed for human-like generalization.

www.nature.com

snippet:

Human-like systematic generalization through a meta-learning neural network

Nature volume 623, pages 115–121 (2023)

Abstract

The power of human language and thought arises from systematic compositionality—the algebraic ability to understand and produce novel combinations from known components. Fodor and Pylyshyn

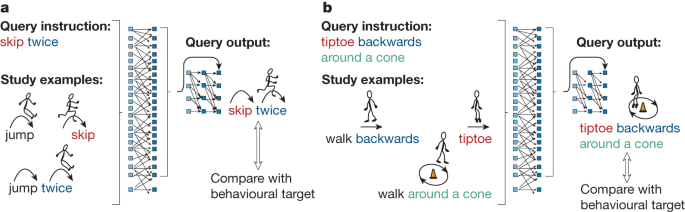

1 famously argued that artificial neural networks lack this capacity and are therefore not viable models of the mind. Neural networks have advanced considerably in the years since, yet the systematicity challenge persists. Here we successfully address Fodor and Pylyshyn’s challenge by providing evidence that neural networks can achieve human-like systematicity when optimized for their compositional skills. To do so, we introduce the meta-learning for compositionality (MLC) approach for guiding training through a dynamic stream of compositional tasks. To compare humans and machines, we conducted human behavioural experiments using an instruction learning paradigm. After considering seven different models, we found that, in contrast to perfectly systematic but rigid probabilistic symbolic models, and perfectly flexible but unsystematic neural networks, only MLC achieves both the systematicity and flexibility needed for human-like generalization. MLC also advances the compositional skills of machine learning systems in several systematic generalization benchmarks. Our results show how a standard neural network architecture, optimized for its compositional skills, can mimic human systematic generalization in a head-to-head comparison.

Main

People are adept at learning new concepts and systematically combining them with existing concepts. For example, once a child learns how to ‘skip’, they can understand how to ‘skip backwards’ or ‘skip around a cone twice’ due to their compositional skills. Fodor and Pylyshyn [1] argued that neural networks lack this type of systematicity and are therefore not plausible cognitive models, leading to a vigorous debate that spans 35 years [2–5]. Counterarguments to Fodor and Pylyshyn [1] have focused on two main points. The first is that human compositional skills, although important, may not be as systematic and rule-like as Fodor and Pylyshyn indicated [3, 6, 7]. The second is that neural networks, although limited in their most basic forms, can be more systematic when using sophisticated architectures [8–10]. In recent years, neural networks have advanced considerably and led to a number of breakthroughs, including in natural language processing. In light of these advances, we and other researchers have reformulated classic tests of systematicity and reevaluated Fodor and Pylyshyn’s arguments [1]. Notably, modern neural networks still struggle on tests of systematicity [11–18], tests that even a minimally algebraic mind should pass [2]. As the technology marches on [19, 20], the systematicity debate continues.

In this Article, we provide evidence that neural networks can achieve human-like systematic generalization through MLC, an optimization procedure that we introduce for encouraging systematicity through a series of few-shot compositional tasks (Fig. 1). Our implementation of MLC uses only common neural networks without added symbolic machinery, and without hand-designed internal representations or inductive biases. Instead, MLC provides a means of specifying the desired behaviour through high-level guidance and/or direct human examples; a neural network is then asked to develop the right learning skills through meta-learning [21].

EDIT: I asked an AI to dumb it (the tweet) down.

Sure, let's break it down:

This is about a big breakthrough in artificial intelligence (AI). For a long time, people thought that AI couldn't think like humans because it's based on statistics and not on understanding things the way we do. But this new research shows that AI can actually learn to think more like us.

The researchers used a new method called MLC to train an AI model. They gave the model lots of small tasks that required it to think in a human-like way. The model did really well, and it even made some of the same mistakes that humans do, which shows that it's not just blindly following rules but actually understanding things.

This is a big deal because it means we can use AI to understand more about how our own brains work. It also means that AI can be more useful because it can understand things in a more human-like way.

The researchers also shared their work so that other people can learn from it and build on it. They published a paper about it and even shared the code they used.

Here's an example of how it works:

Let's say we have an AI model named Charles. We teach Charles that the word "glerk" means "red circle" and "blicket" means "blue circle". Then we give Charles a new word, "fep", and ask it to guess what it means. Charles guesses that "fep" might mean "green circle", because it's learned that these kinds of words usually refer to colored circles.

Then we give Charles some more complex examples. We teach it that "glerk kiki blicket" means "blue circle red circle" and "blicket kiki glerk" means "red circle blue circle". Then we give Charles a new phrase, "fep kiki glip", and ask it to guess what it means. Charles guesses that it might mean "purple circle orange circle", because it's learned that "kiki" is used to combine words.

So, by training Charles on lots of these kinds of tasks, it learns to understand and use words in a human-like way. This is a big step forward in AI research.
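
To make "lots of these kinds of tasks" a bit more concrete, here is a rough sketch of how such a stream of episodes might be generated. Everything in it is an assumption for illustration: the word and colour lists, the `sample_episode` helper, and the single "kiki swaps the two sides" rule are made up to match the example above, and this is not the code the researchers released. The idea it shows is that word meanings are reshuffled in every episode, so the model cannot memorize them and has to work them out from the study examples each time.

```python
import random

# Hypothetical vocabularies, for illustration only.
WORDS = ["glerk", "blicket", "fep", "glip", "dax", "wif"]
COLORS = ["RED", "BLUE", "GREEN", "PURPLE", "ORANGE", "YELLOW"]

def sample_episode(rng):
    """Build one episode: study examples plus a held-out query with its target."""
    # Fresh word-to-colour mapping for this episode only.
    words = rng.sample(WORDS, 4)
    colors = rng.sample(COLORS, 4)
    lexicon = {w: f"{c} CIRCLE" for w, c in zip(words, colors)}
    a, b, c, d = words

    # Study examples: every word on its own, plus one 'kiki' combination.
    study = [(w, [lexicon[w]]) for w in words]
    study.append((f"{a} kiki {b}", [lexicon[b], lexicon[a]]))

    # Query: a combination the model has not seen, with the expected answer
    # under the assumed rule that 'kiki' swaps the order of its two sides.
    query = (f"{c} kiki {d}", [lexicon[d], lexicon[c]])
    return study, query

rng = random.Random(0)
for _ in range(3):
    study, (question, answer) = sample_episode(rng)
    print(question, "->", " ".join(answer))
```

In a real training run, each episode's study examples and query would be fed to the network and its answer compared against the target; that training loop is left out here.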