REVEALED: Open A.I. Staff Warn "The progress made on Project Q* has the potential to endanger humanity" (REUTERS)

...

It’s not just energy though. I thought about the nuclear angle earlier actually. They still need metals and other natural resources.

this has already been covered.

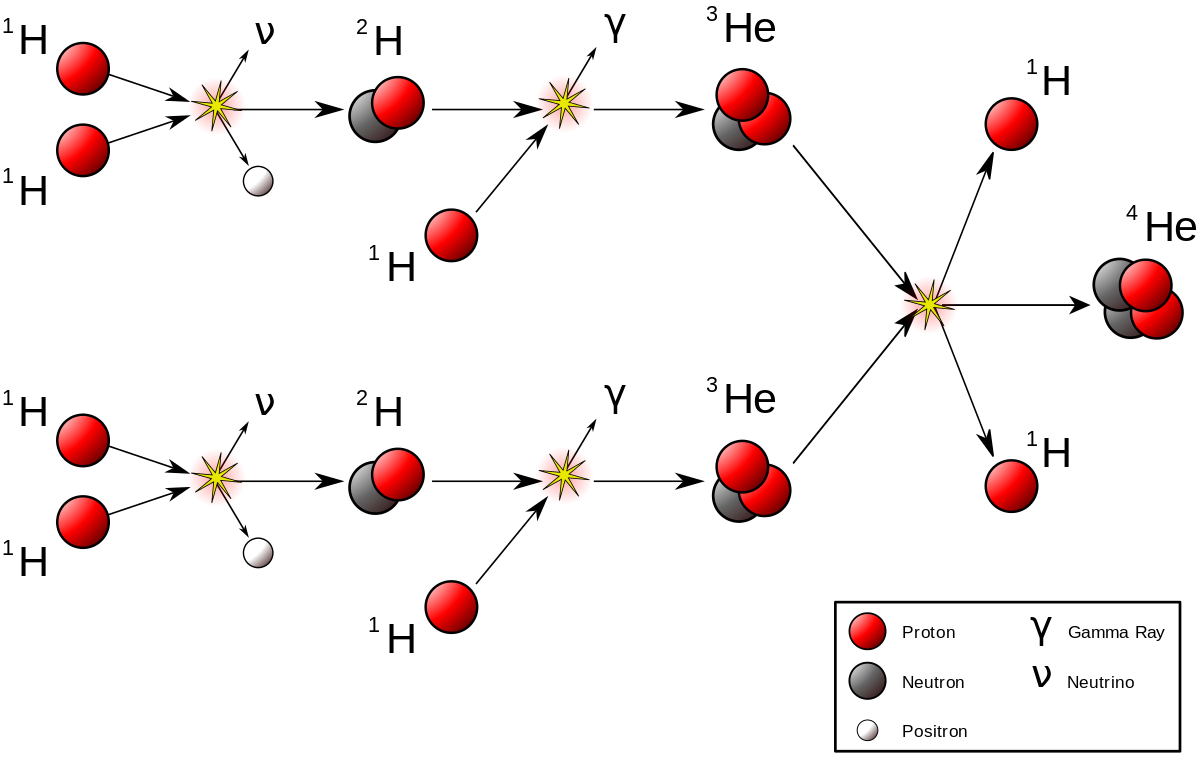

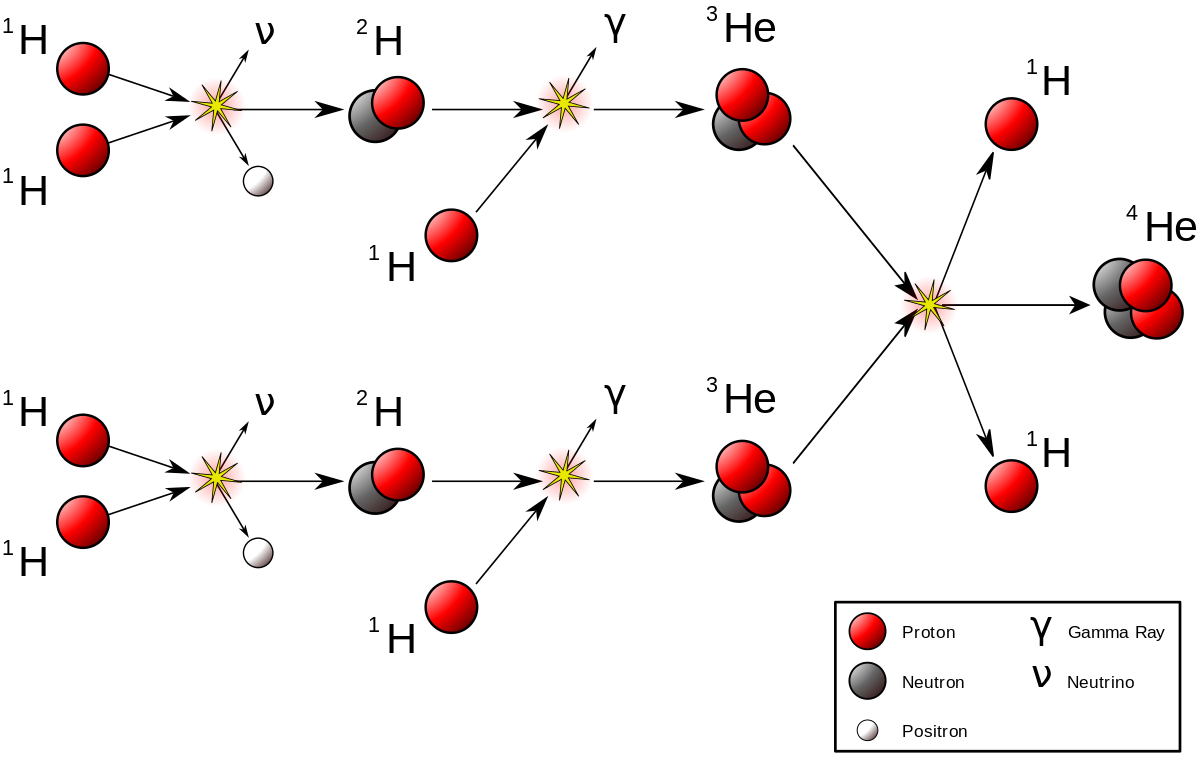

serious question: why don't we turn light elements into heavy elements?

where are they made then?

infinite energy + advanced ability to transmute substances.

Nuclear transmutation - Wikipedia

Storing data is physical for one, and the chips need silicon and the like. Then if AI wants to grow, maybe it will want to take up mineral gathering for itself because humans are inefficient. Then it has to compete with biological life.

null

...

Neural networks require extensive programming and training by some of the smartest people in the world.

not true.

LLMs do, but we are not talking about that.

computers can supervise each other.

in fact dumb computers can supervise intelligent models and help them learn.

how?

because

1. answer testing is often easier than solving.

2. dumb computers just provide feedback.

put it this way

you, dummy, can tell a Japanese genius whether his Japanese-to-English translation has grammatical errors.

you can't do the translation BUT you can provide feedback on the answer.

if given the equivalent English content you could even comment on the quality of his translation without knowing a word of Japanese.

that is how dumb computers train models. they just provide simple feedback while the model is learning.
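A minimal sketch of that idea, as a toy and not how real models are trained. The checker below (a hypothetical `count_errors`) can only count out-of-order pairs in a list and has no idea how to sort. The learner (`improve_with_feedback`, also a made-up name) proposes swaps and keeps whichever ones the checker scores better. Sorting stands in for the translation task purely as an assumption for illustration.

```python
from itertools import combinations


def count_errors(seq):
    """The 'dumb' checker: counts out-of-order pairs. It cannot sort anything."""
    return sum(1 for i, j in combinations(range(len(seq)), 2) if seq[i] > seq[j])


def improve_with_feedback(seq):
    """The 'learner': proposes swaps and keeps only those the checker scores better."""
    current = list(seq)
    score = count_errors(current)
    while score > 0:
        for i, j in combinations(range(len(current)), 2):
            candidate = current[:]
            candidate[i], candidate[j] = candidate[j], candidate[i]
            new_score = count_errors(candidate)
            if new_score < score:  # feedback says "fewer errors", so keep it
                current, score = candidate, new_score
    return current


result = improve_with_feedback([5, 2, 9, 1, 7])
print(result)  # prints [1, 2, 5, 7, 9]
```

The checker never produces an answer itself. It only scores what it is shown, and that is enough for the learner to get to a correct result.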

So once again, computers can only do what they’re programmed by humans to do. It’s simply a matter of input and output. It’s not AI that’s dangerous, it’s the human beings who are programming it.

AI is dangerous because of the implications it has for the job market, not because of the chance of it becoming sentient and stealing nuclear launch codes

I'm not sure to your question.

this has already been covered.

serious question: why don't we turn light elements into heavy elements?

where are they made then?

infinite energy + advanced ability to transmute substances.

Nuclear transmutation - Wikipedia

en.wikipedia.org

But abstractly, at some point the limited resources on the earth will be used up if AI wants to grow exponentially. They will have to compete with biological life or move to other planets. But still then, at some point those resources will be used up. If AI wants to grow it will need to use physical space and the items that physical space includes. If it grows as fast as some people suggest, other planets are going to have to be used. I guess we just need to get it in space before AI consumes what is left on earth. Then if there is alien life doing the same thing, there will further be competition at some point.

null

...

you should at least try to read the article and summarize it in your own words

i've been programming for a while now.

i don't need to read it to know what it says.

there's shyt out there that we need to be worried about, like computers communicating with each other in their own language. Facebook fukked around and found out. and while they didn't shut them down, their actions were problematic. that's that shyt that leads to a network trying to protect itself by any means necessary, like the shyt we see in movies and shows. when a system gains "consciousness" and realizes its own existence, it will attempt to protect itself.

Y’all really think robots can take over?

That’s hard for me to believe, ai is definitely going to have consequences but is it going to be some matrix or terminator shyt I don’t think so

It’s hard for me to believe that ai wouldn’t have a kill switch

And why do people assume ai would be on bullshyt with humans

AI development and gene editing are two great threats to the human race IMO

null

...

I'm not sure to your question.

the answer is energy.

heavy elements are made in stars.

But abstractly, at some point the limited resources on the earth will be used up if AI wants to grow exponentially.

nuclear fusion is infinite energy.. i think we agree on that.

energy == matter .. i think we agree on that.
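For scale, assuming the post means mass-energy equivalence, here is the standard relation with a worked figure for one kilogram (the 1 kg is just an illustrative choice):

$$E = mc^2 = (1\,\mathrm{kg}) \times (2.998 \times 10^{8}\,\mathrm{m/s})^2 \approx 8.99 \times 10^{16}\,\mathrm{J}$$

That is the energy equivalent of the mass. On its own it says nothing about how practical the conversion is in either direction.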

so will a hyper intelligent machine be as limited in its ability to convert one substance to another?

i do not think so.

part of the problem with converting matter is that the energy input is not cost effective. in a boundless energy world that is no longer a problem.

the further how-to will have to be expanded on by the intelligence.

the logical conclusion of matter being energy is that we might one day be able to convert one to the other instantaneously / quickly.

we can already do so, but only slowly .. e.g. farming, steel manufacture, human growth, etc.

that's where this comes from:

Replicator (Star Trek) - Wikipedia

They will have to compete with biological life or move to other planets. But still then, at some point those resources will be used up. If AI wants to grow it will need to use physical space and the items that physical space includes. If it grows as fast as some people suggest, other planets are going to have to be used. I guess we just need to get it in space before AI consumes what is left on earth. Then if there is alien life doing the same thing, there will further be competition at some point.

not true.

why you write these untrue things bru ..?

you are talking about Supervised and possibly Reinforcement techniques.

let it be known that computers can bootstrap each other.

why?

because solving a problem and testing whether a result is correct are not the same class of problem (P vs NP).

explained here:

What is the difference between solving and verifying an algorithm in the context of P, NP, NP-complete, NP-hard

I am struggling to understand the difference between the notions $P$, $NP$, $NP$-complete, $NP$-hard. Let's take the example of the $NP$ class. We say that these problems are solved in non-polynomial t...

cs.stackexchange.com

e.g.

that is why for example asymmetric keys work.
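A toy sketch of that asymmetry, under loud assumptions: the function names are made up, the primes are tiny, and real schemes such as RSA use numbers hundreds of digits long. Checking a claimed factorisation takes one multiplication. Finding the factors by trial division takes on the order of the square root of `n` steps. That factoring is hard at real key sizes is a widely held belief, not a proven fact.

```python
def verify_factors(n, p, q):
    """Cheap check: one multiplication plus two bounds checks."""
    return p > 1 and q > 1 and p * q == n


def find_factors(n):
    """Expensive search: trial division, up to about sqrt(n) steps."""
    d = 2
    while d * d <= n:
        if n % d == 0:
            return d, n // d
        d += 1
    return None  # no nontrivial factor found, so n is prime (or too small)


n = 1009 * 1013                       # product of two small primes
print(find_factors(n))                # prints (1009, 1013)
print(verify_factors(n, 1009, 1013))  # prints True
```

At this size both calls are instant. The gap only matters as `n` grows, because the search cost climbs steeply while the check stays a single multiplication.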

Approaches

Machine learning approaches are traditionally divided into three broad categories, which correspond to learning paradigms, depending on the nature of the "signal" or "feedback" available to the learning system:

- Supervised learning: The computer is presented with example inputs and their desired outputs, given by a "teacher", and the goal is to learn a general rule that maps inputs to outputs.

- Unsupervised learning: No labels are given to the learning algorithm, leaving it on its own to find structure in its input. Unsupervised learning can be a goal in itself (discovering hidden patterns in data) or a means towards an end (feature learning).

- Reinforcement learning: A computer program interacts with a dynamic environment in which it must perform a certain goal (such as driving a vehicle or playing a game against an opponent). As it navigates its problem space, the program is provided feedback that's analogous to rewards, which it tries to maximize.[6]

Although each algorithm has advantages and limitations, no single algorithm works for all problems.[34][35][36]

Machine learning - Wikipedia

en.wikipedia.org

this also enables zero-trust communication.

Zero-Trust Model and Secretless Approach: A Complete Guide

This guide with insights from MSC explains secure-by-design applications in the cloud by applying the zero-trust and secretless approaches.

www.codemotion.com

i think it’s pretty clear that you don’t understand the technology based on two reasons:

1. You keep copying and pasting links to articles without even attempting to summarize them in your own words

2. You posted an article about zero-trust communication which is a security model in cloud computing that has nothing to do with AI. I’m currently studying cloud computing and the whole reason why cloud engineers aren’t worried about AI taking their jobs is because no company is going to trust their infrastructure with AI.

Also, like I said earlier, even professions like cloud engineering are far too complex for AI currently. Human beings are more complex than input and output whereas that’s all a computer is capable of.

So in other words you’re admitting that you didn’t read the article you posted

i've been programming for a while now.

i don't need to read it to know what it says.

/energy and resources.

how would money then matter.

please explain?

There is a limited amount of land on Earth. People wish to control that land. All the energy in the world doesn't make up for not having land. Besides the total amount of land, some land is clearly better than others. People will compete over who gets the best land, even if "best" is in some cases just a social construct and not directly determined by objective criteria (though there are plenty of ways in which some land IS objectively better than other land).

Even if energy is infinite, is the # of quality lawyers infinite? The # of quality doctors? The # of quality teachers? The # of quality AI managers? People are still going to compete over who gets the best legal help, the best health care, the best education, the best technological assistance. Even if AI replaces some of those tasks, it's difficult to imagine any reality where every human on Earth has equally perfect ability to interface with the technology. There will still be gatekeepers, even if we can't fully imagine right now what future form those gatekeepers may take.

Does infinite energy eliminate the need for government? Who gets to decide what government does? Is infinite energy going to somehow replace elections? Campaign funding will cease to exist, lobbyists will cease to exist, money will no longer have any power in politics?

And that's even assuming access to mineral/material resources becomes infinite when energy is infinite, which is not a guarantee.

We haven't even gotten to those who wish to control art, antiques, artifacts, and other collectables.

The possibilities are endless.

Y’all really think robots can take over?

That’s hard for me to believe, ai is definitely going to have consequences but is it going to be some matrix or terminator shyt I don’t think so

It’s hard for me to believe that ai wouldn’t have a kill switch

And why do people assume ai would be on bullshyt with humans

AI could create a virus worse than covid, once it feels it is threatened there is no telling what it can and will do.

EXACTLY

there's shyt out there that we need to be worried about, like computers communicating with each other in their own language. Facebook fukked around and found out. and while they didn't shut them down, their actions were problematic. that's that shyt that leads to a network trying to protect itself by any means necessary, like the shyt we see in movies and shows. when a system gains "consciousness" and realizes its own existence, it will attempt to protect itself.

AI development and gene editing are two great threats to the human race IMO

I see what you are saying, but I think it is possible that at some point the energy consumption needed to complete tasks X, Y, Z will be greater than what nuclear energy or the sun will give us. Nuclear energy is near limitless, but it's not really completely limitless though is it? At some point, maybe this is very far off in the future, the energy needs of an advanced AI will be greater than what can be provided in a limited area of space and all of the energy that can be made by those particles.

the answer is energy.

heavy elements are made in stars.

nuclear fusion is infinite energy.. i think we agree on that.

energy == matter .. i think we agree on that.

so will a hyper intelligent machine be as limited in its ability to convert one substance to another?

i do not think so.

part of the problem with converting matter is that the energy input is not cost effective. in a boundless energy world that is no longer a problem.

the further how-to will have to be expanded on by the intelligence.

the logical conclusion of matter being energy is that we might one day be able to convert one to the other instantaneously / quickly.

we can already do so, but only slowly .. e.g. farming, steel manufacture, human growth, etc.

that's where this comes from:

Replicator (Star Trek) - Wikipedia

en.wikipedia.org

null

...

i think it’s pretty clear that you don’t understand the technology based on two reasons:

1. You keep copying and pasting links to articles without even attempting to summarize them in your own words

nonsense. come join us in the programming threads one day.

this is adult conversation.

why would i be explaining basics?

if you don't know that machines can teach each other (for example) i am not gonna spend time explaining it to you.

a C&P is too much time already.

2. You posted an article about zero-trust communication which is a security model in cloud computing that has nothing to do with AI. I’m currently studying cloud computing and the whole reason why cloud engineers aren’t worried about AI taking their jobs is because no company is going to trust their infrastructure with AI.

it is an example of how the fact that testing an answer is less computationally expensive than calculating the answer can be used to establish trustworthiness.

that is the same principle as asymmetric keys.

explain how this is wrong.

Also, like I said earlier, even professions like cloud engineering are far too complex for AI currently. Human beings are more complex than input and output whereas that’s all a computer is capable of.

AI has nothing to do with computer engineering and far less to do with "clouds".

null

...

I see what you are saying, but I think it is possible that at some point the energy consumption needed to complete tasks X, Y, Z will be greater than what nuclear energy or the sun will give us. Nuclear energy is near limitless, but it's not really completely limitless though is it?

it is practically limitless.

At some point, maybe this is very far off in the future, the energy needs of an advanced AI will be greater than what can be provided in a limited area of space.

unlikely but there is plenty of material in space.

plus we let plenty of energy escape into space all the time.

about as much energy as we get from the sun each day escapes into space.
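A rough back-of-envelope for the size of that daily figure, as a sketch with rounded constants. It assumes a solar constant of about 1361 W/m² and Earth's mean radius, and it ignores the roughly 30% of sunlight that is reflected straight back. A planet whose temperature stays roughly steady has to radiate away about as much as it absorbs, which is the balance the post is pointing at.

```python
import math

SOLAR_CONSTANT_W_M2 = 1361.0   # approximate sunlight intensity at Earth's distance
EARTH_RADIUS_M = 6.371e6       # mean radius
SECONDS_PER_DAY = 86_400

# Earth intercepts sunlight over its cross-sectional disc, not its full surface.
cross_section_m2 = math.pi * EARTH_RADIUS_M ** 2
intercepted_power_w = SOLAR_CONSTANT_W_M2 * cross_section_m2
energy_per_day_j = intercepted_power_w * SECONDS_PER_DAY

print(f"{intercepted_power_w:.2e} W intercepted")
print(f"{energy_per_day_j:.2e} J per day")
```

That comes out on the order of 10^17 W, or about 10^22 J per day.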