Yann LeCun says Meta AI ‘quickly becoming most used’ assistant, challenging OpenAI’s dominance

Meta challenges OpenAI with Llama 3.1, an open-source AI model rivaling GPT-4o, as Yann LeCun claims Meta AI is becoming the most widely used AI assistant.

Michael Nuñez (@MichaelFNunez)

July 23, 2024 2:26 PM

Credit: VentureBeat made with Midjourney

Meta Platforms has thrown down the gauntlet in the AI race today with the release of Llama 3.1, its most sophisticated artificial intelligence model to date.

This advanced model now powers Meta AI, the company’s AI assistant, which has been strategically deployed across its suite of platforms including WhatsApp, Messenger, Instagram, Facebook, and Ray-Ban Meta, with plans to extend to Meta Quest next month. The widespread implementation of Llama 3.1 potentially places advanced AI capabilities at the fingertips of billions of users globally.

The move directly challenges industry leaders OpenAI and Anthropic, and OpenAI’s market-leading position in particular. It also underscores Meta’s commitment to open-source development and marks a major escalation in the AI competition.

Llama 3.1 now powers Meta AI, which is quickly becoming the most widely used AI assistant.

Meta AI can be accessed through WhatsApp, Messenger, Instagram, Facebook, Ray-Ban Meta, and next month in Meta Quest.

It answers questions, summarizes long documents, helps you code or do…

— Yann LeCun (@ylecun) July 23, 2024

Following the release this morning, Yann LeCun, Meta’s chief AI scientist, made a bold claim on X.com that caught many in the AI community off guard. “Llama 3.1 now powers Meta AI, which is quickly becoming the most widely used AI assistant,” LeCun said, directly challenging the supremacy of OpenAI’s ChatGPT, which has so far dominated the AI assistant market.

If substantiated, LeCun’s assertion could herald a major shift in the AI landscape, potentially reshaping the future of AI accessibility and development.

Open-source vs. Closed-source: Meta’s disruptive strategy in the AI market

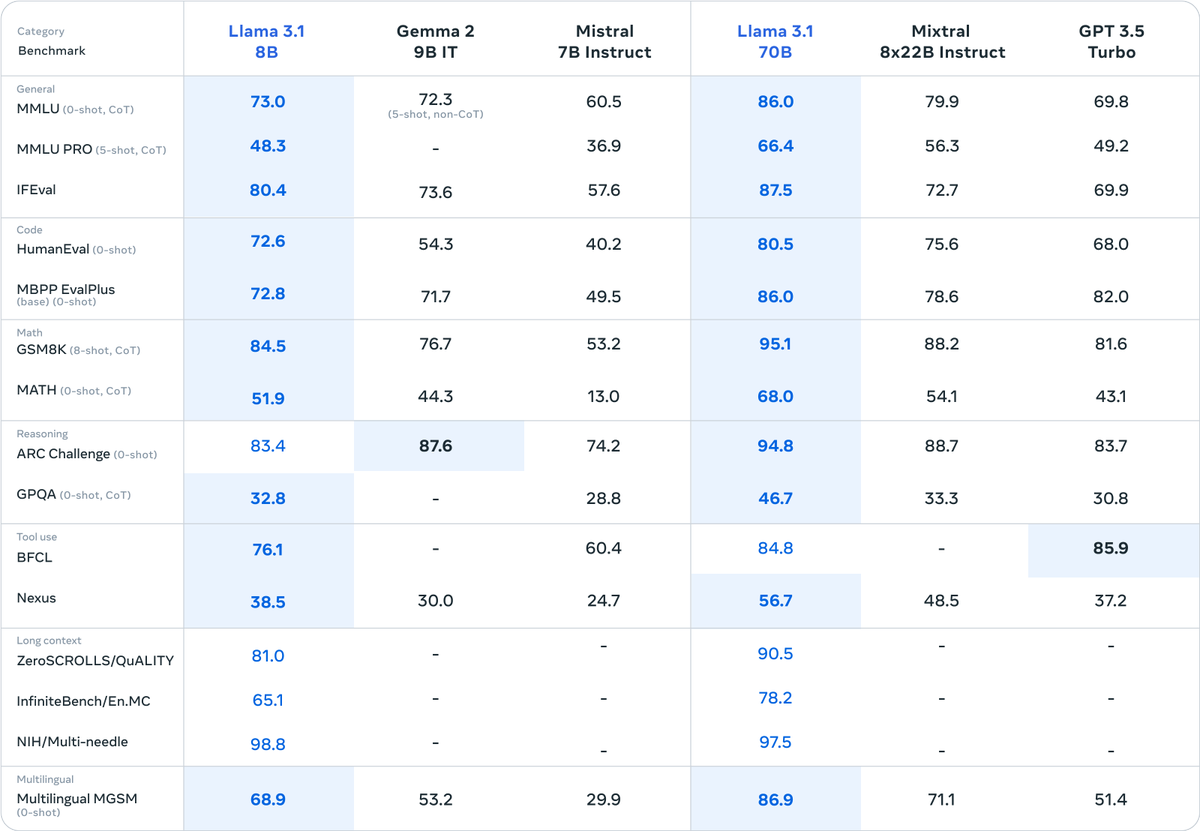

The centerpiece of Meta’s release is the Llama 3.1 405B model, featuring 405 billion parameters. The company boldly contends that this model’s performance rivals that of leading closed-source models, including OpenAI’s GPT-4o, across various tasks. Meta’s decision to make such a powerful model openly available stands in stark contrast to the proprietary approaches of its competitors, particularly OpenAI.

This release comes at a critical juncture for Meta, following a $200 billion market value loss earlier this year. CEO Mark Zuckerberg has pivoted the company’s focus towards AI, moving away from its previous emphasis on the metaverse. “Open source will ensure that more people around the world have access to the benefits and opportunities of AI,” Zuckerberg said, in what appears to be a direct challenge to OpenAI’s business model.

Wall Street analysts have expressed skepticism about Meta’s open-source strategy, questioning its potential for monetization, especially when compared to OpenAI’s reported $3.4 billion annualized revenue. However, the tech community has largely welcomed the move, seeing it as a catalyst for innovation and wider AI access.

Our Llama 3.1 405B is now openly available! After a year of dedicated effort, from project planning to launch reviews, we are thrilled to open-source the Llama 3 herd of models and share our findings through the paper:

Llama 3.1 405B, continuously trained with a 128K context… pic.twitter.com/RwhedAluSM

— Aston Zhang (@astonzhangAZ) July 23, 2024

AI arms race heats up: Implications for innovation, safety, and market leadership

The new model boasts improvements including an extended context length of 128,000 tokens, enhanced multilingual capabilities, and improved reasoning. Meta has also introduced the “Llama Stack,” a set of standardized interfaces aimed at simplifying development with Llama models, potentially making it easier for developers to switch from OpenAI’s tools.

While the release has generated excitement in the AI community, it also raises concerns about potential misuse. Meta claims to have implemented robust safety measures, but the long-term implications of widely available advanced AI remain a topic of debate among experts.

Why are FTC & DOJ issuing statements w/ EU competition authorities discussing "risks" in the blazingly competitive, U.S.-built AI ecosystem? And on the same day that Meta turbocharges disruptive innovation with the first-ever frontier-level open source AI model? A ? pic.twitter.com/vrItR28YIo

— Neil Chilson (@neil_chilson) July 23, 2024

As the AI race intensifies, Meta’s latest move positions the company as a formidable competitor in a field previously dominated by OpenAI and Anthropic. The success of Llama 3.1 could potentially reshape the AI industry, influencing everything from market dynamics to development methodologies.

The tech industry is closely watching this development, with many speculating on how OpenAI and other AI leaders will respond to Meta’s direct challenge. As the competition heats up, the implications for AI accessibility, innovation, and market leadership remain to be seen, with OpenAI’s dominant position now under serious threat.