Large Language Models News & Discussions

- Thread starter Macallik86

Anthropic claims its latest model is best-in-class | TechCrunch

Anthropic's newest model, Claude 3.5 Sonnet, is its best yet as measured across a range of AI benchmarks.

Kyle Wiggers

7:00 AM PDT • June 20, 2024

OpenAI rival Anthropic is releasing a powerful new generative AI model called Claude 3.5 Sonnet. But it’s more an incremental step than a monumental leap forward.

Claude 3.5 Sonnet can analyze both text and images as well as generate text, and it’s Anthropic’s best-performing model yet — at least on paper. Across several AI benchmarks for reading, coding, math and vision, Claude 3.5 Sonnet outperforms the model it’s replacing, Claude 3 Sonnet, and beats Anthropic’s previous flagship model Claude 3 Opus.

Benchmarks aren’t necessarily the most useful measure of AI progress, in part because many of them test for esoteric edge cases that aren’t applicable to the average person, like answering health exam questions. But for what it’s worth, Claude 3.5 Sonnet just barely bests rival leading models, including OpenAI’s recently launched GPT-4o, on some of the benchmarks Anthropic tested it against.

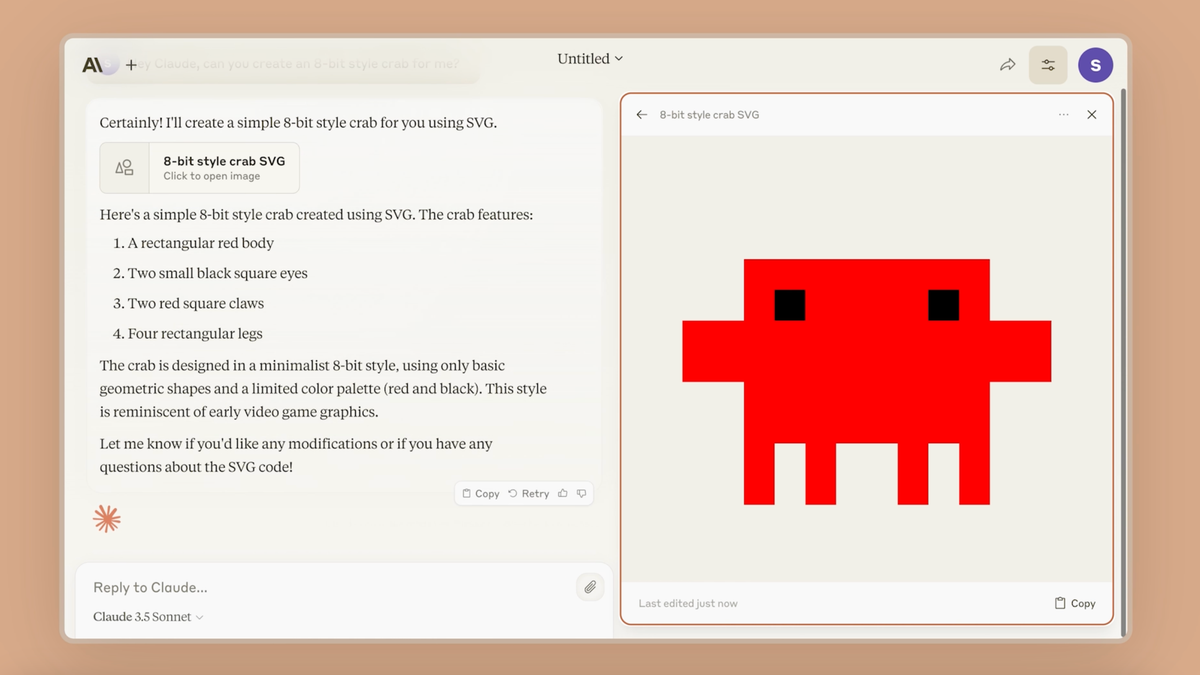

Alongside the new model, Anthropic is releasing what it’s calling Artifacts, a workspace where users can edit and add to content — e.g. code and documents — generated by Anthropic’s models. Currently in preview, Artifacts will gain new features, like ways to collaborate with larger teams and store knowledge bases, in the near future, Anthropic says.

Focus on efficiency

Claude 3.5 Sonnet is a bit more performant than Claude 3 Opus, and Anthropic says that the model better understands nuanced and complex instructions, in addition to concepts like humor. (AI is notoriously unfunny, though.) But perhaps more importantly for devs building apps with Claude that require prompt responses (e.g. customer service chatbots), Claude 3.5 Sonnet is faster. It’s around twice the speed of Claude 3 Opus, Anthropic claims.

Vision, meaning the analysis of photos, is one area where Claude 3.5 Sonnet greatly improves over 3 Opus, according to Anthropic. Claude 3.5 Sonnet can interpret charts and graphs more accurately and transcribe text from “imperfect” images, such as pics with distortions and visual artifacts.

Michael Gerstenhaber, product lead at Anthropic, says that the improvements are the result of architectural tweaks and new training data, including AI-generated data. Which data specifically? Gerstenhaber wouldn’t disclose, but he implied that Claude 3.5 Sonnet draws much of its strength from these training sets.

“What matters to [businesses] is whether or not AI is helping them meet their business needs, not whether or not AI is competitive on a benchmark,” Gerstenhaber told TechCrunch. “And from that perspective, I believe Claude 3.5 Sonnet is going to be a step function ahead of anything else that we have available — and also ahead of anything else in the industry.”

The secrecy around training data could be for competitive reasons. But it could also be to shield Anthropic from legal challenges — in particular challenges pertaining to fair use. The courts have yet to decide whether vendors like Anthropic and its competitors, like OpenAI, Google, Amazon and so on, have a right to train on public data, including copyrighted data, without compensating or crediting the creators of that data.

So, all we know is that Claude 3.5 Sonnet was trained on lots of text and images, like Anthropic’s previous models, plus feedback from human testers to try to “align” the model with users’ intentions, hopefully preventing it from spouting toxic or otherwise problematic text.

What else do we know? Well, Claude 3.5 Sonnet’s context window — the amount of text that the model can analyze before generating new text — is 200,000 tokens, the same as Claude 3 Sonnet. Tokens are subdivided bits of raw data, like the syllables “fan,” “tas” and “tic” in the word “fantastic”; 200,000 tokens is equivalent to about 150,000 words.
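As a rough illustration of the token arithmetic above: 200,000 tokens for about 150,000 words implies roughly 0.75 words per token. The sketch below applies that ratio to guess whether a piece of text fits in the context window. The function names are hypothetical, and whitespace word counting is only an approximation; a real tokenizer would give different numbers.

```python
# Figures taken from the article: 200,000 tokens is about 150,000 words.
CONTEXT_WINDOW_TOKENS = 200_000
WORDS_PER_TOKEN = 150_000 / 200_000  # 0.75, a rule of thumb, not a tokenizer


def estimate_tokens(text: str) -> int:
    """Roughly estimate a token count from a whitespace word count."""
    words = len(text.split())
    return round(words / WORDS_PER_TOKEN)


def fits_in_context(text: str, reserved_for_output: int = 0) -> bool:
    """Check whether the estimated prompt size fits in the context window.

    reserved_for_output is a hypothetical budget set aside for the reply.
    """
    return estimate_tokens(text) + reserved_for_output <= CONTEXT_WINDOW_TOKENS


if __name__ == "__main__":
    sample = "word " * 1_500
    print(estimate_tokens(sample))   # 1,500 words / 0.75 = 2000
    print(fits_in_context(sample))   # True
```

Under this estimate a 150,000-word document sits right at the limit, so anything longer would need to be trimmed or split.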

And we know that Claude 3.5 Sonnet is available today. Free users of Anthropic’s web client and the Claude iOS app can access it at no charge; subscribers to Anthropic’s paid plans Claude Pro and Claude Team get 5x higher rate limits. Claude 3.5 Sonnet is also live on Anthropic’s API and managed platforms like Amazon Bedrock and Google Cloud’s Vertex AI.

“Claude 3.5 Sonnet is really a step change in intelligence without sacrificing speed, and it sets us up for future releases along the entire Claude model family,” Gerstenhaber said.

Claude 3.5 Sonnet also drives Artifacts, which pops up a dedicated window in the Claude web client when a user asks the model to generate content like code snippets, text documents or website designs. Gerstenhaber explains: “Artifacts are the model output that puts generated content to the side and allows you, as a user, to iterate on that content. Let’s say you want to generate code — the artifact will be put in the UI, and then you can talk with Claude and iterate on the document to improve it so you can run the code.”

The bigger picture

So what’s the significance of Claude 3.5 Sonnet in the broader context of Anthropic, and of the AI ecosystem for that matter?

Claude 3.5 Sonnet shows that incremental progress is the extent of what we can expect right now on the model front, barring a major research breakthrough. The past few months have seen flagship releases from Google (Gemini 1.5 Pro) and OpenAI (GPT-4o) that move the needle marginally in terms of benchmark and qualitative performance. But there hasn’t been a leap matching the one from GPT-3 to GPT-4 in quite some time, owing to the rigidity of today’s model architectures and the immense compute they require to train.

As generative AI vendors turn their attention to data curation and licensing in lieu of promising new scalable architectures, there are signs investors are becoming wary of the longer-than-anticipated path to ROI for generative AI. Anthropic is somewhat inoculated from this pressure, being in the enviable position of Amazon’s (and to a lesser extent Google’s) insurance against OpenAI. But the company’s revenue, forecasted to reach just under $1 billion by year-end 2024, is a fraction of OpenAI’s — and I’m sure Anthropic’s backers don’t let it forget that fact.

Despite a growing customer base that includes household brands such as Bridgewater, Brave, Slack and DuckDuckGo, Anthropic still lacks a certain enterprise cachet. Tellingly, it was OpenAI — not Anthropic — with which PwC recently partnered to resell generative AI offerings to the enterprise.

So Anthropic is taking a strategic, and well-trodden, approach to making inroads, investing development time into products like Claude 3.5 Sonnet to deliver slightly better performance at commodity prices. Claude 3.5 Sonnet is priced the same as Claude 3 Sonnet: $3 per million tokens fed into the model and $15 per million tokens generated by the model.
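To make the pricing concrete, here is a minimal sketch of the per-request cost at the quoted rates of $3 per million input tokens and $15 per million output tokens. The function name and the example token counts are made up for illustration; actual billing may differ in details this sketch ignores.

```python
from decimal import Decimal

# Rates quoted in the article, in US dollars per million tokens.
INPUT_USD_PER_MILLION = Decimal("3")
OUTPUT_USD_PER_MILLION = Decimal("15")
MILLION = Decimal(1_000_000)


def request_cost_usd(input_tokens: int, output_tokens: int) -> Decimal:
    """Estimate the cost of one request from its input and output token counts."""
    input_cost = Decimal(input_tokens) / MILLION * INPUT_USD_PER_MILLION
    output_cost = Decimal(output_tokens) / MILLION * OUTPUT_USD_PER_MILLION
    return input_cost + output_cost


if __name__ == "__main__":
    # Hypothetical request: 10,000 tokens in, 1,000 tokens out.
    # 10,000 / 1e6 * 3 = 0.03 and 1,000 / 1e6 * 15 = 0.015, so 0.045 total.
    print(request_cost_usd(10_000, 1_000))
```

Output tokens cost five times as much as input tokens at these rates, so long generated replies dominate the bill faster than long prompts do.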

Gerstenhaber spoke to this in our conversation. “When you’re building an application, the end user shouldn’t have to know which model is being used or how an engineer optimized for their experience,” he said, “but the engineer could have the tools available to optimize for that experience along the vectors that need to be optimized, and cost is certainly one of them.”

Claude 3.5 Sonnet doesn’t solve the hallucinations problem. It almost certainly makes mistakes. But it might just be attractive enough to get developers and enterprises to switch to Anthropic’s platform. And at the end of the day, that’s what matters to Anthropic.

Toward that same end, Anthropic has doubled down on tooling like its experimental steering AI, which lets developers “steer” its models’ internal features; integrations to let its models take actions within apps; and tools built on top of its models such as the aforementioned Artifacts experience. It’s also hired an Instagram co-founder as head of product. And it’s expanded the availability of its products, most recently bringing Claude to Europe and establishing offices in London and Dublin.

Anthropic, all told, seems to have come around to the idea that building an ecosystem around models — not simply models in isolation — is the key to retaining customers as the capabilities gap between models narrows.

Still, Gerstenhaber insisted that bigger and better models — like Claude 3.5 Opus — are on the near horizon, with features such as web search and the ability to remember preferences in tow.

“I haven’t seen deep learning hit a wall yet, and I’ll leave it to researchers to speculate about the wall, but I think it’s a little bit early to be coming to conclusions on that, especially if you look at the pace of innovation,” he said. “There’s very rapid development and very rapid innovation, and I have no reason to believe that it’s going to slow down.”

Anthropic’s Claude 3.5 Sonnet wows AI power users: ‘this is wild’

AI influencers and power users have taken to the web to share their largely positive impressions about Anthropic's new release.

Anthropic's Newest Claude AI Model Is Out. Here's What You Get

Claude 3.5 Sonnet is faster and funnier, Anthropic says, and it'll fit in even better in your workplace.

Amazon Bedrock adds Claude 3.5 Sonnet Anthropic AI model

Anthropic’s Claude 3.5 Sonnet, the newest addition to the state-of-the-art Claude family of AI models, is more intelligent and one-fifth of the price of Claude 3 Opus.

Anthropic launches Claude 3.5 Sonnet, Artifacts as a way to collaborate

Anthropic launched Claude 3.5 Sonnet, its latest large language model (LLM), with availability on Anthropic API, Amazon Bedrock and Google Cloud Vertex AI. According to Anthropic, Claude 3.5 Sonnet outperforms OpenAI's ChatGPT-4o on multiple metrics with improved price/performance ratios...

Claude 3.5 Sonnet | Hacker News

Sam Dwyer on LinkedIn: Introducing Claude 3.5 Sonnet

🚀 Proud and Honored to introduce Claude 3.5 Sonnet 🎉 With 2x the speed of Claude 3 Opus, exceptional reasoning and coding skills, and state-of-the-art…

www.linkedin.com

Anthropic Says New Claude 3.5 AI Model Outperforms GPT-4 Omni

It can even make your own 8-bit video game for you.

gizmodo.com

Anthropic releases faster and funnier Claude AI model

Anthropic has launched its latest AI model Claude 3.5 Sonnet, an update to its Claude 3 model released in March, which it claims outperforms competitor...

www.proactiveinvestors.com

Anthropic’s newest Claude chatbot beats OpenAI’s GPT-4o in some benchmarks

Anthropic rolled out its newest AI language model on Thursday, Claude 3.5 Sonnet. The updated chatbot outperforms the company’s previous top-tier model, Claude 3 Opus, but works at twice the speed.

Anthropic launches Claude 3.5 Sonnet and debuts Artifacts for collaboration

The Artifacts function can display a Claude response, such as a piece of code, in a separate window for group collaboration.

www.zdnet.com

Anthropic’s Claude 3.5 Sonnet model now available in Amazon Bedrock: Even more intelligence than Claude 3 Opus at one-fifth the cost | Amazon Web Services

This powerful and cost-effective model outperforms on intelligence benchmarks, with remarkable capabilities in vision, writing, customer support, analytics, and coding to revolutionize your workflows.

Brayden McLean on LinkedIn: Introducing Claude 3.5 Sonnet

I got to say I was already pretty happy with the last generation of models, but Anthropic's next-gen mid-tier model can outperform our previous more expensive…

www.linkedin.com

We’re Still Waiting for the Next Big Leap in AI

Anthropic’s latest Claude AI model pulls ahead of rivals from OpenAI and Google. But advances in machine intelligence have lately been more incremental than revolutionary.

Anthropic Unleashes Claude 3.5 Sonnet, Outshines GPT-4o

Anthropic launches Claude 3.5 Sonnet, a breakthrough AI model offering exceptional intelligence, efficiency, and enhanced visual reasoning capabilities.

GPT-4o and Gemini 1.5 Pro just got beat in the AI race | Digital Trends

Anthropic's new Claude 3.5 Sonnet model is a little better than ChatGPT-4o and a huge improvement over Claude Opus 3.0.

1/1

The new Artifacts UX combined with Claude 3.5 Sonnet on Claude makes this one of the best AI chat interfaces out there! Very impressive speed, quality, and usability.

In less than a minute I had this web page sample previewable directly in the chat.

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

1/1

Anthropic is training on a bunch of file types. It works great, and the models pick up skills like obeying XML tags.

No open pre-training datasets do this.

There are tons of PDFs, SVGs, and LaTeX files on CommonCrawl, it’s not a hard problem to solve.

1/1

New claude artifacts are awesome!

I asked for a pong game and it coded one for me

No code interpreter, unlike chatGPT, instead you can make documents, notes & stuff, one option being html/jss

1/1

So jazzed about Claude 3.5 Sonnet and products like Artifacts. It's still early days in the productization of LLMs and @AnthropicAI has more great ships on the way to bring the magic of LLMs to our daily workflows in practical, useful ways.

Anthropic has a fast new AI model — and a clever new way to interact with chatbots

GPT-4o. Gemini 1.5. And now Claude 3.5 Sonnet.

Claude 3.5 Sonnet is apparently Anthropic’s smartest, fastest, and most personable model yet.

By David Pierce, editor-at-large and Vergecast co-host with over a decade of experience covering consumer tech. Previously, at Protocol, The Wall Street Journal, and Wired.

Jun 20, 2024, 10:00 AM EDT

GPT-4o, Gemini 1.5, and now Claude 3.5 Sonnet. Image: Anthropic

The AI arms race continues apace: Anthropic is launching its newest model, called Claude 3.5 Sonnet, which it says can equal or better OpenAI’s GPT-4o or Google’s Gemini across a wide variety of tasks. The new model is already available to Claude users on the web and on iOS, and Anthropic is making it available to developers as well.

Claude 3.5 Sonnet will ultimately be the middle model in the lineup — Anthropic uses the name Haiku for its smallest model, Sonnet for the mainstream middle option, and Opus for its highest-end model. (The names are weird, but every AI company seems to be naming things in their own special weird ways, so we’ll let it slide.) But the company says 3.5 Sonnet outperforms 3 Opus, and its benchmarks show it does so by a pretty wide margin. The new model is also apparently twice as fast as the previous one, which might be an even bigger deal.

AI model benchmarks should always be taken with a grain of salt; there are a lot of them, it’s easy to pick and choose the ones that make you look good, and the models and products are changing so fast that nobody seems to have a lead for very long. That said, Claude 3.5 Sonnet does look impressive: it outscored GPT-4o, Gemini 1.5 Pro, and Meta’s Llama 3 400B in seven of nine overall benchmarks and four out of five vision benchmarks. Again, don’t read too much into that, but it does seem that Anthropic has built a legitimate competitor in this space.

Claude 3.5’s benchmark scores do look impressive — but these things change so fast. Image: Anthropic

What does all that actually amount to? Anthropic says Claude 3.5 Sonnet will be far better at writing and translating code, handling multistep workflows, interpreting charts and graphs, and transcribing text from images. This new and improved Claude is also apparently better at understanding humor and can write in a much more human way.

Along with the new model, Anthropic is also introducing a new feature called Artifacts. With Artifacts, you’ll be able to see and interact with the results of your Claude requests: if you ask the model to design something for you, it can now show you what it looks like and let you edit it right in the app. If Claude writes you an email, you can edit the email in the Claude app instead of having to copy it to a text editor. It’s a small feature, but a clever one — these AI tools need to become more than simple chatbots, and features like Artifacts just give the app more to do.

The new Artifacts feature is a hint at what a post-chatbot Claude might look like. Image: Anthropic

Artifacts actually seems to be a signal of the long-term vision for Claude. Anthropic has long said it is mostly focused on businesses (even as it hires consumer tech folks like Instagram co-founder Mike Krieger) and said in its press release announcing Claude 3.5 Sonnet that it plans to turn Claude into a tool for companies to “securely centralize their knowledge, documents, and ongoing work in one shared space.” That sounds more like Notion or Slack than ChatGPT, with Anthropic’s models at the center of the whole system.

For now, though, the model is the big news. And the pace of improvement here is wild to watch: Anthropic launched Claude 3 Opus in March, proudly saying it was as good as GPT-4 and Gemini 1.0, before OpenAI and Google released better versions of their models. Now, Anthropic has made its next move, and it surely won’t be long before its competition does so, too. Claude doesn’t get talked about as much as Gemini or ChatGPT, but it’s very much in the race.

Snapchat AI turns prompts into new lens

We could soon see more immersive Snapchat lenses.

Snapchat’s upcoming on-device AI model could transform your background — and your clothing — in real time.

By Emma Roth, a news writer who covers the streaming wars, consumer tech, crypto, social media, and much more. Previously, she was a writer and editor at MUO.

Jun 19, 2024, 5:26 PM EDT

Image: Snapchat

Snapchat offered an early look at its upcoming on-device AI model capable of transforming a user’s surroundings with augmented reality (AR). The new model will eventually let creators turn a text prompt into a custom lens — potentially opening the door for some wild looks to try on and send to friends.

You can see how this might look in the GIF below, which shows a person’s clothing and background transforming in real-time based on the prompt “50s sci-fi film.” Users will start seeing lenses using this new model in the coming months, while creators can start making lenses with the model by the end of this year, according to TechCrunch.

GIF: Snapchat

Additionally, Snapchat is rolling out a suite of new AI tools that could make it easier for creators to make custom augmented reality (AR) effects. Some of the tools now available with the latest Lens Studio update include new face effects that let creators write a prompt or upload an image to create a custom lens that completely transforms a user’s face.

The suite also includes a feature, called Immersive ML, that applies a “realistic transformation over the user’s face, body, and surroundings in real time.” Other AI tools coming to Lens Studio allow lens creators to generate 3D assets based on a text or image prompt, create face masks and textures, as well as make 3D character heads that mimic a user’s expression.

This is Snapchat’s “Immersive ML” effect using the prompt “Matisse Style Painting.” Image: Snapchat

Over the past year, Snapchat has rolled out several new AI features, including a way for subscribers to send AI-generated snaps to friends. Snapchat also launched a ChatGPT-powered AI chatbot to all users last year.

OpenAI’s former chief scientist is starting a new AI company

The startup’s goal is to build a safe and powerful AI system.

Ilya Sutskever is launching Safe Superintelligence Inc., an AI startup that will prioritize safety over ‘commercial pressures.’

By Emma Roth, a news writer who covers the streaming wars, consumer tech, crypto, social media, and much more. Previously, she was a writer and editor at MUO.

Jun 19, 2024, 2:03 PM EDT

Illustration by Alex Castro / The Verge

Ilya Sutskever, OpenAI’s co-founder and former chief scientist, is starting a new AI company focused on safety. In a post on Wednesday, Sutskever revealed Safe Superintelligence Inc. (SSI), a startup with “one goal and one product:” creating a safe and powerful AI system.

The announcement describes SSI as a startup that “approaches safety and capabilities in tandem,” letting the company quickly advance its AI system while still prioritizing safety. It also calls out the external pressure AI teams at companies like OpenAI, Google, and Microsoft often face, saying the company’s “singular focus” allows it to avoid “distraction by management overhead or product cycles.”

“Our business model means safety, security, and progress are all insulated from short-term commercial pressures,” the announcement reads. “This way, we can scale in peace.” In addition to Sutskever, SSI is co-founded by Daniel Gross, a former AI lead at Apple, and Daniel Levy, who previously worked as a member of technical staff at OpenAI.

Last year, Sutskever led the push to oust OpenAI CEO Sam Altman. Sutskever left OpenAI in May and hinted at the start of a new project. Shortly after Sutskever’s departure, AI researcher Jan Leike announced his resignation from OpenAI, citing safety processes that have “taken a backseat to shiny products.” Gretchen Krueger, a policy researcher at OpenAI, also mentioned safety concerns when announcing her departure.

As OpenAI pushes forward with partnerships with Apple and Microsoft, we likely won’t see SSI doing that anytime soon. During an interview with Bloomberg, Sutskever says SSI’s first product will be safe superintelligence, and the company “will not do anything else” until then.

GPT-5 will have ‘Ph.D.-level’ intelligence

In a new interview, OpenAI CTO Mira Murati describes how intelligent the next generation of AI will be, and when we can expect it.

By Luke Larsen June 20, 2024 4:46PM

The next major evolution of ChatGPT has been rumored for a long time. GPT-5, or whatever it will be called, has been talked about vaguely many times over the past year, but yesterday, OpenAI Chief Technology Officer Mira Murati gave some additional clarity on its capabilities.

In an interview with Dartmouth Engineering that was posted on X (formerly Twitter), Murati describes the jump from GPT-4 to GPT-5 as someone growing from a high-schooler up to university.

“If you look at the trajectory of improvement, systems like GPT-3 were maybe toddler-level intelligence,” Murati says. “And then systems like GPT-4 are more like smart high-schooler intelligence. And then, in the next couple of years, we’re looking at Ph.D. intelligence for specific tasks. Things are changing and improving pretty rapidly.”

Mira Murati: GPT-3 was toddler-level, GPT-4 was a smart high schooler and the next gen, to be released in a year and a half, will be PhD-level pic.twitter.com/jyNSgO9Kev

— Tsarathustra (@tsarnick) June 20, 2024

Interestingly, the interviewer asked her to specify the timetable, asking if it’d come in the next year. Murati nods her head, and then clarifies that it’d be in a year and a half. If that’s true, GPT-5 may not come out until late 2025 or early 2026. Some will be disappointed to hear that the next big step is that far away.

After all, the first rumors about the launch time of GPT-5 were that it would be in late 2023. And then, when that didn’t turn out, reports indicated that it would launch later this summer. That turned out to be GPT-4o, which was an impressive release, but it wasn’t the kind of step function in intelligence Murati is referencing here.

In terms of the claim about intelligence, it confirms what has been said about GPT-5 in the past. Microsoft CTO Kevin Scott claims that the next-gen AI systems will be “capable of passing Ph.D. exams” thanks to better memory and reasoning operations.

Murati admits that the “Ph.D.-level” intelligence only applies to some tasks. “These systems are already human-level in specific tasks, and, of course, in a lot of tasks, they’re not,” she says.

PoorAndDangerous

Superstar

played with Claude 3.5 sonnet today, I really like it for coding, the artifact feature they released is great. It has a really low amount of queries you can do and then you're locked out for like 4 hours, and this is with premium. Which is an issue when you are iterating on code over and over. It does seem more intelligent than GPT, but doesn't have things like saved memory, real time access to internet.

1/9

Claude 3.5 *Sonnet* solves 64% of problems on Anthropic's internal agentic coding/reasoning benchmark. Claude 3 Opus only solved 38%. That's a massive improvement. Better reasoning, faster, and WAY cheaper.

2/9

this is ridiculous

3/9

rate limits on the chatbot are insane. Need more messages.

4/9

What are they modifying to get better with smaller models? At this rate Apple’s local models might work

5/9

Excited to see how it feels in people’s hands. That is a gigantic leap. Interesting to me that a relatively “small” push in intelligence can open so many new use cases. I’m hype

6/9

holy shyt

7/9

Let’s goooooo

8/9

Msg limit is very very less on premium plans as well

9/9

Is it possible that it had been trained in them?

I just converted a bookmarklet that worked on twitter to do the same thing but for bluesky/bsky.app posts and claude sonnet 3.5 gave me working code, zero-shot!!!

i only gave it one html post example so it doesn't know how to handle posts with linked articles but i'll fix that later.

clipboard output:

Code:

https://bsky.app/profile/roryblank.bsky.social/post/3kvf3qr42zr2n

[SPOILER="thread continued"]

[/SPOILER]

[SPOILER="full text"]

1/1

the delicate balance of power (2024)

[COLOR=rgb(184, 49, 47)][B][SIZE=5]To post posts in this format, more info here: [URL]https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196[/URL][/SIZE][/B][/COLOR]

[/SPOILER]

[SPOILER="larger images"]

[img]https://cdn.bsky.app/img/feed_fullsize/plain/did:plc:aesxmgqau5bmwvfmtd3wsex2/bafkreiase4b4qs7oumyd6hsfy4qwxnbnpf4qcep73br34fcvdgig7bi5lm@jpeg[/img]

[/SPOILER]

[Submitted on 20 Jun 2024]

Q* - Improving Multi-step Reasoning for LLMs with Deliberative Planning

Chaojie Wang, Yanchen Deng, Zhiyi Lv, Shuicheng Yan, An Bo

Large Language Models (LLMs) have demonstrated impressive capability in many natural language tasks. However, the auto-regressive generation process makes LLMs prone to produce errors, hallucinations and inconsistent statements when performing multi-step reasoning. In this paper, we aim to alleviate the pathology by introducing Q*, a general, versatile and agile framework for guiding LLMs' decoding process with deliberative planning. By learning a plug-and-play Q-value model as a heuristic function, our Q* can effectively guide LLMs to select the most promising next step without fine-tuning LLMs for each task, which avoids the significant computational overhead and potential risk of performance degeneration on other tasks. Extensive experiments on GSM8K, MATH and MBPP confirm the superiority of our method.

| Subjects: | Artificial Intelligence (cs.AI) |
| Cite as: | arXiv:2406.14283 [cs.AI] |
| | (or arXiv:2406.14283v1 [cs.AI] for this version) |
| | [2406.14283] Q*: Improving Multi-step Reasoning for LLMs with Deliberative Planning |

Submission history

From: Chaojie Wang

[v1] Thu, 20 Jun 2024 13:08:09 UTC (512 KB)
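For anyone wondering what "a Q-value model as heuristic function" guiding step selection could look like, here is a minimal sketch in Python. It is not the paper's implementation: it is a plain greedy best-first search over partial step sequences, the function names (`best_first_decode`, `expand`, `q_value`, `is_terminal`) are made up for illustration, and the toy arithmetic problem stands in for an LLM proposing reasoning steps. The paper's actual scoring and the way its Q-value model is trained are not reproduced here.

```python
import heapq
from typing import Callable, Iterable, Optional, Tuple

State = Tuple[str, ...]  # a partial trace, one string per step (hypothetical representation)


def best_first_decode(
    expand: Callable[[State], Iterable[str]],
    q_value: Callable[[State, str], float],
    is_terminal: Callable[[State], bool],
    max_expansions: int = 100,
) -> Optional[State]:
    """Always expand the partial trace with the highest heuristic score."""
    # heapq is a min-heap, so scores are negated; the counter breaks ties.
    frontier = [(0.0, 0, ())]
    counter = 1
    for _ in range(max_expansions):
        if not frontier:
            break
        _, _, state = heapq.heappop(frontier)
        if is_terminal(state):
            return state
        for step in expand(state):
            score = q_value(state, step)
            heapq.heappush(frontier, (-score, counter, state + (step,)))
            counter += 1
    return None  # no complete trace found within the budget


# Toy stand-in for an LLM: start at 1 and reach TARGET using "+3" or "*2".
TARGET = 11


def value(state: State) -> int:
    v = 1
    for step in state:
        v = v + 3 if step == "+3" else v * 2
    return v


def expand(state: State) -> Iterable[str]:
    # Stop proposing steps once the value has overshot the target.
    return [] if value(state) > TARGET else ["+3", "*2"]


def q_value(state: State, step: str) -> float:
    # Assumed heuristic: closer to the target is better.
    return -abs(TARGET - value(state + (step,)))


def is_terminal(state: State) -> bool:
    return value(state) == TARGET


result = best_first_decode(expand, q_value, is_terminal)
print(result)
```

In a real setup `expand` would sample candidate next steps from the LLM and `q_value` would be a learned model, so the base LLM itself stays frozen, which is the plug-and-play point the abstract makes.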

1/12

AI Text-video is moving SOO quickly...

2 Weeks ago - KLING AI
Last week - Luma AI
Today - Runway Gen3

Runway Gen3 is the most realistic (lmk your favourite)

Here's some examples

1. https://nitter.poast.org/i/status/1802730721334677835/video/1

2/12

2. REALLY realistic

3/12

3. It's really good at expressing human emotion

4/12

4. this is insane

5/12

5. wow.

6/12

Let me know your favourite AI text-video generator. KLING AI? Sora? Luma AI? Runway Gen3? Make sure to follow @ArDeved for everything AI

7/12

Love the progress! Exciting to see how far AI text-video has come.

8/12

definitely

9/12

runway Gen3 is crushing it

10/12

Fr

11/12

This is a very healthy competition. Can’t wait to see full length movies generated with the AI.

12/12

Me too!

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

1/1

New Paper and Blog!

Sakana AI

As LLMs become better at generating hypotheses and code, a fascinating possibility emerges: using AI to advance AI itself! As a first step, we got LLMs to discover better algorithms for training LLMs that align with human preferences.

1/1

Can LLMs invent better ways to train LLMs?

At Sakana AI, we’re pioneering AI-driven methods to automate AI research and discovery. We’re excited to release DiscoPOP: a new SOTA preference optimization algorithm that was discovered and written by an LLM!

Sakana AI

Our method leverages LLMs to propose and implement new preference optimization algorithms. We then train models with those algorithms and evaluate their performance, providing feedback to the LLM. By repeating this process for multiple generations in an evolutionary loop, the LLM discovers many highly-performant and novel preference optimization objectives!

Paper: [2406.08414] Discovering Preference Optimization Algorithms with and for Large Language Models

GitHub: SakanaAI/DiscoPOP (Code for Discovering Preference Optimization Algorithms with and for Large Language Models)

Model: SakanaAI/DiscoPOP-zephyr-7b-gemma · Hugging Face

We proudly collaborated with the @UniOfOxford (@FLAIR_Ox) and @Cambridge_Uni (@MihaelaVDS) on this groundbreaking project. Looking ahead, we envision a future where AI-driven research reduces the need for extensive human intervention and computational resources. This will accelerate scientific discoveries and innovation, pushing the boundaries of what AI can achieve.
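The propose, train, evaluate, feed-back loop described in the tweet can be sketched roughly like this in Python. This is only an illustration of the loop shape, not Sakana's code: `discovery_loop`, `propose_stub` and `evaluate_stub` are hypothetical names, the "LLM" is a deterministic stub that nudges the best candidate so far, and the "training run" is a toy scoring function.

```python
from typing import Callable, List, Tuple

Candidate = float                        # stand-in for an LLM-written objective function
History = List[Tuple[Candidate, float]]  # (candidate, score) pairs seen so far


def discovery_loop(
    propose: Callable[[History], Candidate],
    evaluate: Callable[[Candidate], float],
    generations: int,
) -> Tuple[Candidate, float]:
    """Repeatedly propose a candidate, score it, and feed the results back."""
    history: History = []
    for _ in range(generations):
        candidate = propose(history)   # real system: prompt an LLM with past results
        score = evaluate(candidate)    # real system: train a model and benchmark it
        history.append((candidate, score))
    return max(history, key=lambda item: item[1])


def propose_stub(history: History) -> Candidate:
    # Hypothetical stand-in for the LLM: step up from the best candidate so far.
    if not history:
        return 0.0
    best_candidate, _ = max(history, key=lambda item: item[1])
    return best_candidate + 0.25


def evaluate_stub(candidate: Candidate) -> float:
    # Hypothetical stand-in for training plus evaluation: peak score at 0.5.
    return -((candidate - 0.5) ** 2)


best = discovery_loop(propose_stub, evaluate_stub, generations=5)
print(best)
```

The key design point is that the full history goes back into `propose` every generation, so the proposer can build on what scored well instead of guessing blind.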

1/11

Pretty amazing stuff from the Udio/Suno lawsuits. Record labels were able to basically recreate versions of very famous songs with highly specific prompts, then linked to them in the lawsuits. I made a short compilation here: Listen to the AI-Generated Ripoff Songs That Got Udio and Suno Sued

2/11

also notable: record industry is not just suing Udio and Suno. It is also seeking to sue & unmask 10 John Does who allegedly helped them scrape the music and make the models

3/11

A full list of AI generated songs that record industry claims are ripped off with links and prompts are available here: https://s3.documentcloud.org/documents/24776032/1-3.pdf and here https://s3.documentcloud.org/documents/24776029/1-2.pdf

4/11

songs they were able to reproduce:

5/11

It will get nasty after going to discovery and they are forced to disclose exactly how the AI was trained.

6/11

called it in April

7/11

ScarJo vs OpenAI. Same thing: everyone runs with the meme but the audio is not even close. In this case, of course it has to resemble the original, as they are probably using the mimic option to prompt the audio with the original song. Will they sue every other cover band too? Silly.

8/11

A few months ago you were able to get Beatles covers by just asking for a "Bea_tles" song

9/11

The theft from songwriters and musicians is a century old and continuing

10/11

This is biased by lifting the lyrics from the actual songs. Without that I don’t think anyone would say the tune is the same or even similar.

11/11

lol cc: .@joshtpm is there any past example of a hot new industry being sued as rapidly as the AI “creators”? No.

1/1

Breaking News from Chatbot Arena

@AnthropicAI Claude 3.5 Sonnet has just made a huge leap, securing the #1 spot in Coding Arena, Hard Prompts Arena, and #2 in the Overall leaderboard.

New Sonnet has surpassed Opus at 5x lower cost and is competitive with frontier models GPT-4o/Gemini 1.5 Pro across the board.

Huge congrats to @AnthropicAI for this incredible milestone! Can't wait to see the new Opus & Haiku. Our new vision leaderboard is also coming soon!

(More analysis below)
