
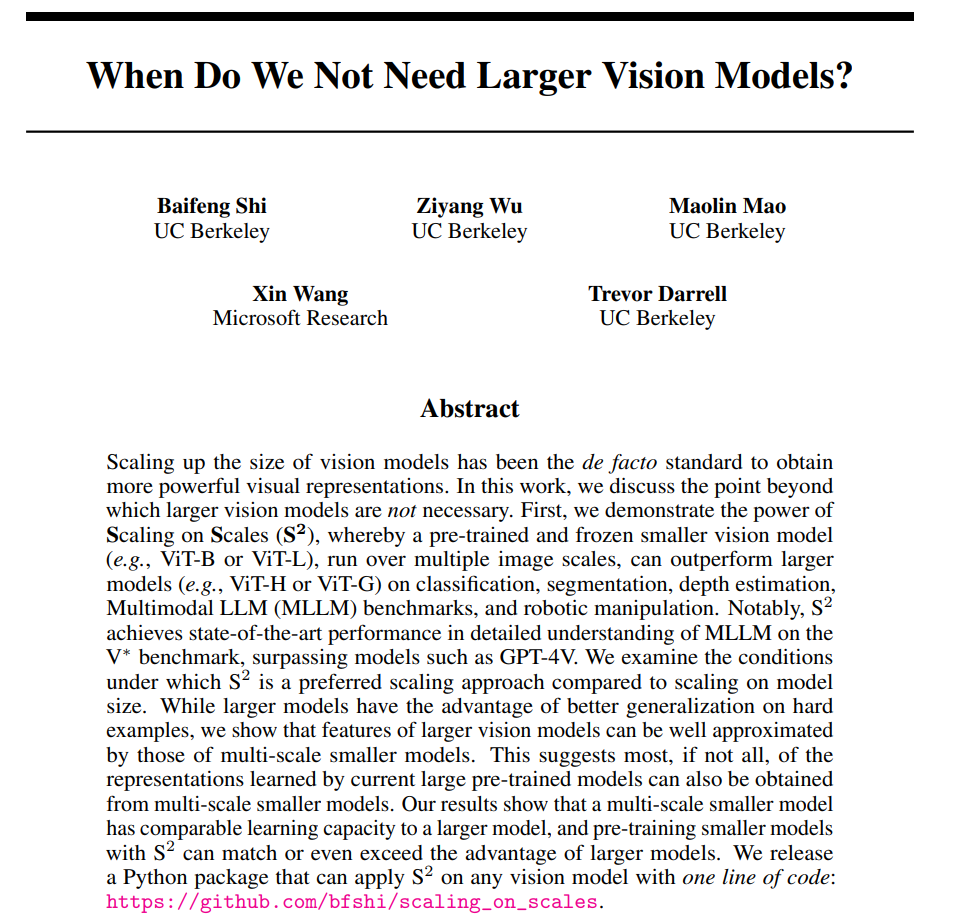

When Do We Not Need Larger Vision Models?

repo: GitHub - bfshi/scaling_on_scales

abs: [2403.13043] When Do We Not Need Larger Vision Models?

**Abstract:**

In this work, we explore whether larger models are actually necessary for better visual understanding. Our findings suggest that scaling along the dimension of image scale, termed **Scaling on Scales (S2)**, rather than increasing model size, generally leads to superior performance across a diverse range of downstream tasks: a pre-trained, frozen smaller model run over multiple image scales can outperform larger models.
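To make the mechanism concrete, here is a minimal NumPy sketch of multi-scale feature extraction as we understand the S2 idea: the image is resized to each scale, larger versions are split into sub-images of the size the model was pre-trained on, each sub-image is processed separately, the resulting feature maps are stitched and average-pooled back to the base grid, and all scales are concatenated along the channel axis. This is not the repo's implementation. `toy_backbone` is a hypothetical stand-in for a frozen ViT, nearest-neighbour resizing is a simplification (a real pipeline would use bilinear or bicubic interpolation), and the names `s2_features`, `base`, and `scales` are illustrative assumptions.

```python
import numpy as np

def toy_backbone(img: np.ndarray, patch: int = 16) -> np.ndarray:
    """Hypothetical stand-in for a frozen ViT: maps an (H, W, C) image to an
    (H/patch, W/patch, C) feature map by averaging each patch."""
    h, w, c = img.shape
    return img.reshape(h // patch, patch, w // patch, patch, c).mean(axis=(1, 3))

def resize_nearest(img: np.ndarray, size: int) -> np.ndarray:
    """Nearest-neighbour resize to (size, size); a simplification for this sketch."""
    h, w = img.shape[:2]
    rows = (np.arange(size) * h) // size
    cols = (np.arange(size) * w) // size
    return img[rows][:, cols]

def avg_pool(feat: np.ndarray, out: int) -> np.ndarray:
    """Average-pool an (H, W, C) feature map down to (out, out, C)."""
    h, w, c = feat.shape
    k = h // out
    return feat.reshape(out, k, out, k, c).mean(axis=(1, 3))

def s2_features(img, model, base=224, scales=(1, 2)):
    """Multi-scale features: for each scale s, resize to base*s, split into
    s*s tiles of base x base, run the model on each tile, stitch the tile
    feature maps, pool back to the base grid, then concatenate channels."""
    feats = []
    for s in scales:
        big = resize_nearest(img, base * s)
        rows = []
        for i in range(s):
            # Each tile matches the resolution the (frozen) model expects.
            row = [model(big[i * base:(i + 1) * base, j * base:(j + 1) * base])
                   for j in range(s)]
            rows.append(np.concatenate(row, axis=1))
        merged = np.concatenate(rows, axis=0)          # (s*g, s*g, C)
        feats.append(avg_pool(merged, merged.shape[0] // s))  # (g, g, C)
    return np.concatenate(feats, axis=-1)              # (g, g, C * len(scales))
```

With a 224-pixel base and 16-pixel patches, two scales yield a 14 × 14 grid with twice the channels of a single pass, so the model size stays fixed while the feature dimension grows with the number of scales.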

**Key Findings:**

1. Features from smaller models employing S2 can approximate most of what larger models learn.

2. Pre-training smaller models with S2 can match the performance of larger models and, in some cases, surpass it.
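One simple way to probe a claim like finding 1 is to fit a linear map from (multi-scale) smaller-model features to larger-model features on the same images and measure how much of the larger model's feature variance the fit explains. The sketch below is an illustrative assumption, not the paper's protocol: `linear_reconstruction_r2` is a hypothetical helper, and the usage runs on synthetic data, so it says nothing about real ViT features.

```python
import numpy as np

def linear_reconstruction_r2(x_small: np.ndarray, y_large: np.ndarray) -> float:
    """Fit y_large ~ [x_small, 1] @ W by least squares and return R^2, the
    fraction of variance in y_large explained by the linear fit."""
    x = np.hstack([x_small, np.ones((x_small.shape[0], 1))])  # add bias column
    w, *_ = np.linalg.lstsq(x, y_large, rcond=None)
    resid = y_large - x @ w
    ss_res = float((resid ** 2).sum())
    ss_tot = float(((y_large - y_large.mean(axis=0)) ** 2).sum())
    return 1.0 - ss_res / ss_tot

# Synthetic demo: "large" features that are a noisy linear function of the
# "small" features are almost fully recoverable; unrelated ones are not.
rng = np.random.default_rng(0)
x = rng.normal(size=(2000, 64))
y = x @ rng.normal(size=(64, 32)) + 0.1 * rng.normal(size=(2000, 32))
print(round(linear_reconstruction_r2(x, y), 3))
```

A high R^2 on held-out images would suggest that most of the larger model's representation is linearly recoverable from the smaller model's features; in a careful study one would fit on one split and evaluate on another to avoid overfitting.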

**Implications for Future Research:**

S2 introduces several considerations for future work:

- **Scale-Selective Processing:** Not all scales at every position within an image hold valuable information. Depending on the content of the image and the overarching task, it can be more efficient to process certain scales for specific regions. This approach mimics the bottom-up and top-down selection mechanisms found in human visual attention (References: 86, 59, 33).

- **Parallel Processing of a Single Image:** Unlike a standard Vision Transformer (ViT), which processes the entire image as a whole, S2 handles each sub-image independently. This makes it possible to process different parts of a single image in parallel, which is particularly useful when reducing latency on large images is critical (Reference: 84).
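The two directions above can be illustrated together in a toy sketch: tiles are scored by a cheap bottom-up criterion, only the "informative" ones are sent to the model, and because tiles are independent they can be dispatched concurrently. Everything here is a hypothetical assumption for illustration: pixel variance as the selection rule, `toy_model` as a stand-in for a vision backbone, and a thread pool as the parallelism mechanism (in practice one would more likely batch sub-images on an accelerator or spread them across devices).

```python
import numpy as np
from concurrent.futures import ThreadPoolExecutor

def toy_model(tile: np.ndarray) -> np.ndarray:
    """Hypothetical backbone stand-in: global average of each channel."""
    return tile.mean(axis=(0, 1))

def split_tiles(img: np.ndarray, tile: int):
    """Split an (H, W, C) image into non-overlapping tile x tile sub-images,
    keyed by their top-left corner. Assumes H and W are multiples of tile."""
    h, w = img.shape[:2]
    assert h % tile == 0 and w % tile == 0, "image must tile evenly"
    return [((i, j), img[i:i + tile, j:j + tile])
            for i in range(0, h, tile) for j in range(0, w, tile)]

def is_informative(tile: np.ndarray, threshold: float = 0.01) -> bool:
    """Hypothetical bottom-up selection rule: skip nearly uniform tiles."""
    return float(tile.var()) > threshold

def process_selected(img, model, tile=224, max_workers=4):
    """Run the model only on selected tiles, processing them concurrently,
    since each sub-image can be handled without seeing the others."""
    selected = [(pos, t) for pos, t in split_tiles(img, tile) if is_informative(t)]
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        feats = list(pool.map(lambda item: model(item[1]), selected))
    return {pos: f for (pos, _), f in zip(selected, feats)}
```

For example, on a 448 × 448 image whose left half is textured and whose right half is blank, only the two left-hand tiles would be processed. A task-driven (top-down) variant could replace `is_informative` with a score conditioned on the query or task.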
