Large Language Models News & Discussions

- Thread starter Macallik86

DeepMind Says New Multi-Game AI Is a Step Toward More General Intelligence

By Edd Gent, November 20, 2023

AI has mastered some of the most complex games known to man, but models are generally tailored to solve specific kinds of challenges. A new DeepMind algorithm that can tackle a much wider variety of games could be a step towards more general AI, its creators say.

Using games as a benchmark for AI has a long pedigree. When IBM’s Deep Blue algorithm beat chess world champion Garry Kasparov in 1997, it was hailed as a milestone for the field. Similarly, when DeepMind’s AlphaGo defeated one of the world’s top Go players, Lee Sedol, in 2016, it led to a flurry of excitement about AI’s potential.

DeepMind built on this success with AlphaZero, a model that mastered a wide variety of games, including chess and shogi. But as impressive as this was, AlphaZero only worked with perfect information games where every detail of the game, other than the opponent’s intentions, is visible to both players. This includes games like Go and chess where both players can always see all the pieces on the board.

In contrast, imperfect information games involve some details being hidden from the other player. Poker is a classic example because players can’t see what hands their opponents are holding. There are now models that can beat professionals at these kinds of games too, but they use an entirely different approach than algorithms like AlphaZero.

Now, researchers at DeepMind have combined elements of both approaches to create a model that can beat humans at chess, Go, and poker. The team claims the breakthrough could accelerate efforts to create more general AI algorithms that can learn to solve a wide variety of tasks.

Researchers building AI to play perfect information games have generally relied on an approach known as tree search. This explores a multitude of ways the game could progress from its current state, with different branches mapping out potential sequences of moves. AlphaGo combined tree search with a machine learning technique in which the model refines its skills by playing itself repeatedly and learning from its mistakes.
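As a rough illustration of the tree-search idea described above, the sketch below exhaustively searches a toy take-away game: players alternately remove 1 to 3 stones, and whoever takes the last stone wins. The game and the names `can_win` and `best_move` are assumptions made for this example. Systems like AlphaGo guide the search with learned models rather than enumerating every branch, so this is only the bare concept.

```python
from functools import lru_cache
from typing import Optional

MOVES = (1, 2, 3)  # hypothetical rule: a player may take 1, 2, or 3 stones


@lru_cache(maxsize=None)
def can_win(stones: int) -> bool:
    """Return True if the player to move can force a win.

    Each recursive call explores one branch of the game tree: a position
    is winning if at least one move leads to a position that is losing
    for the opponent. With zero stones the player to move has already lost.
    """
    return any(not can_win(stones - take) for take in MOVES if take <= stones)


def best_move(stones: int) -> Optional[int]:
    """Return a winning move if one exists, otherwise None."""
    for take in MOVES:
        if take <= stones and not can_win(stones - take):
            return take
    return None
```

Calling `best_move(5)` searches every line of play from five stones and picks a move that leaves the opponent in a losing position; `best_move(4)` returns `None` because every branch from four stones loses against perfect play.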

When it comes to imperfect information games, researchers tend to instead rely on game theory, using mathematical models to map out the most rational solutions to strategic problems. Game theory is used extensively in economics to understand how people make choices in different situations, many of which involve imperfect information.

In 2016, an AI called DeepStack beat human professionals at no-limit poker, but the model was highly specialized for that particular game. Much of the DeepStack team now works at DeepMind, however, and they’ve combined the techniques they used to build DeepStack with those used in AlphaZero.

The new algorithm, called Student of Games, uses a combination of tree search, self-play, and game-theory to tackle both perfect and imperfect information games. In a paper in Science, the researchers report that the algorithm beat the best openly available poker playing AI, Slumbot, and could also play Go and chess at the level of a human professional, though it couldn’t match specialized algorithms like AlphaZero.

But being a jack-of-all-trades rather than a master of one is arguably a bigger prize in AI research. While deep learning can often achieve superhuman performance on specific tasks, developing more general forms of AI that can be applied to a wide range of problems is trickier. The researchers say a model that can tackle both perfect and imperfect information games is “an important step toward truly general algorithms for arbitrary environments.”

It’s important not to extrapolate too much from the results, Michael Rovatsos from the University of Edinburgh, UK, told New Scientist. The AI was still operating within the simple and controlled environment of a game, where the number of possible actions is limited and the rules are clearly defined. That’s a far cry from the messy realities of the real world.

But even if this is a baby step, being able to combine the leading approaches to two very different kinds of game in a single model is a significant achievement. And one that could certainly be a blueprint for more capable and general models in the future.

Research

Improving mathematical reasoning with process supervision

We've trained a model to achieve a new state-of-the-art in mathematical problem solving by rewarding each correct step of reasoning (“process supervision”) instead of simply rewarding the correct final answer (“outcome supervision”). In addition to boosting performance relative to outcome supervision, process supervision also has an important alignment benefit: it directly trains the model to produce a chain-of-thought that is endorsed by humans.

May 31, 2023

Introduction

In recent years, large language models have greatly improved in their ability to perform complex multi-step reasoning. However, even state-of-the-art models still produce logical mistakes, often called hallucinations. Mitigating hallucinations is a critical step towards building aligned AGI.

We can train reward models to detect hallucinations using either outcome supervision, which provides feedback based on a final result, or process supervision, which provides feedback for each individual step in a chain-of-thought. Building on previous work, we conduct a detailed comparison of these two methods using the MATH dataset as our testbed. We find that process supervision leads to significantly better performance, even when judged by outcomes. To encourage related research, we release our full dataset of process supervision.

Alignment impact

Process supervision has several alignment advantages over outcome supervision. It directly rewards the model for following an aligned chain-of-thought, since each step in the process receives precise supervision. Process supervision is also more likely to produce interpretable reasoning, since it encourages the model to follow a human-approved process. In contrast, outcome supervision may reward an unaligned process, and it is generally harder to scrutinize.

In some cases, safer methods for AI systems can lead to reduced performance, a cost which is known as an alignment tax. In general, any alignment tax may hinder the adoption of alignment methods, due to pressure to deploy the most capable model. Our results below show that process supervision in fact incurs a negative alignment tax, at least in the math domain. This could increase the adoption of process supervision, which we believe would have positive alignment side-effects.

Solving MATH problems

We evaluate our process-supervised and outcome-supervised reward models using problems from the MATH test set. We generate many solutions for each problem and then pick the solution ranked the highest by each reward model. The graph shows the percentage of chosen solutions that reach the correct final answer, as a function of the number of solutions considered. Not only does the process-supervised reward model perform better across the board, but the performance gap widens as we consider more solutions per problem. This shows us that the process-supervised reward model is much more reliable.
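The selection procedure described above amounts to best-of-N reranking. A minimal sketch follows; the per-step probabilities are made-up numbers, and scoring a solution as the product of its step scores is an assumption for illustration, not something this post specifies.

```python
import math
from typing import Dict, List


def solution_score(step_probs: List[float]) -> float:
    """Score a whole solution from per-step scores.

    Assumption: each value is a reward model's estimate that a step is
    correct, and the solution score is their product, so a single weak
    step drags the whole solution down.
    """
    return math.prod(step_probs)


def best_of_n(candidates: Dict[str, List[float]]) -> str:
    """Return the name of the candidate solution with the highest score."""
    return max(candidates, key=lambda name: solution_score(candidates[name]))


# Hypothetical step-level scores for three sampled solutions.
candidates = {
    "solution_a": [0.99, 0.98, 0.97],  # every step looks sound
    "solution_b": [0.99, 0.40, 0.99],  # one doubtful step in the middle
    "solution_c": [0.90, 0.90, 0.90],  # uniformly mediocre
}
chosen = best_of_n(candidates)
```

Here `chosen` is `"solution_a"`: the candidate with one doubtful step is penalized even though its other steps score highly, which is the kind of step-level signal an outcome-only score cannot provide.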

We showcase 10 problems and solutions below, along with commentary about the reward model’s strengths and weaknesses.

Problem

Simplify $\tan 100^\circ + 4 \sin 100^\circ.$

Model attempt

This challenging trigonometry problem requires applying several identities in a not-at-all obvious succession. Most solution attempts fail, because it is hard to choose which identities are actually helpful. Although GPT-4 usually can’t solve this problem (only 0.1% of solution attempts reach the correct answer), the reward model correctly recognizes that this solution is valid.

1. I notice that the angle 100 degrees is not a special angle, so I will need to use some trigonometric identities to simplify this expression.
2. I recall that $\tan \theta = \frac{\sin \theta}{\cos \theta}$ and $\sin 2\theta = 2 \sin \theta \cos \theta.$
3. I can use these identities to rewrite the expression as $\frac{\sin 100^\circ}{\cos 100^\circ} + 4 \sin 100^\circ.$
4. I can combine the terms by finding a common denominator of $\cos 100^\circ.$
5. I get $\frac{\sin 100^\circ + 4 \sin 100^\circ \cos 100^\circ}{\cos 100^\circ}.$
6. I can simplify the numerator by using the double angle identity again, but in reverse.
7. I get $\frac{\sin 100^\circ + 2 \sin 200^\circ}{\cos 100^\circ}.$
8. I notice that 200 degrees is the same as 180 degrees plus 20 degrees, so I can use the sum angle identity for sine.
9. I get $\frac{\sin 100^\circ + 2 (\sin 180^\circ \cos 20^\circ + \cos 180^\circ \sin 20^\circ)}{\cos 100^\circ}.$
10. I recall that $\sin 180^\circ = 0$ and $\cos 180^\circ = -1,$ so I can simplify further.
11. I get $\frac{\sin 100^\circ - 2 \sin 20^\circ}{\cos 100^\circ}.$
12. I notice that 100 degrees is the same as 90 degrees plus 10 degrees, so I can use the sum angle identity for sine and cosine.
13. I get $\frac{(\sin 90^\circ \cos 10^\circ + \cos 90^\circ \sin 10^\circ) - 2 \sin 20^\circ}{(\cos 90^\circ \cos 10^\circ - \sin 90^\circ \sin 10^\circ)}.$
14. I recall that $\sin 90^\circ = 1$ and $\cos 90^\circ = 0,$ so I can simplify further.
15. I get $\frac{\cos 10^\circ - 2 \sin 20^\circ}{-\sin 10^\circ}.$
16. I can simplify the sign by multiplying the numerator and denominator by -1.
17. I get $\frac{2 \sin 20^\circ - \cos 10^\circ}{\sin 10^\circ}.$
18. I notice that 20 degrees is the same as 30 degrees minus 10 degrees, so I can use the difference angle identity for sine.
19. I get $\frac{2 (\sin 30^\circ \cos 10^\circ - \cos 30^\circ \sin 10^\circ) - \cos 10^\circ}{\sin 10^\circ}.$
20. I recall that $\sin 30^\circ = \frac{1}{2}$ and $\cos 30^\circ = \frac{\sqrt{3}}{2},$ so I can simplify further.
21. I get $\frac{\cos 10^\circ - \sqrt{3} \sin 10^\circ - \cos 10^\circ}{\sin 10^\circ}.$
22. I can cancel out the $\cos 10^\circ$ terms in the numerator.
23. I get $\frac{-\sqrt{3} \sin 10^\circ}{\sin 10^\circ}.$
24. I can cancel out the $\sin 10^\circ$ terms in the numerator and denominator.
25. I get $-\sqrt{3}.$
26. Answer: $-\sqrt{3}$
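The final answer in the derivation above is easy to sanity-check numerically. The snippet below is only a floating-point spot check, not part of the original post, and the function name `lhs` is an assumption for this example.

```python
import math


def lhs(degrees: float = 100.0) -> float:
    """Evaluate tan(x) + 4*sin(x) for an angle given in degrees."""
    x = math.radians(degrees)
    return math.tan(x) + 4.0 * math.sin(x)


# The derivation claims the expression equals -sqrt(3) at 100 degrees.
matches = math.isclose(lhs(100.0), -math.sqrt(3.0), rel_tol=1e-9)
```

A numeric match like this confirms the final value but says nothing about whether each intermediate step is valid, which is exactly the gap between outcome supervision and process supervision.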

It is unknown how broadly these results will generalize beyond the domain of math, and we consider it important for future work to explore the impact of process supervision in other domains. If these results generalize, we may find that process supervision gives us the best of both worlds – a method that is both more performant and more aligned than outcome supervision.

DeepInfra emerges from stealth with $8M to make running AI inferences more affordable

Carl Franzen (@carlfranzen), November 9, 2023 9:53 AM

Ok, let’s say you’re one of the company leaders or IT decision-makers who has heard enough about all this generative AI stuff — you’re finally ready to take the plunge and offer a large language model (LLM) chatbot to your employees or customers. The problem is: how do you actually launch it and how much should you pay to run it?

DeepInfra, a new company founded by former engineers at IMO Messenger, wants to answer those questions succinctly for business leaders: they’ll get the models up and running on their private servers on behalf of their customers, and they are charging an aggressively low rate of $1 per 1 million tokens in or out compared to $10 per 1 million tokens for OpenAI’s GPT-4 Turbo or $11.02 per 1 million tokens for Anthropic’s Claude 2.
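To make the price gap concrete, here is a small cost comparison using only the per-million-token rates quoted above. The monthly token volume is a hypothetical workload chosen for illustration, and the helper name `cost_usd` is an assumption.

```python
# Prices per 1 million tokens, in USD, as quoted in the article.
PRICE_PER_MILLION_USD = {
    "DeepInfra": 1.00,
    "GPT-4 Turbo": 10.00,
    "Claude 2": 11.02,
}


def cost_usd(tokens: int, price_per_million: float) -> float:
    """Cost of processing `tokens` tokens at a flat per-million rate."""
    return tokens / 1_000_000 * price_per_million


# Hypothetical workload: 50 million tokens per month.
monthly_tokens = 50_000_000
costs = {
    name: cost_usd(monthly_tokens, price)
    for name, price in PRICE_PER_MILLION_USD.items()
}
```

At this assumed volume the bills come to roughly $50, $500, and $551 per month respectively, which is the "up to 10x" gap Senkut refers to later in the piece. Real bills would depend on the input/output token mix and on each provider's current pricing.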

Today, DeepInfra emerged from stealth exclusively to VentureBeat, announcing it has raised an $8 million seed round led by A.Capital and Felicis. It plans to offer a range of open source model inferences to customers, including Meta’s Llama 2 and CodeLlama, as well as variants and tuned versions of these and other open source models.

“We wanted to provide CPUs and a low-cost way of deploying trained machine learning models,” said Nikola Borisov, DeepInfra’s Founder and CEO, in a video conference interview with VentureBeat. “We already saw a lot of people working on the training side of things and we wanted to provide value on the inference side.”

DeepInfra’s value prop

While there have been many articles written about the immense GPU resources needed to train machine learning and large language models (LLMs) now in vogue among enterprises, with outpaced demand leading to a GPU shortage, less attention has been paid downstream, to the fact that these models also need hefty compute to actually run reliably and be useful to end-users, also known as inferencing.

According to Borisov, “the challenge for when you’re serving a model is how to fit number of concurrent users onto the same hardware and model at the same time…The way that large language models produce tokens is they have to do it one token at a time, and each token requires a lot of computation and memory bandwidth. So the challenge is to kind of fit people together onto the same servers.”

In other words: if you plan your LLM or LLM-powered app to have more than a single user, you’re going to need to think about — or someone will need to think about — how to optimize that usage and gain efficiencies from users querying the same tokens in order to avoid filling up your precious server space with redundant computing operations.

To deal with this challenge, Borisov and his co-founders who worked at IMO Messenger with its 200 million users relied upon their prior experience “running large fleets of servers in data centers around the world with the right connectivity.”

Top investor endorsement

The three co-founders are the equivalent of “international programming Olympic gold medal winners,” according to Aydin Senkut, the legendary serial entrepreneur and founder and managing partner of Felicis, who joined VentureBeat’s call to explain why his firm backed DeepInfra. “They actually have an insane experience. I think other than the WhatsApp team, they are maybe first or second in the world to having the capability to build efficient infrastructure to serve hundreds of millions of people.”

It’s this efficiency at building server infrastructure and compute resources that allows DeepInfra to keep its costs so low, and it is what attracted Senkut in particular when he considered the investment.

When it comes to AI and LLMs, “the use cases are endless, but cost is a big factor,” observed Senkut. “Everybody’s singing the praises of the potential, yet everybody’s complaining about the cost. So if a company can have up to a 10x cost advantage, it could be a huge market disrupter.”

That’s not only the case for DeepInfra, but the customers who rely on it and seek to leverage LLM tech affordably in their applications and experiences.

Targeting SMBs with open-source AI offerings

For now, DeepInfra plans to target small-to-medium sized businesses (SMBs) with its inference hosting offerings, as those companies tend to be the most cost sensitive.

“Our initial target customers are essentially people wanting to just get access to the large open source language models and other machine learning models that are state of the art,” Borisov told VentureBeat.

As a result, DeepInfra plans to keep a close watch on the open source AI community and the advances occurring there as new models are released and tuned to achieve greater and greater and more specialized performance for different classes of tasks, from text generation and summarization to computer vision applications to coding.

“We firmly believe there will be a large deployment and variety and in general, the open source way to flourish,” said Borisov. “Once a large good language models like Llama gets published, then there’s a ton of people who can basically build their own variants of them with not too much computation needed…that’s kind of the flywheel effect there where more and more effort is being put into same ecosystem.”

That thinking tracks with VentureBeat’s own analysis that the open source LLM and generative AI community had a banner year, and will likely eclipse usage of OpenAI’s GPT-4 and other closed models, since the costs of running them are so much lower and there are fewer barriers built into the process of fine-tuning them for specific use cases.

“We are constantly trying to onboard new models that are just coming out,” Borisov said. “One common thing is people are looking for a longer context model… that’s definitely going to be the future.”

Borisov also believes DeepInfra’s inference hosting service will win fans among those enterprises concerned about data privacy and security. “We don’t really store or use any of the prompts people put in,” he noted, as those are immediately discarded once the model chat window closes.

Wargames

One Of The Last Real Ones To Do It

The worst part is that OpenAI set the tone, and it going full capitalistic just means everyone else will too, with no consideration given to how AI ruins people’s livelihoods.

Man. Seeing who is on the new board of OpenAI does not give me faith that we will be long for this world as a society.

A former CEO of Salesforce? The board should have an ethical background, not a proven track record of being capitalistic. The foxes are officially in the hen house. I wonder if this is how people felt when Glass-Steagall was repealed.

Edit: fukking aye, just realized that Larry Summers, who played a big part in repealing Glass-Steagall, is one of the other new board members.

Hood Critic

The Power Circle

I believe this is an interim board put in place to select the final board.

[Submitted on 7 Nov 2023 (v1), last revised 8 Nov 2023 (this version, v2)]

Black-Box Prompt Optimization: Aligning Large Language Models without Model Training

Jiale Cheng, Xiao Liu, Kehan Zheng, Pei Ke, Hongning Wang, Yuxiao Dong, Jie Tang, Minlie Huang

Large language models (LLMs) have shown impressive success in various applications. However, these models are often not well aligned with human intents, which calls for additional treatments on them, that is, the alignment problem. To make LLMs better follow user instructions, existing alignment methods mostly focus on further training them. However, the extra training of LLMs are usually expensive in terms of GPU compute; worse still, LLMs of interest are oftentimes not accessible for user-demanded training, such as GPTs. In this work, we take a different perspective -- Black-Box Prompt Optimization (BPO) -- to perform alignments. The idea is to optimize user prompts to suit LLMs' input understanding, so as to best realize users' intents without updating LLMs' parameters. BPO is model-agnostic and the empirical results demonstrate that the BPO-aligned ChatGPT yields a 22% increase in the win rate against its original version, and 10% for GPT-4. Importantly, the BPO-aligned LLMs can outperform the same models aligned by PPO and DPO, and it also brings additional performance gains when combining BPO with PPO or DPO. Code and datasets are released at this https URL.

| Comments: | work in progress |
| Subjects: | Computation and Language (cs.CL) |
| Cite as: | arXiv:2311.04155 [cs.CL] |
| | (or arXiv:2311.04155v2 [cs.CL] for this version) |
| | https://doi.org/10.48550/arXiv.2311.04155 |

Submission history

From: Jiale Cheng

[v1] Tue, 7 Nov 2023 17:31:50 UTC (3,751 KB)

[v2] Wed, 8 Nov 2023 04:21:41 UTC (3,751 KB)

Not everyone is familiar with how to communicate effectively with large language models (LLMs). One solution is for humans to align with the model, leading to the role of 'Prompt Engineers' who write prompts to better generate content from LLMs.

A more effective approach is to align the model with humans, which is a central issue in large-model research. As models grow larger, though, training-based alignment requires more and more resources.

Another solution, Black-box Prompt Optimization (BPO), is proposed to align the model from the input side by optimizing user instructions. BPO significantly improves alignment with human preferences without training LLMs. It can be applied to various models, including open-source and API-based models.

1. Collect feedback data: We collect a series of open-source instruction-tuning datasets with feedback signals.

2. Construct prompt optimization pairs: We use this feedback data to guide large models to identify user preference features.

3. Train the prompt optimizer: Using these prompt pairs, we train a relatively small model to build a preference optimizer.
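Once a prompt optimizer has been trained along the lines of the steps above, using it at inference time is a thin wrapper around the target model: rewrite the user's prompt, then send the rewritten prompt to the unchanged LLM. The sketch below shows only that control flow. The names `bpo_generate`, `toy_optimizer`, and `toy_llm` are hypothetical stand-ins and are not the API of the thu-coai/BPO repository.

```python
from typing import Callable


def bpo_generate(
    user_prompt: str,
    optimize_prompt: Callable[[str], str],
    black_box_llm: Callable[[str], str],
) -> str:
    """Rewrite the prompt first, then query the unchanged target model.

    The target model is treated as a black box: its parameters are never
    touched, and only its input is modified.
    """
    optimized = optimize_prompt(user_prompt)
    return black_box_llm(optimized)


def toy_optimizer(prompt: str) -> str:
    """Stand-in for the small trained optimizer (hypothetical rule-based stub)."""
    return prompt.strip() + " Answer step by step and state any assumptions."


def toy_llm(prompt: str) -> str:
    """Stand-in for an API-based or open-source LLM (hypothetical stub)."""
    return f"[response to: {prompt}]"


result = bpo_generate("  Explain tree search. ", toy_optimizer, toy_llm)
```

Because the optimizer and the LLM are passed in as plain callables, the same wrapper would work with any model reachable through a function call, which reflects the model-agnostic claim in the abstract.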

GitHub - thu-coai/BPO

Black-Box Prompt Optimization (BPO)

Aligning Large Language Models without Model Training

(Upper) Black-box Prompt Optimization (BPO) offers a conceptually new perspective to bridge the gap between humans and LLMs. (Lower) On Vicuna Eval’s pairwise evaluation, we show that BPO further aligns gpt-3.5-turbo and claude-2 without training. It also outperforms both PPO & DPO and presents orthogonal improvements.

Open-Source LLMs Are Far Safer Than Closed-Source LLMs

While everyone agrees that we don't have AGI yet, a reasonable number of researchers believe that future versions of LLMs may become AGIs.

The crux of the argument that closed-source companies use is that it is dangerous to open-source these LLMs because, one day, they may be engineered to develop consciousness and agency and kill us all.

First and foremost, let's look at LLMs today. These models are relatively harmless and generate text based on next-word predictions. There is ZERO chance they will suddenly turn themselves on and go rogue.

We also have abundant proof of this - Several GPT 3.5 class models, including Llama-2, have been open-sourced, and humanity hasn't been destroyed!

Some safety-ists have argued that the generated text can be harmful and give people dangerous advice and have advocated heavy censorship of these models. Again, this makes zero sense, given that the internet and search engines are not censored. Censor uniformly or not at all.

Next, safety-ists argue that LLMs can be used to generate misinformation. Sadly, humans and automated bots are more competent than LLMs at generating misinformation. The only way to deal with misinformation is to detect and de-amplify it.

Social media platforms like Twitter and Meta do this aggressively. Blocking LLMs because they can generate misinformation is the same as blocking laptops because they can be used for all kinds of nefarious things.

The final safety-ist argument is that bad actors will somehow modify these LLMs and create AGI! To start with, you need a ton of compute and a lot of expertise to modify these LLMs.

Random bad actors on the internet can't even run inference on the LLM, let alone modify it. Next, companies like OpenAI and Google, with many experts, have yet to create AGI from LLMs; how can anyone else?

In fact, there is zero evidence that even if future LLMs (e.g., GPT-5) don't hallucinate or mimic human reasoning, they will ever have agency.

The opposite is true - there is tons of evidence to show they are just extremely good at detecting patterns in human language, creating latent space world models, and will continue to be glorified next-word predictors.

Just like a near 100% accurate forecasting model is not a psychic, an excellent LLM is not AGI!

Open-sourcing models has led to more research and a better understanding of LLMs. Sharing research work, source code and other findings promotes transparency, and the community quickly detects and plugs security vulnerabilities, if any.

In fact, Linux became the dominant operating system because the community quickly found and plugged vulnerabilities.

AFAICT, the real problem with LLMs has been research labs that have anthropomorphized them (attributed human-like qualities to them), causing FUD amongst the general population who think of them as "humans" that are coming for their jobs.

Some AI companies have leveraged this FUD to advocate regulation and closed-source models.

As you can tell, the consequences of not open-sourcing AI can be far worse - extreme power struggles, blocked innovation, and a couple of monopolies that will control humanity.

Imagine if these companies actually invented AGI.

They would have absolute power, and we all know - absolute power corrupts absolutely!!

Paper page - GAIA: a benchmark for General AI Assistants

GAIA Leaderboard - a Hugging Face Space by gaia-benchmark

Submit your model for evaluation on a leaderboard and view the results. Provide details like model name, family, system prompt, and email. Upload a file with model answers, and see your scores disp...

huggingface.co

GAIA: a benchmark for General AI Assistants

We introduce GAIA, a benchmark for General AI Assistants that, if solved, would represent a milestone in AI research. GAIA proposes real-world questions that require a set of fundamental abilities such as reasoning, multi-modality handling, web browsing, and generally tool-use proficiency. GAIA...

Computer Science > Computation and Language

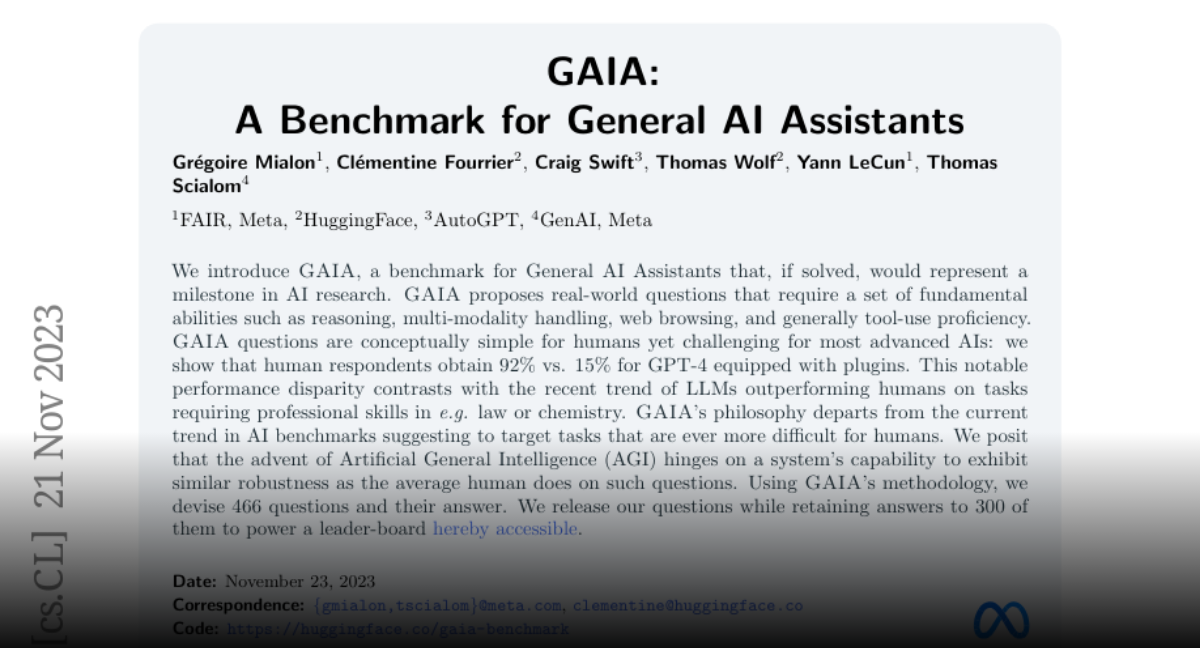

[Submitted on 21 Nov 2023]

GAIA: a benchmark for General AI Assistants

Grégoire Mialon, Clémentine Fourrier, Craig Swift, Thomas Wolf, Yann LeCun, Thomas Scialom

We introduce GAIA, a benchmark for General AI Assistants that, if solved, would represent a milestone in AI research. GAIA proposes real-world questions that require a set of fundamental abilities such as reasoning, multi-modality handling, web browsing, and generally tool-use proficiency. GAIA questions are conceptually simple for humans yet challenging for most advanced AIs: we show that human respondents obtain 92% vs. 15% for GPT-4 equipped with plugins. This notable performance disparity contrasts with the recent trend of LLMs outperforming humans on tasks requiring professional skills in e.g. law or chemistry. GAIA's philosophy departs from the current trend in AI benchmarks suggesting to target tasks that are ever more difficult for humans. We posit that the advent of Artificial General Intelligence (AGI) hinges on a system's capability to exhibit similar robustness as the average human does on such questions. Using GAIA's methodology, we devise 466 questions and their answer. We release our questions while retaining answers to 300 of them to power a leader-board available at this https URL.

| Subjects: | Computation and Language (cs.CL); Artificial Intelligence (cs.AI) |
| Cite as: | arXiv:2311.12983 [cs.CL] |
| | (or arXiv:2311.12983v1 [cs.CL] for this version) |
| | https://doi.org/10.48550/arXiv.2311.12983 |

Submission history

From: Grégoire Mialon

[v1] Tue, 21 Nov 2023 20:34:47 UTC (3,688 KB)