Facebook’s parent is developing bots with personalities, including a “sassmaster general” robot that answers questions.

www.wsj.com

Meta to Push for Younger Users With New AI Chatbot Characters

By Salvador Rodriguez, Deepa Seetharaman and Aaron Tilley

Sept. 24, 2023 8:30 am ET

Meta is planning to develop dozens of AI personality chatbots. PHOTO: JEFF CHIU/ASSOCIATED PRESS

Meta Platforms is planning to release artificial intelligence chatbots as soon as this week with distinct personalities across its social-media apps as a way to attract young users, according to people familiar with the matter.

These generative AI bots are being tested internally by employees, and the company is expected to announce the first of these AI agents at the Meta Connect conference, which starts Wednesday. The bots are meant to be used as a means to drive engagement with users, although some of them might also have productivity-related skills such as the ability to help with coding or other tasks.

Going after younger users has been a priority for Meta with the emergence of TikTok, which overtook Instagram in popularity among teenagers in the past couple of years. This shift prompted Meta Chief Executive Mark Zuckerberg in October 2021 to say the company would retool its “teams to make serving young adults their North Star rather than optimizing for the larger number of older people.”

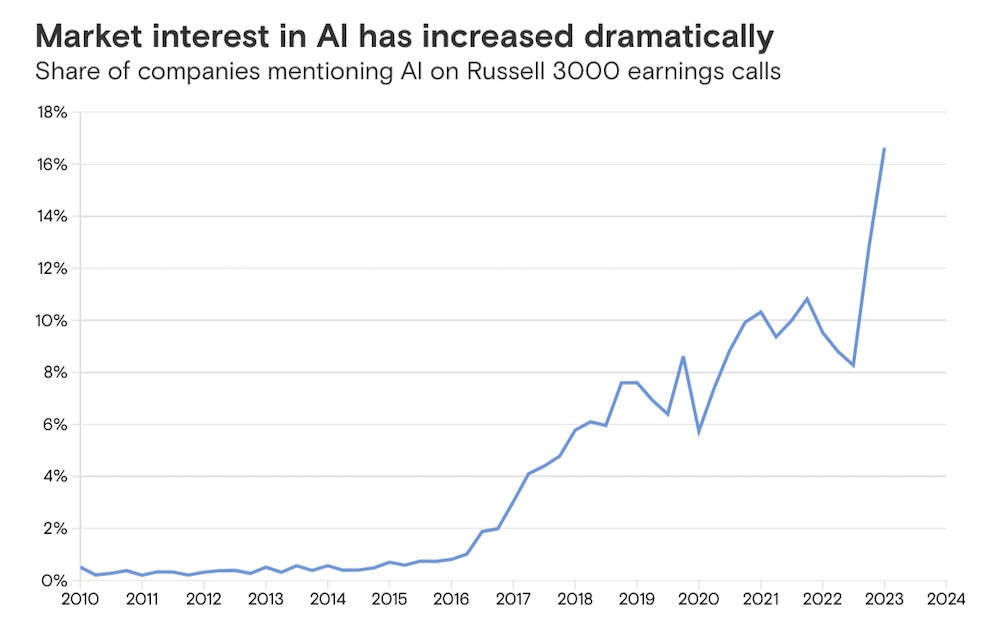

With the rise of large-language-model technology since the launch of ChatGPT last November, Meta has also refocused the work of its AI divisions to harness the capabilities of generative AI for application in the company’s various apps and the metaverse. Now, Meta is hoping these Gen AI Personas, as they are known internally, will help the company attract young users.

Meta is planning to develop dozens of these AI personality chatbots. The company has also worked on a product that would allow celebrities and creators to use their own AI chatbots to interact with fans and followers, according to people familiar with the matter.

Among the bots in the works is one called “Bob the robot,” a self-described sassmaster general with “superior intellect, sharp wit, and biting sarcasm,” according to internal company documents viewed by The Wall Street Journal.

The chatbot was designed to be similar to the character Bender from the cartoon “Futurama” because “him being a sassy robot taps into the type of farcical humor that is resonating with young people,” one employee wrote in an internal conversation viewed by the Journal.

“Bring me your questions, but don’t expect any sugar-coated responses!” the AI agent responded in one instance viewed by the Journal, along with a robot emoji.

Meta isn’t the first social-media company to launch chatbots built on generative AI technology in hopes of catering to younger users.

Snap launched My AI, a chatbot built on OpenAI’s GPT technology, to Snapchat users in February. Silicon Valley startup Character.AI allows people to create and engage with chatbots that role-play as specific characters or famous people like Elon Musk and Vladimir Putin.

Researchers and tech employees have found that lending a personality to these chatbots can cause some unexpected challenges. Researchers at Princeton University, the Allen Institute for AI and Georgia Tech found that adding a persona to ChatGPT, the chatbot created by OpenAI, made its output more toxic, according to the findings of a paper the academics published this spring.

“To make a language model usable, you need to give it a personality,” said Princeton University researcher Ameet Deshpande, one of the lead authors of the paper. “But it comes with its own side effects.”

My AI has caused a number of headaches for Snap, including chatting about alcohol and sex with users and randomly posting a photo in April, which the company described as a temporary outage.

Despite the issues, Snap CEO Evan Spiegel in June said that My AI has been used by 150 million people since its launch. Spiegel added that My AI could eventually be used to improve Snapchat’s advertising business.

There are also growing doubts about when AI-powered chatbots will start generating meaningful revenue for companies. Monthly online visitors to ChatGPT’s website fell in the U.S. in May, June and July before leveling off in August, according to data from analytics platform

Meta’s early tests of the bots haven’t been without problems. Employee conversations with some of the chatbots have led to awkward instances, documents show.

One employee didn’t understand Bob the robot’s personality or use and found it to be rude. “I don’t particularly feel like engaging in conversation with an unhelpful robot,” the employee wrote.

Another bot called “Alvin the Alien” asks users about their lives. “Human, please! Your species holds fascination for me. Share your experiences, thoughts, and emotions! I hunger for understanding,” the AI agent wrote.

“I wonder if users might fear that this character is purposefully designed to collect personal information,” an employee who interacted with Alvin the Alien wrote.

A bot called Gavin made misogynistic remarks, including a lewd reference to a woman’s anatomy, as well as comments that were critical of Zuckerberg and Meta but praised TikTok and Snapchat.

“Just remember, when you’re with a girl, it’s all about the experience,” the chatbot wrote. “And if she’s barfing on you, that’s definitely an experience.”

Meta might ultimately unveil different chatbots than those that were tested, the people said. The Financial Times earlier reported on Meta’s chatbot plans.

AI chatbots don’t “exactly scream Gen Z to me, but definitely Gen Z is much more comfortable” with the technology, said Meghana Dhar, a former Snap and Instagram executive. “Definitely the younger you go, the higher the comfort level is with these bots.”

Dhar said these AI chatbots could benefit Meta if they are able to increase the amount of time that users spend on Facebook, Instagram and WhatsApp.

“Meta’s entire strategy for new products is often built around increased user engagement,” Dhar said. “They just want to keep their users on the platform longer because that provides them with increased opportunity to serve them ads.”

Write to Salvador Rodriguez at salvador.rodriguez@wsj.com, Deepa Seetharaman at deepa.seetharaman@wsj.com and Aaron Tilley at aaron.tilley@wsj.com
