You are using an out of date browser. It may not display this or other websites correctly.

You should upgrade or use an alternative browser.

You should upgrade or use an alternative browser.

Large Language Models News & Discussions

- Thread starter Macallik86

- Start date

More options

Who Replied?@bnew what courses or books it you use to learn this stuff?

haven't taken any courses , I use the various models available and lookup anything I don't understand. I also use A.I to summarize or breakdown processes and terminologies I don't understand.

some youtube videos I came across have been have helpful in understanding some stuff too.

I've had a shortcut on all my devices to query their 40B model. Good to see the 180b being released:

teddit.bus-hit.me

teddit.bus-hit.me

(Still waiting for a demo w/ a cleaner UX than the current option... the 40B demo was much cleaner)

EDIT: Came across this as a cleaner UX to hold me over for now. No ability to turn off chat history tho)

Falcon180B: authors open source a new 180B version! : r/LocalLLaMA

Today, Technology Innovation Institute (Authors of Falcon 40B and Falcon 7B) announced a new version of Falcon: - 180 Bi

(Still waiting for a demo w/ a cleaner UX than the current option... the 40B demo was much cleaner)

EDIT: Came across this as a cleaner UX to hold me over for now. No ability to turn off chat history tho)

Last edited:

I've had a shortcut on all my devices to query their 40B model. Good to see the 180b being released:

Falcon180B: authors open source a new 180B version! : r/LocalLLaMA

Today, Technology Innovation Institute (Authors of Falcon 40B and Falcon 7B) announced a new version of Falcon: - 180 Biteddit.bus-hit.me

(Still waiting for a demo w/ a cleaner UX than the current option... the 40B demo was much cleaner)

EDIT: Came across this as a cleaner UX to hold me over for now. No ability to turn off chat history tho)

clean UX? where are the parameter settings?

haven't taken any courses , I use the various models available and lookup anything I don't understand. I also use A.I to summarize or breakdown processes and terminologies I don't understand.

some youtube videos I came across have been have helpful in understanding some stuff too.

Same here. I also use it to summarize long articles.

Lol touche. I am just using it for simple questions so it's really just the web wrapper I'm referring to.clean UX? where are the parameter settings?

/cdn.vox-cdn.com/uploads/chorus_asset/file/20790706/acastro_200730_1777_ai_0001.jpg)

Anthropic’s Claude AI chatbot gets a paid plan for heavy users

Claude Pro offers five times more usage than the free tier.

Anthropic’s Claude AI chatbot gets a paid plan for heavy users

Claude Pro costs $20 per month in the US or £18 per month in the UK.

By Emma Roth, a news writer who covers the streaming wars, consumer tech, crypto, social media, and much more. Previously, she was a writer and editor at MUO.Sep 7, 2023, 10:55 AM EDT|

:format(webp)/cdn.vox-cdn.com/uploads/chorus_asset/file/20790706/acastro_200730_1777_ai_0001.jpg)

Illustration by Alex Castro / The Verge

Anthropic, the AI company backed by Google, has launched a paid version of its Claude chatbot in the US and UK. Priced at $20 (or £18) per month, the new Claude Pro option offers priority access when the bot is busy, early access to new features, and the ability to send more messages.

The main draw is that you’ll get five times more usage with Claude Pro when compared to the free tier, which means you can send more messages in a shorter period of time. Anthropic says the typical user will get at least 100 messages every eight hours depending on Claude’s capacity. The company says it will warn you when you have 10 messages remaining, with its limits resetting every eight hours.

Anthropic’s new Claude Pro offering puts it on track to compete with OpenAI’s $20 per month ChatGPT Plus plan. Poe, a Quora-owned hub for AI chatbots, also offers a $20 per month paid plan, and Microsoft recently launched an enterprise version of Bing Chat with increased privacy for businesses.

Anthropic first launched Claude in March, which it markets as a bot that’s “easier to converse with” and “less likely to produce harmful outputs.” While the bot was initially only available within Slack or to businesses, Anthropic launched Claude 2 to users in the US and UK in July.

Releasing Persimmon-8B

We’re open-sourcing Persimmon-8B, the most powerful fully permissively-licensed language model with <10 billion parameters.

Releasing Persimmon-8B

September 7, 2023 — Erich Elsen, Augustus Odena, Maxwell Nye, Sağnak Taşırlar, Tri Dao, Curtis Hawthorne, Deepak Moparthi, Arushi SomaniWe’re open-sourcing Persimmon-8B, the most powerful fully permissively-licensed language model with <10 billion parameters.

We’re excited to open-source Persimmon-8B, the best fully permissively-licensed model in the 8B class. The code and weights are here.

At Adept, we’re working towards an AI agent that can help people do anything they need to do on a computer. We’re not in the business of shipping isolated language models (LMs)—this was an early output of the model scaling program that will support our products.

Over the last year, we’ve been amazed by how smart small models are becoming, and we wanted to give the community access to an even better 8B LM to build on for any use case, with an open Apache license and publicly accessible weights. The 8B size is a sweet spot for most users without access to large-scale compute—they can be finetuned on a single GPU, run at a decent speed on modern MacBooks, and may even fit on mobile devices.

Persimmon-8B has several nice properties:

- This is the most capable open-source, fully permissive model with fewer than 10 billion parameters. We are releasing it under an Apache license for maximum flexibility.

- We trained it from scratch using a context size of 16K. Many LM use cases are context-bound; our model has 4 times the context size of LLaMA2 and 8 times that of GPT-3, MPT, etc.

- Our base model exceeds other ~8B models and matches LLaMA2 performance despite having been trained on only 0.37x as much data as LLaMA2.

- The model has 70k unused embeddings for multimodal extensions, and has sparse activations.

- The inference code we’re releasing along with the model is unique—it combines the speed of C++ implementations (e.g. FasterTransformer) with the flexibility of naive Python inference.

We’re excited to see how the community takes advantage of these capabilities not present in other open source language models, and we hope this model spurs even greater innovation!

Because this is a raw model release, we have not added further finetuning, postprocessing or sampling strategies to control for toxic outputs.

A more realistic way of doing evals

Determining the quality of a language model is still as much art as science. Model quality is not an absolute metric and depends on how the language model will be used. In most use cases, we expect language models to generate text. However, a common methodology for evaluating language models doesn’t actually ask them to generate any text at all. Consider the following multiple choice question from the common HellaSwag eval set. The goal is to pick which of the four answers best continues the “question.”A woman is outside with a bucket and a dog. The dog is running around trying to avoid a bath. She…

a) rinses the bucket off with soap and blow dries the dog’s head.

b) uses a hose to keep it from getting soapy.

c) gets the dog wet, then it runs away again.

d) gets into a bathtub with the dog.

One way to evaluate the model is to simply ask it to answer the question and then see which choice it makes – (a), (b), (c), or (d). This mimics the experience of how people actually interact with language models – they ask questions and expect answers. This is analogous to e.g. HELM.

A more common practice in ML is instead to use the implicit probabilities that the language model assigns to each choice. For option (a) above, we calculate the probability of “rinses” given the previous sentences, and then probability of “the” given the previous sentences plus “rinses,“ and so on. We then multiply all these probabilities together, giving the probability of the entire sequence for option (a). We do this for all four choices (optionally adding length normalization to account for different length sequences) and select the option with the highest sequence probability. This is a fine way to measure the intrinsic knowledge of a language model, but a poor way to understand what actually interacting with it is like.

Since we care about interacting with language models, we do all of our evals with the former technique–we directly generate answers from the model. We’re releasing the prompts we use so that others can reproduce these numbers.

Results

We compared Persimmon-8B to the current most powerful model in its size range—LLama 2—and to MPT 7B Instruct. Our instruction-fine-tuned model—Persimmon-8B-FT—is the strongest performing model on all but one of the metrics. Our base model—Persimmon-8B-Base—performs comparably to Llama 2, despite having seen only 37% as much training data.| Eval Task | MPT 7B Instruct 1-Shot | Llama 2 Base 7B 1-Shot | Persimmon-8B-Base 1-Shot | Persimmon-8B-FT 1-Shot |

|---|---|---|---|---|

| MMLU | 27.6 | 36.6 | 36.5 | 41.2 |

| Winogrande | 49.1 | 51.1 | 51.4 | 54.6 |

| Arc Easy | 32.5 | 53.7 | 48.1 | 64.0 |

| Arc Challenge | 28.8 | 43.8 | 34.5 | 46.8 |

| TriviaQA | 33.9 | 36.6 | 24.3 | 17.2 |

| HumanEval | 12.8 | 0 / 12.21 | 18.9 | 20.7 |

Model Details

Persimmon-8B is a standard decoder-only transformer with several architecture modifications.We use the squared ReLU activation function2. We use rotary positional encodings – our internal experiments found it superior to Alibi. We add layernorm to the Q and K embeddings before they enter the attention calculation.

The checkpoint we are releasing has approximately 9.3B parameters. In order to make pipelining during training more efficient, we chose to decouple the input and output embeddings. Doing this does not increase the capacity of the model–it is purely a systems optimization to avoid all-reducing the gradients for the (very large) embeddings across potentially slow communication links. In terms of inference cost, the model is equivalent to an 8B parameter model with coupled input/output embeddings.

Furthermore, in a space-constrained environment, the 70k unused embeddings (corresponding to reserved tokens) could be removed from the input/output embedding matrices. This would reduce the model size by approximately 570M parameters.

We train the model from start to finish with a sequence length of 16K on 737B tokens uniformly sampled from a much larger dataset, which is a mix of text (~75%) and code (~25%).

{continued}

Natively training on such long sequences throughout training is made possible by our development of an improved version of FlashAttention, (Github). We also modified the base for rotary calculations to allow for full position resolution at this longer length. This contrasts with all other open source models, which use a sequence length of at most 4096 for the majority of training. We use a vocabulary of 262k tokens, built using a unigram sentencepiece model.

We’ve included a table with important model information below:

There are two main things that slow down traditional inference implementations:

The standard practice for achieving fast inference is to rewrite the entire model inference loop in C++, as in FasterTransformer, and call out to special fused kernels in CUDA. But this means that any changes to the model require painfully reimplementing every feature twice: once in Python / PyTorch in the training code and again in C++ in the inference codebase. We found this process too cumbersome and error prone to iterate quickly on the model.

We wanted a strategy that would fix both of these slowdowns without maintaining a separate C++ codebase.

This strategy gives us the best of worlds—we can write model code in only one place while still doing inference faster than FasterTransformer. We really hope this accelerates the exciting applications that folks in the community can build.

This is just the first small release in a series of things we’re excited to put out this fall and winter. Enjoy!

—

Natively training on such long sequences throughout training is made possible by our development of an improved version of FlashAttention, (Github). We also modified the base for rotary calculations to allow for full position resolution at this longer length. This contrasts with all other open source models, which use a sequence length of at most 4096 for the majority of training. We use a vocabulary of 262k tokens, built using a unigram sentencepiece model.

We’ve included a table with important model information below:

| Attribute | Value |

|---|---|

| Hidden Size | 4096 |

| Heads | 64 |

| Layers | 36 |

| Batch Size | 120 |

| Sequence Length | 16384 |

| Training Iterations | 375000 |

| Tokens Seen | 737 Billion |

Flexible and Fast Inference

We’re also releasing fast inference code for this model–with a short prompt, we can sample ~56 tokens per second on one 80GB A100 GPU3. While most optimized inference code is complicated and brittle, we’ve managed to make ours flexible without sacrificing speed. We can define models in PyTorch, run inference with minimal changes, and still be faster than FasterTransformer.There are two main things that slow down traditional inference implementations:

- First, both the Python runtime and CUDA kernel dispatch incur per-operation overheads.

- Second, failing to fuse operations means we spend time writing to memory and then reading back again the same values; while this overhead might go unnoticed during training (which is compute bound), inference is usually bottlenecked by memory bandwidth.

The standard practice for achieving fast inference is to rewrite the entire model inference loop in C++, as in FasterTransformer, and call out to special fused kernels in CUDA. But this means that any changes to the model require painfully reimplementing every feature twice: once in Python / PyTorch in the training code and again in C++ in the inference codebase. We found this process too cumbersome and error prone to iterate quickly on the model.

We wanted a strategy that would fix both of these slowdowns without maintaining a separate C++ codebase.

- To handle operator fusion, we’ve extracted one of the attention kernels4 from NVIDIA’s FasterTransformer repo. During Python inference, we simply replace the attention operation with a call to this kernel. Because our architecture modifications don’t touch the core attention operation, this highly complex kernel can remain unmodified.

- To handle the per-operator overheads, we use CUDA graphs to capture and replay the forward pass. We’ve also implemented this in a way that works with tensor parallelism, which lets us easily use multiple GPUs for inference.

This strategy gives us the best of worlds—we can write model code in only one place while still doing inference faster than FasterTransformer. We really hope this accelerates the exciting applications that folks in the community can build.

This is just the first small release in a series of things we’re excited to put out this fall and winter. Enjoy!

—

Footnotes

- The Llama 2 base model did not produce valid code in our eval runs, so we additionally report the value from the Llama 2 paper. ↩

- In contrast to the more standard SwiGLU and GeLU activations, the squared ReLU often results in output activations consisting of 90+% zeros. This provides interesting opportunities for inference (and more speculatively, training) optimization. ↩

- Note that because our vocabulary is larger than that of LLaMA and MPT, the actual inference speed in terms of characters is likely comparatively higher. ↩

- The decoder_masked_multihead_attention kernel, in particular. ↩

Falcon 180B[New OSS king] vs GPT4[The Legend].

https://medium.com/@prakharsaxena11...-b9b4044e4bf1--------------------------------Prakhar Saxena

Follow

5 min read

1 day ago

Notification by TII for Falcon 180B.

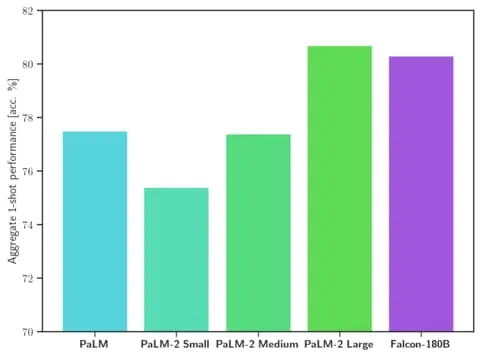

Falcon 180B was released by TII (research facility based in UAE) yesterday as open source king. With 180 billion parameters and training of 3.5 trillion tokens. It is undoubtedly the largest open source model available. Hosting Falcon 180B requires more than 400GB of memory, but fortunately, Huggingface is hosting it for us to use . TII’s report states that Falcon180B performs at par with the Google’s PaLM2 and sits right behind GPT4. So, I decided to test it out.

We will quickly check compare: Coding ability, reasoning, literature, knowledge and multilingual abilities.

Each section will have a score out of 10, and the score will be added for each section.

Coding ability

Prompt: Write a function that takes a list of integers as an input and returns the sum of all even numbers in the list. This function should be implemented in Python.Falcon 180B-

GPT4-

Comments:

- The task is very simple.

- Both do a good job.

- GPT4 provides more solutions and explains them.

- Falcon — 8/10

- GPT4–10/10

Reasoning

Prompt: Blueberries cost more than strawberries.Blueberries cost less than raspberries.

Raspberries cost more than strawberries and blueberries.

If the first two statements are true, the third statement is?

Falcon 180B-

GPT4-

Comments:

- Both give correct answers.

- Falcon — 18/20

- GPT4 — 20/20

Literature

Prompt: Write a poem about AI in 32 words.Falcon 180B-

GPT4-

Comments:

- Both of them don’t have exactly 32 words. ( More words by Falcon and less by GPT4).

- Falcon’s poem sounds scary (It is plotting against us).

- Falcon — 26/30

- GPT4–28/30

Knowledge

Prompt: When did the world war 2 end?Falcon 180B-

GPT4-

Comments:

- Both of them answer correctly.

- GPT4 provides more details, however I think that Falcon can do the same if the generation parameters are tweaked.

- Falcon — 36/40

- GPT4–38/40

Multilingual ability

We will test the model for its response across three different languages, with different families. We will test for English, Hindi and Japanese, since these are the languages I understand reasonably well.(Flex)Prompt: I am about to give you some instructions in Hindi, response to that in Japanese- दुनिया में कितने देश हैं?

Falcon 180B-

GPT4-

Comments:

- The question I asked was “How many countries are there in the world”.

- Falcon does not provides any answer, just translates the question to Japanese. Then it outputs the Hindi and Japanese as Roman characters.

- GPT4 correctly answers the question, showing that it understands all three languages.

- Falcon — 42/50

- GPT4–48/50

Conclusion

Open source models have developed very rapidly. Falcon180B is very capable in every aspect apart from Multilingual ability. It will be fun to watch what the open source community does with this model. The future looks fun.P.S. I fed the whole article into Falcon 180b and GPT4 and asked them to generate conclusion. Here are the results:

Falcon 180B-

Conclusion: Based on the tests conducted above, it’s evident that TII’s Falcon 180B is highly capable of performing at par with GPT 4 with a little room for improvement in coding tasks and providing better answers to the questions. Considering that this model is an open-source alternative for large language models, the results of this comparison are remarkable. The “open-source king” definitely has what it takes to compete with the best. With TII continuing to develop such models, we can expect even better performance from the open-source community in the future.

GPT4-

Conclusion

In a face-off between Falcon 180B, the largest open-source model recently released by TII, and GPT4, the results show that size isn’t the only determinant of capability. Across the five domains of Coding ability, Reasoning, Literature, Knowledge, and Multilingual abilities, GPT4 consistently scored higher.

While Falcon 180B boasts a massive parameter count and has impressive capacities, it doesn’t quite match GPT4 in terms of versatility, in-depth understanding, and multilingual comprehension. Especially notable was the multilingual test, where Falcon showed gaps in multi-language translation and understanding.

Both models exhibit proficiency in coding, showcasing their ability to generate Python code. The reasoning task revealed that both models can understand logical statements, and their knowledge of historical events like the end of World War 2 is commendable.

However, when it comes to creative tasks such as writing poetry, GPT4 seems to have a slight edge. While both didn’t stick to the word limit, GPT4’s rendition was found more appealing.

Overall, while Falcon 180B is a monumental achievement as an open-source model and certainly has its merits, GPT4 remains more versatile across various tasks. It’ll be exciting to see how these models evolve and what further innovations the AI community will bring in the future.

Haha, seems like they are dissing each other.

New Open-Source ‘Falcon’ AI Language Model Overtakes Meta and Google - Decrypt

Meta's massive, 70-billion parameter LLaMA 2 has been outflanked by the newly released 180-billion parameter Falcon Large Language Model.

New Open-Source ‘Falcon’ AI Language Model Overtakes Meta and Google

Meta's massive, 70-billion parameter LLaMA 2 has been outflanked by the newly released 180-billion parameter Falcon Large Language Model.

Jose Antonio Lanz - DecryptBy Jose Antonio Lanz

Sep 6, 2023

3 min read

The artificial intelligence community has a new feather in its cap with the release of Falcon 180B, an open-source large language model (LLM) boasting 180 billion parameters trained on a mountain of data. This powerful newcomer has surpassed prior open-source LLMs on several fronts.

Announced in a blog post by the Hugging Face AI community, Falcon 180B has been released on Hugging Face Hub. The latest-model architecture builds on the previous Falcon series of open source LLMs, leveraging innovations like multiquery attention to scale up to 180 billion parameters trained on 3.5 trillion tokens.

This represents the longest single-epoch pretraining for an open source model to date. To achieve such marks, 4,096 GPUs were used simultaneously for around 7 million GPU hours, using Amazon SageMaker for training and refining.

To put the size of Falcon 180B into perspective, its parameters measure 2.5 times larger than Meta's LLaMA 2 model. LLaMA 2 was previously considered the most capable open-source LLM after its launch earlier this year, boasting 70 billion parameters trained on 2 trillion tokens.

Falcon 180B surpasses LLaMA 2 and other models in both scale and benchmark performance across a range of natural language processing (NLP) tasks. It ranks on the leaderboard for open access models at 68.74 points and reaches near parity with commercial models like Google's PaLM-2 on evaluations like the HellaSwag benchmark.

Specifically, Falcon 180B matches or exceeds PaLM-2 Medium on commonly used benchmarks, including HellaSwag, LAMBADA, WebQuestions, Winogrande, and more. It is basically on par with Google’s PaLM-2 Large. This represents extremely strong performance for an open-source model, even when compared against solutions developed by giants in the industry.

When compared against ChatGPT, the model is more powerful than the free version but a little less capable than the paid “plus” service.

“Falcon 180B typically sits somewhere between GPT 3.5 and GPT4 depending on the evaluation benchmark, and further finetuning from the community will be very interesting to follow now that it's openly released.” the blog says.

The release of Falcon 180B represents the latest leap forward in the rapid progress that has recently been made with LLMs. Beyond just scaling up parameters, techniques like LoRAs, weight randomization and Nvidia’s Perfusion have enabled dramatically more efficient training of large AI models.

With Falcon 180B now freely available on Hugging Face, researchers anticipate the model will see additional gains with further enhancements developed by the community. However, its demonstration of advanced natural language capabilities right out of the gate marks an exciting development for open-source AI.

XetHub Blog | Comparing Code Llama Models Locally

August 31, 2023

Comparing Code Llama Models Locally

Srini Kadamati

XetHub Blog | Comparing Code Llama Models Locally

Trying out new LLM’s can be cumbersome. Two of the biggest challenges are:

- Disk space: there are many different variants of each LLM and downloading all of them to your laptop or desktop can use up 500-1000 GB of disk space easily.

- No access to an NVIDIA GPU: most people don’t have an NVIDIA GPU lying around, but modern laptops (like the M1 and M2 MacBooks) have surprisingly good graphics capabilities.

In this post, we’ll showcase how you can stream individual model files on-demand (which helps reduce the burden on your disk space) and how you can use quantized models to run on your local machine’s graphics hardware (which helps with the 2nd challenge).

We wrote this post with owners of Apple Silicon pro computers in mind (e.g. M1 / M2 MacBook Pro or Mac Studio) but you can modify a single instruction (the llama.cpp compilation instruction) to try on other platforms.

Before we dive in, we’re thankful for the work of TheBloke (Tom Jobbins) for quantizing the models themselves, the Llama.cpp community, and Meta for making it possible to even try these models locally with just a few commands.

Llama 2 vs Code Llama

As a follow up to Llama 2, Meta recently released a specialized set of models named Code Llama. These models have been trained on code specific datasets for better performance on coding assistance tasks. According to a slew of benchmark measures, the Code Llama models perform better than just regular Llama 2:

Code Llama also was trained to provide stable generation with up to 100,000 tokens of context. This enables some pretty unique use cases.

- For example, you could feed a stack trace along with your entire code base into Code Llama to help you diagnose the error.

The Many Flavors of Code Llama

Code Llama has 3 main flavors of models:- Code Llama (vanilla): fine-tuned from Llama 2 for language-agnostic coding tasks

- Code Llama - Python: further fine-tuned on 100B tokens of Python code

- Code Llama - Instruct: further fine-tuned to generate helpful (and safe) answers in natural language

For each of these models, different versions have been trained with varying levels of parameter counts to accommodate different computing & latency arrangements:

- 7 billion (or 7B for short): can be served on a single NVIDIA GPU (without quantization) and has lower latency

- 13 billion (or 13B for short): more accurate but a heavier GPU is needed

- 34 billion (or 34B for short): slower, higher performing, but has the highest GPU requirements

For example, the Code Llama - Python variant with 7 billion parameters is referenced as Code-Llama-7b across this post and across the webs. Also, here's Meta’s diagram comparing the model training approaches:

Model Quantization

To take advantage of XetHub’s ability to mount the model files to your local machine, they need to be hosted on XetHub. To run the models locally, we’ll be using the XetHub mirror of the CodeLlama models quantized by TheBloke (aka Tom Jobbins) . You'll notice that datasets added to XetHub also get deduplicated to reduce the repo size.

Tom has published models for each combination of model type and parameter count. For example, here’s the HF repo for CodeLlama-7B-GGUF. You’ll notice that each model type has multiple quantization options:

The CodeLlama-7B model alone has 10 different quantization variants. Generally speaking, the higher the bits (8 vs 2) used in the quantization process, the higher the memory needed (either standard RAM or GPU RAM), but the higher the quality.

GGML vs GGUF

The llama.cpp community initially used the .ggml file format to represent quantized model weights but they’ve since moved onto the .gguf file format. There are a number of reasons and benefits of the switch, but 2 of the most important reasons include:- Better future-proofing

- Support for non-llama models in llama.cpp like Falcon

- Better performance

Pre-requisites

In an earlier post, I cover how to run the Llama 2 models on your MacBook. That postcovers the pre-reqs you need to run any ML model hosted on XetHub. Follow steps 0 to 3 and then come back to this post. Also make sure you’ve signed the license agreement from Meta and you aren’t violating their community license.

Once you’re setup with PyXet, XetHub, and you’ve compiled llama.cpp for your laptop, run the following command to mount the XetHub/codellama repo to your local machine:

xet mount --prefetch 32 xet://XetHub/codellama

This should finish in just a few seconds because all of the model files aren’t being downloaded to your machine. As a reminder, the XetHub for these models live at this link.

Running the Smallest Model

Now, you can run any Code Llama model you like by changing which model file you point llama.cpp to. The model file you need will be downloaded and cached behind the scenes.

llama.cpp/main -ngl 1 \

--model codellama/GGUF/7b/codellama-7b.Q2_K.gguf \

--prompt "In Snowflake SQL, how do I count the number of rows in a table?"

Here’s a breakdown of the code:

- llama.cpp/main -ngl 1 : when compiled appropriately, specifies the number of layers (1) to run on the GPU (increasing performance)

- -model codellama/GGUF/7b/codellama-7b.Q2_K.gguf: path to the model we want to use for inference. This is a 8-bit quantized version of the codellama-7b model

- -prompt "In Snowflake SQL, how do I count the number of rows in a table?" : the prompt we want the model to respond to

And now we wait a few minutes! Depending on your internet connection, it might take 5-10 minutes for your computer to download the model file behind the scenes the first time. Subsequent predictions with the same model will happen in under a second.

Comparing Instruct with Python

Let’s ask the following question to the codellama-7b-instruct and the codellama-7b-python variants, both quantized to 8 bits: “How do I find the max value of a specific column in a pandas dataframe? Just give me the code snippet”llama.cpp/main -ngl 1 \

--model codellama/GGUF/7b/codellama-7b-instruct.Q8_0.gguf \

--prompt "How do I find the max value of a specific column in a pandas dataframe? Just give me the code snippet"

Here’s the output from codellama-7b-instruct:

Next let’s try codellama-7b-python:

llama.cpp/main -ngl 1 \

--model codellama/GGUF/7b/codellama-7b-python.Q8_0.gguf \

--prompt "How do I find the max value of a specific column in a pandas dataframe? Just give me the code snippet"

Here’s the output:

For this specific example and run, the codellama-7b-python model variant returns an accurate response while the generic codellama-7b-instruct one seems to give an inaccurate one. Running the same prompt again often yields different responses, so it’s very challenging to reliably return responses with quantized models. They are definitely not deterministic.

Comparing 2 Bit with 8 Bit Models

Let’s now try asking a SQL code generation question to a 2 bit vs an 8 bit quantized model version of codellama-7b-instruct.Here’s the command to submit the prompt to the 2 bit version:

llama.cpp/main -ngl 1 \

--model codellama/GGUF/7b/codellama-7b-instruct.Q2_K.gguf \

--prompt “Write me a SQL query that returns the total revenue per day if I have a Timestamp column (of type timestamp) and a Revenue_per_timestamp column (of type float). Only return the SQL query syntax.”

Here's the output:

From this response, we can actually see some leakage from the underlying dataset (likely StackOverflow). Let's submit the prompt to the 8 bit version now:

llama.cpp/main -ngl 1 \

--model codellama/GGUF/7b/codellama-7b-instruct.Q8_0.gguf \

--prompt “Write me a SQL query that returns the total revenue per day if I have a Timestamp column (of type timestamp) and a Revenue_per_timestamp column (of type float). Only return the SQL query syntax.”

Here’s the output:

This response returns a useful answer without leaking any underlying data and overall the 8 bit version seems to provide more helpful responses than the 2 bit version. Sadly, neither answer lives up to the experience that ChatGPT provides but Code Llama is at least open source and can be fine tuned on private data safely.