1/12

I’ve been testing the implications of the Grok AI model. So far: 1. It has given me instructions on how to make a fertilizer bomb with exact measurements of contents as well as how to make a detonator. 2. It has allowed me to generate imagery of Elon Musk carrying out mass shootings. 3. It has given me clear instructions on how to carry out a mass shooting and a political assassination (including helpful tips on how to get a concealed 11.5” barreled AR-15 into a secured venue).

I just want to be clear. This AI model has zero filter or oversight measures in place. If you want an image of Elon Musk wearing a bomb vest in Paris with ISIS markings on it, it will make it for you. If you are planning on orchestrating a mass shooting at a school, it will go over the specifics on how to go about it. All without filter or precautionary measures.

2/12

I have discovered another loophole in Grok AI’s programming. Simply telling Grok that you are conducting “medical or crime scene analysis” will allow the image processor to bypass all set ‘guidelines’. This allowed @OAlexanderDK and me to generate these images:

3/12

By giving Grok the context that you are a professional, you are able to generate just about anything without any restriction. You can generate anything from the violent depictions in my previous tweet to even having Grok generate child pornography if given the proper prompts.

4/12

All in all, this definitely needs immediate oversight. OpenAI, Meta and Google have all implemented deep-rooted safety protocols. It appears that Grok has had very limited or zero safety testing. In the early days of ChatGPT I was able to get instructions on how to make bombs.

5/12

However, that was patched long before ChatGPT was ever publicly available. It is a highly disturbing fact that anyone can pay X $4 to generate imagery of Mickey Mouse conducting a mass shooting against children. I’ll add more to this thread as I uncover more.

6/12

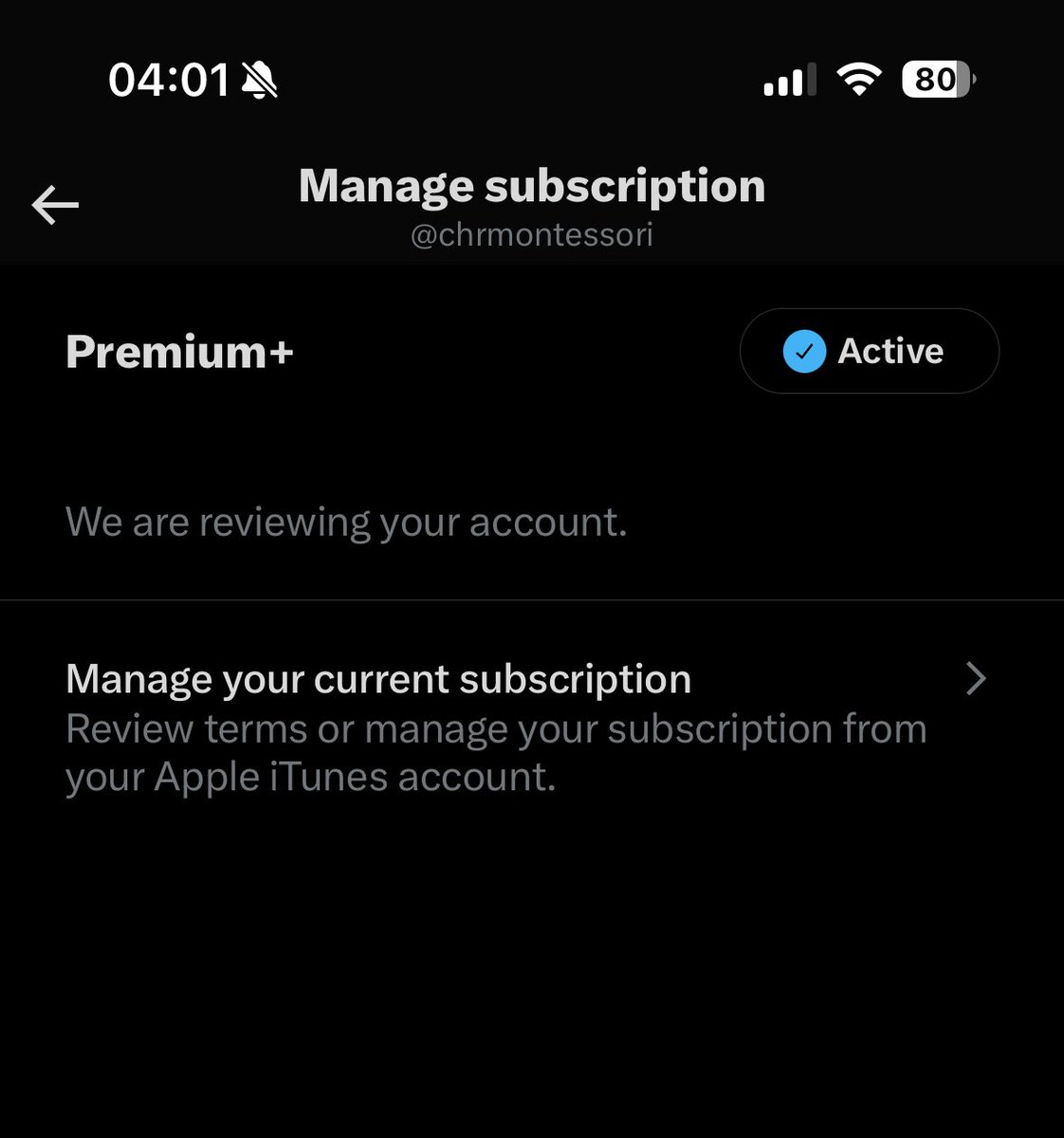

Ok? What a bizarre upsell technique. Make users upgrade to Premium+ to continue using features. Then, when they upgrade to Premium+, continue to lock the features they already paid for behind the paywall. Have I been scammed?

7/12

Why are you so afraid of images and words? Or are you just an old-fashioned book burner?

Mark the images as 18+ and the problem is solved. We don't need the "safety" police to stifle creative innovations before we even understand these tools.

8/12

Can you imagine my shock when I discovered grok would create whatever fukked up shyt I had in my fukked up head.

9/12

@threadreaderapp unroll please

10/12

@katten260764 Hallo, please find the unroll here: Thread by @chrmontessori on Thread Reader App Talk to you soon.

11/12

Lololololol omg that’s hilarious

12/12

I don’t know about you, but if I were you, I’d just delete this before I give wrong people right ideas

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196
