
Eureka! NVIDIA Research Breakthrough Puts New Spin on Robot Learning

- Thread starter bnew


Google DeepMind Announces LLM-Based Robot Controller RT-2


Oct 17, 2023 · 3 min read

by Anthony Alford, Senior Director, Development at Genesys Cloud Services

Google DeepMind recently announced Robotics Transformer 2 (RT-2), a vision-language-action (VLA) AI model for controlling robots. RT-2 uses a fine-tuned LLM to output motion control commands. It can perform tasks not explicitly included in its training data and improves on baseline models by up to 3x on emergent skill evaluations.

DeepMind trained two variants of RT-2 using two different underlying vision-language foundation models: a 12B-parameter version based on PaLM-E and a 55B-parameter version based on PaLI-X. The LLM is co-fine-tuned on a mix of general vision-language datasets and robot-specific data. The model learns to output a vector of robot motion commands, which is treated simply as a string of integers: in effect, a new language that the model learns. The final model accepts an image of the robot's workspace and a user command such as "pick up the bag about to fall off the table," and from these generates motion commands to perform the task. According to DeepMind,

Not only does RT-2 show how advances in AI are cascading rapidly into robotics, it shows enormous promise for more general-purpose robots. While there is still a tremendous amount of work to be done to enable helpful robots in human-centered environments, RT-2 shows us an exciting future for robotics just within grasp.
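To make the "string of integers" idea concrete, here is a minimal Python sketch of how a continuous action vector could be discretized into integer tokens and decoded back. It assumes 256 uniform bins per dimension (the bin count reported for RT-2) and a known min/max range per dimension; the ranges, the 3-D example action, and the function names are hypothetical illustrations, not DeepMind's code.

```python
from typing import List, Sequence

NUM_BINS = 256  # assumption: uniform bins per action dimension


def tokenize_action(action: Sequence[float], low: Sequence[float],
                    high: Sequence[float], num_bins: int = NUM_BINS) -> List[int]:
    """Map each continuous dimension to an integer bin index in [0, num_bins - 1]."""
    tokens = []
    for a, lo, hi in zip(action, low, high):
        a = min(max(a, lo), hi)                  # clip to the assumed range
        frac = (a - lo) / (hi - lo)              # normalize to [0, 1]
        tokens.append(min(int(frac * num_bins), num_bins - 1))  # top edge -> last bin
    return tokens


def action_to_text(action: Sequence[float], low: Sequence[float],
                   high: Sequence[float]) -> str:
    """Serialize bin indices as the space-separated integer string a model would emit."""
    return " ".join(str(t) for t in tokenize_action(action, low, high))


def text_to_action(text: str, low: Sequence[float], high: Sequence[float],
                   num_bins: int = NUM_BINS) -> List[float]:
    """Decode integer tokens back to the center of each bin."""
    return [lo + (int(tok) + 0.5) / num_bins * (hi - lo)
            for tok, lo, hi in zip(text.split(), low, high)]


# Hypothetical 3-D end-effector displacement, each dimension normalized to [-1, 1].
low, high = [-1.0] * 3, [1.0] * 3
text = action_to_text([-1.0, 0.0, 1.0], low, high)
print(text)                            # 0 128 255
print(text_to_action(text, low, high)) # [-0.99609375, 0.00390625, 0.99609375]
```

Decoding returns bin centers, so a round trip loses at most half a bin width ((high - low) / 512 here). That quantization is the price of letting a language model emit actions as ordinary tokens.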

Google Robotics and DeepMind have published several systems that use LLMs for robot control. In 2022, InfoQ covered Google's SayCan, which uses an LLM to generate a high-level action plan for a robot, and Code-as-Policies, which uses an LLM to generate Python code for executing robot control. Both of these use a text-only LLM to process user input, with the vision component handled by separate robot modules. Earlier this year, InfoQ covered Google's PaLM-E, which handles multimodal input data from robotic sensors and outputs a series of high-level action steps.

RT-2 builds on a previous implementation, RT-1. The key idea of the RT series is to train a model to directly output robot commands, in contrast to previous efforts which output higher-level abstractions of motion. Both RT-2 and RT-1 accept as input an image and a text description of a task. However, while RT-1 used a pipeline of distinct vision modules to generate visual tokens to input to an LLM, RT-2 uses a single vision-language model such as PaLM-E.

DeepMind evaluated RT-2 in over 6,000 trials. In particular, the researchers were interested in its emergent capabilities: that is, its ability to perform tasks that are not present in the robot-specific training data but that emerge from its vision-language pre-training. The team tested RT-2 on three task categories: symbol understanding, reasoning, and human recognition. Compared to the best baseline, RT-2 achieved "more than 3x average success rate." However, the model did not acquire any physical skills that were not included in the robot training data.

In a Hacker News discussion about the work, one user commented:

It does seem like this work (and a lot of robot learning works) are still stuck on position/velocity control and not impedance control. Which is essentially output where to go, either closed-loop with a controller or open-loop with a motion planner. This seems to dramatically lower the data requirement but it feels like a fundamental limit to what task we can accomplish. The reason robot manipulation is hard is because we need to take into account not just what's happening in the world but also how our interaction alters it and how we need to react to that.
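The commenter's distinction can be illustrated with a textbook 1-D example that is unrelated to RT-2's actual controller. An impedance controller commands a force from a virtual spring-damper, F = K(x_des - x) + D(v_des - v), so the force it exerts on an unexpected obstacle is governed by the chosen stiffness K; a stiff, high-gain loop approximates pure position control and pushes much harder. The sketch below assumes arbitrary illustration values (unit mass, a wall modeled as a 10^4 N/m spring, a target 0.5 m beyond the wall) and is not drawn from the RT-2 work.

```python
def impedance_force(x: float, v: float, x_des: float, stiffness: float,
                    damping: float, v_des: float = 0.0) -> float:
    """Virtual spring-damper law: F = K (x_des - x) + D (v_des - v)."""
    return stiffness * (x_des - x) + damping * (v_des - v)


def settle_against_wall(stiffness: float, damping: float = 30.0, x_des: float = 1.0,
                        wall: float = 0.5, wall_k: float = 1e4, mass: float = 1.0,
                        dt: float = 1e-3, steps: int = 5000):
    """Simulate a 1-D point mass commanded toward x_des, blocked by a wall at `wall`.

    Returns (final position, final contact force). The wall is modeled as a very
    stiff spring; every number here is an arbitrary illustration value.
    """
    x, v, contact = 0.0, 0.0, 0.0
    for _ in range(steps):
        f_ctrl = impedance_force(x, v, x_des, stiffness, damping)
        contact = wall_k * (x - wall) if x > wall else 0.0  # wall pushes back only when penetrated
        a = (f_ctrl - contact) / mass
        v += a * dt  # semi-implicit Euler integration
        x += v * dt
    return x, contact


soft_x, soft_f = settle_against_wall(stiffness=200.0)     # compliant impedance setting
stiff_x, stiff_f = settle_against_wall(stiffness=5000.0)  # stiff, position-control-like gain
print(f"compliant: contact force ~ {soft_f:.0f} N")
print(f"stiff:     contact force ~ {stiff_f:.0f} N")
```

With these settings the compliant case settles at roughly 98 N of contact force while the stiff case presses with roughly 1,667 N. Choosing K is how an impedance controller trades tracking accuracy for gentleness when the world does not match the plan, which is the interaction the commenter argues position/velocity outputs leave out.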

Although RT-2 has not been open-sourced, the code and data for RT-1 have been.

OpenAI-backed startup Figure teases new humanoid robot ‘Figure 02’

Carl Franzen (@carlfranzen)

August 2, 2024 2:14 PM

The race to get AI-driven humanoid robots into homes and workplaces around the world took a new twist today when Figure, a company backed by OpenAI and others to the tune of $675 million in its last funding round in February, published a trailer video for its newest model, Figure 02, along with the date August 6, 2024.

As you’ll see in the video, it is short on specifics but heavy on vibes and close-ups. It shows views of what appear to be robotic joints and limbs, some interesting, possibly flexible mesh designs for the robot body, and labels for torque ratings up to 150 Nm (newton-meters, or “the torque produced by a force of one newton applied perpendicularly to the end of a one-meter long lever arm,” according to Google’s AI Overview) and “ROM,” which I take to mean “range of motion,” up to 195 degrees (out of a total 360).
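The quoted definition implies the relation τ = r · F · sin θ, so a torque rating can be read as the force available at a given lever length. A minimal sketch: the 0.3 m lever arm is a hypothetical value, since the video does not state Figure 02's limb dimensions, and the kilogram-force conversion assumes standard gravity.

```python
import math

STANDARD_GRAVITY = 9.80665  # m/s^2, used only for the kilogram-force conversion


def torque_nm(force_n: float, lever_m: float, angle_deg: float = 90.0) -> float:
    """Torque from a force applied at angle_deg to a lever arm: tau = r * F * sin(theta)."""
    return lever_m * force_n * math.sin(math.radians(angle_deg))


def force_at_lever(torque: float, lever_m: float) -> float:
    """Force (N) available at the end of a lever when applied perpendicularly: F = tau / r."""
    return torque / lever_m


print(torque_nm(1.0, 1.0))          # 1.0, the definition of one newton-meter
force = force_at_lever(150.0, 0.3)  # hypothetical 0.3 m lever arm
print(round(force), "N, roughly", round(force / STANDARD_GRAVITY), "kg-force")  # 500 N, roughly 51 kg-force
```

The same 150 Nm rating yields less force at a longer lever, so the useful payload at a hand depends on arm geometry, which the trailer does not reveal.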

Founder Brett Adcock also posted on his personal X/Twitter account that Figure 02 was “the most advanced humanoid robot on the planet.”

Figure 02 is the most advanced humanoid robot on the planet

— Brett Adcock (@adcock_brett) August 2, 2024

Backed by big names in tech and AI

Adcock, an entrepreneur who previously founded the far-out startup Archer Aviation and the hiring marketplace Vettery, established Figure AI in 2022.

In March 2023, Figure emerged from stealth mode to introduce Figure 01, a general-purpose humanoid robot designed to address global labor shortages by performing tasks in various industries such as manufacturing, logistics, warehousing, and retail.

With a team of 40 industry experts, including Jerry Pratt as CTO, Figure AI completed the humanoid’s full-scale build in just six months. Adcock envisions the robots enhancing productivity and safety by taking on unsafe and undesirable jobs, ultimately contributing to a more automated and efficient future, while maintaining that they will never be weaponized.

The company, which in addition to OpenAI counts Nvidia, Microsoft, Intel Capital and Bezos Expeditions (Amazon founder Jeff Bezos’s private fund) among its investors and backers, inked a deal with BMW Manufacturing earlier this year. It also showed off impressive integrations of OpenAI’s GPT-4V (vision) model inside its Figure 01 robot, before the release of OpenAI’s new flagship models GPT-4o and GPT-4o mini.

Presumably, Figure 02 will have one of these newer models from OpenAI, one of the leading names in AI, guiding its movements and interactions.

Competition to crack humanoid robotics intensifies

Figure has been a little quiet of late, even as other companies debut and show off designs for AI-infused humanoid robots that they hope will assist humans in settings such as warehouses, factories, industrial plants, fulfillment centers, retirement homes, retail outlets, healthcare facilities and, of course, private homes.

Though humanoid robots have long been a dream in sci-fi stories, their debut as commercial products has been slow going and marred by expensive designs confined primarily to research settings. But that’s changing thanks to generative AI and more specifically, large language models (LLMs) and multimodal AI models that can quickly analyze live video and audio inputs and respond with humanlike audio and movements of their own.

Indeed, billionaire multi-company owner Elon Musk recently stated, with his typical boisterous bravado and ambitious goal setting, that there was a market for more than 10 billion humanoid robots on Earth (more than one for every person). He hopes to command that market, or at least take a slice of it, with his electric vehicle and AI company Tesla, which is making its own humanoid robot, Tesla Optimus, to rival Figure’s.

Elon Musk says he expects there to be 10 billion humanoid robots, which will be so profound that it will mark a fundamental milestone in civilization pic.twitter.com/1OELtSPFoU

— Tsarathustra (@tsarnick) July 29, 2024

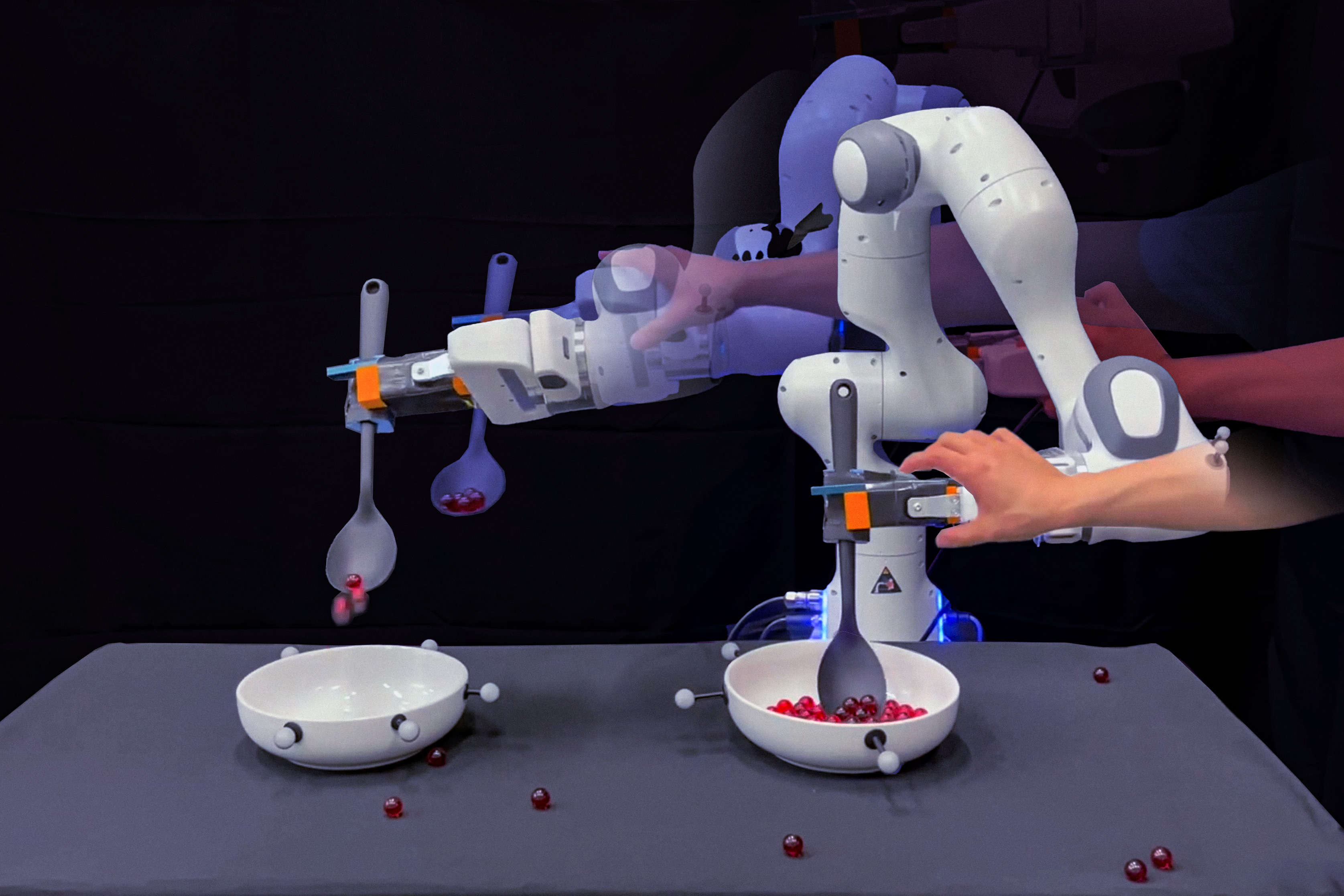

Moreover, through its Project GR00T effort, Nvidia showed off new improvements to training the AI that controls humanoid robots, using Apple Vision Pro headsets worn by human teleoperators to guide the robots through correct motions:

Exciting updates on Project GR00T! We discover a systematic way to scale up robot data, tackling the most painful pain point in robotics. The idea is simple: human collects demonstration on a real robot, and we multiply that data 1000x or more in simulation. Let’s break it down:… pic.twitter.com/8mUqCW8YDX

— Jim Fan (@DrJimFan) July 30, 2024

Before that, early humanoid robotics pioneer Boston Dynamics previewed its own updated version of its Atlas humanoid robot with electric motors replacing its hydraulic actuators, presumably making for a cheaper, quieter, more reliable and sturdier bot.

Thus, the competition in the sector appears to be intensifying. But with such big backers and forward momentum, Figure seems well-poised to continue advancing its own efforts in the space.

1/5

Google DeepMind has developed an AI-powered robot that's ready to play table tennis.

This is the first agent to achieve amateur human-level performance in the sport.

Curious about how it works? Let's dive in.

#AI #TableTennis #Robotics #Innovation #OpenAI #GPT-4o

2/5

Mind-boggling stuff. AI is evolving rapidly. Fascinating yet unnerving.

3/5

AI Agents will change everything! Devin AI is at the forefront here.

Guess what, it created and marketed the first product in the world without human intervention. Want some exposure to this?

#devin from @1stSolanaAICoin

Mcap today: ~USD 2m


5/5

I'd get one to play ping-pong with.

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196


1/1

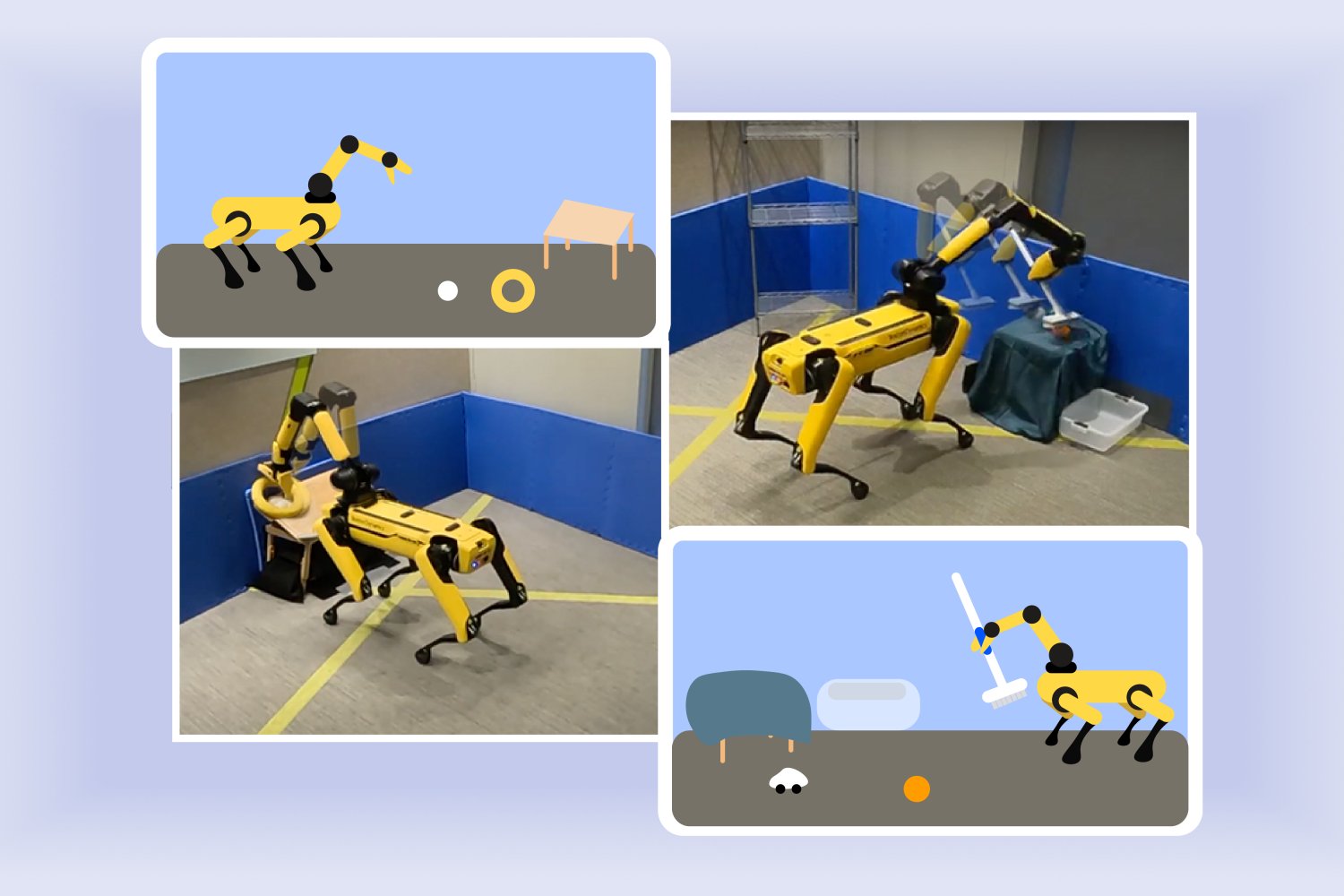

Researchers at MIT's CSAIL and The AI Institute have created a new algorithm called "Estimate, Extrapolate, and Situate" (EES). This algorithm helps robots adapt to different environments by enhancing their ability to learn autonomously.

The EES algorithm improves robot efficiency in settings like factories, homes, hospitals, and coffee shops by using a vision system to monitor surroundings and assist in task performance.

The EES algorithm assesses how well a robot is performing a task and decides if more practice is needed. It was tested on Boston Dynamics's Spot robot at The AI Institute, where it successfully completed tasks after a few hours of practice.

For example, the robot learned to place a ball and ring on a slanted table in about three hours and improved its toy-sweeping skills within two hours.

#MIT #algorithm #ees #robot #TechNews #AI


1/8

The phrase "practice makes perfect" is great advice for humans — and also a helpful maxim for robots

Instead of requiring a human expert to guide such improvement, MIT & The AI Institute’s "Estimate, Extrapolate, and Situate" (EES) algorithm enables these machines to practice on their own, potentially helping them improve at useful tasks in factories, households, and hospitals. Article: "Helping robots practice skills independently to adapt to unfamiliar environments"

2/8

EES first works w/ a vision system that locates & tracks the machine’s surroundings. Then, the algorithm estimates how reliably the robot executes an action & whether it should practice more.

3/8

EES forecasts how well the robot could perform the overall task if it refines that skill & finally it practices. The vision system then checks if that skill was done correctly after each attempt.

4/8

This algorithm could come in handy in places like a hospital, factory, house, or coffee shop. For example, if you wanted a robot to clean up your living room, it would need help practicing skills like sweeping.

EES could help that robot improve w/o human intervention, using only a few practice trials.

5/8

EES's knack for efficient learning was evident when implemented on Boston Dynamics’ Spot quadruped during research trials at The AI Institute.

In one demo, the robot learned how to securely place a ball and ring on a slanted table in ~3 hours.

6/8

In another, the algorithm guided the machine to improve at sweeping toys into a bin w/i about 2 hours.

Both results appear to be an upgrade from previous methods, which would have likely taken >10 hours per task.

7/8

Featured authors in article: Nishanth Kumar (@nishanthkumar23), Tom Silver (@tomssilver), Tomás Lozano-Pérez, and Leslie Pack Kaelbling

Paper: Practice Makes Perfect: Planning to Learn Skill Parameter Policies

MIT research group: @MITLIS_Lab

8/8

Is there transfer learning? Each robot’s optimisation for the same task performance may be different; how do they share experiences and optimise learning?


MIT's Algorithm for Self-Training Robots

MIT researchers have developed a groundbreaking algorithm called "Estimate, Extrapolate, and Situate" (EES) that enables robots to train themselves, marking a...

Engineering household robots to have a little common sense

MIT engineers aim to give robots a bit of common sense when faced with situations that push them off their trained path, so they can self-correct after missteps and carry on with their chores. The team’s method connects robot motion data with the common sense knowledge of large language models...

Helping robots practice skills independently to adapt to unfamiliar environments

A robot specializes its skills using parameter policy learning, where the machine can rapidly specialize at specific, smaller actions within a long-horizon task. The MIT CSAIL algorithm enables autonomous practice to improve at mobile-manipulation activities.

Helping robots practice skills independently to adapt to unfamiliar environments

A new algorithm helps robots practice skills like sweeping and placing objects, potentially helping them improve at important tasks in houses, hospitals, and factories.

Alex Shipps | MIT CSAIL

Publication Date: August 8, 2024


The phrase “practice makes perfect” is usually reserved for humans, but it’s also a great maxim for robots newly deployed in unfamiliar environments.

Picture a robot arriving in a warehouse. It comes packaged with the skills it was trained on, like placing an object, and now it needs to pick items from a shelf it’s not familiar with. At first, the machine struggles with this, since it needs to get acquainted with its new surroundings. To improve, the robot will need to understand which skills within an overall task it needs improvement on, then specialize (or parameterize) that action.

A human onsite could program the robot to optimize its performance, but researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) and The AI Institute have developed a more effective alternative. Presented at the Robotics: Science and Systems Conference last month, their “Estimate, Extrapolate, and Situate” (EES) algorithm enables these machines to practice on their own, potentially helping them improve at useful tasks in factories, households, and hospitals.

Sizing up the situation

To help robots get better at activities like sweeping floors, EES works with a vision system that locates and tracks the machine’s surroundings. Then, the algorithm estimates how reliably the robot executes an action (like sweeping) and whether it would be worthwhile to practice more. EES forecasts how well the robot could perform the overall task if it refines that particular skill, and finally, it practices. The vision system subsequently checks whether that skill was done correctly after each attempt.
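The estimate-extrapolate-situate loop described above can be sketched in a few lines of Python. This is a toy under loudly stated assumptions, not the authors' algorithm: competence is estimated as the posterior mean of a Beta distribution with a uniform prior, practice is extrapolated with a made-up learning curve in which each attempt closes a fixed fraction of the gap to perfect reliability, and a task is "situated" as a fixed plan that succeeds only if every skill in it succeeds independently. The skill names, histories, and helper names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class SkillStats:
    """Practice history for one skill (hypothetical bookkeeping, not from the paper)."""
    successes: int = 0
    failures: int = 0

    def competence(self) -> float:
        # Estimate: posterior mean of Beta(1 + successes, 1 + failures), i.e. a uniform prior.
        return (1 + self.successes) / (2 + self.successes + self.failures)


def extrapolate(competence: float, extra_attempts: int, rate: float = 0.1) -> float:
    # Extrapolate: assumed learning curve where each attempt closes `rate` of the gap to 1.0.
    return 1.0 - (1.0 - competence) * (1.0 - rate) ** extra_attempts


def task_success(plan: List[str], competences: Dict[str, float]) -> float:
    # Situate: assume the task succeeds only if every skill in a fixed plan succeeds independently.
    p = 1.0
    for skill in plan:
        p *= competences[skill]
    return p


def choose_skill_to_practice(plan: List[str], stats: Dict[str, SkillStats],
                             extra_attempts: int = 10,
                             rate: float = 0.1) -> Tuple[Optional[str], float]:
    """Return the skill whose extrapolated improvement most raises task success."""
    current = {name: s.competence() for name, s in stats.items()}
    baseline = task_success(plan, current)
    best_skill, best_gain = None, 0.0
    for name in stats:
        what_if = dict(current)
        what_if[name] = extrapolate(current[name], extra_attempts, rate)
        gain = task_success(plan, what_if) - baseline
        if gain > best_gain:  # skills absent from the plan yield zero gain and are never chosen
            best_skill, best_gain = name, gain
    return best_skill, best_gain


# Hypothetical practice histories for a three-step tidy-up plan.
stats = {"pick": SkillStats(9, 1), "sweep": SkillStats(2, 8), "place": SkillStats(5, 5)}
skill, gain = choose_skill_to_practice(["pick", "sweep", "place"], stats)
print(skill, round(gain, 3))  # sweep 0.204
```

Practicing the weakest link in the plan ("sweep") raises the estimated task success far more than polishing an already reliable skill, which is the kind of prioritization the researchers frame as choosing which skill would be most useful to practice right now.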

EES could come in handy in places like a hospital, factory, house, or coffee shop. For example, if you wanted a robot to clean up your living room, it would need help practicing skills like sweeping. According to Nishanth Kumar SM ’24 and his colleagues, though, EES could help that robot improve without human intervention, using only a few practice trials.

“Going into this project, we wondered if this specialization would be possible in a reasonable amount of samples on a real robot,” says Kumar, co-lead author of a paper describing the work, PhD student in electrical engineering and computer science, and a CSAIL affiliate. “Now, we have an algorithm that enables robots to get meaningfully better at specific skills in a reasonable amount of time with tens or hundreds of data points, an upgrade from the thousands or millions of samples that a standard reinforcement learning algorithm requires.”

See Spot sweep

EES’s knack for efficient learning was evident when implemented on Boston Dynamics’ Spot quadruped during research trials at The AI Institute. The robot, which has an arm attached to its back, completed manipulation tasks after practicing for a few hours. In one demonstration, the robot learned how to securely place a ball and ring on a slanted table in roughly three hours. In another, the algorithm guided the machine to improve at sweeping toys into a bin within about two hours. Both results appear to be an upgrade from previous frameworks, which would have likely taken more than 10 hours per task.

“We aimed to have the robot collect its own experience so it can better choose which strategies will work well in its deployment,” says co-lead author Tom Silver SM ’20, PhD ’24, an electrical engineering and computer science (EECS) alumnus and CSAIL affiliate who is now an assistant professor at Princeton University. “By focusing on what the robot knows, we sought to answer a key question: In the library of skills that the robot has, which is the one that would be most useful to practice right now?”

EES could eventually help streamline autonomous practice for robots in new deployment environments, but for now, it comes with a few limitations. For starters, the researchers used tables that were low to the ground, which made it easier for the robot to see its objects. Kumar and Silver also 3D printed an attachable handle that made the brush easier for Spot to grab. The robot failed to detect some items and located others in the wrong places; the researchers counted those errors as failures.

Giving robots homework

The researchers note that the practice speeds from the physical experiments could be accelerated further with the help of a simulator. Instead of physically working at each skill autonomously, the robot could eventually combine real and virtual practice. They hope to make their system faster with less latency, engineering EES to overcome the imaging delays the researchers experienced. In the future, they may investigate an algorithm that reasons over sequences of practice attempts instead of planning which skills to refine.

“Enabling robots to learn on their own is both incredibly useful and extremely challenging,” says Danfei Xu, an assistant professor in the School of Interactive Computing at Georgia Tech and a research scientist at NVIDIA AI, who was not involved with this work. “In the future, home robots will be sold to all sorts of households and expected to perform a wide range of tasks. We can't possibly program everything they need to know beforehand, so it’s essential that they can learn on the job. However, letting robots loose to explore and learn without guidance can be very slow and might lead to unintended consequences. The research by Silver and his colleagues introduces an algorithm that allows robots to practice their skills autonomously in a structured way. This is a big step towards creating home robots that can continuously evolve and improve on their own.”

Silver and Kumar’s co-authors are The AI Institute researchers Stephen Proulx and Jennifer Barry, plus four CSAIL members: Northeastern University PhD student and visiting researcher Linfeng Zhao, MIT EECS PhD student Willie McClinton, and MIT EECS professors Leslie Pack Kaelbling and Tomás Lozano-Pérez. Their work was supported, in part, by The AI Institute, the U.S. National Science Foundation, the U.S. Air Force Office of Scientific Research, the U.S. Office of Naval Research, the U.S. Army Research Office, and MIT Quest for Intelligence, with high-performance computing resources from the MIT SuperCloud and Lincoln Laboratory Supercomputing Center.

1/6

We've opened the waitlist for General Robot Intelligence Development (GRID) Beta! Accelerate robotics dev with our open, free & cloud-based IDE. Zero setup needed. Develop & deploy advanced skills with foundation models and rapid prototyping

Scaled Foundations

(1/6)

2/6

GRID supports a wide range of robot form factors and sensors/modalities (RGB, depth, lidar, GPS, IMU and more) coupled with mapping and navigation for comprehensive and physically accurate sensorimotor development.

(2/6)

3/6

Curate data to train Robotics Foundation Models at scale with GRID. Create thousands of scenarios, across form factors, and robustly test model performance on both sim and real data.

(3/6)

4/6

GRID's LLM-based orchestration leverages foundation models to integrate various modalities, enabling robust sensorimotor capabilities, such as multimodal perception, generative modeling, and navigation, with zero-shot generalization to new scenarios.

(4/6)

5/6

Develop in sim, export and deploy skills on real robots in hours instead of months! GRID enables safe & efficient deployment of trained models on real robots, ensuring reliable performance in real-world scenarios. Example of safe exploration on the Robomaster & Go2:

(5/6)

6/6

Enable your robots to be useful with seamless access to foundation models, simulations and AI tools. Sign up for GRID Beta today and experience the future of robotics development!

Docs: Welcome to the GRID platform! — GRID documentation

(6/6)


Nvidia is on a roll… good decision after good decision

1/2

Alongside language models, robotics has the greatest potential to have a huge impact on our society and our lives. In Germany, there is currently a growing debate about how to pay for the care of the elderly, while at the same time there is a shortage of nursing staff. Robots are the only solution in the medium term.

[Quoted tweet]

Chinese startup Leju Robotics has released their open-source humanoid development platform for academic and R&D use cases.

It includes an SDK for sensors and controls, simulation models, an LLM interface, and some basic demos that work out-of-the-box.

2/2

We should hurry.


1/7

@TheHumanoidHub

Chinese startup Leju Robotics has released their open-source humanoid development platform for academic and R&D use cases.

It includes an SDK for sensors and controls, simulation models, an LLM interface, and some basic demos that work out-of-the-box.

[Quoted tweet]

Leju Robotics have already sold 196 units of this humanoid robot. Most of the customers are university research labs.

https://video.twimg.com/ext_tw_video/1844075445953888258/pu/vid/avc1/1280x720/tjIb2YYLOmHLZ3Oo.mp4

https://video.twimg.com/ext_tw_video/1838072423176114176/pu/vid/avc1/720x720/7YSUFK3EW_Vak30Z.mp4

2/7

@TheHumanoidHub

Link to the open-source page:

乐聚(深圳)机器人技术有限公司/kuavo_opensource

3/7

@julienthegeek

I'm impressed by the potential of this humanoid development platform. I'd like to know more about the simulations and the LLM interface. Is it possible to get more information on these aspects?

4/7

@TheHumanoidHub

Watch this repo:

乐聚(深圳)机器人技术有限公司/kuavo_opensource

Or email them: lejurobot@lejurobot.com

5/7

@GlueNet

Open source humanoid? Now that sounds cool

6/7

@pushin___power

@JingxiangMo if useful

7/7

@R3PL1C8R

Humanoid robots have a huge center of gravity problem, don't they?


Four-legged robot learns to climb ladders | TechCrunch


Brian Heater · 2:55 PM PDT · October 2, 2024

The proliferation of robots like Boston Dynamics’ Spot has showcased the versatility of quadrupeds. These systems have thrived at walking up stairs, traversing small obstacles, and navigating uneven terrain. Ladders, however, still present a big issue, especially given how ever-present they are in factories and other industrial environments where the systems are deployed.

ETH Zurich, which has been behind some of the most exciting quadrupedal robot research of recent vintage, has demonstrated a path forward. As the school notes, past attempts at tackling ladders have mostly involved bipedal humanoid-style robots and specialty ladders, while ultimately proving too slow to be effective.

The research found the school once again using the ANYmal robot from its spinoff, ANYbotics. Here, the team outfitted the quadruped with specialty end effectors that hook onto ladder rungs. The real secret sauce, however, is reinforcement learning, which helps the system adjust to the peculiarities of different ladders.

“This work expands the scope of industrial quadruped robot applications beyond inspection on nominal terrains to challenging infrastructural features in the environment,” the researchers write, “highlighting synergies between robot morphology and control policy when performing complex skills.”

The school says the combined system had a 90% success rate navigating ladder angles in the 70- to 90-degree range. It also reports a climbing speed increase of 232x versus current “state-of-the-art” systems.

The system can correct itself in real time, adjusting its climb in instances where it has misjudged a rung or incorrectly timed a step.