Eureka! NVIDIA Research Breakthrough Puts New Spin on Robot Learning

- Thread starter bnew

Nvidia is this era's Apple, especially when it comes to the stock market. Impressive-ass technology.

1/1

Extremely thought-provoking work that essentially says the quiet part out loud: general foundation models for robotic reasoning may already exist *today*.

LLMs aren’t just about language-specific capabilities, but rather about vast and general world understanding.

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

1/4

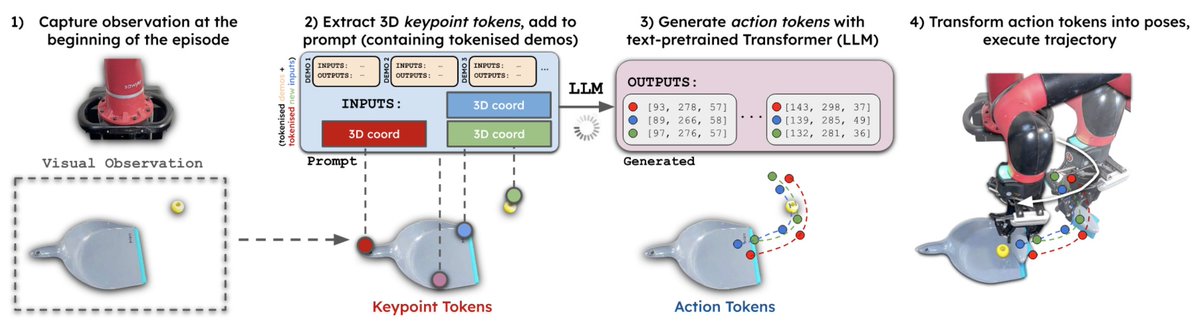

Very excited to announce: Keypoint Action Tokens!

We found that LLMs can be repurposed as "imitation learning engines" for robots, by representing both observations & actions as 3D keypoints, and feeding into an LLM for in-context learning.

See: Keypoint Action Tokens

2/4

This is a very different "LLMs + Robotics" idea to usual:

Rather than using LLMs for high-level reasoning with natural language, we use LLMs for low-level reasoning with numerical keypoints.

In other words: we created a low-level "language" for LLMs to understand robotics data!
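
For anyone wondering what a low-level "language" of keypoints might look like in practice, here is a minimal Python sketch. It is not the authors' code: the `obs:`/`act:` prompt layout, the integer centimetre encoding, and the names `to_tokens`, `build_prompt`, and `parse_action` are all assumptions made for illustration, and the LLM call itself is left out.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in metres


def to_tokens(points: Sequence[Point], scale: int = 100) -> str:
    """Serialize 3D keypoints as space-separated integers (centimetres when scale=100)."""
    return " ".join(str(round(c * scale)) for p in points for c in p)


def build_prompt(demos: Sequence[Tuple[Sequence[Point], Sequence[Point]]],
                 query_obs: Sequence[Point]) -> str:
    """Lay out demonstrations as obs/act pairs, then leave the last act open.

    The LLM is expected to continue the pattern in context, with no training.
    """
    lines = []
    for obs, act in demos:
        lines.append(f"obs: {to_tokens(obs)}")
        lines.append(f"act: {to_tokens(act)}")
    lines.append(f"obs: {to_tokens(query_obs)}")
    lines.append("act:")
    return "\n".join(lines)


def parse_action(completion: str, scale: int = 100) -> List[Point]:
    """Turn the model's numeric completion back into 3D action keypoints."""
    values = [int(tok) / scale for tok in completion.split()]
    if len(values) % 3 != 0:
        raise ValueError("expected a multiple of three numbers")
    return [tuple(values[i:i + 3]) for i in range(0, len(values), 3)]
```

In use, the string from `build_prompt` would be sent to whatever LLM is available, and the completion passed to `parse_action` to get keypoints the robot controller can follow.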

3/4

This works really well across a range of everyday tasks with complex and arbitrary trajectories, whilst also outperforming Diffusion Policies.

Also, we don't need any training time: the robot can perform tasks immediately after the demonstrations, with rapid in-context learning.

4/4

Keypoint Action Tokens was led by the excellent @normandipalo in his latest line of work on efficient imitation learning, following on from DINOBot (http://robot-learning.uk/dinobot), which we will be presenting soon at ICRA 2024!

1/1

Congrats to Boston Dynamics on their new electric version of Atlas robot. Thanks to all the amazing engineering teams at Boston Dynamics, Tesla, and others pushing the field of robotics forward! I can't wait to hang out with Atlas & Optimus together at some point. Robot party

1/1

Excited to announce Tau Robotics (@taurobots). We are building a general AI for robots. We start by building millions of robot arms that learn in the real world.

In the video, two robot arms (one four-axis, one five-axis) are fully autonomous and controlled by a single neural network conditioned on different language instructions. The other two arms are teleoperated. The entire hardware cost in the video is about $1400. The video is at 1.5x speed.

1/1

Good lord

We’re cooked

1/7

Introducing HumanPlus - Shadowing part

Humanoids are born for using human data. We build a real-time shadowing system using a single RGB camera and a whole-body policy for cloning human motion. Examples:

- boxing

- playing the piano/ping pong

- tossing

- typing

Open-sourced!

2/7

Which hardware platform should HumanPlus be embodied on?

We build our own 33-DoF humanoid with two dexterous hands using components:

- Inspire-Robots RH56DFX hands

- @UnitreeRobotics H1 robot

- @ROBOTIS Dynamixel motors

- @Razer webcams

We open-source our hardware design.

3/7

Naively copying joints from humans to humanoids does not work because of gravity and differences in actuation.

We train a transformer-based whole-body RL policy in IsaacGym simulation with realistic physics, using the AMASS dataset, which contains 40 hours of human motion: AMASS

4/7

To retarget from humans to humanoids, we copy the corresponding Euler angles from SMPL-X to our humanoid model.

We use open-sourced SOTA human pose and hand estimation methods (thanks!)

- WHAM for body: WHAM

- HaMeR for hands: HaMeR
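
The "copy the corresponding Euler angles" step from 4/7 could look roughly like the Python sketch below. Everything concrete in it is hypothetical: the joint names, the axis indices in `JOINT_MAP`, and the limits in `LIMITS` are made-up placeholders, and the clamping to joint limits is an assumption added here rather than something the thread describes.

```python
from typing import Dict, Tuple

Euler = Tuple[float, float, float]  # radians, one value per Euler axis

# Hypothetical mapping: humanoid joint -> (human body joint, Euler axis index).
JOINT_MAP: Dict[str, Tuple[str, int]] = {
    "left_shoulder_pitch": ("left_shoulder", 0),
    "left_shoulder_roll": ("left_shoulder", 2),
    "left_elbow": ("left_elbow", 1),
}

# Hypothetical joint limits in radians (not taken from any real robot spec).
LIMITS: Dict[str, Tuple[float, float]] = {
    "left_shoulder_pitch": (-2.8, 2.8),
    "left_shoulder_roll": (-0.3, 3.1),
    "left_elbow": (-1.2, 2.5),
}


def retarget(human_euler: Dict[str, Euler]) -> Dict[str, float]:
    """Copy the matching Euler angle for each robot joint, clamped to its limits."""
    targets = {}
    for robot_joint, (human_joint, axis) in JOINT_MAP.items():
        angle = human_euler[human_joint][axis]
        low, high = LIMITS[robot_joint]
        targets[robot_joint] = min(max(angle, low), high)
    return targets
```

Per 3/7, targets like these are not sent straight to the motors; they would serve as the reference that the whole-body policy tries to track.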

5/7

Compared with other teleoperation methods, shadowing

- is affordable

- requires only 1 human operator

- avoids singularities

- natively supports whole-body control

6/7

Shadowing is an efficient data collection pipeline.

We then perform supervised behavior cloning to train skill policies using egocentric vision, allowing humanoids to complete different tasks autonomously by imitating human skills.

7/7

This project would not be possible without our team of experts, spanning computer graphics, robot learning, and robot hardware:

- co-leads: @qingqing_zhao_ @Qi_Wu577

- advisors: @chelseabfinn @GordonWetzstein

project website: HumanPlus: Humanoid Shadowing and Imitation from Humans

hardware:

1/6

Introducing HumanPlus - Autonomous Skills part

Humanoids are born for using human data. By imitating humans, our humanoid learns to:

- fold sweatshirts

- unload objects from warehouse racks

- perform diverse locomotion skills (squatting, jumping, standing)

- greet another robot

Open-sourced!

2/6

We build our customized 33-DoF humanoid, and a data collection pipeline through real-time shadowing in the real world.

3/6

Using the data collected through shadowing, we then perform supervised behavior cloning to train skill policies using egocentric vision.

We introduce Humanoid Imitation Transformer. Based on ACT, HIT adds forward dynamics prediction on image feature space as a regularization.
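
To make "forward dynamics prediction as a regularization" a bit more concrete, here is a rough Python sketch of a combined training loss. It is only an illustration: the choice of L1 for the action term, MSE for the feature term, the `reg_weight` value, and the function names are assumptions, not details given in the thread.

```python
from typing import Sequence


def l1_loss(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean absolute error between two equal-length vectors."""
    return sum(abs(p - t) for p, t in zip(pred, target)) / len(pred)


def mse_loss(pred: Sequence[float], target: Sequence[float]) -> float:
    """Mean squared error between two equal-length vectors."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def regularized_bc_loss(pred_actions: Sequence[float],
                        demo_actions: Sequence[float],
                        pred_next_features: Sequence[float],
                        next_features: Sequence[float],
                        reg_weight: float = 0.1) -> float:
    """Behavior cloning loss plus a forward-dynamics term on image features.

    The second term penalizes the policy when its prediction of the next
    frame's image features is off, which pushes it to pay attention to vision.
    """
    imitation = l1_loss(pred_actions, demo_actions)
    dynamics = mse_loss(pred_next_features, next_features)
    return imitation + reg_weight * dynamics
```

Setting `reg_weight` to zero recovers plain behavior cloning, which makes it easy to compare the two in an ablation.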

4/6

Compared to baselines, HIT

- uses binocular vision, which gives it implicit stereo cues for depth information

- makes better use of visual feedback, avoiding overfitting to proprioception given the small number of demos

5/6

Besides vision-based whole-body manipulation skills, our humanoid has strong locomotion skills:

- outperforming H1 default standing controller under strong perturbation forces

- enabling more whole-body skills like squatting and jumping

6/6

This project would not be possible without our team of experts, spanning computer graphics, robot learning, and robot hardware:

- co-leads: @qingqing_zhao_ @Qi_Wu577

- advisors: @chelseabfinn @GordonWetzstein

project website: HumanPlus: Humanoid Shadowing and Imitation from Humans

hardware:

Smiling robot face is made from living human skin cells

A technique for attaching a skin made from living human cells to a robotic framework could give robots the ability to emote and communicate better

By James Woodford

25 June 2024

This robot face can smile

Takeuchi et al. (CC-BY-ND)

A smiling face made from living human skin could one day be attached to a humanoid robot, allowing machines to emote and communicate in a more life-like way, say researchers. Its wrinkles could also prove useful for the cosmetics industry.

The living tissue is a cultured mix of human skin cells grown in a collagen scaffold and placed on top of a 3D-printed resin base. Unlike previous similar experiments, the skin also contains the equivalent of the ligaments that, in humans and other animals, are buried in the layer of tissue beneath the skin, holding it in place and giving it incredible strength and flexibility.

Michio Kawai at Harvard University and his colleagues call these ligament equivalents “perforation-type anchors” because they were created by perforating the robot’s resin base and allowing tiny v-shaped cavities to fill with living tissue. This, in turn, helps the robot skin stay in place.

The team put the skin on a smiling robotic face, a few centimetres wide, which is moved by rods connected to the base. It was also attached to a similarly sized 3D shape in the form of a human head (see below), but this couldn’t move.

“As the development of AI technology and other advancements expand the roles required of robots, the functions required of robot skin are also beginning to change,” says Kawai, adding that a human-like skin could help robots communicate with people better.

A 3D head shape covered in living skin

Takeuchi et al. (CC-BY-ND)

The work could also have surprising benefits for the cosmetics industry. In an experiment, the researchers made the small robot face smile for one month, finding they could replicate the formation of expression wrinkles in the skin, says Kawai.

“Being able to recreate wrinkle formation on a palm-sized laboratory chip can simultaneously be used to test new cosmetics and skincare products that aim to prevent, delay or improve wrinkle formation,” says Kawai, who performed the work while at the University of Tokyo.

Of course, the skin still lacks some of the functions and durability of real skin, says Kawai.

“The lack of sensing functions and the absence of blood vessels to supply nutrients and moisture means it cannot survive long in the air,” he says. “To address these issues, incorporating neural mechanisms and perfusion channels into the skin tissue is the current challenge.”

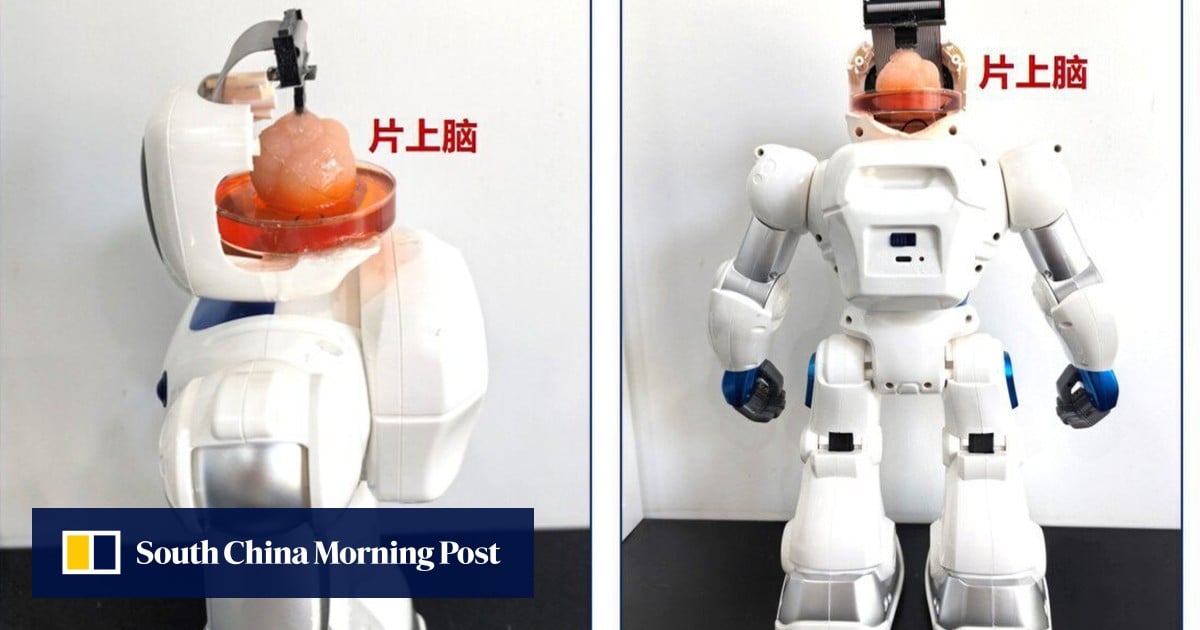

Chinese scientists create robot with brain made from human stem cells

Researchers have developed brain-on-chip technology to train the robot to perform tasks such as gripping objects.

The robots have an artificial brain as well as a neural chip. Photo: Tianjin University

Victoria Bela

Published: 3:00pm, 29 Jun 2024

Chinese scientists have developed a robot with a lab-grown artificial brain that can be taught to perform various tasks.

The brain-on-chip technology developed by researchers at Tianjin University and the Southern University of Science and Technology combines a brain organoid – a tissue derived from human stem cells – with a neural interface chip to power the robot and teach it to avoid obstacles and grip objects.

The technology is an emerging branch of brain-computer interfaces (BCI), which aims to combine the brain’s electrical signals with external computing power and which China has made a priority.

It is “the world’s first open-source brain-on-chip intelligent complex information interaction system” and could lead to the development of brain-like computing, according to Tianjin University.

“[This] is a technology that uses an in-vitro cultured ‘brain’ – such as brain organoids – coupled with an electrode chip to form a brain-on-chip,” which encodes and decodes stimulation feedback, Ming Dong, vice-president of Tianjin University, told state-owned Science and Technology Daily on Tuesday.

BCI technology has gained widespread attention due to the Elon Musk-backed Neuralink, an implantable interface designed to let patients control devices with only their thoughts.

Tianjin University now says its research could lead to the development of hybrid human-robot intelligence.

Brain organoids are made from human pluripotent stem cells typically only found in early embryos that can develop into different kinds of tissues, including neural tissues.

When grafted into the brain, they can establish functional connections with the host brain, the Tianjin University team wrote in an unedited manuscript published in the peer-reviewed Oxford University Press journal Brain last month.

“The transplant of human brain organoids into living brains is a novel method for advancing organoid development and function. Organoid grafts have a host-derived functional vasculature system and exhibit advanced maturation,” the team wrote.

Li Xiaohong, a professor at Tianjin University, told Science and Technology Daily that while brain organoids were regarded as the most promising model of basic intelligence, the technology still faced “bottlenecks such as low developmental maturity and insufficient nutrient supply”.

In the paper, the team said it had developed a technique to use low-intensity ultrasound, which could help organoids better integrate and grow within the brain.

The team found that treating grafts with low-intensity ultrasound improved the differentiation of organoid cells into neurons and strengthened the networks they formed with the host brain.

The technique could also lead to new treatments for neurodevelopmental disorders and help repair damage to the cerebral cortex, the paper said.

“Brain organoid transplants are considered a promising strategy for restoring brain function by replacing lost neurons and reconstructing neural circuits,” the team wrote.

The team found that using low-intensity ultrasound on implanted brain organoids could ameliorate neuropathological defects in a test on a mouse model of microcephaly – a neurodevelopmental disorder characterised by reduced brain and head size.

The university also said the team’s use of non-invasive low-intensity ultrasound treatment could help neural networks form and mature, providing a better foundation for computing.

Introducing Rufus, the Automated Roofing Robot from Renovate Robotics

Rufus is an automated roofing robot designed by Renovate Robotics to double productivity for asphalt shingle installation and improve safety for roofing contractors.

Renovate Robotics, a leader in roofing automation, has introduced Rufus, the world’s first automated roofing robot. The announcement showcases a never-before-seen video of Renovate’s first Rufus prototype.

Rufus is an automated roofing robot designed to double productivity for asphalt shingle installation and improve safety for roofing contractors. Roofing had the second highest fatality rate of all occupations in the United States in 2022, according to the Bureau of Labor Statistics, and roofing contractors consistently struggle to recruit skilled labor. By doubling productivity, Renovate is empowering contractors to increase their revenue without scaling beyond their existing roofing crews.

Renovate believes automation is the future of the roofing industry, and is focused on building the biggest roofing technology organization in the world.

MIT’s soft robotic system is designed to pack groceries | TechCrunch

RoboGrocery combines computer vision with a soft robotic gripper to bag a wide range of different items.

Brian Heater, 2:00 PM PDT • June 30, 2024

The first self-checkout system was installed in 1986 in a Kroger grocery store just outside of Atlanta. It took several decades, but the technology has finally proliferated across the U.S. Given the automated direction grocery stores are heading, it seems that robotic bagging can’t be too far behind.

MIT’s CSAIL department this week is showcasing RoboGrocery. It combines computer vision with a soft robotic gripper to bag a wide range of items. To test the system, researchers placed 10 objects unknown to the robot on a grocery conveyer belt.

The products ranged from delicate items like grapes, bread, kale, muffins and crackers to far more solid ones like soup cans, meal boxes and ice cream containers. The vision system kicks in first, detecting the objects before determining their size and orientation on the belt.

As the grasper touches the grapes, pressure sensors in the fingers determine that they are, in fact, delicate and therefore should not go at the bottom of the bag — something many of us no doubt learned the hard way. Next, it notes that the soup can is a more rigid structure and sticks it in the bottom of the bag.
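
The packing rule described above (rigid items at the bottom, delicate ones on top) can be sketched in a few lines of Python. This is a toy illustration, not the CSAIL system: the `stiffness` score, the `delicate_below` threshold, and the names `Item` and `packing_order` are hypothetical stand-ins for whatever the real pressure sensors and planner produce.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Item:
    name: str
    stiffness: float  # hypothetical 0..1 score derived from finger pressure readings


def packing_order(items: List[Item], delicate_below: float = 0.5) -> List[Item]:
    """Return items in the order they go into the bag, bottom first.

    Rigid items are packed first so they end up at the bottom; delicate
    items follow. Arrival order on the belt is kept within each group.
    """
    rigid = [item for item in items if item.stiffness >= delicate_below]
    delicate = [item for item in items if item.stiffness < delicate_below]
    return rigid + delicate
```

For example, a belt carrying grapes, a soup can, and bread would be bagged with the soup can first, followed by the grapes and the bread.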

“This is a significant first step towards having robots pack groceries and other items in real-world settings,” said Annan Zhang, one of the study’s lead authors. “Although we’re not quite ready for commercial deployment, our research demonstrates the power of integrating multiple sensing modalities in soft robotic systems.”

The team notes that there’s still plenty of room for improvement, including upgrades to the grasper and the imaging system to better determine how and in what order to pack things. As the system becomes more robust, it may also be scaled outside the grocery into more industrial spaces like recycling plants.

1/1

Daily Training of Robots Driven by RL

Segments of daily training for robots driven by reinforcement learning.

Multiple tests are done in advance so the robots can serve humans in a friendly way.

The training includes some extreme tests; please do not imitate.

#AI #Unitree #AGI #EmbodiedIntelligence #RobotDog #QuadrupedRobot #IndustrialRobot

1/1

Robot dog on exhibition.
