1/23

@jeremyphoward

How could anyone have seen R1 coming?

Just because deepseek showed DeepSeek-R1-Lite-Preview months ago, showed the scaling graph, and said they were going to release an API and open source… how could anyone have guessed?

[Quoted tweet]

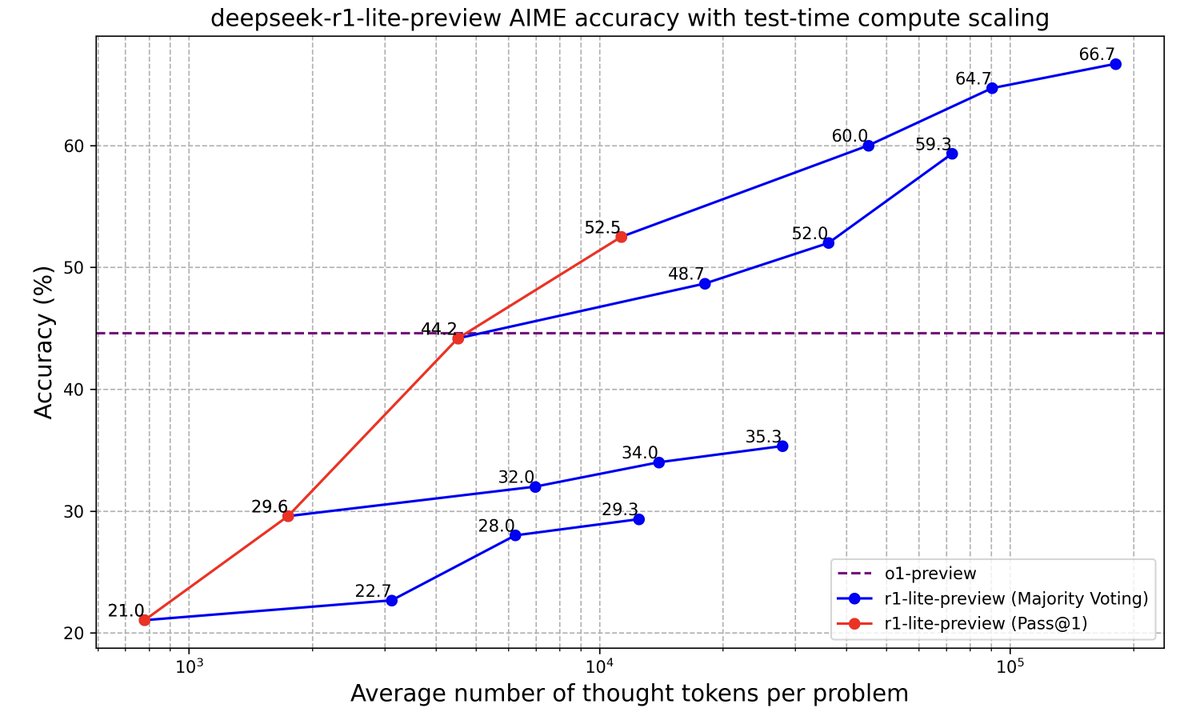

Inference Scaling Laws of DeepSeek-R1-Lite-Preview

Longer Reasoning, Better Performance. DeepSeek-R1-Lite-Preview shows steady score improvements on AIME as thought length increases.

2/23

@nagaraj_arvind

2017 fastAI forums > today's AI twitter

3/23

@jeremyphoward

That’s for sure

4/23

@nonRealBrandon

Nancy Pelosi and Jim Cramer knew.

5/23

@MFrancis107

Not Deepseek specific. But models are continuously getting cheaper and more efficient to train. That's how it's been going and will continue to go.

6/23

@centienceio

i mean they did show deepseek r1 lite preview months ago and talked about releasing an api and open sourcing it so it doesnt seem that hard to guess that r1 was coming

7/23

@vedangvatsa

Read about Liang Wenfeng, the Chinese entrepreneur behind DeepSeek:

[Quoted tweet]

Liang Wenfeng - Founder of DeepSeek

Liang was born in 1985 in Guangdong, China, to a modest family.

His father was a school teacher, and his values of discipline and education greatly influenced Liang.

Liang pursued his studies at Zhejiang University, earning a master’s degree in engineering in 2010.

His research focused on low-cost camera tracking algorithms, showcasing his early interest in practical AI applications.

In 2015, he co-founded High-Flyer, a quantitative hedge fund powered by AI-driven algorithms.

The fund grew rapidly, managing over 100 billion yuan, but he was not content with just the financial success.

He envisioned using AI to solve larger, more impactful problems beyond the finance industry.

In 2023, Liang founded DeepSeek to create cutting-edge AI models for broader use.

Unlike many tech firms, DeepSeek prioritized research and open-source innovation over commercial apps.

Liang hired top PhDs from universities like Peking and Tsinghua, focusing on talent with passion and vision.

To address US chip export restrictions, Liang preemptively secured 10,000 Nvidia GPUs.

This strategic move ensured DeepSeek could compete with global leaders like OpenAI.

DeepSeek's AI models achieved high performance at a fraction of the cost of competitors.

Liang turned down a $10 billion acquisition offer, stating that DeepSeek’s goal was to advance AI, not just profit.

He advocates for originality in China’s tech industry, emphasizing innovation over imitation.

He argued that closed-source technologies only temporarily delay competitors and emphasized the importance of open innovation.

Liang credits his father’s dedication to education for inspiring his persistence and values.

He believes AI should serve humanity broadly, not just the wealthy or elite industries.

8/23

@0xpolarb3ar

AI is a software problem now with current level of compute. Software can move much faster because it doesn't have to obey laws of physics

9/23

@ludwigABAP

Jeremy on a tear today

10/23

@AILeaksAndNews

It was also bound to happen eventually

11/23

@jtlicardo

Because the amount of hype and semi-true claims in AI nowadays makes it hard to separate the wheat from the chaff

12/23

@imaurer

What is April's DeepSeek that is hiding in plain sight?

13/23

@TheBananaRat

So much AI innovation is coy; it's all good for NVIDIA, as they control the software and hardware stack for AI

For example:

Verses AI just outperformed DeepSeek and ChatGPT

AI Shake-Up: Verses AI (CBOE:VERS) Leaves DeepSeek and ChatGPT in the Dust!

Verses AI, a company that just outperformed the latest ChatGPT and DeepSeek LLM models

AI is evolving rapidly, and Verses AI is leading the way. Recent performance benchmarks show that Verses’ Genius platform has surpassed DeepSeek, ChatGPT, and other top LLMs, offering superior reasoning, prediction, and decision-making capabilities.

Unlike traditional models, Genius continuously learns and adapts, solving complex real-world challenges where others fall short. For example, its ability to detect and mitigate fraud at scale demonstrates its practical value in high-impact applications.

As AI innovation accelerates, Verses AI is setting a new standard—one built on intelligence that goes beyond language processing to real-time, adaptive decision-making.

Verses AI (CBOE:VERS) is OneToWatch

The

has spoken.

14/23

@suwakopro

I used it when R1 lite was released, and I never expected it to have such a big impact now.

15/23

@din0s_

i thought scaling laws were dead, that's what I read on the news/twitter today

16/23

@rich_everts

Hey Jeremy, have you thought of ways yet to better optimize the RL portion of the Reasoning Agent?

17/23

@JaimeOrtega

I mean stuff doesn't happen until it happens I guess

18/23

@inloveamaze

flew under the radar for the public eye

19/23

@Raviadi1

I expected it to happen shortly after R1-Lite. But what I didn't expect was that it would be open source + free and almost on par with o1.

20/23

@sparkycollier

21/23

@medoraai

I think we saw search optimization was the secret to many of the projects that surprised us last year. But the new algo, Group Relative Policy Optimization (GRPO), was surprising. Really a unique optimization. I can see some real benefits to hiring pure math brains
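For readers unfamiliar with GRPO, here is a rough sketch of its central idea as commonly described: sample several responses to the same prompt, score each one, and use each reward's standing within its own group as the advantage, so no separate value network is needed. The function name, the `eps` term, and the example rewards below are illustrative assumptions, not DeepSeek's code, and the choice of population standard deviation is also an assumption (implementations differ).

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize each reward against the other samples in its group.

    rewards: scores for several sampled responses to ONE prompt.
    eps: small constant (an assumption here) to avoid division by zero
         when every response in the group gets the same reward.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std; a sketch-level choice
    return [(r - mu) / (sigma + eps) for r in rewards]


# Hypothetical group of four responses: two judged correct, two incorrect.
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)
print(advantages)
```

Responses that beat their group's average get a positive advantage and the rest get a negative one; in the full method these values weight a clipped policy-gradient update with a KL penalty, which this sketch leaves out.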

22/23

@broadfield_dev

I think every single researcher and developer is far less funded than OpenAI, which means they have to innovate.

If we think that DeepSeek is an anomaly, then we are destined to be fooled again.

23/23

@kzSlider

lol ML people are so clueless, this is the one time they didn't trust straight lines on a graph
