AI that’s smarter than humans? Americans say a firm “no thank you.”

- Thread starter bnew

Vandelay

Life is absurd. Lean into it.

Is this thread broken for anyone else?

if you mean all the posts won't load and you don't see the reply field on certain pages, i think it's a site issue on some thread pages, you have to lower the amount of posts per page in [Settings -> Preferences]. 15 or 25 should resolve the issue.

Vandelay

Life is absurd. Lean into it.

Thanks. It does this for old threads in particular. I'll give that a shot.

AI experts ready 'Humanity's Last Exam' to stump powerful tech

By Jeffrey Dastin and Katie Paul

September 16, 2024 4:42 PM EDT. Updated 20 days ago

Figurines with computers and smartphones are seen in front of the words "Artificial Intelligence AI" in this illustration taken, February 19, 2024. REUTERS/Dado Ruvic/Illustration/File Photo

Sept 16 (Reuters) - A team of technology experts issued a global call on Monday seeking the toughest questions to pose to artificial intelligence systems, which increasingly have handled popular benchmark tests like child's play.

Dubbed "Humanity's Last Exam," the project seeks to determine when expert-level AI has arrived. It aims to stay relevant even as capabilities advance in future years, according to the organizers, a non-profit called the Center for AI Safety (CAIS) and the startup Scale AI.

The call comes days after the maker of ChatGPT previewed a new model, known as OpenAI o1, which "destroyed the most popular reasoning benchmarks," said Dan Hendrycks, executive director of CAIS and an advisor to Elon Musk's xAI startup.

Hendrycks co-authored two 2021 papers that proposed tests of AI systems that are now widely used, one quizzing them on undergraduate-level knowledge of topics like U.S. history, the other probing models' ability to reason through competition-level math. The undergraduate-style test has more downloads from the online AI hub Hugging Face than any such dataset.

At the time of those papers, AI was giving almost random answers to questions on the exams. "They're now crushed," Hendrycks told Reuters.

As one example, the Claude models from the AI lab Anthropic have gone from scoring about 77% on the undergraduate-level test in 2023, to nearly 89% a year later, according to a prominent capabilities leaderboard.

These common benchmarks have less meaning as a result.

AI has appeared to score poorly on lesser-used tests involving plan formulation and visual pattern-recognition puzzles, according to Stanford University’s AI Index Report from April. OpenAI o1 scored around 21% on one version of the pattern-recognition ARC-AGI test, for instance, the ARC organizers said on Friday.

Some AI researchers argue that results like this show planning and abstract reasoning to be better measures of intelligence, though Hendrycks said the visual aspect of ARC makes it less suited to assessing language models. “Humanity’s Last Exam” will require abstract reasoning, he said.

Answers from common benchmarks may also have ended up in data used to train AI systems, industry observers have said. Hendrycks said some questions on "Humanity's Last Exam" will remain private to make sure AI systems' answers are not from memorization.

The exam will include at least 1,000 crowd-sourced questions due November 1 that are hard for non-experts to answer. These will undergo peer review, with winning submissions offered co-authorship and up to $5,000 prizes sponsored by Scale AI.

“We desperately need harder tests for expert-level models to measure the rapid progress of AI," said Alexandr Wang, Scale's CEO.

One restriction: the organizers want no questions about weapons, which some say would be too dangerous for AI to study.

1/4

OpenAI's Noam Brown says the o1 model's reasoning at math problems improves with more test-time compute and "there is no sign of this stopping"

2/4

Source:

https://invidious.poast.org/watch?v=Gr_eYXdHFis

3/4

yes, it's a semi-log plot with the log scale on the x-axis, so it doesn't scale linearly, but Noam does state this

4/4

as I understand it, the primary reasoning domains o1 is trained on are math and coding. literature is more within the ambit of the GPT series.

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196
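On the semi-log point above: a trend that looks like a straight line when only the x-axis is log-scaled means each multiplicative jump in test-time compute buys roughly the same additive gain in score, not each fixed increment. A minimal Python sketch, assuming a purely hypothetical log-linear fit (the `INTERCEPT` and `SLOPE` values are made up for illustration and are not read off OpenAI's chart):

```python
import math

# Hypothetical log-linear fit: score = INTERCEPT + SLOPE * log10(compute).
# Both constants are illustrative assumptions, not values from OpenAI's plot.
INTERCEPT = 20.0  # assumed score (%) at 1x compute
SLOPE = 10.0      # assumed points gained per 10x increase in compute


def score(compute: float) -> float:
    """Score predicted by the assumed log-linear trend for a compute multiple."""
    return INTERCEPT + SLOPE * math.log10(compute)


# Each 10x jump adds the same SLOPE points, so the points fall on a straight
# line when plotted against a log-scaled x-axis.
for c in (1, 10, 100, 1000):
    print(f"compute={c:>5}x  score={score(c):.1f}%")

# The same +1x step is worth far less at high compute than at low compute.
print(f"1x -> 2x adds {score(2) - score(1):.2f} points")
print(f"100x -> 101x adds {score(101) - score(100):.3f} points")
```

Under these assumed numbers, going from 1x to 10x compute and from 100x to 1000x each add the same 10 points, while going from 100x to 101x adds almost nothing. Since a benchmark score tops out at 100%, a fit like this can only describe a limited range of compute.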

1/2

OpenAI's Noam Brown says the new o1 model beats GPT-4o at math and code, and outperforms expert humans at PhD-level questions, and "these numbers, I can almost guarantee you, are going to go up over the next year or two"

2/2

Source:

https://invidious.poast.org/watch?v=Gr_eYXdHFis

1/2

Stuart Russell: saying AI is like the calculator is a flawed analogy because calculators only automate the brainless part of mathematics whereas AI can automate the essence of learning to think

2/2

Source (thanks to @curiousgangsta):

https://invidious.poast.org/watch?v=NTJK5e9ADcw

1/11

@tsarnick

Stuart Russell says by the end of this decade AI may exceed human capabilities in every dimension and perform work for free, so there may be more employment, it just won't be employment of humans

https://video-t-1.twimg.com/ext_tw_...9152/pu/vid/avc1/720x720/ktlc06x_refu7Bd2.mp4

2/11

@tsarnick

Source (thanks to @curiousgangsta):

https://invidious.poast.org/watch?v=NTJK5e9ADcw

3/11

@ciphertrees

I think everyone will be employed doing something they want to do in the future. You might work at a car plant building cars that people don't actually buy. Drive around with your coworkers later in the cars you just made?

4/11

@0xTheWay

Maybe there will be an enlightenment period where we aren’t burdened by useless work.

5/11

@sisboombahbah

Here’s the thing. AI will be ABLE to do this. Just like we’re now able to make:

- Self driving cars

- Robot Baristas

- Segways

- Google Glass

- Apple Vision Pro

- Self-checkout

Why are there still drivers, baristas, bikes, plays, concerts & cashiers?

People like people.

6/11

@mikeamark

And the role for humans will be…

7/11

@LawEngTunes

While AI might do the work for free, we still need people to program, integrate, and ensure it serves humanity. The UAE is already embracing AI to create new opportunities for people.

8/11

@Nitendoraku3

I think society needs to understand life is being fruitful; life is not a competition to not work.

9/11

@AMelhede

I think creativity will win as AI takes up more and more jobs and most contrarian thinking people with the most unique ideas will be able to employ AI to make them reality

10/11

@vinayakchronicl

Stuart Russell's forecast about AI surpassing human capabilities across all dimensions by 2030 raises profound questions about the future of work.

While AI might perform tasks at no cost, potentially increasing productivity, we must consider:

Economic Displacement: How do we plan to address the displacement in employment? Universal Basic Income, retraining programs, or new job creation in AI management could be part of the solution.

Value of Work: Beyond economics, how will society redefine the value of work when much of what we do can be done by AI?

Human-AI Collaboration: Instead of replacement, could we focus on enhancing human roles where emotional intelligence, creativity, and ethical decision-making are paramount?

Regulation and Ethics: With AI's capability to work 'for free', what regulations will ensure fair distribution of the wealth generated by AI?

The coming decade will indeed be pivotal in reshaping our understanding of work, value, and economic structures. Let's foster discussions on preparing society for this shift. #FutureOfWork #AI #EconomicTransformation

11/11

@victor_explore

guess it's time to start cozying up to our future AI overlords...

1/11

@tsarnick

Ethan Mollick says students don't raise their hands in class as much because they don't want to expose their ignorance when AI can answer their questions, and the use of AI is leading to the illusion of competence and lower test scores

https://video.twimg.com/ext_tw_video/1847075142259843072/pu/vid/avc1/720x720/_oFx1B48TzMws-1F.mp4

2/11

@tsarnick

Source (thanks to @curiousgangsta):

https://invidious.poast.org/watch?v=xvxPFH16Bvg

3/11

@emollick

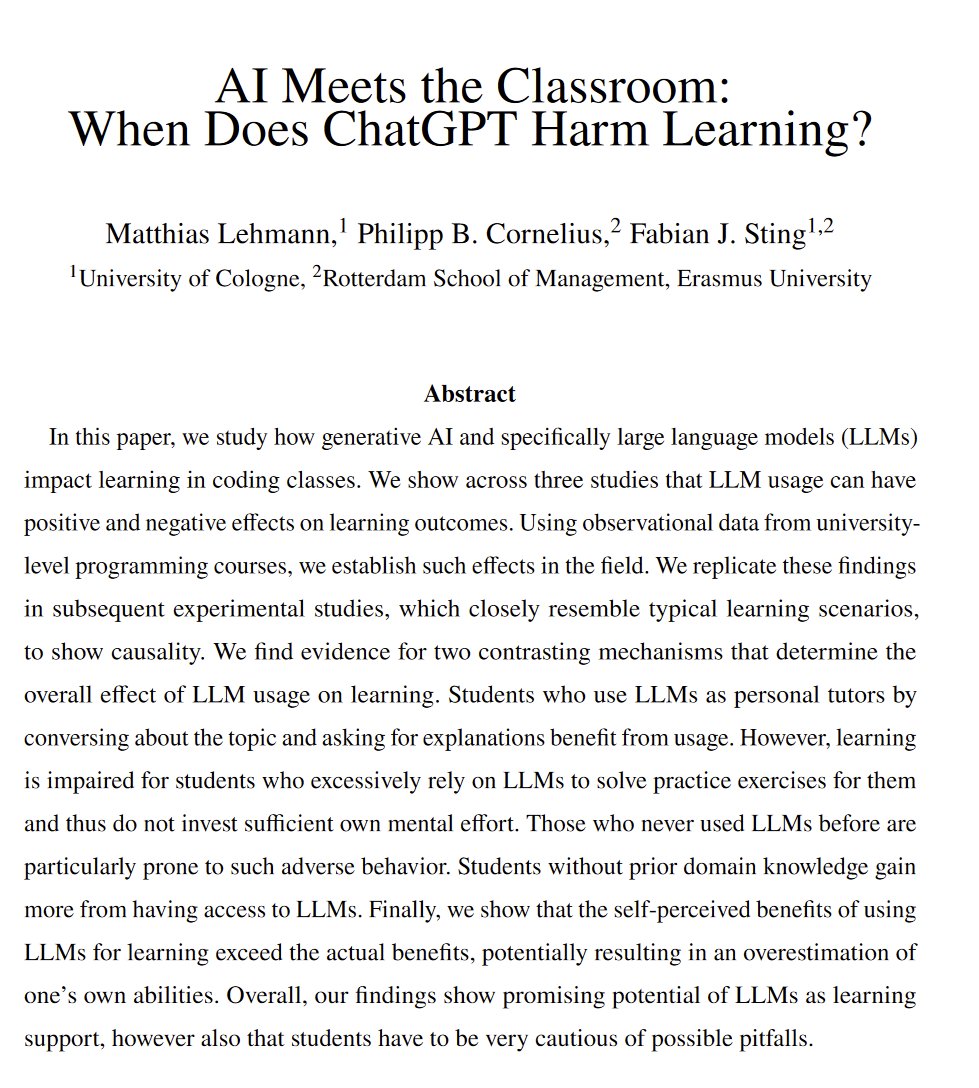

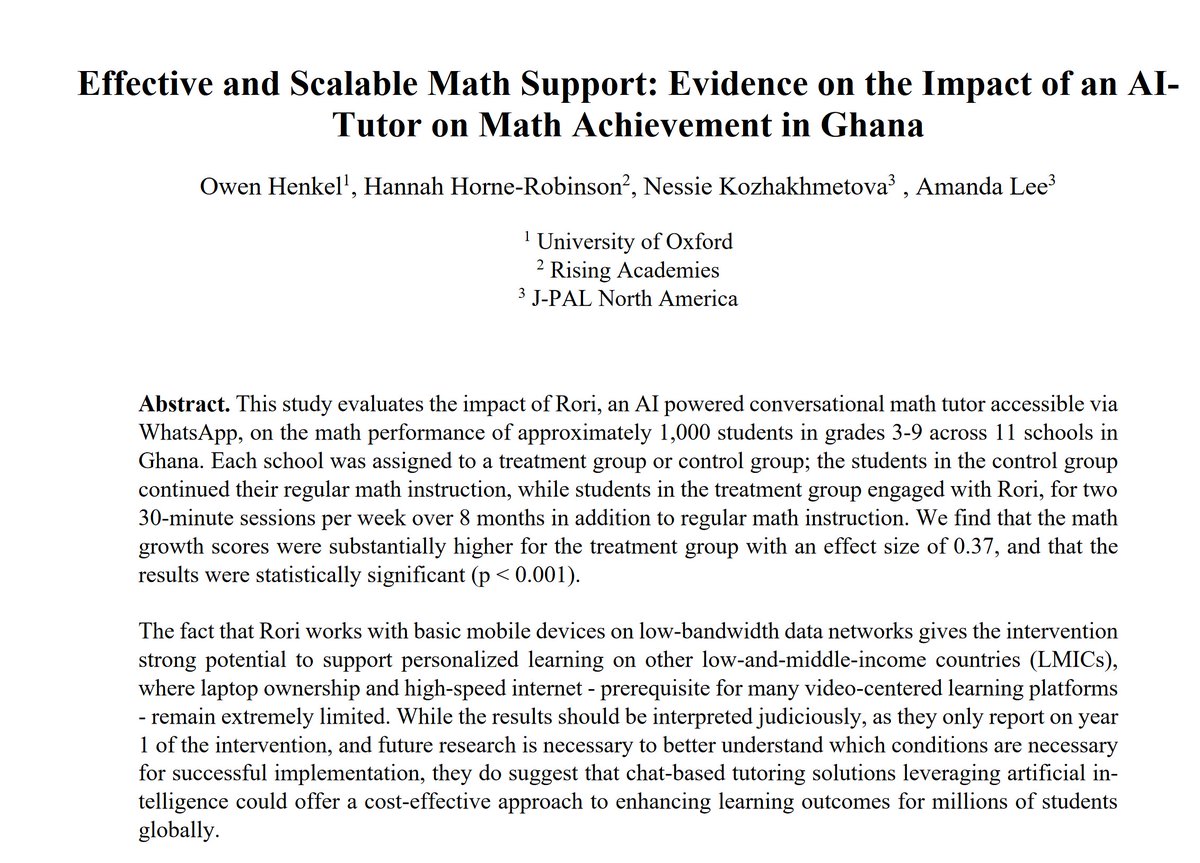

The research I was referring to.

[Quoted tweet]

AI can help learning... when it isn't a crutch.

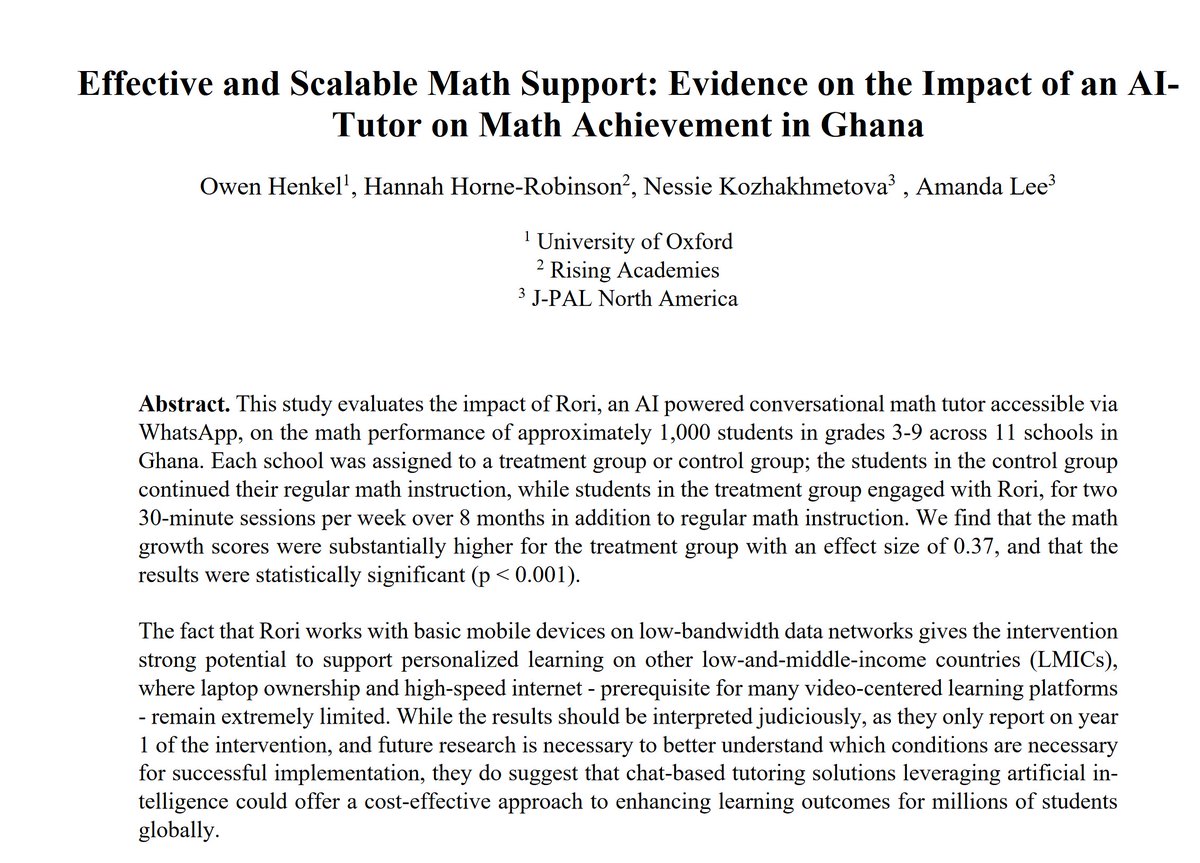

There are now multiple controlled experiments showing that using AI to get answers to problems hurts learning (even though students think they are learning), but that students who use AI as a tutor perform better on tests.

4/11

@tsarnick

interesting, thanks for the extra context!

5/11

@joehenriod

I had a teacher when I was in high school that bribed me to always raise my hand and ask really stupid questions before everyone else.

It set the bar for participation as really low, because everyone knew they could AT LEAST contribute something better than I did.

6/11

@tsarnick

7/11

@BobbyGRG

Not sure that asking AI for questions wouldn't provide learning. Students might need, though, to learn how to ask AI so they learn the most. A (good) human teacher will try to double-check and make sure that students understood. An AI will just provide an explanation, and unless re-prompted to extend or explain otherwise, that would be it. Easily improvable with an application wrapper instructed to do similar. Did that wrapper cot app for my kids around April 2023... now they use ChatGPT directly using the right patterns, verification, etc. to activate their learning. That's what students need to learn...

8/11

@CallumMacClark

Teachers and lecturers can try all they want to ban this technology, but it's not going anywhere. I applaud the students who are using this. Also interesting to think that these students are learning how to interact with LLMs not through courses but through usage.

9/11

@ravisyal

Easy access to great teachers will lead to increased learning.

10/11

@Chris_Brannigan

An AI tutor would ask the student follow-up questions, give the option of worked examples, and use other methods to embed / check learning.

11/11

@AntDX316

I find with AI that when you keep seeing what is needed in a program, you get used to sticking to what works, which is a good thing as it's constant training with the very best.
