Apple Tests ‘Apple GPT,’ Develops Generative AI Tools to Catch OpenAI

- Company builds large language models and internal chatbot

- Executives haven’t decided how to release tools to consumers

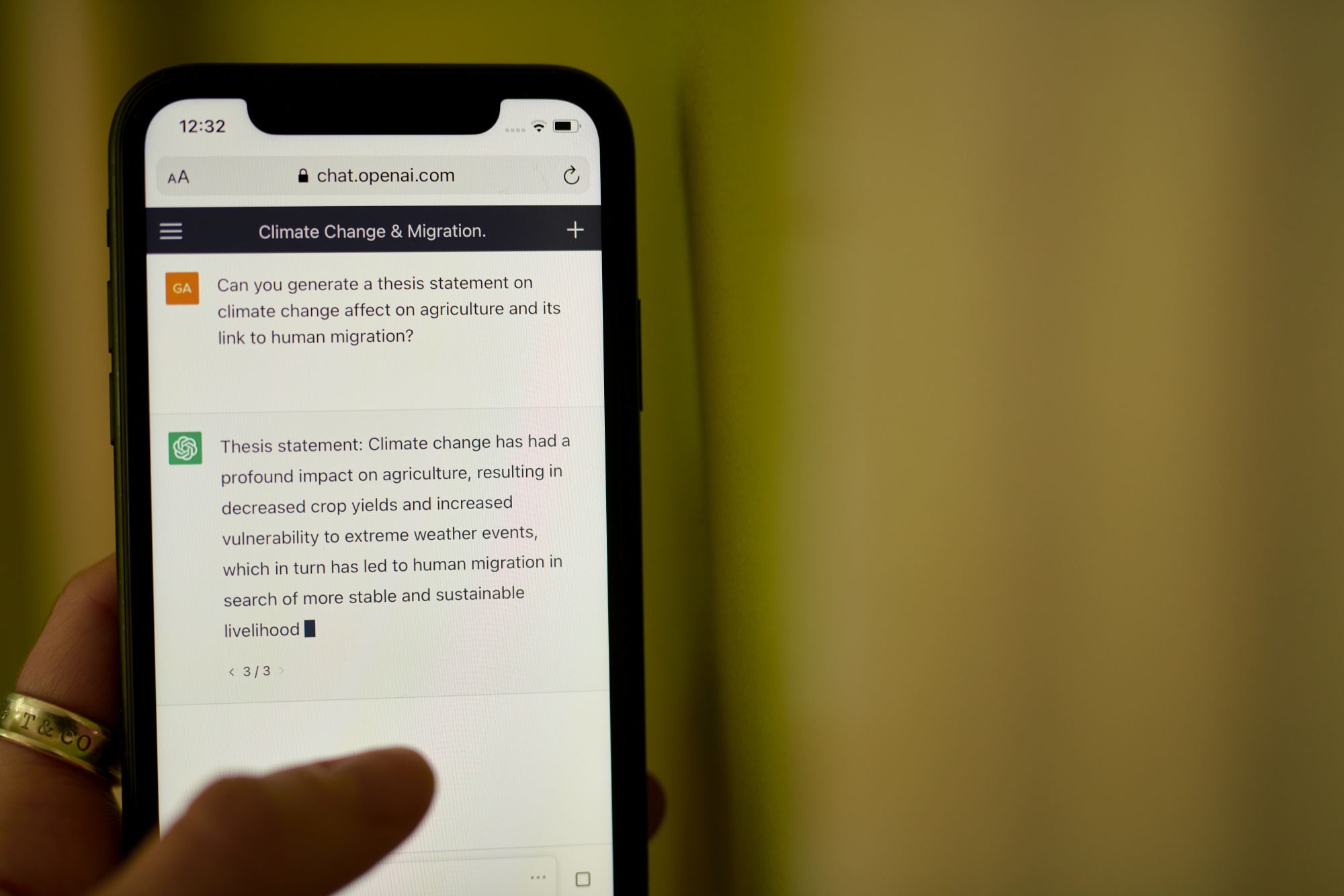

An Apple internal chatbot, dubbed by some as “Apple GPT,” bears similarities to OpenAI’s ChatGPT, pictured. Source: Bloomberg

By Mark Gurman

July 19, 2023 at 12:03 PM EDT

Updated on July 19, 2023 at 12:15 PM EDT

Apple Inc. is quietly working on artificial intelligence tools that could challenge those of OpenAI Inc., Alphabet Inc.’s Google and others, but the company has yet to devise a clear strategy for releasing the technology to consumers.

The iPhone maker has built its own framework to create large language models — the AI-based systems at the heart of new offerings like ChatGPT and Google’s Bard — according to people with knowledge of the efforts. With that foundation, known as “Ajax,” Apple also has created a chatbot service that some engineers call “Apple GPT.”


In recent months, the AI push has become a major effort for Apple, with several teams collaborating on the project, said the people, who asked not to be identified because the matter is private. The work includes trying to address potential privacy concerns related to the technology.

Apple shares gained as much as 2.3% to a record high of $198.23 after Bloomberg reported on the AI effort Wednesday, rebounding from earlier losses.

Microsoft Corp., OpenAI’s partner and main backer, slipped about 1% on the news.

A spokesman for Cupertino, California-based Apple declined to comment.

The company was caught flat-footed in the past year with the introduction of OpenAI’s ChatGPT, Google Bard and Microsoft’s Bing AI. Though Apple has woven AI features into products for years, it’s now playing catch-up in the buzzy market for generative tools, which can create essays, images and even video based on text prompts. The technology has captured the imagination of consumers and businesses in recent months, leading to a stampede of related products.

Apple has been conspicuously absent from the frenzy. Its main artificial intelligence product, the Siri voice assistant, has stagnated in recent years. But the company has made AI headway in other areas, including improvements to photos and search on the iPhone. There’s also a smarter version of auto-correct coming to its mobile devices this year.

Publicly, Chief Executive Officer Tim Cook has been circumspect about the flood of new AI services hitting the market. Though the technology has potential, there are still a “number of issues that need to be sorted,” he said during a conference call in May. Apple will be adding AI to more of its products, he said, but on a “very thoughtful basis.”

In an interview with Good Morning America, meanwhile, Cook said he uses ChatGPT and that it’s something the company is “looking at closely.”

Behind the scenes, Apple has grown concerned about missing a potentially paramount shift in how devices operate. Generative AI promises to transform how people interact with phones, computers and other technology. And Apple’s devices, which produced revenue of nearly $320 billion in the last fiscal year, could suffer if the company doesn’t keep up with AI advances.

That’s why Apple began laying the foundation for AI services with the Ajax framework, as well as a ChatGPT-like tool for use internally. Ajax was first created last year to unify machine learning development at Apple, according to the people familiar with the effort.

The company has already deployed AI-related improvements to search, Siri and maps based on that system. And Ajax is now being used to create large language models and serve as the foundation for the internal ChatGPT-style tool, the people said.

Chart: How Does Artificial Intelligence Fit Into the Apple Empire? Apple is developing new AI tools, but isn’t sure how to commercialize them. Source: Apple’s 2022 annual report

The chatbot app was created as an experiment at the end of last year by a tiny engineering team. Its rollout within Apple was initially halted over security concerns about generative AI, but has since been extended to more employees. Still, the system requires special approval for access. There’s also a significant caveat: Any output from it can’t be used to develop features bound for customers.

Even so, Apple employees are using it to assist with product prototyping. It also summarizes text and answers questions based on data it has been trained with.

Apple isn’t the only one taking this approach.

Samsung Electronics Co. and other technology companies have developed their own internal ChatGPT-like tools after concerns emerged about third-party services leaking sensitive data.


Apple employees say the company’s tool essentially replicates Bard, ChatGPT and Bing AI, and doesn’t include any novel features or technology. The system is accessible as a web application and has a stripped-down design not meant for public consumption. As such, Apple has no current plans to release it to consumers, though it is actively working to improve its underlying models.

Beyond the state of the technology, Apple is still trying to determine the consumer angle for generative AI. It’s now working on several related initiatives — a cross-company effort between its AI and software engineering groups, as well as the cloud services engineering team that would supply the infrastructure for any significant new features. While the company doesn’t yet have a concrete plan, people familiar with the work believe Apple is aiming to make a significant AI-related announcement next year.

John Giannandrea, the company’s head of machine learning and AI, and Craig Federighi, Apple’s top software engineering executive, are leading the efforts. But they haven’t presented a unified front within Apple, said the people. Giannandrea has signaled that he wants to take a more conservative approach, with a desire to see how recent developments from others evolve.

Publicly, Apple’s Tim Cook has expressed caution about the flood of generative AI services hitting the market. Source: Bloomberg

Around the same time that it began developing its own tools, Apple conducted a corporate trial of OpenAI’s technology. It also weighed signing a larger contract with OpenAI, which licenses its services to Microsoft, Shutterstock Inc. and Salesforce Inc.

Apple’s Ajax system is built on top of JAX, Google’s machine learning framework. Apple’s system runs on Google Cloud, which the company uses to power cloud services alongside its own infrastructure and Amazon.com Inc.’s AWS.

As part of its recent work, Apple is seeking to hire more experts in generative AI. On its website, it is advertising for engineers with a “robust understanding of large language models and generative AI” and promises to work on applying that technology to the way “people communicate, create, connect and consume media” on iPhones and its other devices.

An ideal spot for Apple to integrate its LLM technology would be inside Siri, allowing the voice assistant to conduct more tasks on behalf of users. Though Siri launched in 2011, ahead of rival systems, it has since lagged competitors as Apple focused on other areas and offered fewer features in favor of privacy.

In his May remarks, Cook defended the company’s AI strategy, saying the technology is used across much of its product lineup, including in features like car-crash and fall detection. More recently, Cook said that LLMs have “great promise,” while warning about the possibility of bias and misinformation. He also called for guardrails and regulation in the space.

The company expanded its artificial intelligence efforts in 2018 with the hiring of Giannandrea, who previously led search and AI at Google. Since then, Apple hasn’t released many splashy new AI features, but at least two initiatives could help put it on the map.

The company is planning a new health coaching service codenamed Quartz that relies on data from an Apple Watch and uses AI to personalize plans, Bloomberg reported in April. And the company’s future electric car will use artificial intelligence to power the vehicle’s self-driving capabilities.
