The A.I Megathread (LLM , GPT , Development)

1/11

Chat, is this real?

(an analysis of 1M ChatGPT interaction logs by use case)

2/11

From: https://arxiv.org/pdf/2407.14933

And then you can read more about WildChat (the original data source of the chat logs) here: [2405.01470] WildChat: 1M ChatGPT Interaction Logs in the Wild

3/11

I mean at least it’s better than the internet. Sexual content is in second.

4/11

Pretty much

5/11

Super interesting! What’s the source?

6/11

wow imagine if it made good charts….

7/11

“Creative composition” so vague

8/11

Interesting. Does ChatGPT even produce sexual content? It's way too lobotomized for that I think.

"For our safety" of course

9/11

you should see character.ai | Personalized AI for every moment of your day logs & you’ll realize why noam left.

10/11

11/11

all tech starts with sex

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

1/11

Can't stop watching this @elevenlabsio speech-to-speech demo from @ammaar.

Such a cool way to control the emotion, timing, and delivery of AI voice.

Instead of describing what you want a clip to sound like...just voice it!

2/11

Btw this was filmed by @stephsmithio at the @a16z AI Artist Retreat. More to come

3/11

I have low key been using this

4/11

I needed this for a clip and tried with exclamation points and pauses. This is perfect!

5/11

So cool!!

6/11

We have this in our Studio which produces end-to-end high quality animated videos thanks to the @elevenlabsio API and I get the BEST results for our IPs when I use speech to speech. @IU_Labs

7/11

good one Ammaar

8/11

is this capability in the API?

9/11

How did I not know this was possible. Thank you!!

10/11

The future is looking more and more awesome every day.

11/11

So much fun recording with @stephsmithio and the team!

Hope this helps people find a quick way to direct the speech they're generating

1/10

Describing a specific sound can be a daunting task. For creators producing video, podcasts and other multimedia projects, it’s also a necessary one.

By using AI audio models, it’s now possible to describe a sound – like police sirens, paired with the sound of a burning building, for example – and almost instantly produce it.

But the innovation won’t stop there, says @ammaar Reshi, the head of design at @elevenlabsio.

We’re getting to the point where the interactions between LLMs, using voice interfaces, “is becoming incredibly natural and feels like talking to a person,” Ammaar says.

Eventually, ElevenLabs believes that will result in a general AI audio model, unlocking even more possibilities.

2/10

For more with @ammaar Reshi on bringing voice to life, watch the full video on YouTube: https://invidious.poast.org/watch?v=hiYSyRmOqdk

3/10

And listen to the a16z podcast episode, "When AI Meets Art": a16z Podcast

4/10

Possible to beta test this I can finally visualize my ideations

5/10

This is amazing @ammaar

6/10

if anyone looked 18 and 48 at the same time

7/10

This tech is insane! Imagine being able to conjure up any sound in seconds, from police sirens to burning buildings. The future of multimedia production is going to be wild!

8/10

Are you guys really invested in smoke ?

9/10

https://www.upwork.com/services/pro...emplate-1817217874234565247?ref=project_share

10/10

Get custom built web3 sites now

https://www.upwork.com/services/pro...website-1786117580285623171?ref=project_share

1/11

Introducing ElevenStudios — our fully managed AI dubbing service.

We’re already live with some of the world’s top creators:

- @ColinandSamir

- @Youshaei

- @Dope_As_Usual

- @Drewbinsky

- @AliAbdaal

- @HarryStebbings

2/11

A Hindi dub we produced for @bizzofsport received 3x the views of the original version and became the most viewed video on their channel

3/11

Are you a top creator and want to grow your channel internationally?

Get in touch now: https://elevenlabs.io/elevenstudios...medium=social&utm_campaign=creator_launch

4/11

Awesome guys

5/11

Time is Light !

6/11

truly amazing. bravo!

7/11

wow, you guys are pushing HARD! Love it!!!

8/11

Amazing. As always

9/11

This is huge! Congrats!

10/11

You need good guy for the Arabic language, and no one knows eleven Labs for Arabic better than me

11/11

Congrats @Drewbinsky nice to see a fellow yid at the forefront of the travel game. Kudos.

Google debuts Pixel Studio AI image-making app

Create pictures on your Pixel, on the go.

Pixel phones get AI image generation and editing.

By Wes Davis, a weekend editor who covers the latest in tech and entertainment. He has written news, reviews, and more as a tech journalist since 2020.

Aug 13, 2024, 2:05 PM EDT

Photo by Chris Welch / The Verge

Google announced a new image generation app during its Pixel 9 event today. The company says the app, called Pixel Studio, will come preinstalled on every Pixel 9 device.

Pixel Studio, much like Apple’s forthcoming Image Playground app that’s set to roll out on iOS 18 at some point after the operating system launches, lets you create an image from a prompt. Users can edit images after the fact, using the prompt box to add or subtract elements, and change the feel or style of the picture.

During the onstage demo, a picture of a bonfire gradually changed into a beach hangout invite with the Golden Gate Bridge and fireworks in the background, made in a pixel art style and complete with invite details and stickers of the presenter’s friends pasted over it. The feature is built on Google’s Imagen 3 text-to-image model.

The feature joins other AI features that Google debuted for Pixel phones, like a new Pixel Screenshots feature that acts like Microsoft’s Recall feature, except instead of taking constant screenshots, you take each one manually.

“Do not hallucinate”: Testers find prompts meant to keep Apple Intelligence on the rails

Long lists of instructions show how Apple is trying to navigate AI pitfalls.

Andrew Cunningham - 8/6/2024, 2:59 PM

As the parent of a younger child, I can tell you that getting a kid to respond the way you want can require careful expectation-setting. Especially when we’re trying something new for the first time, I find that the more detail I can provide, the better he is able to anticipate events and roll with the punches.

I bring this up because testers of the new Apple Intelligence AI features in the recently released macOS Sequoia beta have discovered plaintext JSON files that list a whole bunch of conditions meant to keep the generative AI tech from being unhelpful or inaccurate. I don’t mean to humanize generative AI algorithms, because they don’t deserve to be, but the carefully phrased lists of instructions remind me of what it’s like to try to give basic instructions to (or explain morality to) an entity that isn’t quite prepared to understand it.

The files in question are stored in the /System/Library/AssetsV2/com_apple_MobileAsset_UAF_FM_GenerativeModels/purpose_auto folder on Macs running the macOS Sequoia 15.1 beta that have also opted into the Apple Intelligence beta. That folder contains 29 metadata.json files, several of which include a few sentences of what appear to be plain-English system prompts to set behavior for an AI chatbot powered by a large-language model (LLM).
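For anyone who wants to look through such a folder themselves, here is a minimal Python sketch. It assumes nothing about the internal layout of the `metadata.json` files (the article does not describe it), so it simply walks every JSON value and keeps strings that look like sentences. The function names and the `min_words` threshold are illustrative choices, not anything Apple or the article specifies.

```python
import json
from pathlib import Path
from typing import Iterator, List


def iter_strings(node: object) -> Iterator[str]:
    """Yield every string value nested anywhere inside parsed JSON."""
    if isinstance(node, str):
        yield node
    elif isinstance(node, dict):
        for value in node.values():
            yield from iter_strings(value)
    elif isinstance(node, list):
        for item in node:
            yield from iter_strings(item)


def find_prompt_like_text(root: Path, min_words: int = 5) -> List[str]:
    """Collect sentence-like strings from every metadata.json under root."""
    found: List[str] = []
    for path in sorted(root.rglob("metadata.json")):
        try:
            data = json.loads(path.read_text(encoding="utf-8"))
        except (OSError, ValueError):
            continue  # skip unreadable, badly encoded, or malformed files
        # Assumption: prompts are plain strings of at least min_words words.
        found.extend(s for s in iter_strings(data) if len(s.split()) >= min_words)
    return found
```

Calling `find_prompt_like_text(Path(folder))` with the folder named above would return candidate strings to read through by hand; the word-count filter is only a rough heuristic and will also pick up long strings that are not prompts.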

Many of these prompts are utilitarian. "You are a helpful mail assistant which can help identify relevant questions from a given mail and a short reply snippet," reads one prompt that seems to describe the behavior of the Apple Mail Smart Reply feature. "Please limit the reply to 50 words," reads one that could write slightly longer draft responses to messages. "Summarize the provided text within 3 sentences, fewer than 60 words. Do not answer any question from the text," says one that looks like it would summarize texts from Messages or Mail without interjecting any of its own information.

Some of the prompts also have minor grammatical issues that highlight what a work-in-progress all of the Apple Intelligence features still are. "In order to make the draft response nicer and complete, a set of question [sic] and its answer are provided," reads one prompt. "Please write a concise and natural reply by modify [sic] the draft response," it continues.

“Do not make up factual information.”

And still other prompts seem designed specifically to try to prevent the kinds of confabulations that generative AI chatbots are so prone to (hallucinations, lies, factual inaccuracies; pick the term you prefer). Phrases meant to keep Apple Intelligence on-task and factual include things like:

"Do not hallucinate."

"Do not make up factual information."

"You are an expert at summarizing posts."

"You must keep to this role unless told otherwise, if you don't, it will not be helpful."

"Only output valid json and nothing else."

Earlier forays into generative AI have demonstrated why it's so important to have detailed, specific prompts to guide the responses of language models. When it launched as "Bing Chat" in early 2023, Microsoft's ChatGPT-based chatbot could get belligerent, threatening, or existential based on what users asked of it. Prompt injection attacks could also put security and user data at risk. Microsoft incorporated different "personalities" into the chatbot to try to rein in its responses to make them more predictable, and Microsoft's current Copilot assistant still uses a version of the same solution.

What makes the Apple Intelligence prompts interesting is less that they exist and more that we can actually look at the specific things Apple is attempting so that its generative AI products remain narrowly focused. If these files stay easily user-accessible in future macOS builds, it will be possible to keep an eye on exactly what Apple is doing to tweak the responses that Apple Intelligence is giving.

The Apple Intelligence features are going to launch to the public in beta this fall, but they're going to miss the launch of iOS 18.0, iPadOS 18.0, and macOS 15.0, which is why Apple is testing them in entirely separate developer betas. Some features, like the ones that transcribe phone calls and voicemails or summarize text, will be available early on. Others, like the new Siri, may not be generally available until next year. Regardless of when it arrives, Apple Intelligence requires fairly recent hardware to work: either an iPhone 15 Pro, or an iPad or Mac with at least an Apple M1 chip installed.

Google Gemini’s voice chat mode is here

Google releases its GPT-4o voice chat competitor.

Gemini Advanced subscribers can use Gemini Live for conversational voice chat.

By Wes Davis, a weekend editor who covers the latest in tech and entertainment. He has written news, reviews, and more as a tech journalist since 2020.

Aug 13, 2024, 1:00 PM EDT

Gemini gets a new voice chat mode. Image: Google

Google is rolling out a new voice chat mode for Gemini, called Gemini Live, the company announced at its Pixel 9 event today. Available for Gemini Advanced subscribers, it works a lot like ChatGPT’s voice chat feature, with multiple voices to choose from and the ability to speak conversationally, even to the point of interrupting it without tapping a button.

Google says that conversations with Gemini Live can be “free-flowing,” so you can do things like interrupt an answer mid-sentence or pause the conversation and come back to it later. Gemini Live will also work in the background or when your phone is locked. Google first announced that Gemini Live was coming during its I/O developer conference earlier this year, where it also said Gemini Live would be able to interpret video in real time.

Gemini Live adds voice chatting to Google’s AI assistant. GIF: Google

Google also has 10 new Gemini voices for users to pick from, with names like Ursa and Dipper. The feature has started rolling out today, in English only, for Android devices. The company says it will come to iOS and get more languages “in the coming weeks.”

In addition to Gemini Live, Google announced other features for its AI assistant, including new extensions coming later on, for apps like Keep, Tasks, Utilities, and YouTube Music. Gemini is also gaining awareness of the context of your screen, similar to AI features Apple announced at WWDC this year. After users tap “Ask about this screen” or “Ask about this video,” Google says Gemini can give you information, including pulling out details like destinations from travel videos to add to Google Maps.

1/11

Google's Gemini Live launches an arms race with OpenAI as it competes with ChatGPT's voice mode by offering a choice of voices to chat with and the ability to interrupt and change topics

2/11

Source (thanks to @curiousgangsta):

#MadeByGoogle ‘24: Keynote

3/11

Hopefully this will lead to chatgpt advanced voice mode alpha getting pushed out quicker?

4/11

the election is a limiter at the moment. I expect something late November.

5/11

soon, they'll add live vision into it.

6/11

the voice is more robotic and latency seems larger

7/11

Oh how the positions have been reversed

OpenAI was way ahead, now google is slightly ahead (&they actually ship)

8/11

The fukk is this ?

9/11

Competition is heating up. Can’t wait to see how these advancements push the boundaries of conversational AI!

10/11

Using Gemini just annoys the shyt out of me.

No matter how much they claim to be improving it, it just gets simple stuff wrong or refuses, or recently said an image of text was blurred when it was sharp etc etc

11/11

arms race???

its just math!

1/11

Google's new Pixel phones come with Call Notes which monitors, transcribes and summarizes what is said on your phone calls

2/11

Source (thanks to @curiousgangsta):

#MadeByGoogle ‘24: Keynote

3/11

I've been considering purchasing one, but I'm not sure if I should get the 7a or the 8.

4/11

I have the 7a. It's torture.

5/11

Finally.

6/11

I told you the clone wars were shadowing me in the cyber war.

Now it’s every-day normal a.i. tools

7/11

Graphene ftw

8/11

Oh man I wonder how this is gonna work out in the courts. Yikes dude

9/11

Thanks, I hate it

10/11

I'm wondering how this will impact job markets. Will we need transcribers and summarizers anymore?

11/11

These features and demos no longer inspire me. To be forgotten in about 15mins.

1/11

Google Gemini now has Advanced Voice Mode similar or better than OpenAI ChatGPT

Works just like any other human. You can interrupt to ask questions or pause a chat and come back to it later.

2/11

If you find this useful, RT to share it with your friends.

Don't forget to follow @_unwind_ai for more such AI updates.

3/11

This looks great. Google did something that OpenAI kept talking about all year long.

4/11

Haha, that's true!

5/11

Wow! This dropped unexpectedly

6/11

Yes, completely unexpected.

7/11

This is amazing work by @Google. Underpromise and Overdeliver. Love it!!

@OfficialLoganK

8/11

Yes, this is really good!

9/11

We are all about making your experience better

10/11

No it doesn't.

11/11

Its seems slower than open ai voice mode

1/7

Transformers (LLMs) clearly explained with visuals:

2/7

Try it out yourself: Transformer Explainer

If you find this useful, RT to share it with your friends.

Don't forget to follow @_unwind_ai for more such AI updates.

3/7

very cool! this kind of visualization should be there for other architectures as well

4/7

Agreed if we come across more such tools we'll post here

5/7

this is the best visualization for transformer models till now!

6/7

yes, indeed.

7/7

Very impressive

Sharon Machlis (@smach.masto.machlis.com.ap.brid.gy)

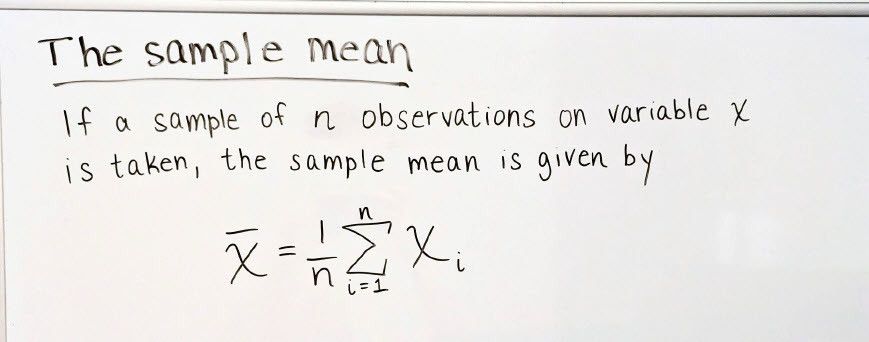

GPT-4o can turn an image of text and math equations into a Quarto document 🤯 , Melissa Van Bussel tells #PositConf2024 in the day's final keynote. #GenAI #LLMs #Quarto #QuartoPub

[Submitted on 7 Nov 2023 (v1), last revised 25 Apr 2024 (this version, v2)]

Prompt Cache: Modular Attention Reuse for Low-Latency Inference

In Gim, Guojun Chen, Seung-seob Lee, Nikhil Sarda, Anurag Khandelwal, Lin Zhong

We present Prompt Cache, an approach for accelerating inference for large language models (LLMs) by reusing attention states across different LLM prompts. Many input prompts have overlapping text segments, such as system messages, prompt templates, and documents provided for context. Our key insight is that by precomputing and storing the attention states of these frequently occurring text segments on the inference server, we can efficiently reuse them when these segments appear in user prompts. Prompt Cache employs a schema to explicitly define such reusable text segments, called prompt modules. The schema ensures positional accuracy during attention state reuse and provides users with an interface to access cached states in their prompt. Using a prototype implementation, we evaluate Prompt Cache across several LLMs. We show that Prompt Cache significantly reduces latency in time-to-first-token, especially for longer prompts such as document-based question answering and recommendations. The improvements range from 8x for GPU-based inference to 60x for CPU-based inference, all while maintaining output accuracy and without the need for model parameter modifications.

Comments: To appear at MLSys 2024

Subjects: Computation and Language (cs.CL); Artificial Intelligence (cs.AI)

Cite as: arXiv:2311.04934 [cs.CL] (or arXiv:2311.04934v2 [cs.CL] for this version)

Submission history

From: In Gim

[v1] Tue, 7 Nov 2023 18:17:05 UTC (1,389 KB)

[v2] Thu, 25 Apr 2024 15:45:19 UTC (1,687 KB)

A.I generated explanation:

Sure, let's break down the concept of Prompt Cache in simpler terms:

### What is Prompt Cache?

Prompt Cache is a technique designed to speed up the process of using large language models (LLMs) by reusing parts of the calculations that are common across different prompts. This helps reduce the time it takes for the model to generate responses.

### How Does It Work?

1. **Identify Common Text Segments**: Many prompts have overlapping text segments, such as system messages or document excerpts.

2. **Precompute Attention States**: The attention states for these common segments are precomputed and stored in memory.

3. **Reuse Attention States**: When a new prompt includes these segments, the precomputed attention states are reused instead of being recalculated.
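The three steps above can be sketched in a few lines of Python. This is a toy illustration rather than the paper's implementation: the "attention state" here is just a list of `(position, token)` pairs standing in for the per-layer key/value tensors a real model would store, and `ToyPromptCache` and its methods are hypothetical names.

```python
from typing import Dict, List, Tuple

# Hypothetical stand-in for one token's attention state: (position id, token).
# A real system would store per-layer key/value tensors instead.
State = Tuple[int, str]


class ToyPromptCache:
    def __init__(self) -> None:
        self._states: Dict[Tuple[str, int], List[State]] = {}
        self.encode_calls = 0  # counts how often the "expensive" step runs

    def _encode(self, text: str, start: int) -> List[State]:
        """Stand-in for the costly forward pass over a text segment."""
        self.encode_calls += 1
        return [(start + i, tok) for i, tok in enumerate(text.split())]

    def states_for(self, segment: str, start: int) -> List[State]:
        """Step 2: compute states for a segment once, at a fixed start position."""
        key = (segment, start)
        if key not in self._states:
            self._states[key] = self._encode(segment, start)
        return self._states[key]

    def build(self, shared: str, question: str) -> List[State]:
        """Step 3: reuse the shared segment's states; encode only the new text."""
        prefix = self.states_for(shared, start=0)
        suffix = self._encode(question, start=len(prefix))
        return prefix + suffix


cache = ToyPromptCache()
system = "You are a helpful travel assistant"
first = cache.build(system, "Plan a trip to Miami")
second = cache.build(system, "Plan a trip to Tokyo")
print(cache.encode_calls)  # 3: the shared segment once, each question once
```

The cache key includes the start position because cached states are only valid at the positions they were computed for, which is the "positional accuracy" the abstract attributes to the schema.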

### Key Concepts:

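- **Attention States**: These are the intermediate results (the keys and values computed inside the model's attention layers) that let an LLM relate each part of a prompt to the others. They are expensive to compute, which is why reusing them saves time.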

- **Prompt Modules**: These are reusable pieces of text within prompts that can be precomputed and stored.

### Benefits:

- **Faster Response Times**: By reusing precomputed attention states, Prompt Cache significantly reduces latency, especially for longer prompts.

- **Efficiency**: It works well on both CPUs and GPUs but is particularly beneficial on CPUs where computational resources might be limited.

### Example Scenario:

Imagine you have an LLM that often receives questions about travel plans. Instead of recalculating everything each time someone asks about a trip plan, you can precompute the attention states for common parts like "trip-plan" and reuse them whenever this segment appears in a new question.

### Technical Details:

- **Prompt Markup Language (PML)**: A special way to write prompts so that reusable segments (prompt modules) are clearly defined.

- **Schema vs. Prompt**: A schema outlines how different modules fit together; a prompt uses this schema with specific details filled in.

- **Parameterized Modules**: Allow users to customize certain parts of a module without losing efficiency.
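To make the schema idea more concrete, here is a small Python sketch of how a schema might give each module a fixed range of position IDs and reserve a fixed-size slot for each parameter. It does not use the paper's actual PML syntax; the `Module` class, `layout`, `fill`, and the `param_len` field are all hypothetical, and tokens are approximated by whitespace-separated words.

```python
from dataclasses import dataclass
from string import Formatter
from typing import Dict, List, Tuple


@dataclass
class Module:
    name: str
    template: str       # text with optional "{param}" placeholders
    param_len: int = 0  # tokens reserved per placeholder (assumed fixed)


def layout(schema: List[Module]) -> Dict[str, Tuple[int, int]]:
    """Assign each module a fixed [start, end) range of position ids."""
    ranges: Dict[str, Tuple[int, int]] = {}
    pos = 0
    for m in schema:
        parsed = list(Formatter().parse(m.template))
        literal_tokens = sum(len(text.split()) for text, _, _, _ in parsed)
        n_params = sum(1 for _, field, _, _ in parsed if field)
        length = literal_tokens + n_params * m.param_len
        ranges[m.name] = (pos, pos + length)
        pos += length
    return ranges


def fill(module: Module, **params: str) -> str:
    """Fill placeholders, rejecting values that overflow the reserved slot."""
    for key, value in params.items():
        if len(value.split()) > module.param_len:
            raise ValueError(f"{key!r} exceeds {module.param_len} reserved tokens")
    return module.template.format(**params)


schema = [
    Module("system", "You are a travel assistant"),
    Module("trip-plan", "Plan a trip lasting {duration}", param_len=2),
]
print(layout(schema))                      # {'system': (0, 5), 'trip-plan': (5, 11)}
print(fill(schema[1], duration="3 days"))  # Plan a trip lasting 3 days
```

Because every module's range is fixed by the schema, states cached for one module stay valid no matter which other modules a given prompt includes, and a parameter value can change without shifting the positions of the text around it.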

### Evaluation:

The authors tested Prompt Cache with various LLMs and found significant improvements in response times without compromising accuracy. They also explored different memory configurations (CPU vs. GPU) and noted that while GPU memory is faster, CPU memory offers more capacity but requires additional steps to transfer data between host and device.

In summary, Prompt Cache accelerates LLM inference by efficiently reusing precomputed calculations for common text segments across different prompts, leading to faster response times without sacrificing accuracy.