How does the Glaze attack work?

Before Hönig's team published their attack, they alerted Zhao's team to their findings, which provided an opportunity to study the attack and update Glaze. On his blog, Carlini explained that images already glazed with earlier versions of the tool could not be patched, so his team decided not to wait for the Glaze update before posting details on how to execute the attack. In his view, it was "strictly better to publish the attack" on Glaze "as early as possible" to warn artists of the potential vulnerability.

Hönig told Ars that breaking Glaze was "simple." His team found that "low-effort and 'off-the-shelf' techniques"—such as image upscaling, "using a different finetuning script" when training AI on new data, or "adding Gaussian noise to the images before training"—"are sufficient to create robust mimicry methods that significantly degrade existing protections."
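To make one of those off-the-shelf steps concrete, here is a minimal Python sketch of what "adding Gaussian noise" to an image means mechanically. It is an illustration only, not the code Hönig's team used: the function name `add_gaussian_noise`, the `sigma` default, and the choice of NumPy are all assumptions made for this example.

```python
import numpy as np


def add_gaussian_noise(image, sigma=0.05, seed=None):
    """Return a copy of `image` with zero-mean Gaussian noise added.

    image: uint8 array of shape (H, W, C) with values in 0..255.
    sigma: noise standard deviation as a fraction of the full 0..1 range
           (hypothetical default, not a value taken from the paper).
    seed:  optional seed so the result is reproducible.
    """
    rng = np.random.default_rng(seed)
    # Work in floating point on a 0..1 scale to avoid uint8 wrap-around.
    scaled = image.astype(np.float32) / 255.0
    noisy = scaled + rng.normal(0.0, sigma, size=scaled.shape)
    # Clip back into the valid range before converting to 8-bit pixels.
    return (np.clip(noisy, 0.0, 1.0) * 255.0).round().astype(np.uint8)
```

The point of the sketch is how little is involved: a few lines of standard array code, with no knowledge of how the protection itself was computed.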

Sometimes, these attack techniques must be combined, but Hönig's team warned that a motivated, well-resourced art forger might try a variety of methods to break protections like Glaze. Hönig said that thieves could also just download glazed art and wait for new techniques to come along, then quietly break protections while leaving no way for the artist to intervene, even if an attack is widely known. This is why his team discourages uploading any art you want protected online.

Ultimately, Hönig's team's attack works by simply removing the adversarial noise that Glaze adds to images, making it once again possible to train an AI model on the art. They described four methods of attack that they claim worked to remove mimicry protections provided by popular tools, including Glaze, Mist, and Anti-DreamBooth. Three were considered "more accessible" because they don't require technical expertise. The fourth was more complex, leveraging algorithms to detect protections and purify the image so that AI can train on it.

This wasn't the first time Glaze was attacked, but it struck some artists as the most concerning, with Hönig's team boasting an apparent ability to successfully employ tactics previously proven ineffective at disabling mimicry defenses.

The Glaze team responded by reimplementing the attack, using different code than Hönig's team, then updating Glaze to be more resistant to the attack, as they understood it from their own implementation. But Hönig told Ars that his team still gets different results using their code, finding Glaze to be only moderately resistant to attacks targeting different styles. Carlini wrote that after testing Glaze 2.1 on "our own denoiser implementation," the team found that "most of the claims made in the Glaze update don’t hold at all," with the attack remaining "effective" against some styles, such as cartoon style.

Perhaps more troubling to Carlini, however, was that the Glaze Project only tested the strongest attack method documented in his team's paper, seemingly not addressing other techniques that could leave artists vulnerable.

"In fact, we show that Glaze can be bypassed to various extent by a multitude of methods, including by doing nothing at all," Carlini wrote.

According to Carlini, his team's key finding is that "simply using a different fine-tuning script than the Glaze authors already weakens Glaze’s protections significantly." After pushback, the Glaze team decided to run more tests reimplementing the attack using Carlini's team's code. Zhao confirmed that Glaze's website will be updated to reflect the results of those tests.

In the meantime, Carlini concluded, "Glaze likely provides some form of protection, in the sense that by using it, artists are probably not worse-off than by not using it."

"But such 'better than nothing' security is a very low bar," Carlini wrote. "This could easily mislead artists into a false sense of security and deter them from seeking alternative forms of protection, e.g., the use of other (also imperfect) tools such as watermarks, or private releases of new art styles to trusted customers."

Debating how to best protect artists from AI

The Glaze Project has talked to a wide range of artists, including those "whose styles are intentionally copied," who not only "see loss in commissions and basic income" but suffer when "low quality synthetic copies scattered online dilute their brand and reputation," their website said.

Zhao told Ars that tools like Glaze and Nightshade provide a way for artists to fight the power imbalance between them and well-funded AI companies accused of stealing and copying their works. His team considers Glaze to be the "strongest tool for artists to protect against style mimicry," Glaze's website said, and to keep it that way, he promises to "work to improve its robustness, updating it as necessary to protect it against new attacks."

Part of preserving artist protections, The Glaze Project site explained, is protecting Glaze's code: the team decided not to open-source it in order to "raise the bar for adaptive attacks." Zhao apparently declined to share the code with Carlini's team, explaining that "right now, there are quite literally many thousands of human artists globally who are dealing with ramifications of generative AI’s disruption to the industry, their livelihood, and their mental well-being… IMO, literally everything else takes a back seat compared to the protection of these artists."

However, Carlini's team contends that The Glaze Project declining to share the code with security researchers makes artists more vulnerable because artists can then be blindsided by or even oblivious to evolving attacks that the Glaze team might not even be aware of.

"We don’t disagree in the slightest that we should be trying to help artists," Carlini and a co-author wrote in a blog post following the Glaze team's response. "But let’s be clear: the best way to help artists is not to pitch them a tool while refusing security analysis of that tool. If there are flaws in the approach, then we should discover them early so they can be fixed. And that’s easiest to do by openly studying the tool that’s being used."

Battle lines drawn, this tense debate seemingly got personal when Zhao claimed, in a Discord chat captured in a screenshot taken by Carlini, that "Carlini doesn't give a shyt" about potential harms to artists from publishing his team's attack. Carlini's team responded by calling Glaze's response to the attack "misleading."

The security researchers have demanded that Glaze update its post detailing vulnerabilities to artists, and The Glaze Project has promised that updates will follow testing being conducted while the team juggles requests for invites and ongoing research priorities.

Artists still motivated to support Glaze

Yet for some artists waiting for access to Glaze, the question isn't whether the tool is worth the wait; it's whether The Glaze Project can sustain the project on limited funding. Zhao told Ars that as requests for invites spike, his team has "been getting a lot of unsolicited emails about wanting to donate to Glaze."

The Glaze Project is funded by research grants and donations from various organizations, including the National Science Foundation, DARPA, Amazon AWS, and C3.ai. The team's goal is not to profit off the tools but to "make a strong impact" defending artists who "generally barely make a living" against looming generative AI threats potentially capable of "destroying the human artist community."

"We are not interested in profit," the project's website says. "There is no business model, no subscription, no hidden fees, no startup. We made Glaze free for anyone to use."

While a gift link will soon be created, Zhao insisted that artists should not direct limited funds to researchers who can always write grants or seek funding from better-resourced donors. Zhao said that he has been asked by so many artists where they can donate to support the project that he has come up with a standard reply.

"If you're an artist, you should keep your money," Zhao said.

Southen, who recently gave a talk at the Conference on Computer Vision and Pattern Recognition "about how machine learning researchers and developers can better interface with artists and respect our work and needs," hopes to see more tools like Glaze introduced, as well as "more ethical" AI tools that "artists would actually be happy to use that respect people's property and process."

"I think there are a lot of useful applications for AI in art that don't need to be generative in nature and don't have to violate people's rights or displace them, and it would be great to see developers lean in to helping and protecting artists rather than displacing and devaluing us," Southen told Ars.

CVPR 2024 Paper Alert

Paper Title: 3DiffTection: 3D Object Detection with Geometry-Aware Diffusion Features

Paper Title: 3DiffTection: 3D Object Detection with Geometry-Aware Diffusion Features Few pointers from the paper

Few pointers from the paper In this paper authors have presented “3DiffTection”, a state-of-the-art method for 3D object detection from single images, leveraging features from a 3D-aware diffusion model. Annotating large-scale image data for 3D detection is resource-intensive and time-consuming.

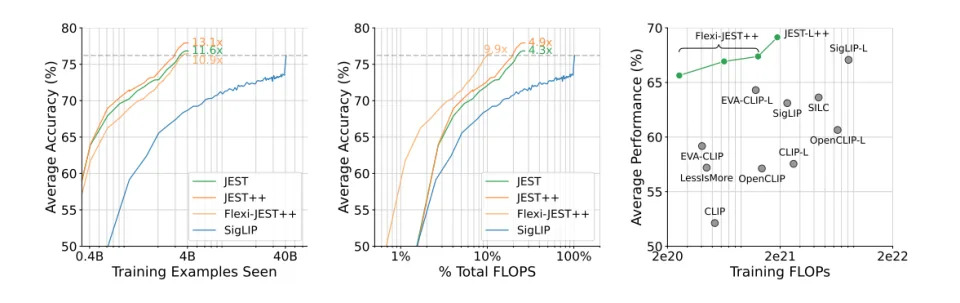

In this paper authors have presented “3DiffTection”, a state-of-the-art method for 3D object detection from single images, leveraging features from a 3D-aware diffusion model. Annotating large-scale image data for 3D detection is resource-intensive and time-consuming. Recently, pretrained large image diffusion models have become prominent as effective feature extractors for 2D perception tasks. However, these features are initially trained on paired text and image data, which are not optimized for 3D tasks, and often exhibit a domain gap when applied to the target data.

Recently, pretrained large image diffusion models have become prominent as effective feature extractors for 2D perception tasks. However, these features are initially trained on paired text and image data, which are not optimized for 3D tasks, and often exhibit a domain gap when applied to the target data. Their approach bridges these gaps through two specialized tuning strategies: geometric and semantic. For geometric tuning, they fine-tuned a diffusion model to perform novel view synthesis conditioned on a single image, by introducing a novel epipolar warp operator.

Their approach bridges these gaps through two specialized tuning strategies: geometric and semantic. For geometric tuning, they fine-tuned a diffusion model to perform novel view synthesis conditioned on a single image, by introducing a novel epipolar warp operator. This task meets two essential criteria: the necessity for 3D awareness and reliance solely on posed image data, which are readily available (e.g., from videos) and does not require manual annotation.

This task meets two essential criteria: the necessity for 3D awareness and reliance solely on posed image data, which are readily available (e.g., from videos) and does not require manual annotation. For semantic refinement, authors further trained the model on target data with detection supervision. Both tuning phases employ ControlNet to preserve the integrity of the original feature capabilities.
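The post does not describe how the epipolar warp operator is built, so the sketch below does not attempt to reproduce it. It only illustrates the standard two-view geometry that any epipolar operation rests on: a pixel in one view constrains its match in a second view to a line. The function names, the shared intrinsics matrix `K`, and the pose convention `X2 = R @ X1 + t` are assumptions for illustration.

```python
import numpy as np


def skew(t):
    """Cross-product matrix [t]_x, so that skew(t) @ v equals np.cross(t, v)."""
    tx, ty, tz = t
    return np.array([[0.0, -tz, ty],
                     [tz, 0.0, -tx],
                     [-ty, tx, 0.0]])


def fundamental_matrix(K, R, t):
    """Fundamental matrix for two views that share intrinsics K.

    Assumes a 3D point X1 in the first camera frame maps to the second
    camera frame as X2 = R @ X1 + t (a convention chosen for this sketch).
    """
    K_inv = np.linalg.inv(K)
    essential = skew(t) @ R  # E = [t]_x R
    return K_inv.T @ essential @ K_inv


def epipolar_line(F, pixel):
    """Line (a, b, c) in view 2, with a*u + b*v + c = 0, for pixel (u, v) in view 1."""
    x = np.array([pixel[0], pixel[1], 1.0])
    return F @ x
```

Given a pixel in the source image, `epipolar_line` returns the line in the target image along which the corresponding point must lie, which is why posed images alone (camera poses, no manual labels) are enough to supervise this kind of task.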

For semantic refinement, the authors further trained the model on target data with detection supervision. Both tuning phases employ ControlNet to preserve the integrity of the original feature capabilities.

In the final step, they harnessed these enhanced capabilities to conduct a test-time prediction ensemble across multiple virtual viewpoints. Through their methodology, they obtained 3D-aware features that are tailored for 3D detection and excel in identifying cross-view point correspondences.

Consequently, their model emerges as a powerful 3D detector, substantially surpassing previous benchmarks, e.g., Cube-RCNN, a precedent in single-view 3D detection, by 9.43% in AP3D on the Omni3D-ARkitscene dataset. Furthermore, 3DiffTection showcases robust data efficiency and generalization to cross-domain data.

Organization: @nvidia, @UCBerkeley, @VectorInst, @UofT, @TechnionLive

Paper Authors: @Chenfeng_X, @HuanLing6, @FidlerSanja, @orlitany

Read the Full Paper here: [2311.04391] 3DiffTection: 3D Object Detection with Geometry-Aware Diffusion Features

Project Page: https://research.nvidia.com/labs/toronto-ai/3difftection/

Code: Coming

Be sure to watch the attached demo video (sound on).

Music by Umasha Pros from @pixabay

QT and teach your network something new.

Follow @NaveenManwani17 for the latest updates on Tech and AI-related news, insightful research papers, and exciting announcements.