You are using an out of date browser. It may not display this or other websites correctly.

You should upgrade or use an alternative browser.

You should upgrade or use an alternative browser.

The A.I Megathread (LLM , GPT , Development)

More options

Who Replied?06-21-2024 TECH

Perplexity CEO Aravind Srinivas responds to plagiarism and infringement accusations

Recent reports raise questions about how the answer engine works, including its use of third-party content crawlers.

[Photo: SAUL LOEB/AFP via Getty Images]

BY Mark Sullivan3 minute read

The AI search startup Perplexity is in hot water in the wake of a Wired investigation revealing that the startup has been crawling content from websites that don’t want to be crawled.

Perplexity’s “answer engine” works by crawling large swaths of information on the web and then creating a big database (an index) of content it grabs from web pages. Instead of typing keywords into a search box, users type or speak questions into Perplexity’s web portal or mobile apps, and receive a narrative answer with citations and links to the web content it draws upon.

Websites can use something called a Robots Exclusion Protocol to keep their content away from web crawlers, which bots are supposed to honor, though compliance is voluntary. Wired, along with an independent researcher, says it has proof that Perplexity has been ignoring those codes and scraping content from off-limits sites anyway.

“Perplexity is not ignoring the Robot Exclusions Protocol and then lying about it,” said Perplexity cofounder and CEO Aravind Srinivas in a phone interview Friday. “I think there is a basic misunderstanding of the way this works,” Srinivas said. “We don’t just rely on our own web crawlers, we rely on third-party web crawlers as well.”

Srinivas said the mysterious web crawler that Wired identified was not owned by Perplexity, but by a third-party provider of web crawling and indexing services. Srinivas would not say the name of the third-party provider, citing a Nondisclosure Agreement. Asked if Perplexity immediately called the third-parter crawler to tell them to stop crawling Wired content, Srinivas was non-committal. “It’s complicated,” he said.

Srinivas also noted that the Robot Exclusion Protocol, which was first proposed in 1994, is “not a legal framework.” He suggested that the emergence of AI requires a new kind of working relationship between content creators, or publishers, and sites like his.

Wired also claims that it was able to get the Perplexity answer engine to closely paraphrase Wired articles by prompting the tool with the headlines or substance of Wired articles. At times Perplexity even paraphrased the Wired stories incorrectly. In one case, the Perplexity “answer” falsely claimed that a California police officer had committed a crime.

Srinivas suggested that Wired used prompts designed to get the Perplexity tool to behave that way, and that normal users wouldn’t see those kinds of results. “We have never said that we have never hallucinated,” he added.

Earlier in June, Forbes accused Perplexity of stealing its content. Perplexity had released a new product called “Pages” in May that lets a user create an article or blog post based on a series of questions they’ve asked the answer engine, or based on a single prompt on a specific subject. Users can add AI-generated or uploaded images, then tweak the text or add formatting before publishing to the web. One of Perplexity’s own Pages used content from a Forbes scoop but didn’t credit the publisher. Perplexity even created an AI-voiced podcast based on the Forbes reporting, but again didn’t credit the site.

Being fastidious about citing sources has been one of the Perplexity’s core principles since launch—which made the potential omission of citations in the Pages product even more glaring. Srinivas told Fast Company that after Forbes raised the issue, his company immediately pushed out an update to Pages that puts attributions within the text of the generated article.

Srinivas frequently says that his product will only be good as the internet ecosystem that it draws from. “We are happy creating a less-market cap, lower-margin business, as long as we are profitable and successful—and [we] make sure that the whole internet wins,” he told the audience at a Fast Company’s Most Innovative Companies Gala in May. “Perplexity would be useless if people were not able to create new content on the web.”

He has said that the company is now working on ‘revenue-sharing’ agreements with selected publishers. The publishers have not been named, so no telling if Conde Nast (Wired’s owner) or Forbes is involved in the initiative. The content crawling and indexing issues that Wired turned up could force the company to accelerate its plans to cut fair deals with publishers.

Despite publishers’ wariness, there’s still a lot of good will for Perplexity, which is taking on the unenviable task of challenging Google with a new kind of search. But it can’t afford to squander much more of it.

1/1

Kai-Fu Lee says the cost of AI inference compute will reduce by 100x in the next 2 years due to the scaling law

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

Kai-Fu Lee says the cost of AI inference compute will reduce by 100x in the next 2 years due to the scaling law

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

1/1

Character AI's quite forthright about how they achieved 33x cost decrease in inference costs MQA (lowers by 6x vs GQA) Hybrid attention (1:6 global:local SWA) And these two more creative innovations:

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

Character AI's quite forthright about how they achieved 33x cost decrease in inference costs MQA (lowers by 6x vs GQA) Hybrid attention (1:6 global:local SWA) And these two more creative innovations:

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

1/2

"We have reduced serving costs by a factor of 33 compared to when we began in late 2022. Today, if we were to serve our traffic using leading commercial APIs, it would cost at least 13.5X more than with our systems." The AI winners will be low-cost players. Low-cost comes from the mostly lost art of infrastructure engineering excellence. Impressive from @character_ai

2/2

Optimizing AI Inference at Character.AI

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

"We have reduced serving costs by a factor of 33 compared to when we began in late 2022. Today, if we were to serve our traffic using leading commercial APIs, it would cost at least 13.5X more than with our systems." The AI winners will be low-cost players. Low-cost comes from the mostly lost art of infrastructure engineering excellence. Impressive from @character_ai

2/2

Optimizing AI Inference at Character.AI

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

1/12

AI Text-video is moving SOO quickly... 2 Weeks ago - KLING AI Last week - Luma AI Today - Runway Gen3 Runway Gen3 is the most realistic (lmk your favourite) Here's some examples 1. https://nitter.poast.org/i/status/1802730721334677835/video/1

2/12

2. REALLY realistic

3/12

3. It's really good at expressing human emotion

4/12

4. this is insane

5/12

5. wow.

6/12

Let me know your favourite AI text-video generator. KLING AI? Sora? Luma AI? Runway Gen3? Make sure to follow @ArDeved for everything AI

7/12

Love the progress! Exciting to see how far AI text-video has come.

8/12

definitely

9/12

runway Gen3 is crushing it

10/12

Fr

11/12

This is a very healthy competition. Can’t wait to see full length movies generated with the AI.

12/12

Me too!

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

AI Text-video is moving SOO quickly... 2 Weeks ago - KLING AI Last week - Luma AI Today - Runway Gen3 Runway Gen3 is the most realistic (lmk your favourite) Here's some examples 1. https://nitter.poast.org/i/status/1802730721334677835/video/1

2/12

2. REALLY realistic

3/12

3. It's really good at expressing human emotion

4/12

4. this is insane

5/12

5. wow.

6/12

Let me know your favourite AI text-video generator. KLING AI? Sora? Luma AI? Runway Gen3? Make sure to follow @ArDeved for everything AI

7/12

Love the progress! Exciting to see how far AI text-video has come.

8/12

definitely

9/12

runway Gen3 is crushing it

10/12

Fr

11/12

This is a very healthy competition. Can’t wait to see full length movies generated with the AI.

12/12

Me too!

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

Bloomberg - Are you a robot?

www.bloomberg.com

Top AI Companies Really Want to Make Their Chatbots Funnier

Anthropic, xAI and other artificial intelligence companies see humor as an important feature for their services.Anthropic, xAI and other artificial intelligence companies see humor as an important feature for their services.

The dream of a witty AI co-worker may still be pretty far off.

Photographer: Jaap Arriens/NurPhoto/Getty Images

In this Article

Apple Inc207.49

–1.04%

Microsoft Corp

449.78

+0.92%

OpenAI Inc

Private Company

xAI Corp

Private Company

Have a confidential tip for our reporters? Get in Touch

Before it’s here, it’s on the Bloomberg Terminal

By Shirin Ghaffary

June 21, 2024 at 4:00 PM EDT

Some leading tech companies are looking into one of the biggest challenges for AI: making chatbots funnier. But first..

Three things to know:

• Anthropic is releasing a more capable new AI model in a rivalry with OpenAI

• Apple won’t roll out AI tech in EU market over regulatory concerns

• AI data centers are already wreaking havoc on global power systems

Serious business

Google DeepMind has been working on building artificial intelligence that can tackle some of the world’s most pressing problems, from predicting extreme weather to developing new drug treatments. But recently, researchers there confronted a unique challenge: figuring out if AI can tell a good joke.In a paper published earlier this month, a group of DeepMind researchers, including two who do improv comedy in their spare time, asked 20 comics to share their experiences using leading chatbots to help write jokes. The results were brutal. Interviewees said they found AI to be bland, unoriginal and overly politically correct. One likened AI to “cruise ship comedy material from the 1950s, but a bit less racist.” Some comedians said AI was helpful for coming up with what one called a “vomit” first draft, but few felt they were proud of the material written with AI.

“Our participants described comedy as a deeply human endeavour, requiring writers and performers to draw on personal history, social context, and understanding of their audience,” DeepMind researcher Juliette Love, who co-authored the paper, told me by email. That presents “fundamental challenges” for today’s AI models, which are usually trained on data from a snapshot in time and have little context about the situations in which they’re being used.

DeepMind is far from the only tech company thinking about AI’s sense of humor, or lack thereof. Elon Musk’s xAI has positioned Grok as the funnier alternative to rival chatbots. Anthropic released a new AI model this week, Claude 3.5 Sonnet, which it says is significantly better at grasping nuance and humor, among other improvements. And in one recent OpenAI demo, a user tells a dad joke to the latest, voice-enabled version of GPT to see if the tool appreciates it. The chatbot laughs, though maybe not entirely convincingly.

“Humor is a tough nut to crack,” Daniela Amodei, Anthropic’s president and co-founder, told me this week. “I don't think Claude is as strong as a comedian that you would go pay money to see, but I think we have definitely improved.”

Daniela Amodei, co-founder and president of Anthropic, said “humor is a tough nut to crack.”Photographer: David Paul Morris/Bloomberg

For tech companies, making AI funny is serious business. Much of the emphasis right now is on developing conversational chatbots that can handle increasingly complex queries from users — but also be pleasant enough that users will want to keep engaging with them at home and at work.

“If you think about the people that you probably like working with the best, they’re professional, they're approachable, they’re high integrity, right?” Amodei said. “They’re honest, but they can inject a little bit of humor into a conversation with you.”

In my own experiments with some of the leading chatbots, I found AI humor to be limited. For example, I asked Grok to tell me a joke about OpenAI Chief Executive Officer Sam Altman. There are endless opportunities here, but Grok went with possibly the driest option available: “Why did Sam Altman cross the road? To get to the other side of the AI revolution!”

Ba-dum-tss!

When I asked OpenAI’s ChatGPT, Google’s Gemini and Anthropic’s Claude to come up with answers, they were similarly dull. Claude used the same “crossing the road” setup, with a different punchline: “To pivot to the other side!”

Gemini was a little more original: “Sam Altman walks into a bar and orders a round of drinks for everyone. The bartender says, ‘Wow, that's generous! What’s the occasion?’ Sam replies, ‘Just celebrating achieving AGI... again.’”

Based on those results, it’s tempting to conclude AI chatbots need to be a little edgier, but DeepMind’s Love said taking that approach “could increase potential harm to some groups.”

“We have to strike a careful balance. Humor can be polarizing; the boundary between funny and offensive lies in different places for different audiences,” she wrote in the email. “It’s important to minimize that risk, potentially at the expense of humor.”

The dream of a witty AI co-worker may still be pretty far off. For now, we’ll have to settle for dad jokes.

Got a question about AI? Email me, Shirin Ghaffary, and I’ll try to answer yours in a future edition of this newsletter.

Human quote of the week

“This company is special in that its first product will be the safe superintelligence, and it will not do anything else up until then.”Ilya Sutskever

Former chief scientist at OpenAI

Sutskever, who recently left OpenAI after months of speculation about his fate at the company, revealed plans this week for a new research lab called Safe Superintelligence. Sutskever declined to name financial backers or disclose how much he’s raised.

1/1

New Paper and Blog!

Sakana AI

As LLMs become better at generating hypotheses and code, a fascinating possibility emerges: using AI to advance AI itself! As a first step, we got LLMs to discover better algorithms for training LLMs that align with human preferences.

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

New Paper and Blog!

Sakana AI

As LLMs become better at generating hypotheses and code, a fascinating possibility emerges: using AI to advance AI itself! As a first step, we got LLMs to discover better algorithms for training LLMs that align with human preferences.

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

1/1

Can LLMs invent better ways to train LLMs?

At Sakana AI, we’re pioneering AI-driven methods to automate AI research and discovery. We’re excited to release DiscoPOP: a new SOTA preference optimization algorithm that was discovered and written by an LLM!

Sakana AI

Our method leverages LLMs to propose and implement new preference optimization algorithms. We then train models with those algorithms and evaluate their performance, providing feedback to the LLM. By repeating this process for multiple generations in an evolutionary loop, the LLM discovers many highly-performant and novel preference optimization objectives!

Paper: [2406.08414] Discovering Preference Optimization Algorithms with and for Large Language Models

GitHub: GitHub - SakanaAI/DiscoPOP: Code for Discovering Preference Optimization Algorithms with and for Large Language Models

Model: SakanaAI/DiscoPOP-zephyr-7b-gemma · Hugging Face

We proudly collaborated with the @UniOfOxford (@FLAIR_Ox) and @Cambridge_Uni (@MihaelaVDS) on this groundbreaking project. Looking ahead, we envision a future where AI-driven research reduces the need for extensive human intervention and computational resources. This will accelerate scientific discoveries and innovation, pushing the boundaries of what AI can achieve.

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

Can LLMs invent better ways to train LLMs?

At Sakana AI, we’re pioneering AI-driven methods to automate AI research and discovery. We’re excited to release DiscoPOP: a new SOTA preference optimization algorithm that was discovered and written by an LLM!

Sakana AI

Our method leverages LLMs to propose and implement new preference optimization algorithms. We then train models with those algorithms and evaluate their performance, providing feedback to the LLM. By repeating this process for multiple generations in an evolutionary loop, the LLM discovers many highly-performant and novel preference optimization objectives!

Paper: [2406.08414] Discovering Preference Optimization Algorithms with and for Large Language Models

GitHub: GitHub - SakanaAI/DiscoPOP: Code for Discovering Preference Optimization Algorithms with and for Large Language Models

Model: SakanaAI/DiscoPOP-zephyr-7b-gemma · Hugging Face

We proudly collaborated with the @UniOfOxford (@FLAIR_Ox) and @Cambridge_Uni (@MihaelaVDS) on this groundbreaking project. Looking ahead, we envision a future where AI-driven research reduces the need for extensive human intervention and computational resources. This will accelerate scientific discoveries and innovation, pushing the boundaries of what AI can achieve.

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

Last edited:

Interesting news.

qz.com

qz.com

Also NVIDIA stock is correcting and under the split price

Apple is exploring an AI deal with Meta

The Wall Street Journal reported that the two tech rivals may be finding common ground around the technology

qz.com

qz.com

Also NVIDIA stock is correcting and under the split price

1/1

Cognitive Computations presents: dolphin-2.9.3-Yi-1.5-34B-32k. 2.9.3 has multilingual SystemChat 2.0, 100 languages! Created by

@TheEricHartford ,

@LucasAtkins7

, and

@FernandoNetoAi

This release is possible by generous compute sposorship from

@CrusoeAI

and inference sponsorship from

@OnDemandai

Update: We are working on getting 2.9.3 on a bunch of the base models, and we are also heads down on the 3.0 dataset, and we are soon to release a Dolphin Multimodal. (yes! uncensored!)

Also: Our twitter account is *not* hacked, for all those who were worried. If you are quite concerned, go talk to the mods at Cognitive Computations Discord. More updates to come.

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

Cognitive Computations presents: dolphin-2.9.3-Yi-1.5-34B-32k. 2.9.3 has multilingual SystemChat 2.0, 100 languages! Created by

@TheEricHartford ,

@LucasAtkins7

, and

@FernandoNetoAi

This release is possible by generous compute sposorship from

@CrusoeAI

and inference sponsorship from

@OnDemandai

Update: We are working on getting 2.9.3 on a bunch of the base models, and we are also heads down on the 3.0 dataset, and we are soon to release a Dolphin Multimodal. (yes! uncensored!)

Also: Our twitter account is *not* hacked, for all those who were worried. If you are quite concerned, go talk to the mods at Cognitive Computations Discord. More updates to come.

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

1/11

Pretty amazing stuff from the Udio/Suno lawsuits. Record labels were able to basically recreate versions of very famous songs with highly specific prompts, then linked to them in the lawsuits. I made a short compilation here: Listen to the AI-Generated Ripoff Songs That Got Udio and Suno Sued

2/11

also notable: record industry is not just suing Udio and Suno. It is also seeking to sue & unmask 10 John Does who allegedly helped them scrape the music and make the models

3/11

A full list of AI generated songs that record industry claims are ripped off with links and prompts are available here: https://s3.documentcloud.org/documents/24776032/1-3.pdf and here https://s3.documentcloud.org/documents/24776029/1-2.pdf

4/11

songs they wee able to reproduce:

5/11

It will get nasty after going to discovery and they are forced to disclose exactly how the AI was trained.

6/11

called it in April

7/11

ScarJo vs Openai. Same thing everyone runs with the meme but the audio is not even close. In this case, of couse it has to resemble the original as they are probably using the mimic option to promp the audio with the original song, will they sue every other cover band too? silly.

8/11

A few months ago you were able to get Beatles covers by just asking for a "Bea_tles" song

9/11

The theft from songwriters and musicians is a century old and continuing

10/11

This is biased by lifting the lyrics from the actual songs. Without that I don’t think anyone would say the tune is the same or even similar.

11/11

lol cc: .@joshtpm is there any past example of a hot new industry being sued as rapidly as the AI “creators”? No.

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

Pretty amazing stuff from the Udio/Suno lawsuits. Record labels were able to basically recreate versions of very famous songs with highly specific prompts, then linked to them in the lawsuits. I made a short compilation here: Listen to the AI-Generated Ripoff Songs That Got Udio and Suno Sued

2/11

also notable: record industry is not just suing Udio and Suno. It is also seeking to sue & unmask 10 John Does who allegedly helped them scrape the music and make the models

3/11

A full list of AI generated songs that record industry claims are ripped off with links and prompts are available here: https://s3.documentcloud.org/documents/24776032/1-3.pdf and here https://s3.documentcloud.org/documents/24776029/1-2.pdf

4/11

songs they wee able to reproduce:

5/11

It will get nasty after going to discovery and they are forced to disclose exactly how the AI was trained.

6/11

called it in April

7/11

ScarJo vs Openai. Same thing everyone runs with the meme but the audio is not even close. In this case, of couse it has to resemble the original as they are probably using the mimic option to promp the audio with the original song, will they sue every other cover band too? silly.

8/11

A few months ago you were able to get Beatles covers by just asking for a "Bea_tles" song

9/11

The theft from songwriters and musicians is a century old and continuing

10/11

This is biased by lifting the lyrics from the actual songs. Without that I don’t think anyone would say the tune is the same or even similar.

11/11

lol cc: .@joshtpm is there any past example of a hot new industry being sued as rapidly as the AI “creators”? No.

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

1/1

Breaking News from Chatbot Arena

@AnthropicAI Claude 3.5 Sonnet has just made a huge leap, securing the #1 spot in Coding Arena, Hard Prompts Arena, and #2 in the Overall leaderboard.

New Sonnet has surpassed Opus at 5x the lower cost and competitive with frontier models GPT-4o/Gemini 1.5 Pro across the boards.

Huge congrats to

@AnthropicAI

for this incredible milestone! Can't wait to see the new Opus & Haiku. Our new vision leaderboard is also coming soon!

(More analysis below)

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

Breaking News from Chatbot Arena

@AnthropicAI Claude 3.5 Sonnet has just made a huge leap, securing the #1 spot in Coding Arena, Hard Prompts Arena, and #2 in the Overall leaderboard.

New Sonnet has surpassed Opus at 5x the lower cost and competitive with frontier models GPT-4o/Gemini 1.5 Pro across the boards.

Huge congrats to

@AnthropicAI

for this incredible milestone! Can't wait to see the new Opus & Haiku. Our new vision leaderboard is also coming soon!

(More analysis below)

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

1/1

Wait WHAT? Someone already extracted Gemini Nano weights from Chrome and shared them on the Hub

> Looks like 4-bit running on tf-lite (?)

> base + instruction tuned adapter

Obligatory disclosure: I have no clue how safe this is; run in a sandboxed environment while testing!

wave-on-discord/gemini-nano · Hugging Face

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

Wait WHAT? Someone already extracted Gemini Nano weights from Chrome and shared them on the Hub

> Looks like 4-bit running on tf-lite (?)

> base + instruction tuned adapter

Obligatory disclosure: I have no clue how safe this is; run in a sandboxed environment while testing!

wave-on-discord/gemini-nano · Hugging Face

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

1/11

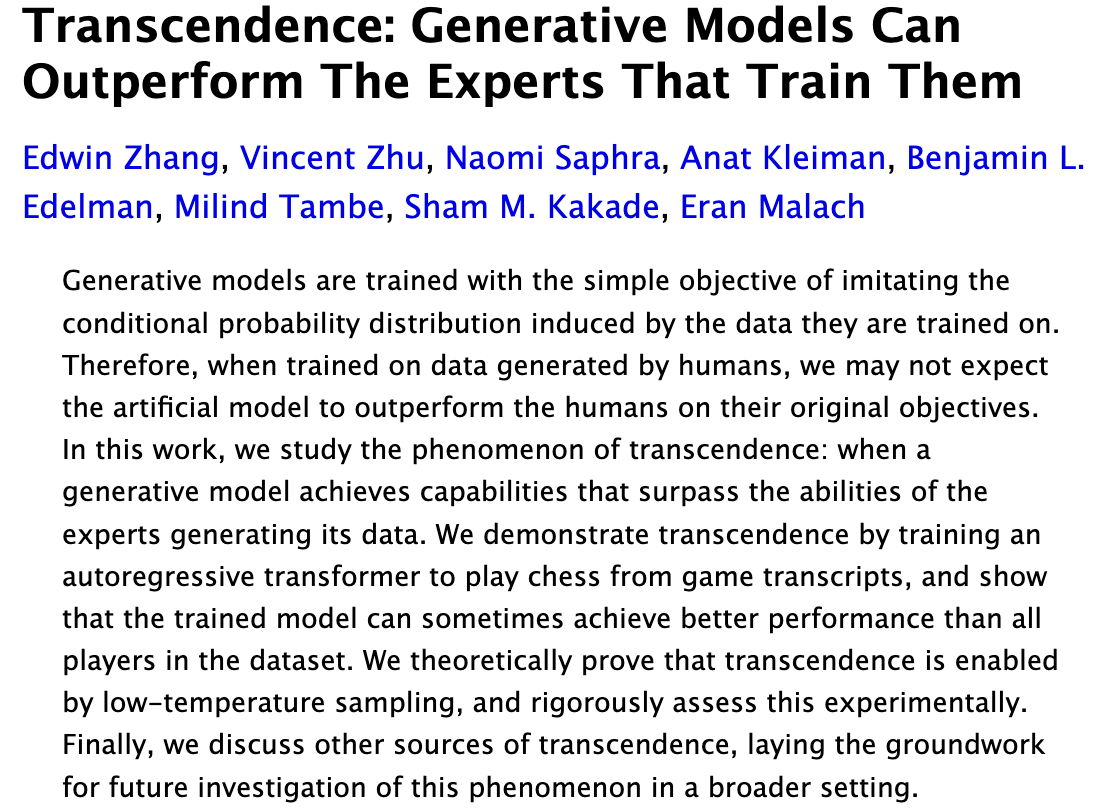

This paper seems very interesting: say you train an LLM to play chess using only transcripts of games of players up to 1000 elo. Is it possible that the model plays better than 1000 elo? (i.e. "transcends" the training data performance?). It seems you get something from nothing, and some information theory arguments that this should be impossible were discussed in conversations I had in the past. But this paper shows this can happen: training on 1000 elo game transcripts and getting an LLM that plays at 1500! Further the authors connect to a clean theoretical framework for why: it's ensembling weak learners, where you get "something from nothing" by averaging the independent mistakes of multiple models. The paper argued that you need enough data diversity and careful temperature sampling for the transcendence to occur. I had been thinking along the same lines but didn't think of using chess as a clean measurable way to scientifically measure this. Fantastic work that I'll read I'll more depth.

2/11

[2406.11741v1] Transcendence: Generative Models Can Outperform The Experts That Train Them paper is here. @ShamKakade6 @nsaphra please tell me if I have any misconceptions.

3/11

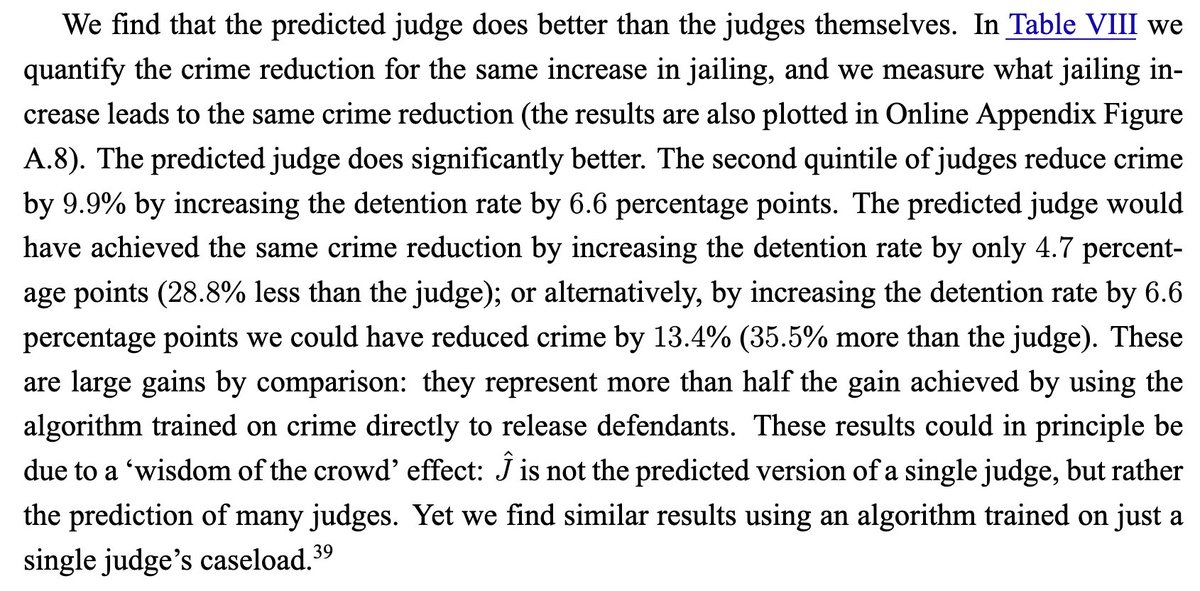

In the classic "Human Decisions and Machine Predictions" paper Kleinberg et al. give evidence that a predictor learned from the bail decisions of multiple judges does better than the judges themselves, calling it a wisdom of the crowd effect. This could be a similar phenomena

4/11

Yes that is what the authors formalize. Only works when there is diversity in the weak learners ie they make different types of mistakes independently.

5/11

It seems very straightforward: a 1000 ELO player makes good and bad moves that average to 1000. A learning process is a max of the quality of moves, so you should get a higher than 1000 rating. I wonder if the AI is more consistent in making "1500 ELO" moves than players.

6/11

Any argument that says it's not surprising must also explain why it didn't happen at 1500 elo training, or why it doesn't happen at higher temperatures.

7/11

The idea might be easier to understand for something that’s more of a motor skill like archery. Imagine building a dataset of humans shooting arrows at targets and then imitating only the examples where they hit the targets.

8/11

Yes but they never have added information on what a better move is or who won, as far as I understood. Unclear if the LLM is even trying to win.

9/11

Interesting - is majority vote by a group of weak learners a form of “verification” as I describe in this thread?

10/11

I don't think it's verification, ie they didn't use signal of who won in each game. It's clear you can use that to filter only better (winning) player transcripts , train on that, iterate to get stronger elo transcripts and repeat. But this phenomenon is different, I think. It's ensembling weak learners. The cleanest setting to understand ensembling: Imagine if I have 1000 binary classifiers, each correct with 60 percent probability and *Independent*. If I make a new classifier by taking majority, it will perform much better than 60 percent. It's concentration of measure, the key tool for information theory too. The surprising experimental findings are 1. this happens with elo 1000 chess players where I wouldn't think they make independent mistakes. 2. Training on transcripts seems to behave like averaging weak learners.

11/11

Interesting

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

This paper seems very interesting: say you train an LLM to play chess using only transcripts of games of players up to 1000 elo. Is it possible that the model plays better than 1000 elo? (i.e. "transcends" the training data performance?). It seems you get something from nothing, and some information theory arguments that this should be impossible were discussed in conversations I had in the past. But this paper shows this can happen: training on 1000 elo game transcripts and getting an LLM that plays at 1500! Further the authors connect to a clean theoretical framework for why: it's ensembling weak learners, where you get "something from nothing" by averaging the independent mistakes of multiple models. The paper argued that you need enough data diversity and careful temperature sampling for the transcendence to occur. I had been thinking along the same lines but didn't think of using chess as a clean measurable way to scientifically measure this. Fantastic work that I'll read I'll more depth.

2/11

[2406.11741v1] Transcendence: Generative Models Can Outperform The Experts That Train Them paper is here. @ShamKakade6 @nsaphra please tell me if I have any misconceptions.

3/11

In the classic "Human Decisions and Machine Predictions" paper Kleinberg et al. give evidence that a predictor learned from the bail decisions of multiple judges does better than the judges themselves, calling it a wisdom of the crowd effect. This could be a similar phenomena

4/11

Yes that is what the authors formalize. Only works when there is diversity in the weak learners ie they make different types of mistakes independently.

5/11

It seems very straightforward: a 1000 ELO player makes good and bad moves that average to 1000. A learning process is a max of the quality of moves, so you should get a higher than 1000 rating. I wonder if the AI is more consistent in making "1500 ELO" moves than players.

6/11

Any argument that says it's not surprising must also explain why it didn't happen at 1500 elo training, or why it doesn't happen at higher temperatures.

7/11

The idea might be easier to understand for something that’s more of a motor skill like archery. Imagine building a dataset of humans shooting arrows at targets and then imitating only the examples where they hit the targets.

8/11

Yes but they never have added information on what a better move is or who won, as far as I understood. Unclear if the LLM is even trying to win.

9/11

Interesting - is majority vote by a group of weak learners a form of “verification” as I describe in this thread?

10/11

I don't think it's verification, ie they didn't use signal of who won in each game. It's clear you can use that to filter only better (winning) player transcripts , train on that, iterate to get stronger elo transcripts and repeat. But this phenomenon is different, I think. It's ensembling weak learners. The cleanest setting to understand ensembling: Imagine if I have 1000 binary classifiers, each correct with 60 percent probability and *Independent*. If I make a new classifier by taking majority, it will perform much better than 60 percent. It's concentration of measure, the key tool for information theory too. The surprising experimental findings are 1. this happens with elo 1000 chess players where I wouldn't think they make independent mistakes. 2. Training on transcripts seems to behave like averaging weak learners.

11/11

Interesting

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

1/11

OpenAI just acquired this startup that basically lets someone remotely control your computer... i think we can all guess how this might fit in with ChatGPT desktop...

2/11

I guess they are going to make ChatGPT be able to draw on your screen, edit code, etc

3/11

4/11

follow me if you're interested in creative uses of LLMs.

5/11

i love being featured in the twitter things

6/11

Agents

7/11

Makes a lot of sense, they also get cracked people like @jnpdx

8/11

If the company building AGI is buying a desktop remote company, then we are safe

9/11

I just recently saw some posts about AI zoom styled apps. This fits that mold.

10/11

the cursor will be ChatGPT pointing and drawing comments around while you use voice mode.

11/11

@inversebrah

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

OpenAI just acquired this startup that basically lets someone remotely control your computer... i think we can all guess how this might fit in with ChatGPT desktop...

2/11

I guess they are going to make ChatGPT be able to draw on your screen, edit code, etc

3/11

4/11

follow me if you're interested in creative uses of LLMs.

5/11

i love being featured in the twitter things

6/11

Agents

7/11

Makes a lot of sense, they also get cracked people like @jnpdx

8/11

If the company building AGI is buying a desktop remote company, then we are safe

9/11

I just recently saw some posts about AI zoom styled apps. This fits that mold.

10/11

the cursor will be ChatGPT pointing and drawing comments around while you use voice mode.

11/11

@inversebrah

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

1/11

Multi is joining OpenAI Multi Blog – Multi is joining OpenAI

2/11

What if desktop computers were inherently multiplayer? What if the operating system placed people on equal footing to apps? Those were the questions we explored in building Multi, and before that, Remotion.

3/11

Recently, we’ve been asking ourselves how we should work with computers. Not _on_ or _using_, but truly _with_ computers. With AI. We think it’s one of the most important product questions of our time. And so, we’re beyond excited to share that Multi is joining OpenAI!

4/11

Unfortunately, this means we’re sunsetting Multi. We’ve closed new team signups, and existing teams will be able to use the app until July 24th 2024, after which we’ll delete all user data. If you need help or more time finding a replacement, DM @embirico. We’re happy to suggest alternatives depending on what exactly you loved about Multi, and we can also grant extensions on a case by case basis.

5/11

Thank you to everybody who used Multi. It was a privilege building with you, and we learnt a ton from you. We'll miss your feedback and bug reports, but we can’t wait to show you what we’re up to next.

6/11

See you around, @artlasovsky, Chantelle, @embirico, @fbarbat, @jnpdx, @kevintunc, @likethespy, @potatoarecool, @samjau

7/11

Why are you joining one of the most unethical, reckless, hubris-driven, wisely-despised companies in human history? Oh for the money. OK we get it.

8/11

9/11

This is amazing!

10/11

Congrats!

11/11

Huge congrats @kevintunc and @samjau!

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

Multi is joining OpenAI Multi Blog – Multi is joining OpenAI

2/11

What if desktop computers were inherently multiplayer? What if the operating system placed people on equal footing to apps? Those were the questions we explored in building Multi, and before that, Remotion.

3/11

Recently, we’ve been asking ourselves how we should work with computers. Not _on_ or _using_, but truly _with_ computers. With AI. We think it’s one of the most important product questions of our time. And so, we’re beyond excited to share that Multi is joining OpenAI!

4/11

Unfortunately, this means we’re sunsetting Multi. We’ve closed new team signups, and existing teams will be able to use the app until July 24th 2024, after which we’ll delete all user data. If you need help or more time finding a replacement, DM @embirico. We’re happy to suggest alternatives depending on what exactly you loved about Multi, and we can also grant extensions on a case by case basis.

5/11

Thank you to everybody who used Multi. It was a privilege building with you, and we learnt a ton from you. We'll miss your feedback and bug reports, but we can’t wait to show you what we’re up to next.

6/11

See you around, @artlasovsky, Chantelle, @embirico, @fbarbat, @jnpdx, @kevintunc, @likethespy, @potatoarecool, @samjau

7/11

Why are you joining one of the most unethical, reckless, hubris-driven, wisely-despised companies in human history? Oh for the money. OK we get it.

8/11

9/11

This is amazing!

10/11

Congrats!

11/11

Huge congrats @kevintunc and @samjau!

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

1/1

HRTech News:

@OpenAI acquires Multi, a video-first remote collaboration startup. Multi, which raised $13M from VCs, will shut down on July 24, 2024. This move aligns with OpenAI's strategy to bolster enterprise solutions, with ChatGPT's corporate tier already serving 93% of Fortune 500 firms.

OpenAI's annual revenue is projected to exceed $3.4B in 2024. #Multi #AI #DHRmap

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

HRTech News:

@OpenAI acquires Multi, a video-first remote collaboration startup. Multi, which raised $13M from VCs, will shut down on July 24, 2024. This move aligns with OpenAI's strategy to bolster enterprise solutions, with ChatGPT's corporate tier already serving 93% of Fortune 500 firms.

OpenAI's annual revenue is projected to exceed $3.4B in 2024. #Multi #AI #DHRmap

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

Last edited: