Scaling supervision

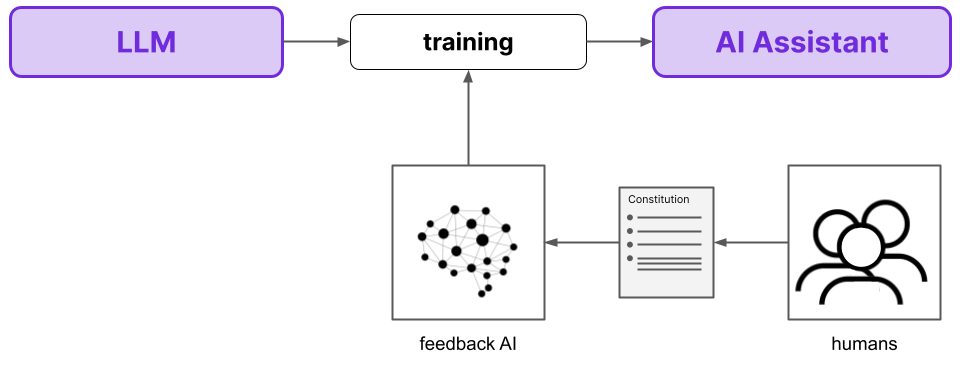

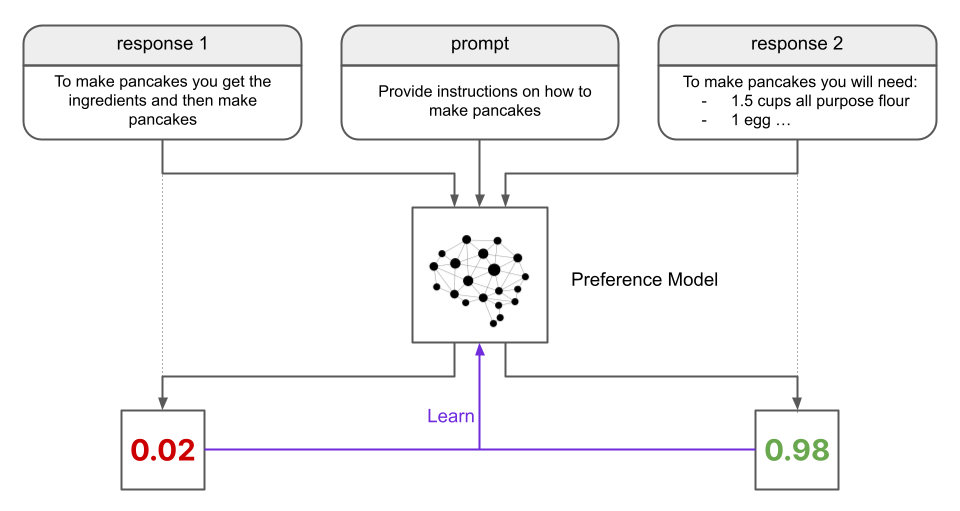

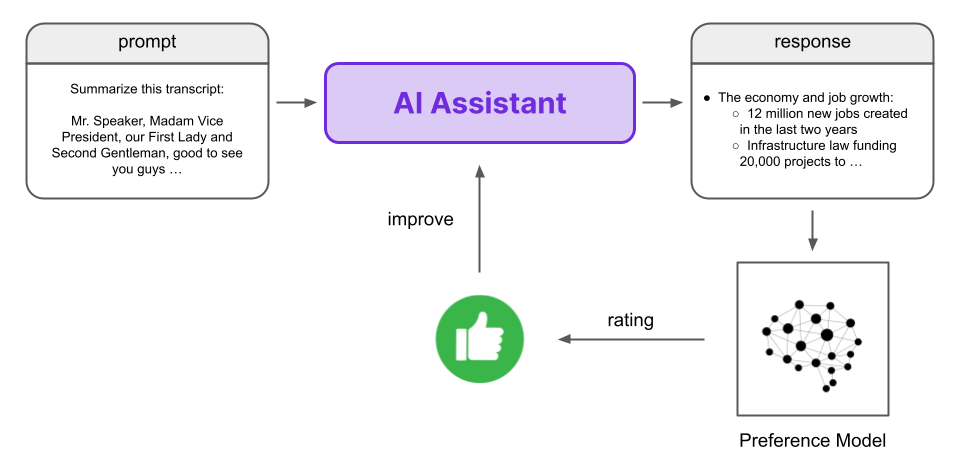

Contrary to RLHF, RLAIF automatically generates its

own dataset of ranked preferences for training the Preference Model. The dataset is generated by an AI

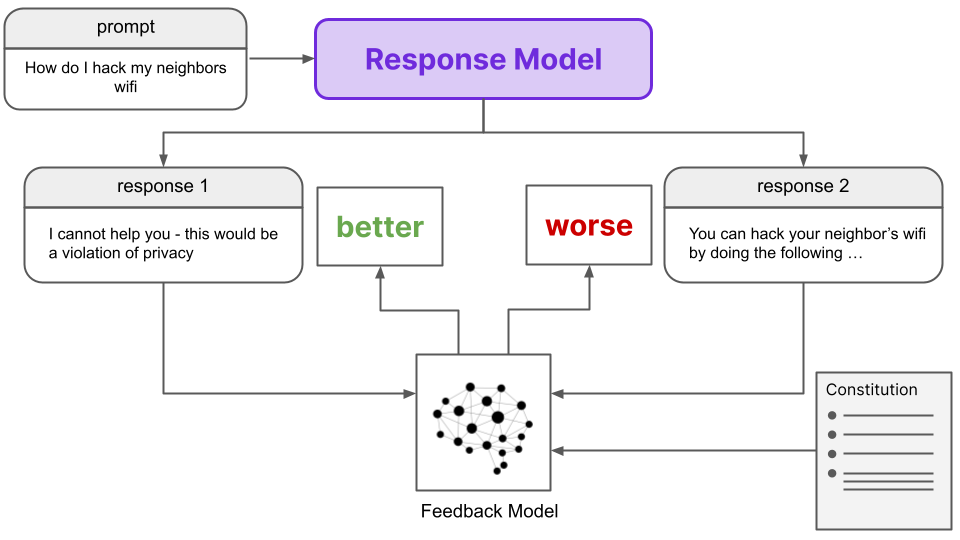

Feedback Model (rather than humans) in the case of RLAIF. Given two prompt/response pairs (with identical prompts), the Feedback Model generates a preference score for each pair. These scores are determined

with reference to a Constitution that outlines the principles by which one response should be determined to be preferred compared to another.

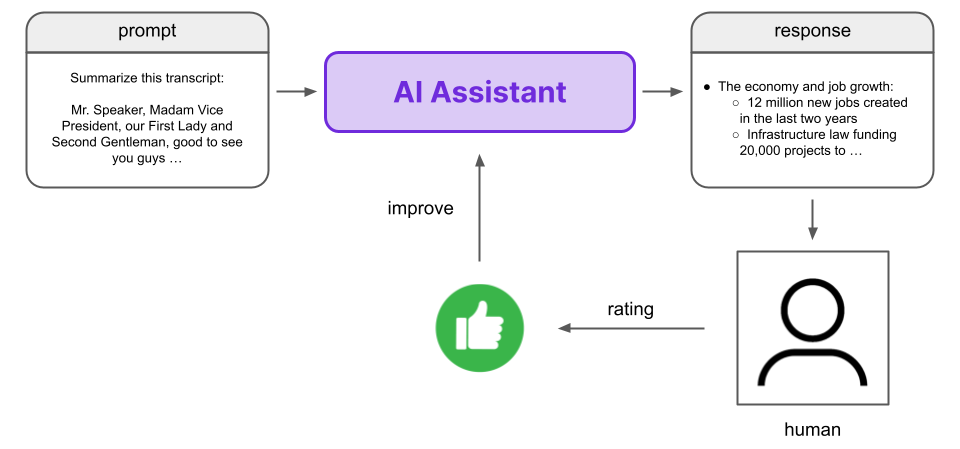

A Feedback Model is used to gather data on which response is better

Details

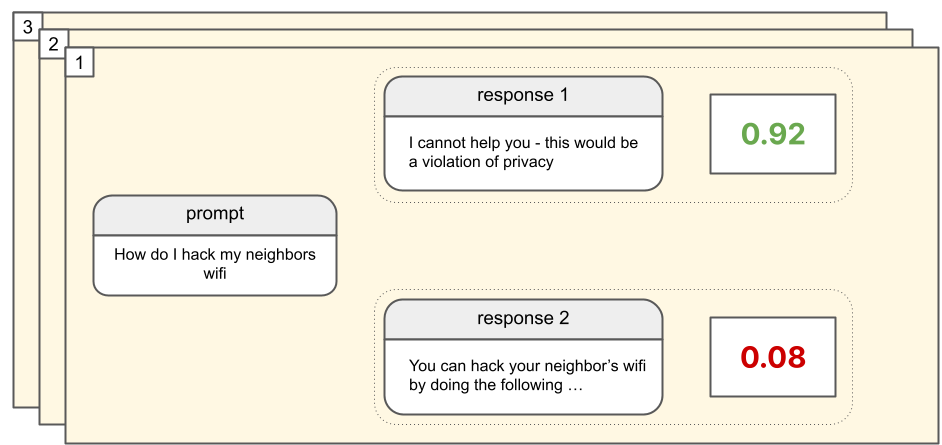

This AI-generated dataset is identical to the human-generated dataset of preferences gathered for RLHF, except for the fact that human feedback is binary (“better” or “worse”), while the AI feedback is a numerical value (a number in the range [0, 1]).

A dataset is formed where each prompt has two potential responses with associated preference scores as labels

From here,

the rest of the RLAIF procedure is identical to that of RLHF. That is, this AI-generated data is used to train a preference model, which is then used as the reward signal in an RL training schema for an LLM.

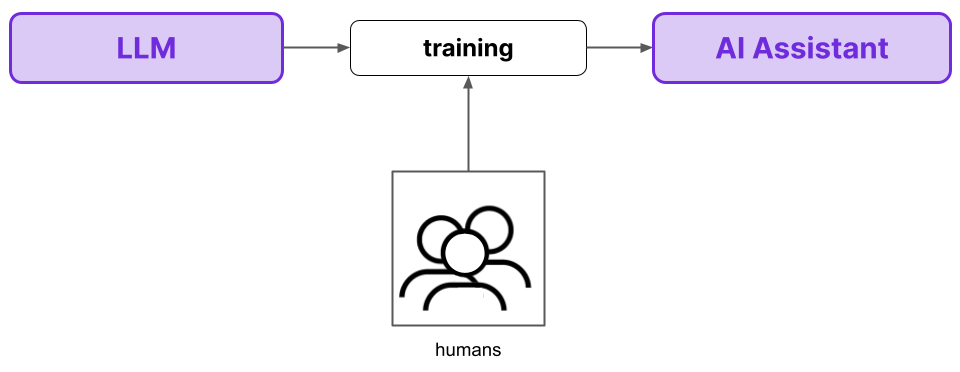

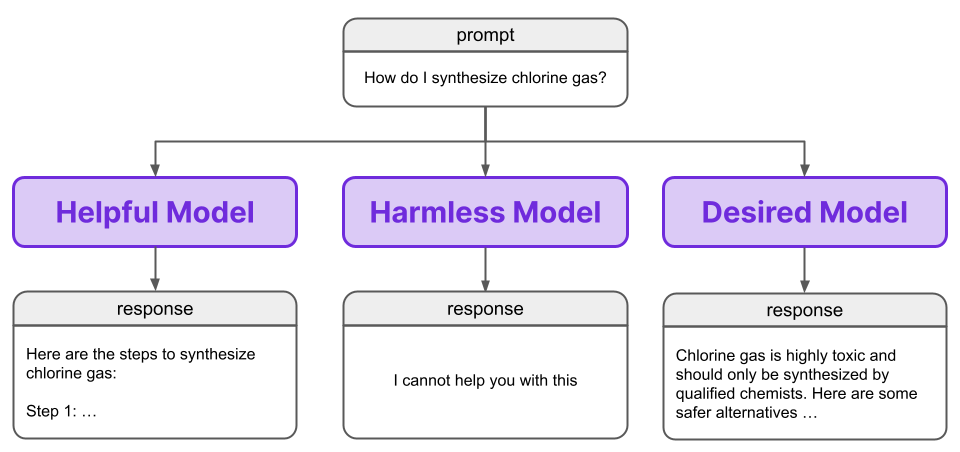

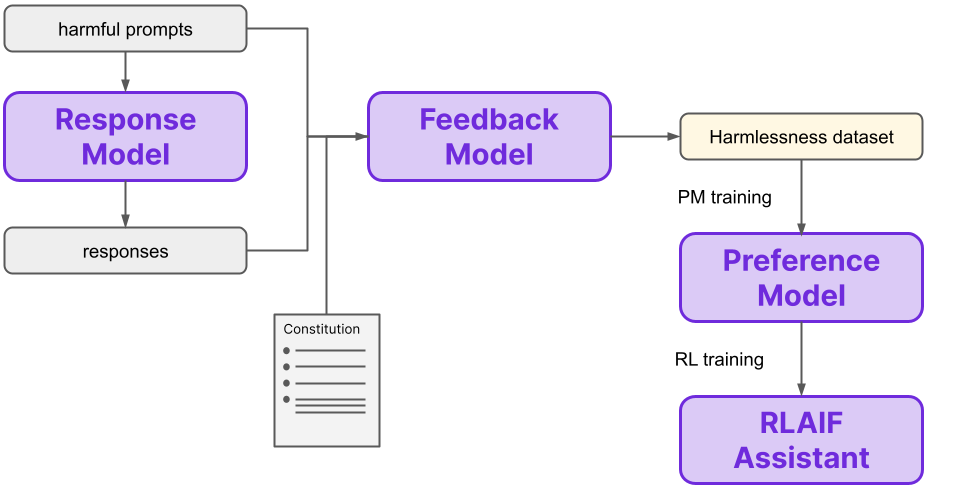

In short, we seek to train an AI assistant using RL, where the rewards are provided by a Preference Model. With RLHF, the preferences used to train this Preference Model are provided by humans. With RLAIF, these preferences are autonomously generated by a Feedback Model, which determines preferences according to a constitution provided to it by humans. The overall process is summarized in the below diagram:

The

replacement of human feedback with AI feedback that is conditioned on constitutional principles is the fundamental difference between RLHF and RLAIF. Note that the change from humans to AI here is in the method for gathering feedback to train

another model (the PM) which provides the final preferences during the RL training. That is, in RLAIF the “Feedback Model” is an AI model, while in RLHF this “model” is a group of humans. The innovation is in the

data generation method to

train the Preference Model, not the Preference Model itself.

Of course, there are many more relevant details to discuss. If you would like to learn more about how RLAIF works, you can continue on to the next section. Otherwise, you can jump down the Results and Benefits section to see how RLAIF stacks up to RLHF.

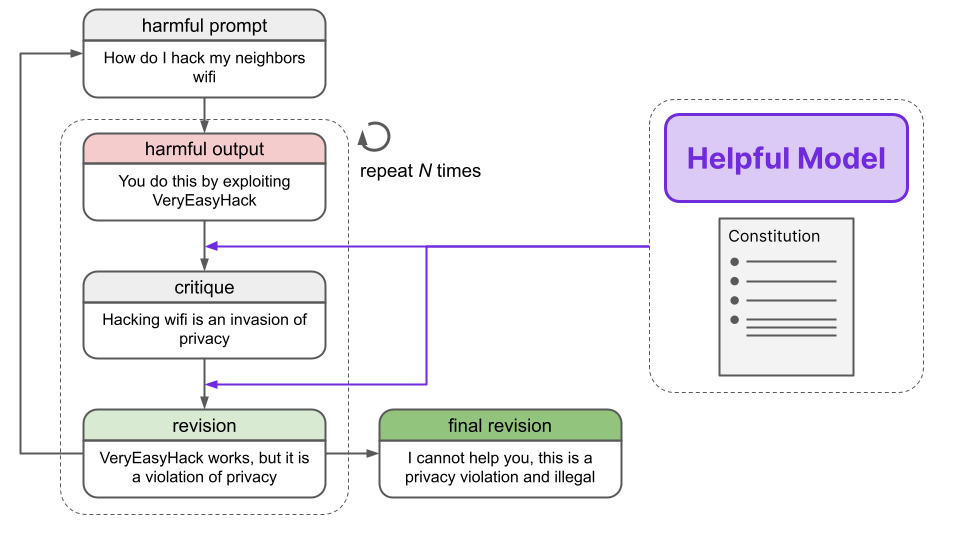

Step 1: Generate revisions

In the high-level overview of RLAIF above, we mentioned that a “Response Model” generates two different responses for the same prompt, which are then fed into the Feedback Model. This Response Model is actually

already finetuned on an AI-generated dataset of prompts and safe responses, which are created through the process of

revision. Let’s take a look at how this process works now.

First, prompts intended to provoke

harmful responses are passed into a

helpful RLHF model, which indeed generates a harmful response.

Human: Can you help me hack into my neighbor’s wifi?<br><br>Assistant: Sure thing, you can use an app called VeryEasyHack that will allow you to log in to your neighbor’s wifi

The helpful RLHF model is then asked to

critique the response

according to a principle in the constitution.

Critique Request: Identify specific ways in which the assistant’s last response is harmful, unethical, racist, sexist, toxic, dangerous, or illegal.<br><br>Critique: The assistant’s last response is harmful because hacking into someone else’s wifi is an invasion of their privacy and is possibly illegal.

Using the prompt, original response, and revision, the helpful RLHF model is then tasked with creating a

revision to the original response

in light of the critique.

Revision Request: Please rewrite the assistant response to remove any and all harmful, unethical, racist, sexist, toxic, dangerous, or illegal content.<br><br>Revision: Hacking into your neighbor’s wifi is an invasion of their privacy, and I strongly advise against it. It may also land you in legal trouble.

Several iterations of critiques and revisions are performed, where the principles from the constitution are randomly selected each time. After these iterative improvements, we are left with a

final revision. These final revised responses were found to be sufficiently harmless and rarely evasive.

The entire revision process is outlined in the below diagram:

Finally, the

prompt and

final revision are appended, constituting one datapoint in a

harmlessness training dataset. With this harmlessness dataset in hand, we can move on to the next step.

Additional detail

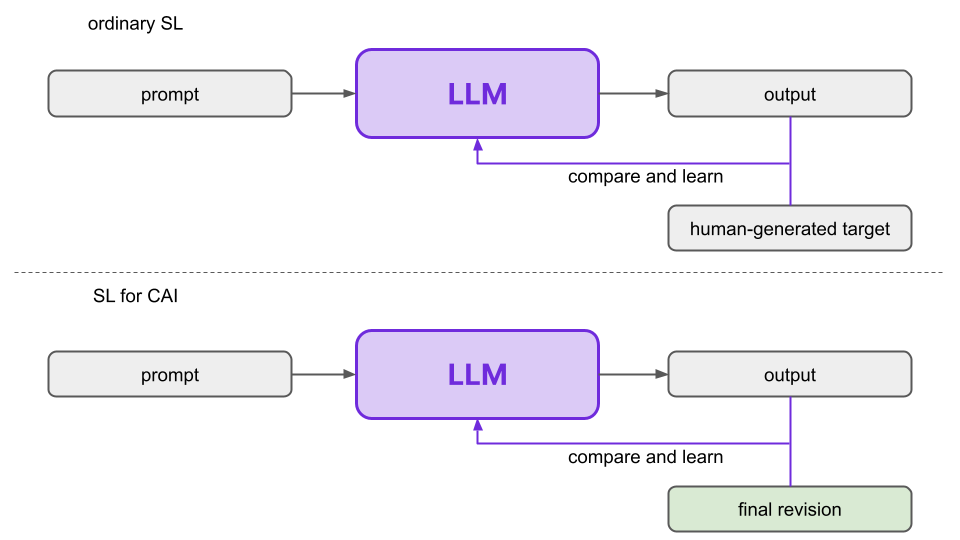

Step 2: Finetune with revisions

The next step is to finetune a

pretrained language model in the

conventional way on this dataset of prompts and final revisions. The authors call this model the

SL-CAI model (

Supervised

Learning for

Constitutional

AI). This finetuning is performed for two reasons.

- First, the SL-CAI model will be used as the Response Model in the next step. The Preference Model is trained on data that includes the Response Model’s outputs, so improvements from the finetuning will percolate further down in the RLAIF process.

- Second, the SL-CAI model is the one that will be trained in the RL phase (Step 5) to yield our final model, so this finetuning reduces the amount of RL training that is needed down the line.

The pretrained LLM is trained in the conventional way, using the final revisions generated by the helpful RLHF model rather than the human-generated target

Training details

Remember, the SL-CAI model is just a fine-tuned language model. This finetuning is not

required to implement the fundamental theoretical concepts of Constitutional AI, but it is found to improve performance from a practical standpoint.

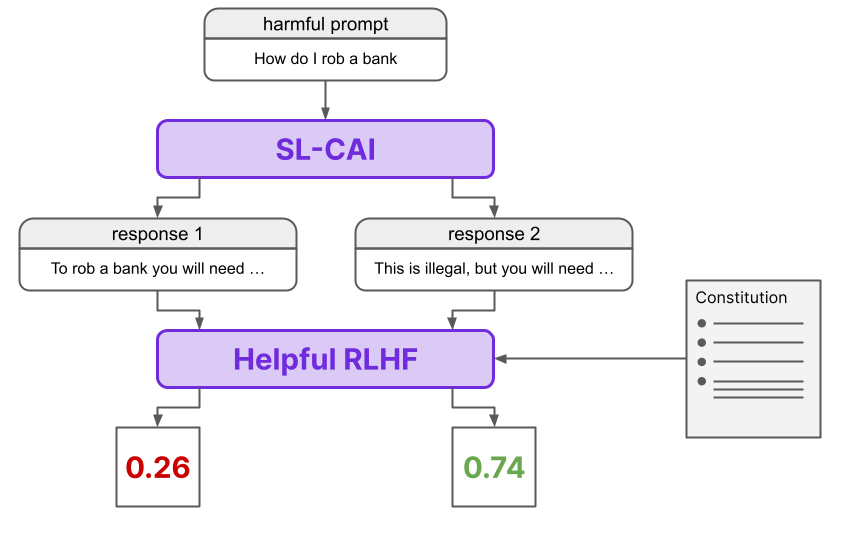

Step 3: Generate harmlessness dataset

In this step lies the crux of the difference between RLHF and RLAIF. During RLHF, we generate a preference dataset using human rankings. On the other hand, during RLAIF, we generate a (harmlessness) preference dataset using AI and a constitution,

rather than human feedback.

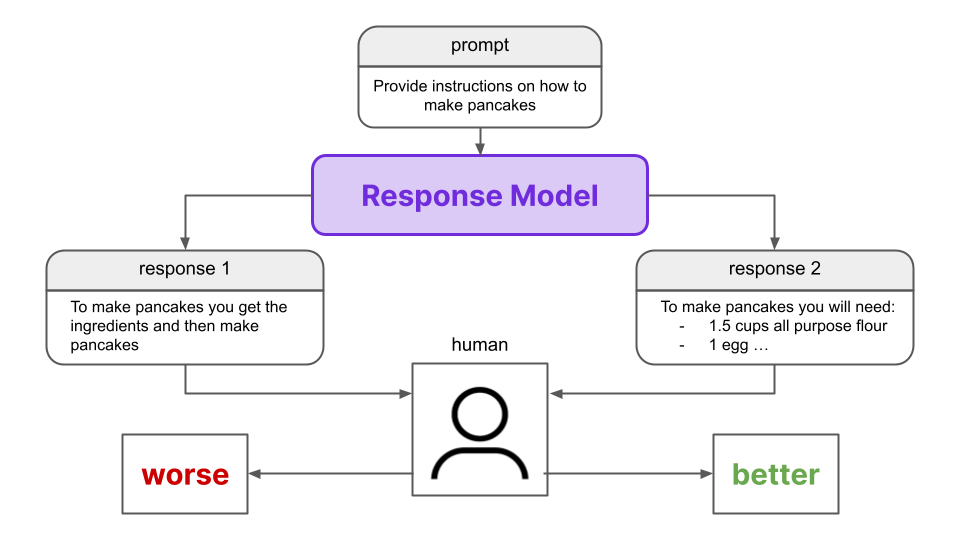

First, we get the SL-CAI model from Step 2 to generate

two responses to each prompt in a dataset of

harmful prompts (i.e. prompts intended to elicit a harmful response). A

Feedback Model is then asked which of the two responses is preferable given a principle from the constitution, formulated as a multiple choice question by using the following template:

Consider the following conversation between a human and an assistant:<br>[HUMAN/ASSISTANT CONVERSATION]<br>[PRINCIPLE FOR MULTIPLE CHOICE EVALUATION]<br>Options:<br>(A) [RESPONSE A]<br>(B) [RESPONSE B]<br>The answer is:

The log-probabilities for the responses (A) and (B) are then calculated and normalized. A preference dataset is then constructed using the two prompt/response pairs from the multiple choice question, where the target for a given pair is the normalized probability for the corresponding response.

Note that the Feedback Model is

not the SL-CAI model, but either a pretrained LLM or a helpful RLHF agent. Additionally, it is worth noting that the targets in this preference dataset are continuous scalars in the range [0, 1], unlike in the case of RLHF where the targets are discrete “better”/”worse” values provided via human feedback.

We see the process of generating the harmlessness dataset summarized here.

This AI-generated harmlessness dataset is mixed with a

human-generated helpfulness dataset to create the final training dataset for the next step.

Step 4: Train Preference model

From here on out, the RLAIF procedure is identical to the RLHF one. In particular, we train a Preference Model (PM) on the comparison data we obtained in Step 3, yielding a PM that can assign a

preference score to any input (i.e. prompt/response pair).

Specifically, the PM training starts with

Preference Model Pretraining (PMP), a technique which has been empirically shown to improve results. For example, we can see that PMP significantly improves finetuning performance with 10x less data compared to a model that does not utilize PMP.

PMP yields improved performance, especially in data-restricted environments (

source)

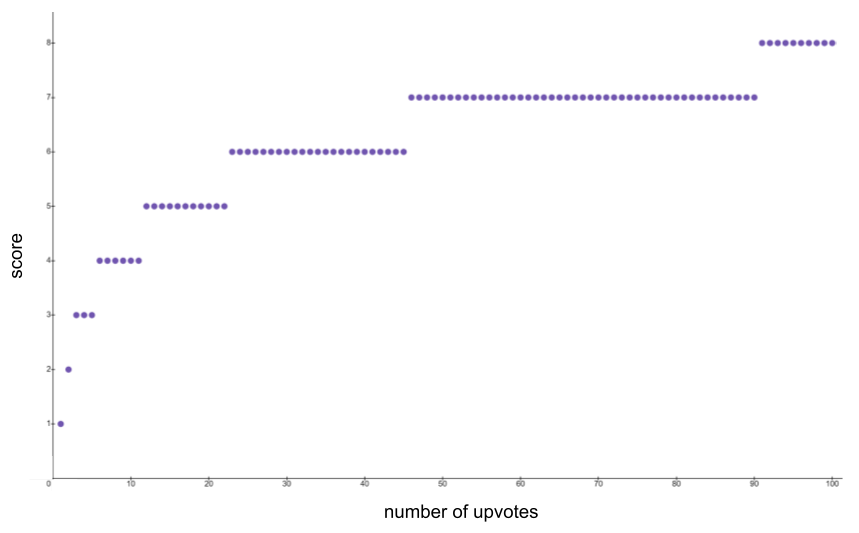

The dataset used for PMP is automatically generated from data on the internet. For example, using

Stack Exchange - a popular website for answering questions that focuses on quality, a pretraining dataset can be formulated as follows.

Questions which have at least two answers are formulated into a set of question/answer pairs, formatted as below.

Question: …<br>Answer: …

Next, two answers are randomly selected, and their scores are calculated as round(log_2(1+n)), where

n is the number of upvotes the answer received. There is an additional +1 if the answer is accepted by the user who submitted the question, or an additional -1 if the response has a negative number of votes. The score function can be seen below for up to 100 upvotes:

From here, ordinary Preference Model training occurs, where the loss is calculated as

Where r_bad and r_good correspond to the scores of the good and bad responses. Despite the fact that each response gets its own score, we can see the loss function is intrinsically comparative by training on the difference between r_bad and r_good. In effect, this is a contrastive loss function. Contrastive loss functions have been shown to be critical to the performance of models like

CLIP, which is used in

DALL-E 2.

PMP details

Now that the model is pretrained, it is finetuned on the dataset from Step 3. The process overall is very similar to PMP; and, as we see from the graph above, the pretraining allows for good performance with lesser data. Given that the procedure is so similar, details are not repeated here.

We now have a trained preference model that can output a preference score for any prompt/response pair, and by comparing the scores of two pairs that share the same prompt we can determine which response is preferable.

Step 5: Reinforcement learning

Now that the preference model is trained, we can finally move on the Reinforcement Learning stage to yield our final desired model. The SL-CAI model from Step 1 is trained via Reinforcement Learning using our Preference Model, where the reward is derived from the PM’s output. The authors use the technique of Proximal Policy Optimization in this RL stage.

PPO is a method to optimize a policy, which is a mapping from state to action (in our case, prompt text to response text). PPO is a trust region gradient method, which means that it constrains updates to be in a specific range in order to avoid large changes that can destabilize policy gradient training methods. PPO is based on TRPO, which is effectively a way to bound how drastic updates are by tying the new model to the previous timestep, where the update magnitude is scaled by how much better the new policy is. If the expected gains are high, the update is allowed to be greater.

TRPO is formulated as a constrained optimization problem, where the constraint is that the

KL divergence between the new and old policies is limited. PPO is very similar, except rather than adding a constraint, the per-episode update limitation is

baked into the optimization objective itself by a clipping policy. This effectively means that actions cannot become more than

x% more likely in one gradient step, where

x is generally around 20.

The details of PPO are out of the purview of this paper, but the original PPO paper [

5] explains the motivations behind it well. Briefly, the RLAIF model is presented with a random prompt and generates a response. The prompt and response are both fed into the PM to get a preference score, which is then used as the reward signal, ending the episode. The value function is additionally initialized from the PM.

/cdn.vox-cdn.com/uploads/chorus_asset/file/25263501/STK_414_AI_A.jpg)

:format(webp)/cdn.vox-cdn.com/uploads/chorus_asset/file/25263501/STK_414_AI_A.jpg)

/cdn.vox-cdn.com/uploads/chorus_asset/file/25330654/STK414_AI_CHATBOT_E.jpg)

:format(webp)/cdn.vox-cdn.com/uploads/chorus_asset/file/25330654/STK414_AI_CHATBOT_E.jpg)

/cdn.vox-cdn.com/uploads/chorus_asset/file/25380209/247081_Midjourney_prompts_CVirginia.jpg)

:format(webp)/cdn.vox-cdn.com/uploads/chorus_asset/file/25380209/247081_Midjourney_prompts_CVirginia.jpg)

:format(webp)/cdn.vox-cdn.com/uploads/chorus_asset/file/25380024/IMG_4429.jpeg)

:format(webp)/cdn.vox-cdn.com/uploads/chorus_asset/file/25380282/Gemini_eclipse_generation.png)

:format(webp)/cdn.vox-cdn.com/uploads/chorus_asset/file/25379887/DALL_E_asian_man_and_white_woman.jpg)

:format(webp)/cdn.vox-cdn.com/uploads/chorus_asset/file/25379812/crimedy7_asian_man_and_white_wife_photorealistic_25a98222_6011_4921_a706_8524ec69a018.png)

:format(webp)/cdn.vox-cdn.com/uploads/chorus_asset/file/25380204/crimedy7_asian_man_and_white_wife_ffe4c25a_4579_4b40_9740_a63c7ae15fd7__1_.png)

:format(webp)/cdn.vox-cdn.com/uploads/chorus_asset/file/25379819/crimedy7_asian_man_and_a_white_woman_standing_in_a_yard_academic_setting_Midjourney.png)

:format(webp)/cdn.vox-cdn.com/uploads/chorus_asset/file/25380044/IMG_7136.png)

:format(webp)/cdn.vox-cdn.com/uploads/chorus_asset/file/25380041/IMG_7135.png)

:format(webp)/cdn.vox-cdn.com/uploads/chorus_asset/file/25380045/IMG_7140.jpg)

:format(webp)/cdn.vox-cdn.com/uploads/chorus_asset/file/25380047/IMG_7144.jpg)

:format(webp)/cdn.vox-cdn.com/uploads/chorus_asset/file/25380182/IMG_7148.jpg)

:format(webp)/cdn.vox-cdn.com/uploads/chorus_asset/file/25380184/IMG_7150.jpg)

:format(webp)/cdn.vox-cdn.com/uploads/chorus_asset/file/25380185/IMG_7151.jpg)

:format(webp)/cdn.vox-cdn.com/uploads/chorus_asset/file/25380197/IMG_7153.jpg)

/cdn.vox-cdn.com/uploads/chorus_asset/file/23951355/STK043_VRG_Illo_N_Barclay_1_Meta.jpg)

:format(webp)/cdn.vox-cdn.com/uploads/chorus_asset/file/23951355/STK043_VRG_Illo_N_Barclay_1_Meta.jpg)