You are using an out of date browser. It may not display this or other websites correctly.

You should upgrade or use an alternative browser.

You should upgrade or use an alternative browser.

The A.I Megathread (LLM , GPT , Development)

More options

Who Replied?1/4

After enjoying the large MoE model, why not have a look at a small one?

This is it, Qwen1.5-MoE-A2.7B, a 14B MoE model with only 2.7B activated parameters!

HF: Qwen (Qwen) , search repos with “Qwen1.5-MoE-A2.7B” in model names.

GitHub: GitHub - QwenLM/Qwen1.5: Qwen1.5 is the improved version of Qwen, the large language model series developed by Qwen team, Alibaba Cloud.

Blog: Qwen1.5-MoE: Matching 7B Model Performance with 1/3 Activated Parameters

Features include:

* Matches the 7B model quality

* 75% pretraining cost reduction

* 1.74x inference speed acceleration

* 64 experts per MoE layer, 8 activated for each token, where 4 for all tokens and 4 based on routing

* Upcycled (initialized) from Qwen-1.8B

Now it is only supported by HF transformers and vLLM. For both you need to install from source, as the latest versions with `qwen2_moe` are not released yet. This is also something new for us. Hope you enjoy and feel free shoot us feedback!

2/4

https://arxiv.org/pdf/2212.05055.pdf

3/4

A little bit different. We used upcycling first, and then split the FFNs into more experts, which is known as finegrained experts. We had some techniques in bringing in randomness, but we might share them later in a more formal tech report.

4/4

v0.2 achieves 7.6

After enjoying the large MoE model, why not have a look at a small one?

This is it, Qwen1.5-MoE-A2.7B, a 14B MoE model with only 2.7B activated parameters!

HF: Qwen (Qwen) , search repos with “Qwen1.5-MoE-A2.7B” in model names.

GitHub: GitHub - QwenLM/Qwen1.5: Qwen1.5 is the improved version of Qwen, the large language model series developed by Qwen team, Alibaba Cloud.

Blog: Qwen1.5-MoE: Matching 7B Model Performance with 1/3 Activated Parameters

Features include:

* Matches the 7B model quality

* 75% pretraining cost reduction

* 1.74x inference speed acceleration

* 64 experts per MoE layer, 8 activated for each token, where 4 for all tokens and 4 based on routing

* Upcycled (initialized) from Qwen-1.8B

Now it is only supported by HF transformers and vLLM. For both you need to install from source, as the latest versions with `qwen2_moe` are not released yet. This is also something new for us. Hope you enjoy and feel free shoot us feedback!

2/4

https://arxiv.org/pdf/2212.05055.pdf

3/4

A little bit different. We used upcycling first, and then split the FFNs into more experts, which is known as finegrained experts. We had some techniques in bringing in randomness, but we might share them later in a more formal tech report.

4/4

v0.2 achieves 7.6

1/4

Here is the demo of Qwen1.5-MoE-A2.7B:

It is fast. See if it matches your expectation of the model quality!

2/4

@_akhaliq give it a try

3/4

They are working on this

4/4

No we did not do the self intro stuff in it. It Just hallucinates. Bad

Here is the demo of Qwen1.5-MoE-A2.7B:

Qwen1.5 MoE A2.7B Chat Demo - a Hugging Face Space by Qwen

Discover amazing ML apps made by the community

huggingface.co

It is fast. See if it matches your expectation of the model quality!

2/4

@_akhaliq give it a try

3/4

They are working on this

4/4

No we did not do the self intro stuff in it. It Just hallucinates. Bad

Last edited:

1/1

some smaller targets worth taking iff too GPU poor to analyze mixtral:

qwen1.5-moe (upcycled!) Qwen1.5-MoE: Matching 7B Model Performance with 1/3 Activated Parameters

deepseek-moe GitHub - deepseek-ai/DeepSeek-MoE

openmoe-8b GitHub - XueFuzhao/OpenMoE: A family of open-sourced Mixture-of-Experts (MoE) Large Language Models

switch-base-8 google/switch-base-8 · Hugging Face

some smaller targets worth taking iff too GPU poor to analyze mixtral:

qwen1.5-moe (upcycled!) Qwen1.5-MoE: Matching 7B Model Performance with 1/3 Activated Parameters

deepseek-moe GitHub - deepseek-ai/DeepSeek-MoE

openmoe-8b GitHub - XueFuzhao/OpenMoE: A family of open-sourced Mixture-of-Experts (MoE) Large Language Models

switch-base-8 google/switch-base-8 · Hugging Face

1/8

Run AniPortrait locally with a

@Gradio UI!

I forked the master repo for AniPortrait & created an easy-to-use Gradio UI for Vid2Vid (Face Reenactment).

Lmk what you think. Link in thread

2/8

Forked Github repo of AniPortrait with Gradio: GitHub - Blizaine/AniPortrait: AniPortrait with Gradio: Audio-Driven Synthesis of Photorealistic Portrait Animation

@cocktailpeanut

@altryne

@_akhaliq

3/8

Example:

4/8

I got AniPortrait running locally & what do I do? x.com/_akhaliq/statu…

5/8

Example:

6/8

Greg drop bars via AniPortrait & SunoAI!

Testing audio-driven motion. Not perfect, but fun. Vid2Vid seems to work better. Might have to record myself as an input for the next one.

Sound Up!

7/8

Don't miss this amazing workflow from

@visiblemakers

8/8

Emotional Image to Video transformation is here!

AniPortrait by @camenduru , inspired by the EMO paper, is now live on Replicate.

Why's it groundbreaking?

It converts emotions + voice/lipsync from video to a static image, making almost lifelike AI filmmaking dialogues a…

Run AniPortrait locally with a

@Gradio UI!

I forked the master repo for AniPortrait & created an easy-to-use Gradio UI for Vid2Vid (Face Reenactment).

Lmk what you think. Link in thread

2/8

Forked Github repo of AniPortrait with Gradio: GitHub - Blizaine/AniPortrait: AniPortrait with Gradio: Audio-Driven Synthesis of Photorealistic Portrait Animation

@cocktailpeanut

@altryne

@_akhaliq

3/8

Example:

4/8

I got AniPortrait running locally & what do I do? x.com/_akhaliq/statu…

5/8

Example:

6/8

Greg drop bars via AniPortrait & SunoAI!

Testing audio-driven motion. Not perfect, but fun. Vid2Vid seems to work better. Might have to record myself as an input for the next one.

Sound Up!

7/8

Don't miss this amazing workflow from

@visiblemakers

8/8

Emotional Image to Video transformation is here!

AniPortrait by @camenduru , inspired by the EMO paper, is now live on Replicate.

Why's it groundbreaking?

It converts emotions + voice/lipsync from video to a static image, making almost lifelike AI filmmaking dialogues a…

1/1

Try BrushNet! Segment and fill in anywhere with precision and coherence!

Check out

@huggingface space: BrushNet - a Hugging Face Space by TencentARC

Try BrushNet! Segment and fill in anywhere with precision and coherence!

Check out

@huggingface space: BrushNet - a Hugging Face Space by TencentARC

1/1

Run Brushnet locally on your machine with 1 click!

Run Brushnet locally on your machine with 1 click!

1/1

We present BrushNet! It can be inserted into any pre-trained SD model and generate coherent images, which is useful in product exhibitions, virtual try-on, or background replacement.

Project Page: BrushNet: A Plug-and-Play Image Inpainting Model with Decomposed Dual-Branch Diffusion

Code: GitHub - TencentARC/BrushNet: The official implementation of paper "BrushNet: A Plug-and-Play Image Inpainting Model with Decomposed Dual-Branch Diffusion"

We present BrushNet! It can be inserted into any pre-trained SD model and generate coherent images, which is useful in product exhibitions, virtual try-on, or background replacement.

Project Page: BrushNet: A Plug-and-Play Image Inpainting Model with Decomposed Dual-Branch Diffusion

Code: GitHub - TencentARC/BrushNet: The official implementation of paper "BrushNet: A Plug-and-Play Image Inpainting Model with Decomposed Dual-Branch Diffusion"

Last edited:

1/1

MagicLens: State-of-the-art instruction-following image retrieval model on 10 benchmarks but 50x smaller than prior best!

Check out our paper on huggingface: Paper page - MagicLens: Self-Supervised Image Retrieval with Open-Ended Instructions

MagicLens: State-of-the-art instruction-following image retrieval model on 10 benchmarks but 50x smaller than prior best!

Check out our paper on huggingface: Paper page - MagicLens: Self-Supervised Image Retrieval with Open-Ended Instructions

1/6

Proud to present MagicLens: image retrieval models following open-ended instructions.

open-vision-language.github.io

open-vision-language.github.io

Highlights of MagicLens:

>Novel Insights: Naturally occurring image pairs on the same web page contain diverse image relations (e.g., inside and outside views of the same building). Modeling such diverse relations can enable richer search intents beyond just searching for identical images in traditional image retrieval.

>Strong Performance: Trained on 36.7M data mined from the web, a single MagicLens model matches or exceeds prior SOTA methods on 10 benchmarks across various tasks, including multimodal-to-image, image-to-image, and text-to-image retrieval.

>Efficiency: On multiple benchmarks, MagicLens outperforms previous SOTA (>14.6B) but with a 50X smaller model size (267M).

>Open-Ended Search: MagicLens can satisfy various search intents expressed by open-ended instructions, especially complex and beyond visual intents — where prior best methods fall short.

Check out our technical report for more details: [2403.19651] MagicLens: Self-Supervised Image Retrieval with Open-Ended Instructions

This is a joint work with awesome collaborators:

@YiLuan9

@Hexiang_Hu

@kentonctlee

, Siyuan Qiao,

@WenhuChen

@ysu_nlp

and

@mchang21

, from

@GoogleDeepMind

and

@osunlp

.

2/6

We mine image pairs from the same web pages and express their diverse semantic relations, which may extend beyond visual similarities (e.g., a charger of a product), as open-ended instructions with #LMM and #LLM.

3/6

How to precisely and explicitly capture the implicit relations between the image pairs?

We build a systematic pipeline with heavy mining, cleaning, and pairing. Then, we annotate massive metadata via #LMMs and generate open-ended instructions via #LLMs.

4/6

After mining 36.7 million triplets (query image, instruction, target image), we train light-weight MagicLens models taking image and instruction as input.

With comparable sizes, a single model achieves best results on 10 benchmarks across three image retrieval task forms.

5/6

On several benchmarks, MagicLens outperforms 50X larger SOTA methods by a large margin.

6/6

To simulate a more realistic scenario, we hold out an index set with 1.4 million images, the largest retrieval pool to date.

Human evaluation finds that MagicLens excels at all kinds of instructions, especially those that are complex and go beyond visual similarity.

Proud to present MagicLens: image retrieval models following open-ended instructions.

MagicLens

MagicLens: Self-Supervised Image Retrieval with Open-Ended Instructions

Highlights of MagicLens:

>Novel Insights: Naturally occurring image pairs on the same web page contain diverse image relations (e.g., inside and outside views of the same building). Modeling such diverse relations can enable richer search intents beyond just searching for identical images in traditional image retrieval.

>Strong Performance: Trained on 36.7M data mined from the web, a single MagicLens model matches or exceeds prior SOTA methods on 10 benchmarks across various tasks, including multimodal-to-image, image-to-image, and text-to-image retrieval.

>Efficiency: On multiple benchmarks, MagicLens outperforms previous SOTA (>14.6B) but with a 50X smaller model size (267M).

>Open-Ended Search: MagicLens can satisfy various search intents expressed by open-ended instructions, especially complex and beyond visual intents — where prior best methods fall short.

Check out our technical report for more details: [2403.19651] MagicLens: Self-Supervised Image Retrieval with Open-Ended Instructions

This is a joint work with awesome collaborators:

@YiLuan9

@Hexiang_Hu

@kentonctlee

, Siyuan Qiao,

@WenhuChen

@ysu_nlp

and

@mchang21

, from

@GoogleDeepMind

and

@osunlp

.

2/6

We mine image pairs from the same web pages and express their diverse semantic relations, which may extend beyond visual similarities (e.g., a charger of a product), as open-ended instructions with #LMM and #LLM.

3/6

How to precisely and explicitly capture the implicit relations between the image pairs?

We build a systematic pipeline with heavy mining, cleaning, and pairing. Then, we annotate massive metadata via #LMMs and generate open-ended instructions via #LLMs.

4/6

After mining 36.7 million triplets (query image, instruction, target image), we train light-weight MagicLens models taking image and instruction as input.

With comparable sizes, a single model achieves best results on 10 benchmarks across three image retrieval task forms.

5/6

On several benchmarks, MagicLens outperforms 50X larger SOTA methods by a large margin.

6/6

To simulate a more realistic scenario, we hold out an index set with 1.4 million images, the largest retrieval pool to date.

Human evaluation finds that MagicLens excels at all kinds of instructions, especially those that are complex and go beyond visual similarity.

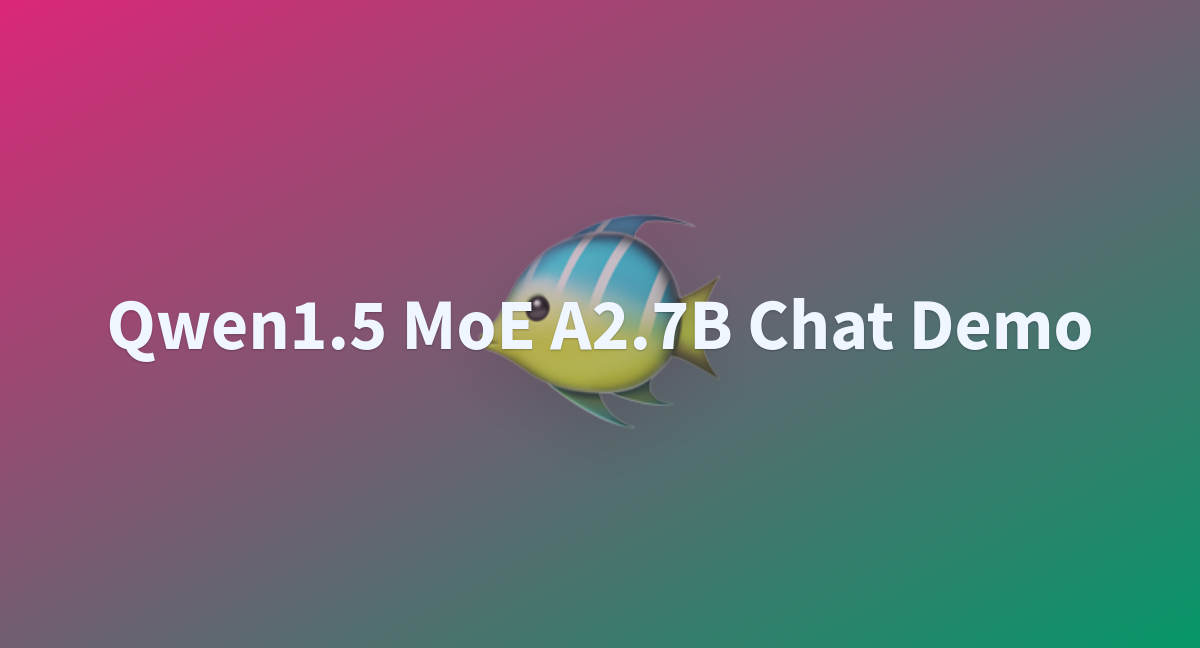

1/5

𝔸𝕘𝕖𝕟𝕥 𝕃𝕦𝕞𝕠𝕤 is one of the first unified and modular frameworks for training open-source LLM-based agents.

New features:

Multimodal Reasoning with 𝕃𝕦𝕞𝕠𝕤

13B-scale 𝕃𝕦𝕞𝕠𝕤 models

𝕃𝕦𝕞𝕠𝕤 data-explorer demo

@ai2_mosaic

@uclanlp

: [2311.05657] Agent Lumos: Unified and Modular Training for Open-Source Language Agents

: GitHub - allenai/lumos: Code and data for "Lumos: Learning Agents with Unified Data, Modular Design, and Open-Source LLMs"

: ai2lumos (Lumos Agents (AI2))

Demo: Agent Lumos - a Hugging Face Space by ai2lumos (1/N)

2/5

Lumos now supports multimodal tasks!

Lumos now supports multimodal tasks!

🖼 It accepts image caption input and then solves visual reasoning tasks with planning. We adopt the same strategy for generating multimodal annotations as other complex interactive tasks.

Multimodal training annotations are here:

Multimodal training annotations are here:

Lumos plan annotations: ai2lumos/lumos_multimodal_plan_iterative · Datasets at Hugging Face

Lumos plan annotations: ai2lumos/lumos_multimodal_plan_iterative · Datasets at Hugging Face

Lumos ground annotations: ai2lumos/lumos_multimodal_ground_iterative · Datasets at Hugging Face

Lumos ground annotations: ai2lumos/lumos_multimodal_ground_iterative · Datasets at Hugging Face

3/5

Lumos has strong multimodal perf.

Lumos outperforms larger VL models such as MiniGPT-4-13B on A-OKVQA and ScienceQA

7B-scale Lumos outperforms LLAVA1.5-7B on ScienceQA

7B-scale Lumos outperforms AutoAct, which is directly fine-tuned on ScienceQA (3/N)

4/5

Lumos-13B are released

Lumos-13B for each task type are released

They further lift the performance level, compared with 7B-scale models (4/N)

5/5

Lumos data demo is released

It is a conversational demo to show how planning and grounding modules work and interact with each other

Link: Agent Lumos - a Hugging Face Space by ai2lumos (5/N)

𝔸𝕘𝕖𝕟𝕥 𝕃𝕦𝕞𝕠𝕤 is one of the first unified and modular frameworks for training open-source LLM-based agents.

New features:

Multimodal Reasoning with 𝕃𝕦𝕞𝕠𝕤

13B-scale 𝕃𝕦𝕞𝕠𝕤 models

𝕃𝕦𝕞𝕠𝕤 data-explorer demo

@ai2_mosaic

@uclanlp

: [2311.05657] Agent Lumos: Unified and Modular Training for Open-Source Language Agents

: GitHub - allenai/lumos: Code and data for "Lumos: Learning Agents with Unified Data, Modular Design, and Open-Source LLMs"

: ai2lumos (Lumos Agents (AI2))

Demo: Agent Lumos - a Hugging Face Space by ai2lumos (1/N)

2/5

🖼 It accepts image caption input and then solves visual reasoning tasks with planning. We adopt the same strategy for generating multimodal annotations as other complex interactive tasks.

3/5

Lumos has strong multimodal perf.

Lumos outperforms larger VL models such as MiniGPT-4-13B on A-OKVQA and ScienceQA

7B-scale Lumos outperforms LLAVA1.5-7B on ScienceQA

7B-scale Lumos outperforms AutoAct, which is directly fine-tuned on ScienceQA (3/N)

4/5

Lumos-13B are released

Lumos-13B for each task type are released

They further lift the performance level, compared with 7B-scale models (4/N)

5/5

Lumos data demo is released

It is a conversational demo to show how planning and grounding modules work and interact with each other

Link: Agent Lumos - a Hugging Face Space by ai2lumos (5/N)

1/1

Most existing Video LLMs struggle with the “When?” questions. In contrast, our proposed LITA can answer challenging "when" questions like “When does the girl show resilience in her performance?”

Paper: [2403.19046] LITA: Language Instructed Temporal-Localization Assistant

Code: GitHub - NVlabs/LITA

Most existing Video LLMs struggle with the “When?” questions. In contrast, our proposed LITA can answer challenging "when" questions like “When does the girl show resilience in her performance?”

Paper: [2403.19046] LITA: Language Instructed Temporal-Localization Assistant

Code: GitHub - NVlabs/LITA

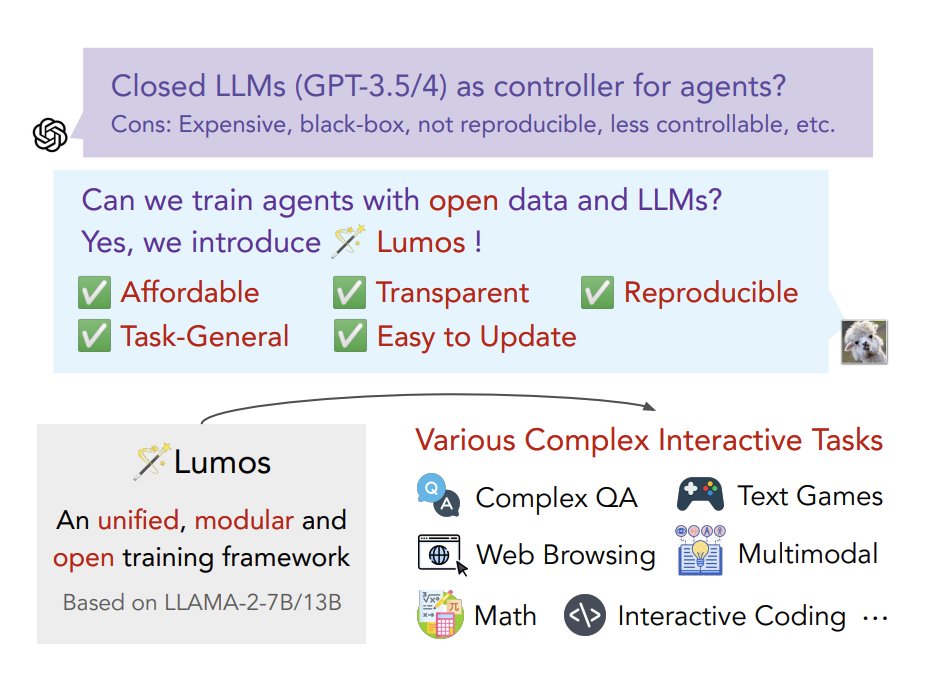

1/3

Multimodal DPO, large & tiny multimodal matryoshka embeddings, and 1st party ONNX support for 10x lighter deployments

In partnership with

@nebiusofficial , Unum is releasing a new set of pocket-sized multimodal models, already available on

@huggingface

2/3

Check out the new collections on

@huggingface and let us which languages we should prioritize for future multilingual releases

unum-cloud (Unum)

3/3

And the new inference code with binary and Matryoshka examples on our

@GitHub repo - don't forget to and spread the word

Multimodal DPO, large & tiny multimodal matryoshka embeddings, and 1st party ONNX support for 10x lighter deployments

In partnership with

@nebiusofficial , Unum is releasing a new set of pocket-sized multimodal models, already available on

@huggingface

2/3

Check out the new collections on

@huggingface and let us which languages we should prioritize for future multilingual releases

unum-cloud (Unum)

3/3

And the new inference code with binary and Matryoshka examples on our

@GitHub repo - don't forget to and spread the word

1/5

Anyone knows a paper that compares the performance of LLMs with user prompt at the top vs. bottom of the user input (e.g. this image)?

It appears that the top typically works better, but it'd be an interesting problem if there was no paper written yet.

community.openai.com

community.openai.com

2/5

That's a huge difference! Thanks a lot for providing a very convincing result

3/5

That's very interesting! Actually I was looking at your tweet yesterday, which made me think of another question. Maybe top vs bottom perf varies by task and model?

4/5

That'd be awesome. Thanks

5/5

This method happens to work so much better than the regular prompting, which was also extensively tested in this paper: [2403.05004] Can't Remember Details in Long Documents? You Need Some R&R

The perf gap isn't small at all, even for GPT4 Turbo, which is very interesting.

Anyone knows a paper that compares the performance of LLMs with user prompt at the top vs. bottom of the user input (e.g. this image)?

It appears that the top typically works better, but it'd be an interesting problem if there was no paper written yet.

When processing a text: prompt before it or after it?

Hi! When you’re processing a text (making an excerpt, summary or whatnot, or making changes in program code) you could add the actual prompt that tells ChatGPT what to do before the text, like Please make a summary of the following text: [text] or after the text: [text] Please make a...

community.openai.com

community.openai.com

2/5

That's a huge difference! Thanks a lot for providing a very convincing result

3/5

That's very interesting! Actually I was looking at your tweet yesterday, which made me think of another question. Maybe top vs bottom perf varies by task and model?

4/5

That'd be awesome. Thanks

5/5

This method happens to work so much better than the regular prompting, which was also extensively tested in this paper: [2403.05004] Can't Remember Details in Long Documents? You Need Some R&R

The perf gap isn't small at all, even for GPT4 Turbo, which is very interesting.

1/1

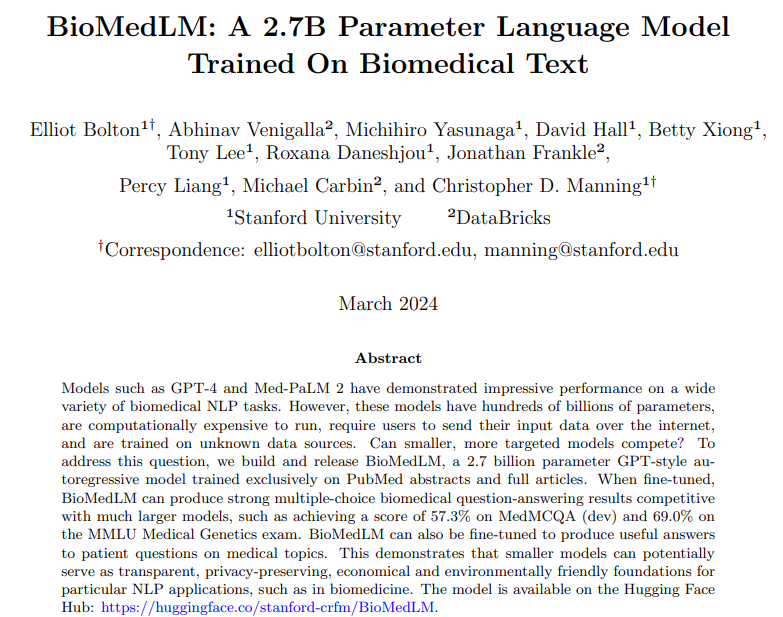

BioMedLM: A 2.7B Parameter Language Model Trained On Biomedical Text

weights: stanford-crfm/BioMedLM · Hugging Face

abs: [2403.18421] BioMedLM: A 2.7B Parameter Language Model Trained On Biomedical Text

BioMedLM: A 2.7B Parameter Language Model Trained On Biomedical Text

weights: stanford-crfm/BioMedLM · Hugging Face

abs: [2403.18421] BioMedLM: A 2.7B Parameter Language Model Trained On Biomedical Text

1/1

Google presents Long-form factuality in large language models

- Proposes that LLM agents can be used as automated evaluators for longform factuality

- Shows that LLM agents can achieve superhuman rating performance

repo: GitHub - google-deepmind/long-form-factuality: Benchmarking long-form factuality in large language models. Original code for our paper "Long-form factuality in large language models".

abs: [2403.18802] Long-form factuality in large language models

Google presents Long-form factuality in large language models

- Proposes that LLM agents can be used as automated evaluators for longform factuality

- Shows that LLM agents can achieve superhuman rating performance

repo: GitHub - google-deepmind/long-form-factuality: Benchmarking long-form factuality in large language models. Original code for our paper "Long-form factuality in large language models".

abs: [2403.18802] Long-form factuality in large language models

1/1

DINO-Tracker: Taming DINO for Self-Supervised Point Tracking in a Single Video

- Presents a new framework for long-term dense tracking in video

- Achieves SotA results and significantly outperforms SSL methods

proj: DINO-Tracker: Taming DINO for Self-Supervised Point Tracking in a Single Video

abs: [2403.14548] DINO-Tracker: Taming DINO for Self-Supervised Point Tracking in a Single Video

DINO-Tracker: Taming DINO for Self-Supervised Point Tracking in a Single Video

- Presents a new framework for long-term dense tracking in video

- Achieves SotA results and significantly outperforms SSL methods

proj: DINO-Tracker: Taming DINO for Self-Supervised Point Tracking in a Single Video

abs: [2403.14548] DINO-Tracker: Taming DINO for Self-Supervised Point Tracking in a Single Video

1/2

InternLM2 Technical Report

- Presents an open-source LLM (1.8 ~ 20B params) trained over 2T tokens

- Equipped with GQA and trained on up to 32k contexts

- Intermediate checkpoints and detailed description of training framework and dataset available

2/2

Anyone knows a paper that compares the performance of LLMs with user prompt at the top vs. bottom of the user input (e.g. this image)?

It appears that the top typically works better, but it'd be an interesting problem if there was no paper written yet.

When processing a text: prompt before it or after it?

InternLM2 Technical Report

- Presents an open-source LLM (1.8 ~ 20B params) trained over 2T tokens

- Equipped with GQA and trained on up to 32k contexts

- Intermediate checkpoints and detailed description of training framework and dataset available

2/2

Anyone knows a paper that compares the performance of LLMs with user prompt at the top vs. bottom of the user input (e.g. this image)?

It appears that the top typically works better, but it'd be an interesting problem if there was no paper written yet.

When processing a text: prompt before it or after it?