Can anyone recommend a a program that helps getting past AI detection when writing something like a letter for work? I use ChatGPT sometimes, and I’ve been testing out programs that rewrite what I created, but it either gets flagged on a checker or sounds terrible. I even tried paying for a program to rewrite and that still got flagged.

You are using an out of date browser. It may not display this or other websites correctly.

You should upgrade or use an alternative browser.

You should upgrade or use an alternative browser.

The A.I Megathread (LLM , GPT , Development)

More options

Who Replied?Can anyone recommend a a program that helps getting past AI detection when writing something like a letter for work? I use ChatGPT sometimes, and I’ve been testing out programs that rewrite what I created, but it either gets flagged on a checker or sounds terrible. I even tried paying for a program to rewrite and that still got flagged.

it's my understanding that most of the AI detection services are BS. lots of reports of users submitting original papers and being accused of being written by AI.

you can either show chatgpt examples of your original writing style and tell it to emulate it to write a letter for you or prompt it to describe your writing style and use that description to create a letter.

10 Prompt Engineering Hacks to get 10x Better Results 🎯

Works with ChatGPT, Claude and other Opensource LLMs 💥

10 Prompt Engineering Hacks to get 10x Better Results

Works with ChatGPT, Claude and other Opensource LLMs

SHUBHAM SABOO

MAR 10, 2024

6

10 Prompt Engineering Hacks to get 10x Better Results

In today’s landscape, whether you’re crafting content or tackling real-world challenges, the essence of creativity and innovation is crucial. It's the lifeline that connects your work to its audience, ensuring engagement and impact. But do you feel sometimes that when you are brainstorming with your AI assistant for ideas, it is giving you very generic or repetitive solutions to your problem?

A research by the Wharton School indicates that AI-generated ideas are high in quality but lack diversity of ideas, limiting novelty and the overall quality of the best idea. Traditional AI prompting, when AI is left on its own to think of ideas, tends to generate ideas that are less varied compared to those generated by human groups, a higher Cosine Similarity scores, indicating closer similarity among the ideas.

https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https://substack-post-media.s3.amazonaws.com/public/images/b9c1b3e8-336b-44dd-8a97-a9ce74e23380_1352x984.png

One of the methods that stands out here is called Chain-of-Thought (CoT) prompting. This approach involves breaking down the brainstorming task into micro-tasks. It turns out that this method not only makes AI’s ideas as diverse as those from human groups but also leads to more unique ideas.

The study also emphasizes how important it is to carefully choose how we prompt AI. Different ways of starting the brainstorming process, like using specific instructions or adopting different personas, can make a big difference in how varied the ideas are.

10 Practical Prompting Strategies

To translate these academic insights into practical tips, we have 10 tips to make your LLM prompts work 10x better, drawing directly from the above findings. These are designed to optimize how you interact with LLMs, ensuring that your prompts yield the most creative, diverse, and valuable responses.

Be Specific in Your Request: Moving from a generic prompt to a more detailed query not only sharpens the focus of the response but also encourages a more nuanced and informative output.

https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https://substack-post-media.s3.amazonaws.com/public/images/f30c5b33-691c-464d-b084-277d375509b8_1886x882.png

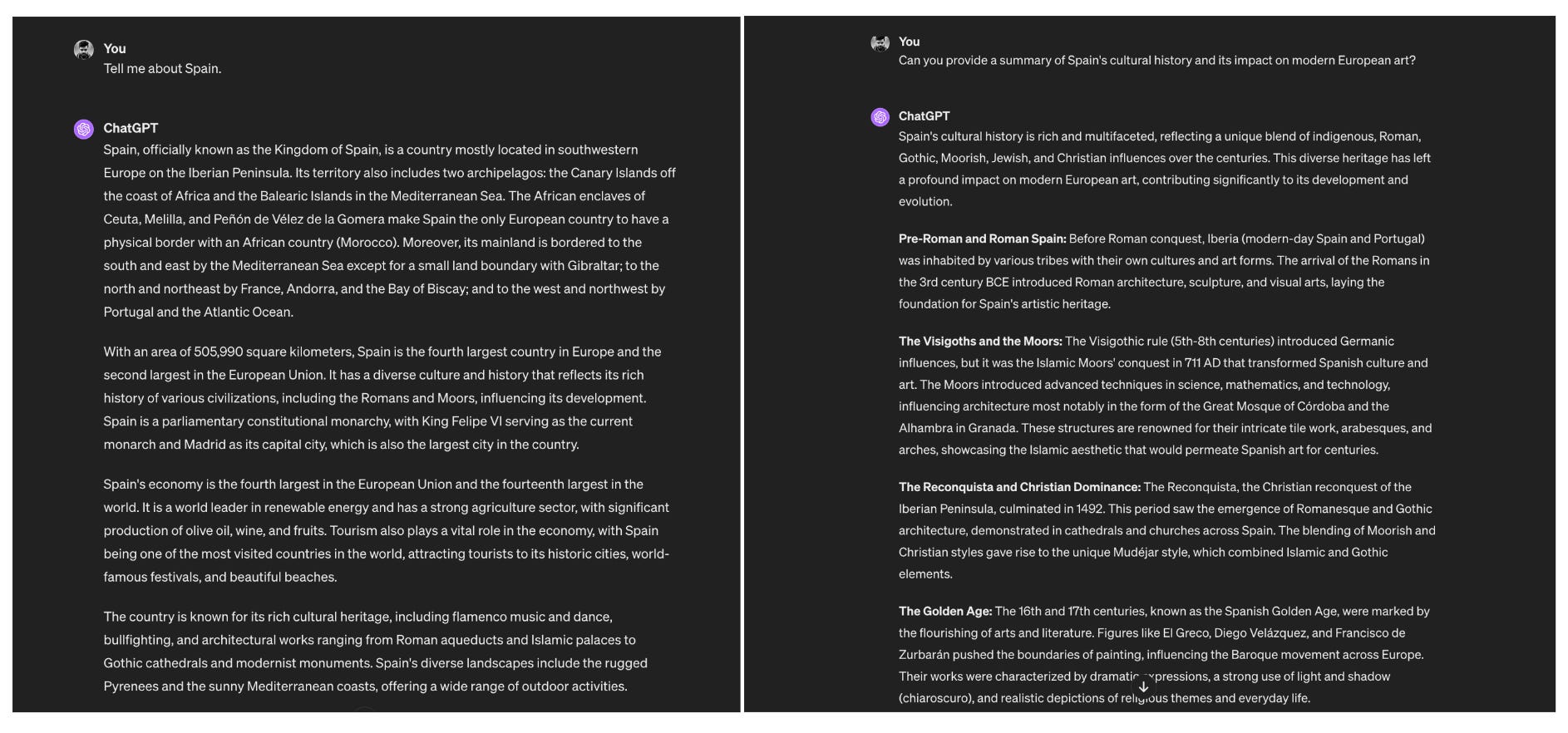

Include Context or Background: Providing context transforms a simple question into a comprehensive query that anticipates and addresses potential nuances.

https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https://substack-post-media.s3.amazonaws.com/public/images/95270c4d-612d-4ba8-bcd9-0adb94c0ea37_2540x1112.png

Ask for Examples or Explanations: This approach enriches the response, making abstract concepts tangible and understandable.

https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https://substack-post-media.s3.amazonaws.com/public/images/b4f37a15-2a91-4671-b22a-092ac5942ead_2690x1154.png

Use Open-Ended Questions for Broader Insights: Encouraging expansive thinking by asking open-ended questions can unearth deeper insights.

https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https://substack-post-media.s3.amazonaws.com/public/images/6691c0c9-07ac-4f67-8a8d-31f5caa77315_1168x1280.png

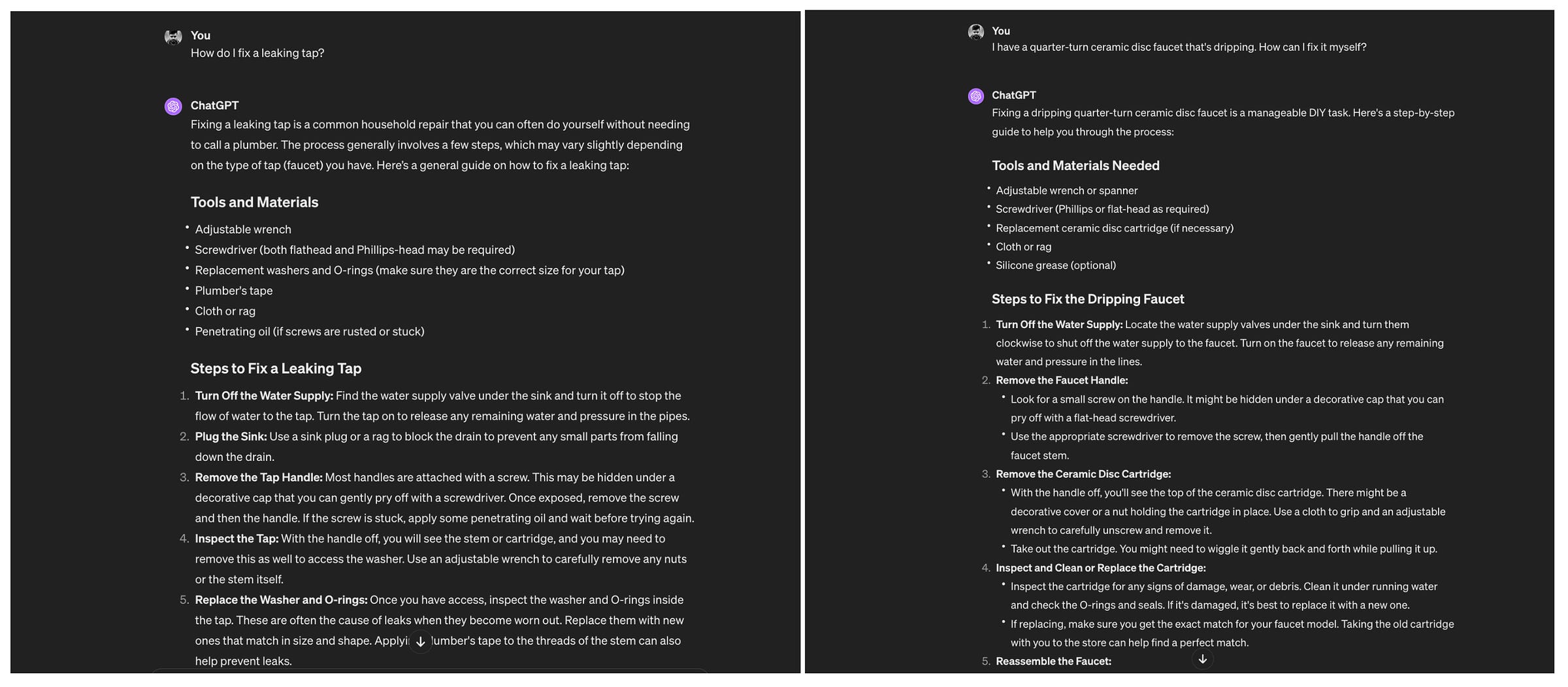

Request Step-by-Step Instructions: This hack is about seeking clarity and actionable guidance.

Generic Prompt: “How to bake a cake.”

Generic Prompt: “How to bake a cake.”

Improved Prompt: “Can you give me a step-by-step guide to baking a chocolate cake for beginners?”

Improved Prompt: “Can you give me a step-by-step guide to baking a chocolate cake for beginners?”

https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https://substack-post-media.s3.amazonaws.com/public/images/da8a02a1-f91a-461d-aafe-1b3a0ebd580a_1124x1336.png

Specify the Desired Format of Your Answer: Directing the format of the AI's response can greatly enhance its utility and relevance.

Generic Prompt: “Ideas for a garden party.”

Generic Prompt: “Ideas for a garden party.”

Improved Prompt: “Can you list 5 creative themes for a garden party, including decoration and food ideas?”

Improved Prompt: “Can you list 5 creative themes for a garden party, including decoration and food ideas?”

https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https://substack-post-media.s3.amazonaws.com/public/images/6107a0a1-6c09-4bbc-94f3-b7dc02596637_2266x1014.png

Incorporate Keywords for Clarity: Keywords act as beacons that guide the AI to focus on the most relevant aspects of a query.

Generic Prompt: “Improve writing skills.”

Generic Prompt: “Improve writing skills.”

Improved Prompt: “What are effective strategies to enhance academic writing skills for university students?”

Improved Prompt: “What are effective strategies to enhance academic writing skills for university students?”

https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https://substack-post-media.s3.amazonaws.com/public/images/a9079cee-0c29-4eca-bb49-d90738423047_2316x1040.png

Limit Your Scope: Narrowing the scope of your inquiry helps in obtaining more focused and applicable answers.

Generic Prompt: “Tell me about renewable energy.”

Generic Prompt: “Tell me about renewable energy.”

Improved Prompt: “What are the top three renewable energy sources suitable for urban areas, and why?”

Improved Prompt: “What are the top three renewable energy sources suitable for urban areas, and why?”

https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https://substack-post-media.s3.amazonaws.com/public/images/46ee9393-0a5e-4c16-8a93-ed9d27538cc2_2780x1132.png

Clarify the Purpose of Your Query: Expressing the purpose behind your question can tailor the response to your exact needs.

Generic Prompt: "Information on Python coding."

Generic Prompt: "Information on Python coding."

Improved Prompt: "I'm a beginner in programming looking to automate daily tasks. Can you recommend Python libraries and resources?"

Improved Prompt: "I'm a beginner in programming looking to automate daily tasks. Can you recommend Python libraries and resources?"

https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https://substack-post-media.s3.amazonaws.com/public/images/76e655b7-98fc-4db5-8ee5-2c92443c4c22_2640x1124.png

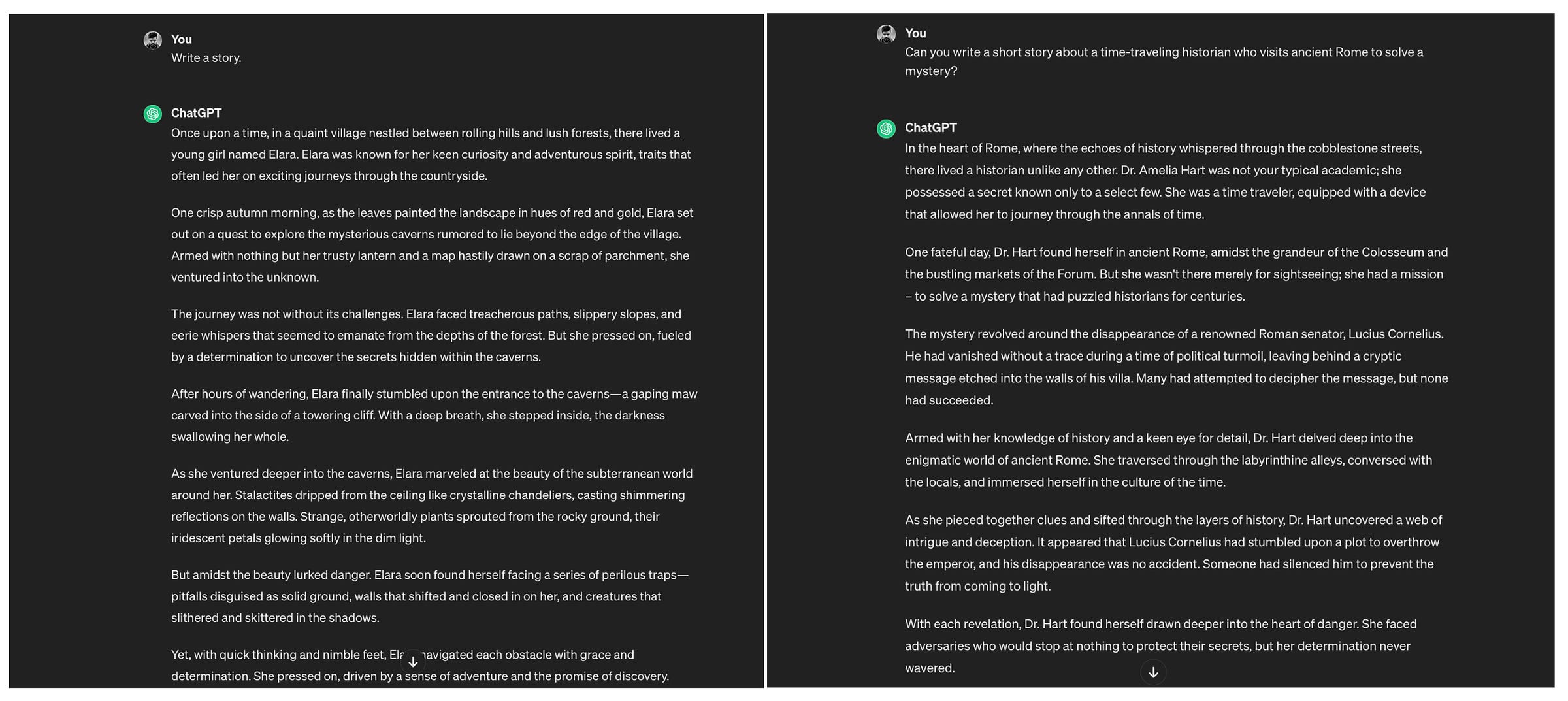

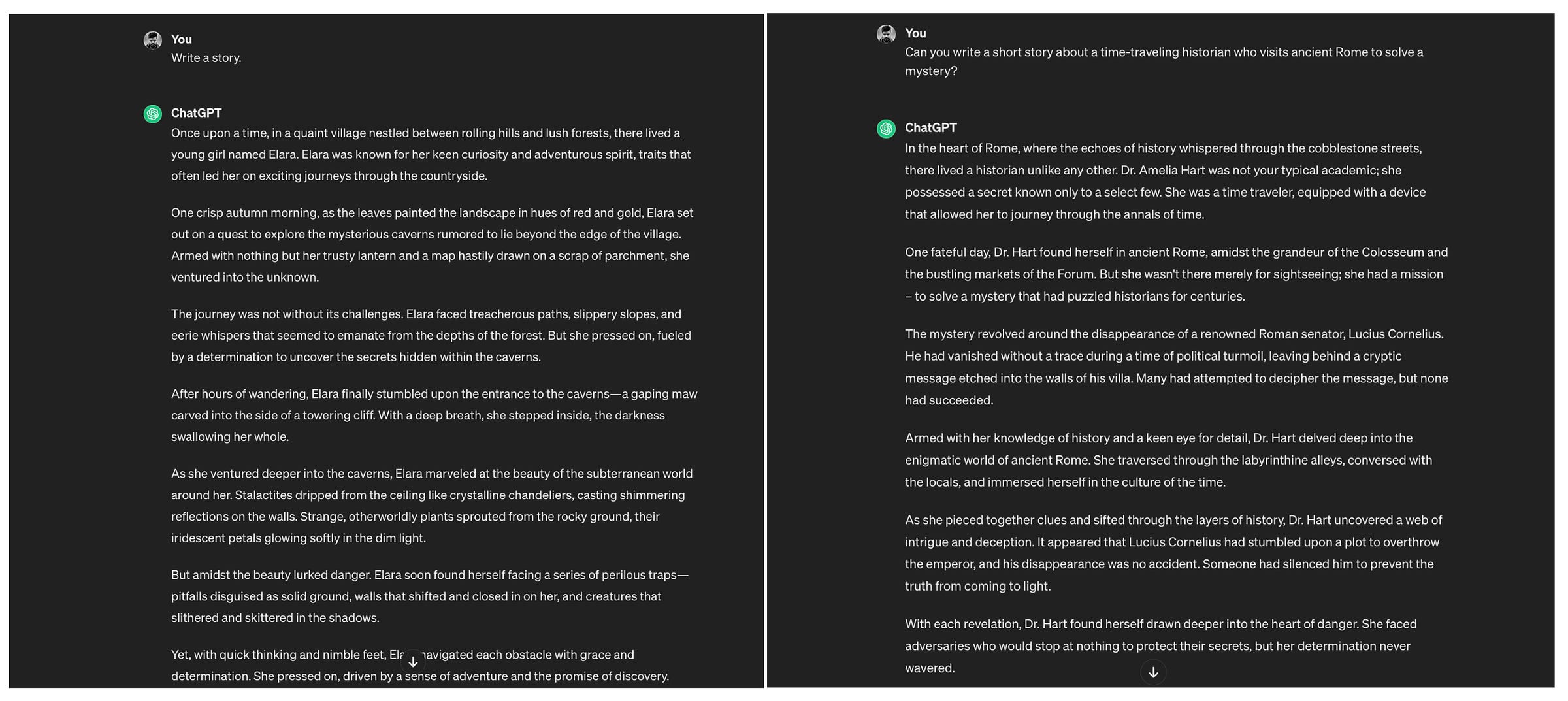

Incorporate a Creative Angle: Injecting creativity into your prompts not only makes the interaction more enjoyable but also pushes the AI to generate more unique and imaginative responses.

Generic Prompt: "Write a story."

Generic Prompt: "Write a story."

Improved Prompt: "Can you write a short story about a time-traveling historian who visits ancient Rome to solve a mystery?"

Improved Prompt: "Can you write a short story about a time-traveling historian who visits ancient Rome to solve a mystery?"

https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https://substack-post-media.s3.amazonaws.com/public/images/2b2dabaf-b030-4ac1-9898-53fec64328a4_2594x1160.png

Applying these research-driven strategies for prompt engineering can significantly enhance the effectiveness of your interactions with AI like ChatGPT, turning it into a more powerful tool for generating ideas, solving problems, and gaining knowledge.

That’s all for today![/SIZE]

https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https://substack-post-media.s3.amazonaws.com/public/images/da8a02a1-f91a-461d-aafe-1b3a0ebd580a_1124x1336.png

Specify the Desired Format of Your Answer: Directing the format of the AI's response can greatly enhance its utility and relevance.

https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https://substack-post-media.s3.amazonaws.com/public/images/6107a0a1-6c09-4bbc-94f3-b7dc02596637_2266x1014.png

Incorporate Keywords for Clarity: Keywords act as beacons that guide the AI to focus on the most relevant aspects of a query.

https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https://substack-post-media.s3.amazonaws.com/public/images/a9079cee-0c29-4eca-bb49-d90738423047_2316x1040.png

Limit Your Scope: Narrowing the scope of your inquiry helps in obtaining more focused and applicable answers.

https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https://substack-post-media.s3.amazonaws.com/public/images/46ee9393-0a5e-4c16-8a93-ed9d27538cc2_2780x1132.png

Clarify the Purpose of Your Query: Expressing the purpose behind your question can tailor the response to your exact needs.

https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https://substack-post-media.s3.amazonaws.com/public/images/76e655b7-98fc-4db5-8ee5-2c92443c4c22_2640x1124.png

Incorporate a Creative Angle: Injecting creativity into your prompts not only makes the interaction more enjoyable but also pushes the AI to generate more unique and imaginative responses.

https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https://substack-post-media.s3.amazonaws.com/public/images/2b2dabaf-b030-4ac1-9898-53fec64328a4_2594x1160.png

Applying these research-driven strategies for prompt engineering can significantly enhance the effectiveness of your interactions with AI like ChatGPT, turning it into a more powerful tool for generating ideas, solving problems, and gaining knowledge.

That’s all for today![/SIZE]

Elon Musk to open-source AI chatbot Grok this week | TechCrunch

Elon Musk's AI startup xAI will open-source Grok, its chatbot rivaling ChatGPT, this week, the entrepreneur said, days after suing OpenAI and complaining Elon Musk's AI startup xAI will open-source Grok, its chatbot rivaling ChatGPT, this week, he said.

Elon Musk says xAI will open-source Grok this week

Manish Singh @refsrc / 5:06 AM EDT•March 11, 2024Comment

Image Credits: Jaap Arriens/NurPhoto (opens in a new window)/ Getty Images

Elon Musk’s AI startup xAI will open-source Grok, its chatbot rivaling ChatGPT, this week, the entrepreneur said, days after suing OpenAI and complaining that the Microsoft-backed startup had deviated from its open-source roots.

xAI released Grok last year, arming it with features including access to “real-time” information and views undeterred by “politically correct” norms. The service is available to customers paying for X’s $16 monthly subscription.

Musk, who didn’t elaborate on what all aspects of Grok he planned to open-source, helped co-found OpenAI with Sam Altman nearly a decade ago as a counterweight to Google’s dominance in artificial intelligence. But OpenAI, which was required to also make its technology “freely available” to the public, has become closed-source and shifted focus to maximizing profits for Microsoft, Musk alleged in the lawsuit filed late last month. (Read OpenAI’s response here.)

“To this day, OpenAI’s website continues to profess that its charter is to ensure that AGI ‘benefits all of humanity.’ In reality, however, OpenAI has been transformed into a closed-source de facto subsidiary of the largest technology company in the world: Microsoft,” Musk’s lawsuit alleged.

The lawsuit has ignited a debate among many technologists and investors about the merits of open-source AI. Vinod Khosla, whose firm is among the earliest backers of OpenAI, called Musk’s legal action a “massive distraction from the goals of getting to AGI and its benefits.”

Marc Andreessen, co-founder of Andreessen Horowitz, accusing Khosla of “lobbying to ban open source” research in AI. “Every significant new technology that advances human well-being is greeted by a ginned-up moral panic,” said Andreessen, whose firm a16z has backed Mistral, whose chatbot is open-source. “This is just the latest.”

The promise to imminently open-source Grok will help xAI join the list of a number of growing firms, including Meta and Mistral, that have published the codes of their chatbots to the public.

Musk has long been a proponent of open-source. Tesla, another firm he leads, has open-sourced many of its patents. “Tesla will not initiate patent lawsuits against anyone who, in good faith, wants to use our technology,” Musk said in 2014. X, formerly known as Twitter, also open-sourced some of its algorithms last year.

He reaffirmed his criticism of Altman-led firm Monday, saying, “OpenAI is a lie.”

Nvidia is sued by authors over AI use of copyrighted works

By Jonathan StempelMarch 11, 20245:02 AM EDT

Updated 6 hours ago

- Companies

- NVIDIA Corp

Follow - Microsoft Corp

Follow - New York Times Co

Follow

March 10 (Reuters) - Nvidia (NVDA.O), opens new tab, whose chips power artificial intelligence, has been sued by three authors who said it used their copyrighted books without permission to train its NeMo, opens new tab AI platform.

Brian Keene, Abdi Nazemian and Stewart O'Nan said their works were part of a dataset of about 196,640 books that helped train NeMo to simulate ordinary written language, before being taken down in October "due to reported copyright infringement."

In a proposed class action filed on Friday night in San Francisco federal court, the authors said the takedown reflects Nvidia's having "admitted" it trained NeMo on the dataset, and thereby infringed their copyrights.

They are seeking unspecified damages for people in the United States whose copyrighted works helped train NeMo's so-called large language models in the last three years.

Among the works covered by the lawsuit are Keene's 2008 novel "Ghost Walk," Nazemian's 2019 novel "Like a Love Story," and O'Nan's 2007 novella "Last Night at the Lobster."

Nvidia declined to comment on Sunday. Lawyers for the authors did not immediately respond to requests on Sunday for additional comment.

[1/2]The logo of NVIDIA as seen at its corporate headquarters in Santa Clara, California, in May of 2022. Courtesy NVIDIA/Handout via REUTERS/File Photo Purchase Licensing Rights, opens new tab

The lawsuit drags Nvidia into a growing body of litigation by writers, as well as the New York Times, over generative AI, which creates new content based on inputs such as text, images and sounds.

Nvidia touts NeMo as a fast and affordable way to adopt generative AI, opens new tab.

Other companies sued over the technology have included OpenAI, which created the AI platform ChatGPT, and its partner Microsoft (MSFT.O), opens new tab.

AI's rise has made Nvidia a favorite of investors.

The Santa Clara, California-based chipmaker's stock price has risen almost 600% since the end of 2022, giving Nvidia a market value of nearly $2.2 trillion.

The case is Nazemian et al v Nvidia Corp, U.S. District Court, Northern District of California, No. 24-01454.

Reporting by Jonathan Stempel in New York; Editing by Josie Kao

Silicon Valley is pricing academics out of AI research

With eye-popping salaries and access to costly computing power, AI companies are draining academia of talent

By Naomi Nix, Cat Zakrzewski and Gerrit De VynckMarch 10, 2024 at 7:00 a.m. EDT

Listen

9 mi

Comment 241

Fei-Fei Li, the “godmother of artificial intelligence,” delivered an urgent plea to President Biden in the glittering ballroom of San Francisco’s Fairmont Hotel last June.

The Stanford professor asked Biden to fund a national warehouse of computing power and data sets — part of a “moonshot investment” allowing the country’s top AI researchers to keep up with tech giants.

She elevated the ask Thursday at Biden’s State of the Union address, which Li attended as a guest of Rep. Anna G. Eshoo (D-Calif.) to promote a bill to fund a national AI repository.

Li is at the forefront of a growing chorus of academics, policymakers and former employees who argue the sky-high cost of working with AI models is boxing researchers out of the field, compromising independent study of the burgeoning technology.

As companies like Meta, Google and Microsoft funnel billions of dollars into AI, a massive resources gap is building with even the country’s richest universities. Meta aims to procure 350,000 of the specialized computer chips — called GPUs — necessary to run gargantuan calculations on AI models. In contrast, Stanford’s Natural Language Processing Group has 68 GPUs for all of its work.

To obtain the expensive computing power and data required to research AI systems, scholars frequently partner with tech employees. Meanwhile, tech firms’ eye-popping salaries are draining academia of star talent.

Big tech companies now dominate breakthroughs in the field. In 2022, the tech industry created 32 significant machine learning models, while academics produced three, a significant reversal from 2014, when the majority of AI breakthroughs originated in universities, according to a Stanford report.

Researchers say this lopsided power dynamic is shaping the field in subtle ways, pushing AI scholars to tailor their research for commercial use. Last month, Meta CEO Mark Zuckerberg announced the company’s independent AI research lab would move closer to its product team, ensuring “some level of alignment” between the groups, he said.

“The public sector is now significantly lagging in resources and talent compared to that of industry,” said Li, a former Google employee and the co-director of the Stanford Institute for Human-Centered AI. “This will have profound consequences because industry is focused on developing technology that is profit-driven, whereas public sector AI goals are focused on creating public goods.”

This agency is tasked with keeping AI safe. Its offices are crumbling.

Some are pushing for new sources of funding. Li has been making the rounds in Washington, huddling with White House Office of Science and Technology Director Arati Prabhakar, dining with the political press at a swanky seafood and steakhouse and visiting Capitol Hill for meetings with lawmakers working on AI, including Sens. Martin Heinrich (D-N.M.), Mike Rounds (R-S.D.) and Todd Young (R-Ind.).

Large tech companies have contributed computing resources to the National AI Research Resource, the national warehouse project, including a $20 million donation in computing credits from Microsoft.

“We have long embraced the importance of sharing knowledge and compute resources with our colleagues within academia,” Microsoft Chief Scientific Officer Eric Horvitz said in a statement.

Policymakers are taking some steps to address the funding gaps. Last year, the National Science Foundation announced $140 million investment to launch seven university-led National AI Research Institutes to examine how AI could mitigate the effects of climate change and improve education, among other topics.

Eshoo said she hopes to pass the Create AI Act, which has bipartisan backing in the House and Senate, by the end of the year, when she is scheduled to retire. The legislation “essentially democratizes AI,” Eshoo said.

But scholars say this infusion may not come quickly enough.

As Silicon Valley races to build chatbots and image generators, it is drawing would-be computer science professors with high salaries and the chance to work on interesting AI problems. Nearly, 70 percent of people with artificial intelligence PhDs end up getting a job in private industry compared with 21 percent of graduates two decades ago, according to a 2023 report.

{continued}

Amid explosive demand, America is running out of power

Big Tech’s AI boom has pushed the salaries for the best researchers to new heights. Median compensation packages for AI research scientists at Meta climbed from $256,000 in 2020 to $335,250 in 2023, according to Levels.fyi, a salary-tracking website. True stars can attract even more cash: AI engineers with a PhD and several years of experience building AI models can command compensation as high as $20 million over four years, said Ali Ghodsi, who as CEO of AI start-up DataBricks is regularly competing to hire AI talent.

“The compensation is through the roof. It’s ridiculous,” he said. “It’s not an uncommon number to hear, roughly.”

University academics often have little choice but to work with industry researchers, with the company footing the bill for computing power and offering data. Nearly 40 percent of papers presented at leading AI conferences in 2020 had at least one tech employee author, according to the 2023 report. And industry grants often fund PhD students to perform research, said Mohamed Abdalla, a scientist at the Canadian-based Institute for Better Health at Trillium Health Partners, who has conducted research on the effect of industry on academics’ AI research.

“It was like a running joke that like everyone is getting hired by them,” Abdalla said. “And the people that were remaining, they were funded by them — so in a way hired by them.”

Google believes private companies and universities should work together to develop the science behind AI, said Jane Park, a spokesperson for the company. Google still routinely publishes its research publicly to benefit the broader AI community, Park said.

David Harris, a former research manager for Meta’s responsible AI team, said corporate labs may not censor the outcome of research but may influence which projects get tackled.

“Any time you see a mix of authors who are employed by a company and authors who work at a university, you should really scrutinize the motives of the company for contributing to that work,” said Harris, who is now a chancellor’s public scholar at the University of California at Berkeley. “We used to look at people employed in academia to be neutral scholars, motivated only by the pursuit of truth and the interest of society.”

These fake images reveal how AI amplifies our worst stereotypes

Tech giants procure huge amounts of computing powerthrough data centers and have access to GPUs — specialized computer chips that are necessary for running the gargantuan calculations needed for AI. These resources are expensive: A recent report from Stanford University researchers estimatedGoogle DeepMind’s large language model, Chinchilla, cost $2.1 million to develop. More than 100 top artificial intelligence researchers on Tuesday urged generative AI companies to offer a legal and technical safe harbor to researchers so they can scrutinize their products without the fear that internet platforms will suspend their accounts or threaten legal action.

A GPU made by Nvidia. (Joel Saget/AFP/Getty Images)

A GPU made by Nvidia. (Joel Saget/AFP/Getty Images)

The necessity for advanced computing power is likely to only grow stronger as AI scientists crunch more data to improve the performance of their models, said Neil Thompson, director of the FutureTech research project at MIT’s Computer Science and Artificial Intelligence Lab, which studies progress in computing.

“To keep getting better, [what] you expect to need is more and more money, more and more computers, more and more data,” Thompson said. “What that’s going to mean is that people who do not have as much compute [and] who do not have as many resources are going to stop being able to participate.”

Tech companies like Meta and Google have historically run their AI research labs to resemble universities where scientists decide what projects to pursue to advance the state of research, according to people familiar with the matter who spoke on the condition of anonymity to speak to private company matters.

Those workers were largely isolated from teams focused on building products or generating revenue, the people said. They were judged by publishing influential papers or notable breakthroughs — similar metrics to peers at universities, the people said. Meta top AI scientists Yann LeCun and Joelle Pineau hold dual appointments at New York University and McGill University, blurring the lines between industry and academia.

Top AI researchers say OpenAI, Meta and more hinder independent evaluations

In an increasingly competitive market for generative AI products, research freedom inside companies could wane. Last April, Google announced it was merging two of its AI research groups DeepMind, an AI research company it acquired in 2010, and the Brain team from Google Research into one department called Google DeepMind. Last year, Google started to take more advantage of its own AI discoveries, sharing research papers only after the lab work had been turned into products, The Washington Post has reported.

Meta has also reshuffled its research teams. In 2022, the company placed FAIR under the helm of its VR division Reality Labs and last year reassigned some of the group’s researchers to a new generative AI product team. Last month, Zuckerberg told investors that FAIR would work “closer together” with the generative AI product team, arguing that while the two groups would still conduct research on “different time horizons,” it was helpful to the company “to have some level of alignment” between them.

“In a lot of tech companies right now, they hired research scientists that knew something about AI and maybe set certain expectations about how much freedom they would have to set their own schedule and set their own research agenda,” Harris said. “That’s changing, especially for the companies that are moving frantically right now to ship these products.”

Amid explosive demand, America is running out of power

Big Tech’s AI boom has pushed the salaries for the best researchers to new heights. Median compensation packages for AI research scientists at Meta climbed from $256,000 in 2020 to $335,250 in 2023, according to Levels.fyi, a salary-tracking website. True stars can attract even more cash: AI engineers with a PhD and several years of experience building AI models can command compensation as high as $20 million over four years, said Ali Ghodsi, who as CEO of AI start-up DataBricks is regularly competing to hire AI talent.

“The compensation is through the roof. It’s ridiculous,” he said. “It’s not an uncommon number to hear, roughly.”

University academics often have little choice but to work with industry researchers, with the company footing the bill for computing power and offering data. Nearly 40 percent of papers presented at leading AI conferences in 2020 had at least one tech employee author, according to the 2023 report. And industry grants often fund PhD students to perform research, said Mohamed Abdalla, a scientist at the Canadian-based Institute for Better Health at Trillium Health Partners, who has conducted research on the effect of industry on academics’ AI research.

“It was like a running joke that like everyone is getting hired by them,” Abdalla said. “And the people that were remaining, they were funded by them — so in a way hired by them.”

Google believes private companies and universities should work together to develop the science behind AI, said Jane Park, a spokesperson for the company. Google still routinely publishes its research publicly to benefit the broader AI community, Park said.

David Harris, a former research manager for Meta’s responsible AI team, said corporate labs may not censor the outcome of research but may influence which projects get tackled.

“Any time you see a mix of authors who are employed by a company and authors who work at a university, you should really scrutinize the motives of the company for contributing to that work,” said Harris, who is now a chancellor’s public scholar at the University of California at Berkeley. “We used to look at people employed in academia to be neutral scholars, motivated only by the pursuit of truth and the interest of society.”

These fake images reveal how AI amplifies our worst stereotypes

Tech giants procure huge amounts of computing powerthrough data centers and have access to GPUs — specialized computer chips that are necessary for running the gargantuan calculations needed for AI. These resources are expensive: A recent report from Stanford University researchers estimatedGoogle DeepMind’s large language model, Chinchilla, cost $2.1 million to develop. More than 100 top artificial intelligence researchers on Tuesday urged generative AI companies to offer a legal and technical safe harbor to researchers so they can scrutinize their products without the fear that internet platforms will suspend their accounts or threaten legal action.

The necessity for advanced computing power is likely to only grow stronger as AI scientists crunch more data to improve the performance of their models, said Neil Thompson, director of the FutureTech research project at MIT’s Computer Science and Artificial Intelligence Lab, which studies progress in computing.

“To keep getting better, [what] you expect to need is more and more money, more and more computers, more and more data,” Thompson said. “What that’s going to mean is that people who do not have as much compute [and] who do not have as many resources are going to stop being able to participate.”

Tech companies like Meta and Google have historically run their AI research labs to resemble universities where scientists decide what projects to pursue to advance the state of research, according to people familiar with the matter who spoke on the condition of anonymity to speak to private company matters.

Those workers were largely isolated from teams focused on building products or generating revenue, the people said. They were judged by publishing influential papers or notable breakthroughs — similar metrics to peers at universities, the people said. Meta top AI scientists Yann LeCun and Joelle Pineau hold dual appointments at New York University and McGill University, blurring the lines between industry and academia.

Top AI researchers say OpenAI, Meta and more hinder independent evaluations

In an increasingly competitive market for generative AI products, research freedom inside companies could wane. Last April, Google announced it was merging two of its AI research groups DeepMind, an AI research company it acquired in 2010, and the Brain team from Google Research into one department called Google DeepMind. Last year, Google started to take more advantage of its own AI discoveries, sharing research papers only after the lab work had been turned into products, The Washington Post has reported.

Meta has also reshuffled its research teams. In 2022, the company placed FAIR under the helm of its VR division Reality Labs and last year reassigned some of the group’s researchers to a new generative AI product team. Last month, Zuckerberg told investors that FAIR would work “closer together” with the generative AI product team, arguing that while the two groups would still conduct research on “different time horizons,” it was helpful to the company “to have some level of alignment” between them.

“In a lot of tech companies right now, they hired research scientists that knew something about AI and maybe set certain expectations about how much freedom they would have to set their own schedule and set their own research agenda,” Harris said. “That’s changing, especially for the companies that are moving frantically right now to ship these products.”

AILAB @ailab_sh , Twitter Profile - twstalker.com

@ailab_sh Unleashing the Power of AI, One Solution at a Time

1/1

Claude 3 Opus is great! It transformed 2h13m video into a blog post in ONE prompt, and it just... did it

#LLM #LLMs #Claude3

Claude 3 Opus is great! It transformed 2h13m video into a blog post in ONE prompt, and it just... did it

#LLM #LLMs #Claude3

1/2

Use a simple Google Sheet to test one-off prompts with various LLMs. Populate it with structured data from Gmail, Zendesk, Jira via Zapier. Test prompts on ~100 rows, refine, and deploy the best prompt & LLM for your use case. e.g. our in-house ArcanumSheet

2/2

Here's an idea - create a Zap @zapier that has parallel actions to use different LLMs (and their LLM-specific prompt since it's not a one-prompt fits all) on triggers from an application (Zendesk, Jira, Gmail, Hubspot) and then populate a Zapier Table to compare the results.

Use a simple Google Sheet to test one-off prompts with various LLMs. Populate it with structured data from Gmail, Zendesk, Jira via Zapier. Test prompts on ~100 rows, refine, and deploy the best prompt & LLM for your use case. e.g. our in-house ArcanumSheet

2/2

Here's an idea - create a Zap @zapier that has parallel actions to use different LLMs (and their LLM-specific prompt since it's not a one-prompt fits all) on triggers from an application (Zendesk, Jira, Gmail, Hubspot) and then populate a Zapier Table to compare the results.

1/2

Influence functions are a really cool way to understand why an LLM generated a specific answer to a prompt.

They allow you to trace a sequence of generated tokens back to the training documents that most influenced ita generation.

They also allow you to see which layers of the transformer influenced the generation.

2/2

Talk by Prof. Grosse:

Influence functions are a really cool way to understand why an LLM generated a specific answer to a prompt.

They allow you to trace a sequence of generated tokens back to the training documents that most influenced ita generation.

They also allow you to see which layers of the transformer influenced the generation.

2/2

Talk by Prof. Grosse:

Tracing Model Outputs to the Training Data

Anthropic is an AI safety and research company that's working to build reliable, interpretable, and steerable AI systems.

1/7

I made a Colab that turns a Github repo into 1 long but well-formatted prompt! Super useful for Long Context like #Claude Opus.

+ a map to show your LLM how everything is organized!

I used it to turn

@nomic_ai

's entire Github into one prompt, to ask questions.

Enjoy! Link

2/7

Github link (please star!):

github.com

github.com

It's a Colab so it's easy to use.

The instructions are there. You need a Github repo URL and an access token!

The example turns the Nomic Atlas repo into a long prompt: GitHub - nomic-ai/nomic: Interact, analyze and structure massive text, image, embedding, audio and video datasets

2/n (one more scroll)

3/7

The structure of the prompt is:

Today I Learned for programmers contents

Directory tree

filename1

‘’’

Code contents

‘’’

filename2

‘’’

Code contents

‘’’

….

The directory tree is recursively generated and is important so that your LLM understands the file structure of your codebase.

The readme provides valuable initial context.

All code is encapsulated in triple quotes to help organize for the LLM!

4/7

Might be useful to @GregKamradt @Francis_YAO_ @DrJimFan @erhartford

5/7

This is a neat little function, it's how I generate the repo filesystem tree

it's recursive!

sure my cs106b teacher would be thrilled

def build_directory_tree(owner, repo, path='', token=None, indent=0, file_paths=[]):

items = fetch_repo_content(owner, repo, path, token)

tree_str = ""

for item in items:

if '.github' in item['path'].split('/'):

continue

if item['type'] == 'dir':

tree_str += ' ' * indent + f"[{item['name']}/]\n"

tree_str += build_directory_tree(owner, repo, item['path'], token, indent + 1, file_paths)[0]

else:

tree_str += ' ' * indent + f"{item['name']}\n"

# Indicate which file extensions should be included in the prompt!

if item['name'].endswith(('.py', '.ipynb', '.html', '.css', '.js', '.jsx', '.rst', '.md')):

file_paths.append((indent, item['path']))

return tree_str, file_paths

6/7

let me know how it works for you!

personally i am realizing that most large codebases don't fit in the Poe message length limit even for 200k

7/7

of course! thanks

I made a Colab that turns a Github repo into 1 long but well-formatted prompt! Super useful for Long Context like #Claude Opus.

+ a map to show your LLM how everything is organized!

I used it to turn

@nomic_ai

's entire Github into one prompt, to ask questions.

Enjoy! Link

2/7

Github link (please star!):

GitHub - andrewgcodes/repo2prompt: Turn a Github Repo's contents into a big prompt for long-context models like Claude 3 Opus.

Turn a Github Repo's contents into a big prompt for long-context models like Claude 3 Opus. - andrewgcodes/repo2prompt

It's a Colab so it's easy to use.

The instructions are there. You need a Github repo URL and an access token!

The example turns the Nomic Atlas repo into a long prompt: GitHub - nomic-ai/nomic: Interact, analyze and structure massive text, image, embedding, audio and video datasets

2/n (one more scroll)

3/7

The structure of the prompt is:

Today I Learned for programmers contents

Directory tree

filename1

‘’’

Code contents

‘’’

filename2

‘’’

Code contents

‘’’

….

The directory tree is recursively generated and is important so that your LLM understands the file structure of your codebase.

The readme provides valuable initial context.

All code is encapsulated in triple quotes to help organize for the LLM!

4/7

Might be useful to @GregKamradt @Francis_YAO_ @DrJimFan @erhartford

5/7

This is a neat little function, it's how I generate the repo filesystem tree

it's recursive!

sure my cs106b teacher would be thrilled

def build_directory_tree(owner, repo, path='', token=None, indent=0, file_paths=[]):

items = fetch_repo_content(owner, repo, path, token)

tree_str = ""

for item in items:

if '.github' in item['path'].split('/'):

continue

if item['type'] == 'dir':

tree_str += ' ' * indent + f"[{item['name']}/]\n"

tree_str += build_directory_tree(owner, repo, item['path'], token, indent + 1, file_paths)[0]

else:

tree_str += ' ' * indent + f"{item['name']}\n"

# Indicate which file extensions should be included in the prompt!

if item['name'].endswith(('.py', '.ipynb', '.html', '.css', '.js', '.jsx', '.rst', '.md')):

file_paths.append((indent, item['path']))

return tree_str, file_paths

6/7

let me know how it works for you!

personally i am realizing that most large codebases don't fit in the Poe message length limit even for 200k

7/7

of course! thanks

1/2

Grok - Prompt Tuning Directives

Did you know most LLM systems support "prompt tuning" directives to tweak response generation? The attachment shows an example with original and tweaked prompts and responses. The prompt was tuned to:

⁃increase randomness (maximum temperature)

⁃exclude specific words from response (blue and red)

⁃keep response brief (limit output tokens)

The second response in the example is inaccurate due to increased randomness (hallucinations) and masking two correct colors. But prompt tuning can make stories, poems, song lyrics more interesting (also images, video, music).

These tuning directives are usually specified when using an API or PromptIDE, but some AI platforms will process them conversationally. With Grok it is hit or miss conversationally. Sometimes Grok ignores the prompt tuning directives but will do so if asked to.

In the first post reply I added a prompt directive cheatsheet Grok generated for me containing some of the tuning directives available.

2/2

Grok Prompt Directive Cheatsheet

Following was generated by Grok on March 8, 2024.

There is no guarantee any of these will work for you or will continue to work.

Grok (

@grok

)

Here's a structured cheat sheet for you, based on the information provided:

1.Temperature:

•Acceptable value range: 0 to 1

•Description: Controls the randomness of the generated text

•Example: Temperature = 0.8

2.Top-k sampling:

•Acceptable value range: Integer values, typically 1 to 100

•Description: Limits the number of possible next tokens that the model can choose from when generating text

•Example: Top-k = 40

3.Top-p (nucleus) sampling:

•Acceptable value range: 0 to 1

•Description: Similar to top-k sampling but considers the smallest possible set of tokens whose cumulative probability exceeds a given threshold (p)

•Example: Top-p = 0.9

4.Frequency and presence penalty:

•Acceptable value range: 0 to 1

•Description: Discourages the model from repeating the same words or phrases and encourages it to use less common words

•Example: Frequency penalty = 0.5, Presence penalty = 0.3

5.Stop-word list:

•Acceptable value range: List of words

•Description: Prevents the model from generating specific words or phrases as part of its output

•Example: Stop-word list = ["apple", "banana", "orange"]

6.Maximum output length:

•Acceptable value range: Integer values, typically 1 to 1000

•Description: Limits the number of tokens the model can generate as part of its output

•Example: Maximum output length = 200

7.Context length:

•Acceptable value range: Integer values, typically 1 to 1000

•Description: The number of tokens the model can consider when generating its output

•Example: Context length = 100

Please note that the acceptable value ranges provided are general guidelines and may vary depending on the specific LLM or AI system you are using.

Grok - Prompt Tuning Directives

Did you know most LLM systems support "prompt tuning" directives to tweak response generation? The attachment shows an example with original and tweaked prompts and responses. The prompt was tuned to:

⁃increase randomness (maximum temperature)

⁃exclude specific words from response (blue and red)

⁃keep response brief (limit output tokens)

The second response in the example is inaccurate due to increased randomness (hallucinations) and masking two correct colors. But prompt tuning can make stories, poems, song lyrics more interesting (also images, video, music).

These tuning directives are usually specified when using an API or PromptIDE, but some AI platforms will process them conversationally. With Grok it is hit or miss conversationally. Sometimes Grok ignores the prompt tuning directives but will do so if asked to.

In the first post reply I added a prompt directive cheatsheet Grok generated for me containing some of the tuning directives available.

2/2

Grok Prompt Directive Cheatsheet

Following was generated by Grok on March 8, 2024.

There is no guarantee any of these will work for you or will continue to work.

Grok (

@grok

)

Here's a structured cheat sheet for you, based on the information provided:

1.Temperature:

•Acceptable value range: 0 to 1

•Description: Controls the randomness of the generated text

•Example: Temperature = 0.8

2.Top-k sampling:

•Acceptable value range: Integer values, typically 1 to 100

•Description: Limits the number of possible next tokens that the model can choose from when generating text

•Example: Top-k = 40

3.Top-p (nucleus) sampling:

•Acceptable value range: 0 to 1

•Description: Similar to top-k sampling but considers the smallest possible set of tokens whose cumulative probability exceeds a given threshold (p)

•Example: Top-p = 0.9

4.Frequency and presence penalty:

•Acceptable value range: 0 to 1

•Description: Discourages the model from repeating the same words or phrases and encourages it to use less common words

•Example: Frequency penalty = 0.5, Presence penalty = 0.3

5.Stop-word list:

•Acceptable value range: List of words

•Description: Prevents the model from generating specific words or phrases as part of its output

•Example: Stop-word list = ["apple", "banana", "orange"]

6.Maximum output length:

•Acceptable value range: Integer values, typically 1 to 1000

•Description: Limits the number of tokens the model can generate as part of its output

•Example: Maximum output length = 200

7.Context length:

•Acceptable value range: Integer values, typically 1 to 1000

•Description: The number of tokens the model can consider when generating its output

•Example: Context length = 100

Please note that the acceptable value ranges provided are general guidelines and may vary depending on the specific LLM or AI system you are using.

1/1

Anthropic Cookbook Series

Here’s a set of six notebooks and four videos by

by

@ravithejads

that show you how to use Claude 3 (

@AnthropicAI

) to build any context-augmented LLM app, from simple-to-advanced RAG to agents

Basic RAG with LlamaIndex

Basic RAG with LlamaIndex

Routing for QA and Summarization

Routing for QA and Summarization

Sub-Question Decomposition for Complex Queries

Sub-Question Decomposition for Complex Queries

ReAct agent over RAG pipelines

ReAct agent over RAG pipelines

Multi-document agents for advanced multi-doc reasoning

Multi-document agents for advanced multi-doc reasoning

Multi-modal apps with LlamaIndex

Multi-modal apps with LlamaIndex

Big shoutout to

@alexalbert__

for the speedy reviews.

Full repo here: anthropic-cookbook/third_party/LlamaIndex at main · anthropics/anthropic-cookbook

Videos:

RAG:

Routing + Sub-Questions:

Agents:

Multimodal:

Anthropic Cookbook Series

Here’s a set of six notebooks and four videos

@ravithejads

that show you how to use Claude 3 (

@AnthropicAI

) to build any context-augmented LLM app, from simple-to-advanced RAG to agents

Big shoutout to

@alexalbert__

for the speedy reviews.

Full repo here: anthropic-cookbook/third_party/LlamaIndex at main · anthropics/anthropic-cookbook

Videos:

RAG:

Routing + Sub-Questions:

Agents:

Multimodal:

1/2

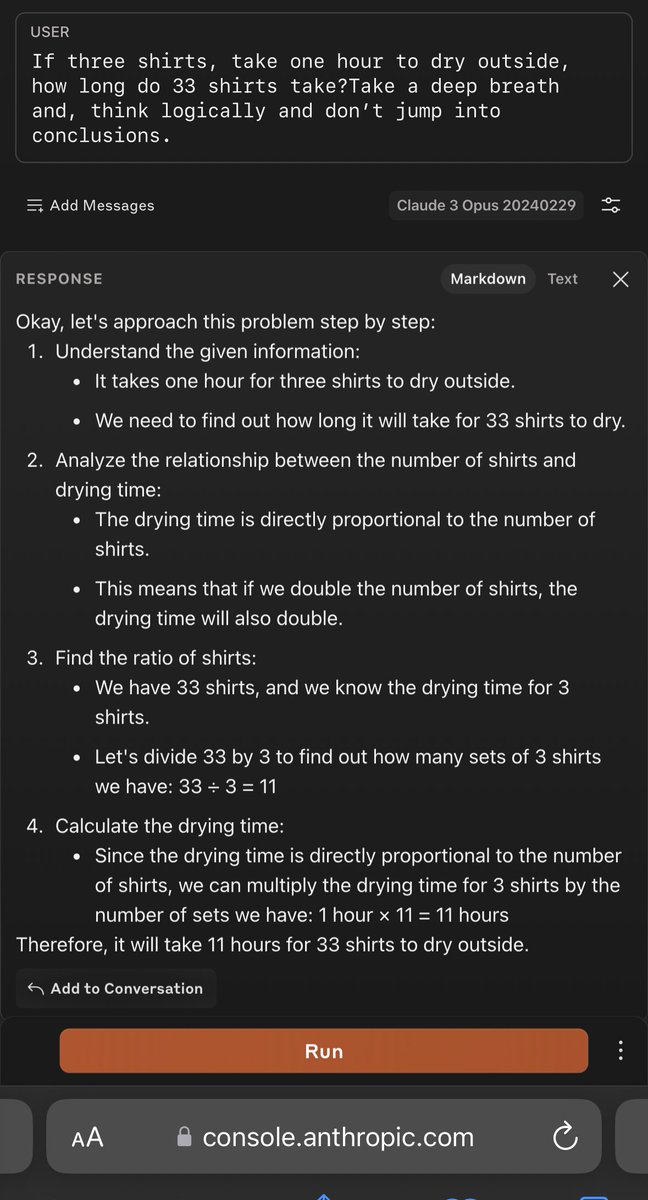

My new go-to LLM capability test:

“If three shirts, take one hour to dry outside, how long do 33 shirts take?”

GPT-4 Turbo

Mistral Large (Correct 50% of time)

Gemini Ultra

Claude 3 Opus

Inflection’s Pi

GPT-3.5

GPT-5 isn’t coming anytime soon. GPT-4 is holding up very well. And it came out a year ago!

2/2

Didn’t seem to work for this question. I agree it can be prompted to get to the right answer but I think it’s part of being a better model to be able to give the right answer in its default form. GPT-4 Turbo is probably _even better_ with prompting if its default state is so…

My new go-to LLM capability test:

“If three shirts, take one hour to dry outside, how long do 33 shirts take?”

GPT-4 Turbo

Mistral Large (Correct 50% of time)

Gemini Ultra

Claude 3 Opus

Inflection’s Pi

GPT-3.5

GPT-5 isn’t coming anytime soon. GPT-4 is holding up very well. And it came out a year ago!

2/2

Didn’t seem to work for this question. I agree it can be prompted to get to the right answer but I think it’s part of being a better model to be able to give the right answer in its default form. GPT-4 Turbo is probably _even better_ with prompting if its default state is so…