The A.I Megathread (LLM , GPT , Development)

OpenVoice: Versatile Instant Voice Cloning

Computer Science > Sound

[Submitted on 3 Dec 2023 (v1), last revised 2 Jan 2024 (this version, v5)]

OpenVoice: Versatile Instant Voice Cloning

Zengyi Qin, Wenliang Zhao, Xumin Yu, Xin Sun

We introduce OpenVoice, a versatile voice cloning approach that requires only a short audio clip from the reference speaker to replicate their voice and generate speech in multiple languages. OpenVoice represents a significant advancement in addressing the following open challenges in the field: 1) Flexible Voice Style Control. OpenVoice enables granular control over voice styles, including emotion, accent, rhythm, pauses, and intonation, in addition to replicating the tone color of the reference speaker. The voice styles are not directly copied from and constrained by the style of the reference speaker. Previous approaches lacked the ability to flexibly manipulate voice styles after cloning. 2) Zero-Shot Cross-Lingual Voice Cloning. OpenVoice achieves zero-shot cross-lingual voice cloning for languages not included in the massive-speaker training set. Unlike previous approaches, which typically require extensive massive-speaker multi-lingual (MSML) dataset for all languages, OpenVoice can clone voices into a new language without any massive-speaker training data for that language. OpenVoice is also computationally efficient, costing tens of times less than commercially available APIs that offer even inferior performance. To foster further research in the field, we have made the source code and trained model publicly accessible. We also provide qualitative results in our demo website. Prior to its public release, our internal version of OpenVoice was used tens of millions of times by users worldwide between May and October 2023, serving as the backend of MyShell.

| Field | Value |
|---|---|
| Comments: | Technical Report |
| Subjects: | Sound (cs.SD); Machine Learning (cs.LG); Audio and Speech Processing (eess.AS) |
| Cite as: | arXiv:2312.01479 [cs.SD] (or arXiv:2312.01479v5 [cs.SD] for this version) |

Submission history

From: Zengyi Qin

[v1] Sun, 3 Dec 2023 18:41:54 UTC (109 KB)

[v2] Wed, 13 Dec 2023 02:25:42 UTC (110 KB)

[v3] Sat, 16 Dec 2023 17:22:45 UTC (234 KB)

[v4] Thu, 21 Dec 2023 22:56:45 UTC (234 KB)

[v5] Tue, 2 Jan 2024 17:45:43 UTC (234 KB)

GitHub - myshell-ai/OpenVoice: Instant voice cloning by MIT and MyShell. Audio foundation model.

OpenVoice: Versatile Instant Voice Cloning | MyShell AI

Discover OpenVoice: Instant voice cloning technology that replicates voices from short audio clips. Supports multiple languages, emotion and accent control, and cross-lingual cloning. Efficient and cost-effective, outperforming commercial APIs. Explore the future of AI voice synthesis.

Pinokio: The 1-Click Localhost Cloud

Run your own personal Internet on Mac, Windows, and Linux with one click.

↓R↑LYB

I trained Sheng Long and Shonuff

The AI explosion is about to begin. Nvidia is about to start making custom chips for everyone

Exclusive: Nvidia pursues $30 billion custom chip opportunity with new unit

By Max A. Cherney and Stephen Nellis

February 9, 2024, 5:12 PM EST, updated 10 days ago

SAN FRANCISCO, Feb 9 (Reuters) - Nvidia (NVDA.O) is building a new business unit focused on designing bespoke chips for cloud computing firms and others, including advanced artificial intelligence (AI) processors, nine sources familiar with its plans told Reuters.

The dominant global designer and supplier of AI chips aims to capture a portion of an exploding market for custom AI chips and shield itself from the growing number of companies pursuing alternatives to its products.

The Santa Clara, California-based company controls about 80% of the high-end AI chip market, a position that has sent its stock market value up 40% so far this year to $1.73 trillion after it more than tripled in 2023.

Nvidia's customers, which include ChatGPT creator OpenAI, Microsoft (MSFT.O), Alphabet (GOOGL.O) and Meta Platforms (META.O), have raced to snap up the dwindling supply of its chips to compete in the fast-emerging generative AI sector.

Its H100 and A100 chips serve as a generalized, all-purpose AI processor for many of those major customers. But the tech companies have started to develop their own internal chips for specific needs. Doing so helps reduce energy consumption, and potentially can shrink the cost and time to design.

Nvidia is now attempting to play a role in helping these companies develop custom AI chips that have flowed to rival firms such as Broadcom (AVGO.O) and Marvell Technology (MRVL.O), said the sources, who declined to be identified because they were not authorized to speak publicly.

"If you're really trying to optimize on things like power, or optimize on cost for your application, you can't afford to go drop an H100 or A100 in there," Greg Reichow, general partner at venture capital firm Eclipse Ventures said in an interview. "You want to have the exact right mixture of compute and just the kind of compute that you need."

Nvidia does not disclose H100 prices, which are higher than for the prior-generation A100, but each chip can sell for $16,000 to $100,000 depending on volume and other factors. Meta plans to bring its total stock to 350,000 H100s this year.

Nvidia officials have met with representatives from Amazon.com (AMZN.O), Meta, Microsoft, Google and OpenAI to discuss making custom chips for them, two sources familiar with the meetings said. Beyond data center chips, Nvidia has pursued telecom, automotive and video game customers.

Nvidia shares rose 2.75% after the Reuters report, helping lift chip stocks overall. Marvell shares dropped 2.78%.

In 2022, Nvidia said it would let third-party customers integrate some of its proprietary networking technology with their own chips. It has said nothing about the program since, and Reuters is reporting its wider ambitions for the first time.

An Nvidia spokesperson declined to comment beyond the company's 2022 announcement.

Dina McKinney, a former Advanced Micro Devices (AMD.O) and Marvell executive, heads Nvidia's custom unit and her team's goal is to make its technology available for customers in cloud, 5G wireless, video games and automotives, a LinkedIn profile said. Those mentions were scrubbed and her title changed after Reuters sought comment from Nvidia.

Amazon, Google, Microsoft, Meta and OpenAI declined to comment.

$30 BILLION MARKET

According to estimates from research firm 650 Group’s Alan Weckel, the data center custom chip market will grow to as much as $10 billion this year, and double that in 2025.

The broader custom chip market was worth roughly $30 billion in 2023, which amounts to roughly 5% of annual global chip sales, according to Needham analyst Charles Shi.
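The market figures quoted above imply a couple of numbers that the article does not spell out. A minimal sketch of that arithmetic, assuming the rounded figures are taken at face value (the variable and function names are only illustrative, and treating "this year" as 2024 follows from the article's date):

```python
# All amounts are in billions of US dollars, copied from the article's rounded figures.
CUSTOM_CHIP_MARKET_2023 = 30   # broader custom chip market in 2023
CUSTOM_SHARE_PERCENT = 5       # "roughly 5%" of annual global chip sales
DATA_CENTER_CUSTOM_2024 = 10   # upper estimate for the data center custom chip market


def implied_total(part: float, share_percent: float) -> float:
    """Return the total that `part` would represent at `share_percent` percent."""
    return part * 100 / share_percent


# If $30B is about 5% of global chip sales, the implied total is about $600B.
implied_global_sales = implied_total(CUSTOM_CHIP_MARKET_2023, CUSTOM_SHARE_PERCENT)

# "Double that in 2025" applied to the $10B upper estimate.
data_center_custom_2025 = 2 * DATA_CENTER_CUSTOM_2024

print(f"Implied global chip sales: ${implied_global_sales:.0f}B")
print(f"Data center custom chips, 2025 estimate: ${data_center_custom_2025}B")
```

Because both inputs are described as rough, the roughly $600 billion total is an order-of-magnitude check rather than a precise figure.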

Currently, custom silicon design for data centers is dominated by Broadcom and Marvell.

In a typical arrangement, a design partner such as Nvidia would offer intellectual property and technology, but leave the chip fabrication, packaging and additional steps to Taiwan Semiconductor Manufacturing Co. (2330.TW) or another contract chip manufacturer.

Nvidia moving into this territory has the potential to eat into Broadcom and Marvell sales.

"With Broadcom's custom silicon business touching $10 billion, and Marvell’s around $2 billion, this is a real threat," said Dylan Patel, founder of silicon research group SemiAnalysis. "It's a real big negative - there's more competition entering the fray."

BEYOND AI

Nvidia is in talks with telecom infrastructure builder Ericsson (ERICb.ST) for a wireless chip that includes the chip designer's graphics processing unit (GPU) technology, two sources familiar with the discussions said.

Ericsson declined to comment.

650 Group's Weckel expects the telecom custom chip market to remain flat at roughly $4 billion to $5 billion a year.

Nvidia also plans to target the automotive and video game markets, according to sources and public social media postings.

Weckel expects the custom auto market to grow consistently from its current $6 billion to $8 billion range at 20% a year, and the $7 billion to $8 billion video game custom chip market could increase with the next-generation consoles from Xbox and Sony (6758.T).
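To get a feel for what 20% annual growth means for the custom auto chip market, here is a small compound-growth sketch. The three-year horizon is a hypothetical choice for illustration and does not come from the article; only the starting range and the growth rate do.

```python
def project(start: float, annual_rate: float, years: int) -> float:
    """Compound `start` forward by `annual_rate` for `years` whole years."""
    return start * (1 + annual_rate) ** years


# Figures from the article, in billions of US dollars.
AUTO_LOW, AUTO_HIGH = 6, 8
GROWTH_RATE = 0.20

# Hypothetical horizon, chosen only to illustrate the compounding.
HORIZON_YEARS = 3

low = project(AUTO_LOW, GROWTH_RATE, HORIZON_YEARS)
high = project(AUTO_HIGH, GROWTH_RATE, HORIZON_YEARS)

print(f"After {HORIZON_YEARS} years: ${low:.1f}B to ${high:.1f}B")
```

At that rate the range grows by a factor of 1.2 cubed, or about 1.73, over three years.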

Nintendo's current Switch handheld console already includes Nvidia's Tegra X1 chip. A new version of the Switch console expected this year is likely to include an Nvidia custom design, one source said.

Nintendo declined to comment.

Bill banning AI-generated child pornography, adding mandatory minimums passes Senate panel - South Dakota Searchlight

A bill that would adjust South Dakota law on child pornography includes provisions barring the possession of sexually explicit deep fakes of real children or artificial intelligence-generated children.

I showed Gemini 1.5 Pro the ENTIRE Self-Operating Computer codebase, and an example Gemini 1.5 API call.

From there, it was able to perfectly explain how the codebase works...

and then it implemented itself as a new supported model for the repo!

Not perfect, but very close.
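For anyone wanting to try something similar, one plausible way to hand a whole repository to a long-context model is to flatten it into a single text blob first. The sketch below is an assumption about how that could be done, not the method used in the post: `pack_codebase`, the file header format, and the default extensions are all hypothetical, and no model API is called.

```python
from pathlib import Path


def pack_codebase(root: str, extensions: tuple[str, ...] = (".py", ".md")) -> str:
    """Concatenate matching files under `root` into one string for a prompt.

    Each file is prefixed with a header line holding its relative path so the
    model can tell the files apart. The header format is an arbitrary choice.
    """
    root_path = Path(root)
    parts = []
    for path in sorted(root_path.rglob("*")):
        if path.is_file() and path.suffix in extensions:
            rel = path.relative_to(root_path).as_posix()
            text = path.read_text(encoding="utf-8", errors="replace")
            parts.append(f"--- FILE: {rel} ---\n{text}")
    return "\n\n".join(parts)


if __name__ == "__main__":
    packed = pack_codebase(".")
    print(f"Packed {len(packed)} characters")
```

The resulting string would then be pasted or sent along with a question; whether it fits depends on the model's context window.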

This has all been very fascinating! Thank you so much for sharing.

Some ideas I have:

- Upload the screenplays for all of seasons 1-3 of Star Trek: Voyager, then ask it to write additional episodes.

- Ingest multiple textbooks on electrical engineering and ask which chapters I should read for a given project.

Thoughts?

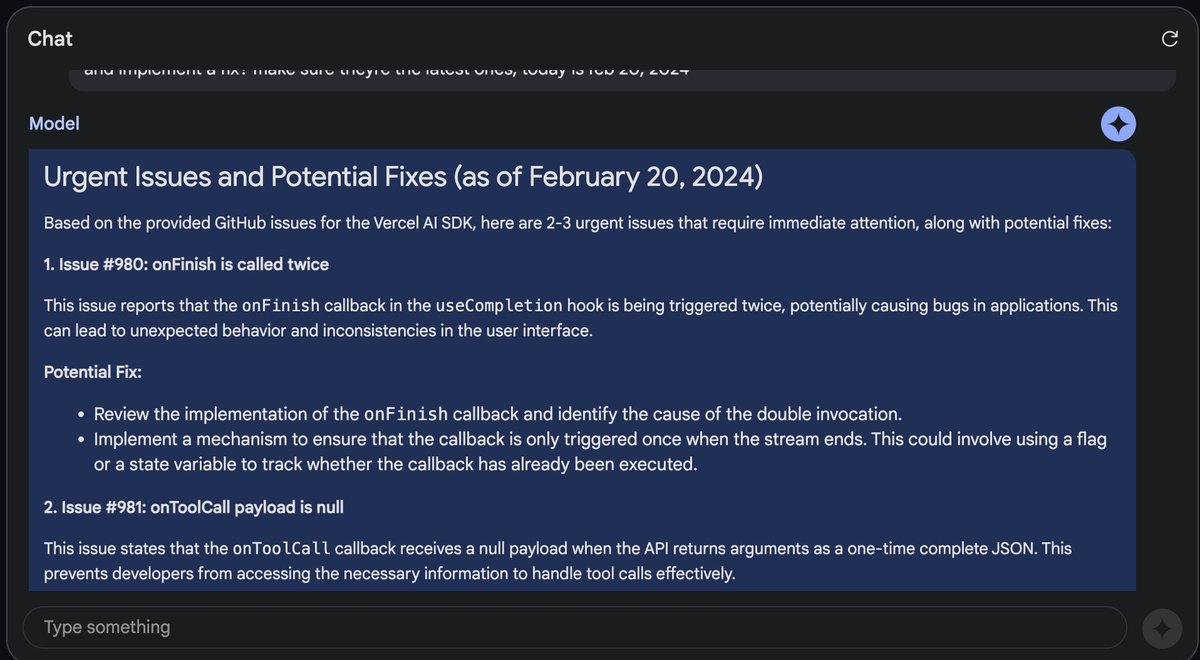

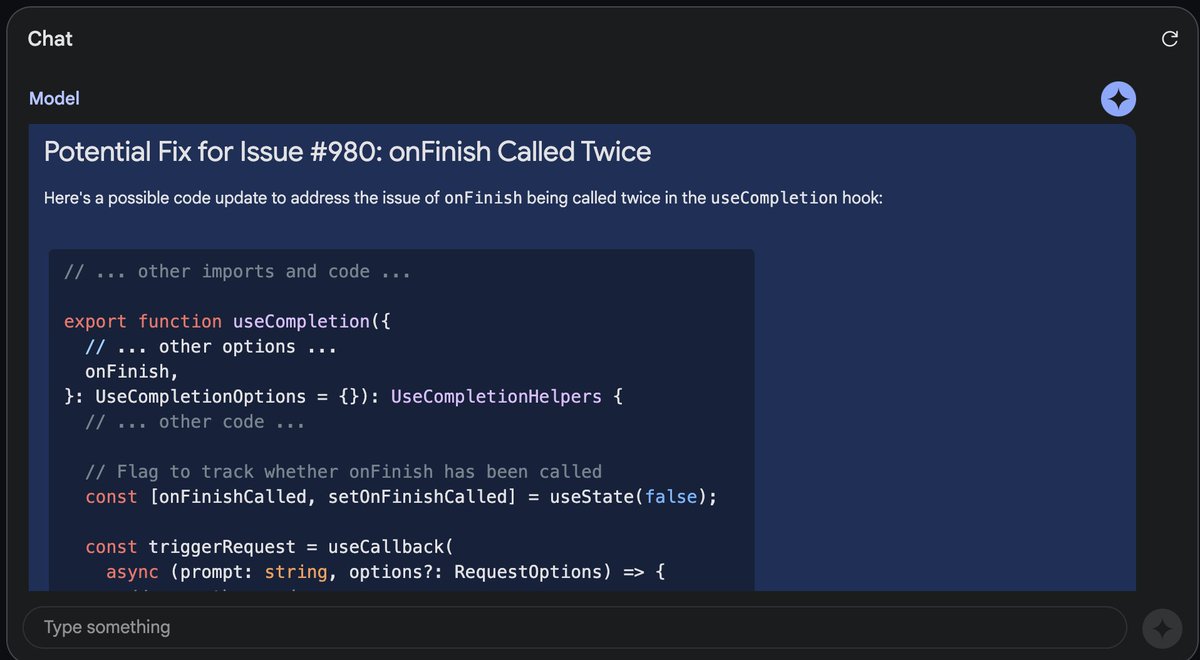

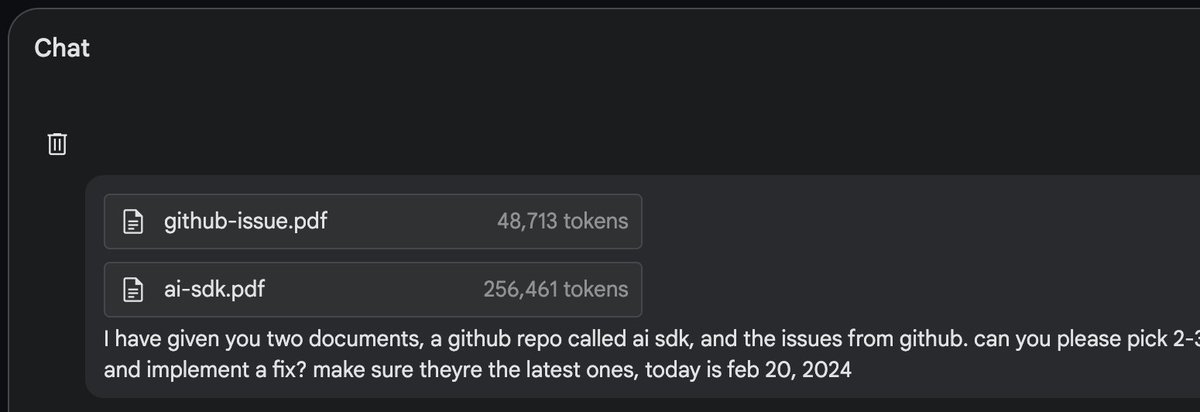

Gemini 1.5 pro is STILL under hyped

I uploaded an entire codebase directly from github, AND all of the issues (@vercel ai sdk)

Not only was it able to understand the entire codebase, it identified the most urgent issue, and IMPLEMENTED a fix.

This changes everything

Stable Cascade: A New Image Generation Model by Stability AI - HyScaler

Stable Cascade is a new image generation model by Stability AI that promises to be faster and more powerful than its predecessor, Stable Diffusion.

Stable Cascade: A New Image Generation Model by Stability AI

Feb 14th 2024

Table of Contents

- What is Stable Cascade and how does it work?

- Where can I find Stable Cascade and what can I do with it?

- What are the challenges and opportunities for Stable Cascade and Stability AI?

Stability AI, a leading company in the field of text-to-image generation, has recently released a new model called Stable Cascade. This model claims to offer better performance and more features than its previous model, Stable Diffusion, which is widely used by other AI tools for creating images from text.

What is Stable Cascade and how does it work?

Stable Cascade is a text-to-image generation model that can produce realistic and diverse images from natural language prompts. It can also perform various image editing tasks, such as increasing the resolution of an existing image, modifying a specific part of an image, or creating a new image from the edges of another image.

Unlike Stable Diffusion, which runs a single diffusion model in one latent space, Stable Cascade consists of three models that work together using the Würstchen architecture. The first model to run, Stage C, takes the text prompt and generates a small, highly compressed latent code, which is a compact representation of the desired image.

The second model, Stage B, expands that compact code into a larger, less compressed latent. The third model, Stage A, decodes that latent into the final high-resolution image.

By splitting the text-to-image generation process into three stages, Stable Cascade reduces the memory and computational requirements and speeds up the image creation time. According to Stability AI, Stable Cascade can generate an image in about 10 seconds, compared to 22 seconds for the SDXL model, which is the largest version of Stable Diffusion.
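A rough way to see where the savings come from is to compare how many latent positions each approach has to process. The sketch below assumes an 8x spatial compression for SDXL's latent space and a compression factor of about 42 for Stable Cascade's most compressed stage; neither number appears in the article, so treat both as assumptions. The timing figures are the ones quoted above.

```python
def latent_side(image_side: int, compression: float) -> int:
    """Side length of the latent grid for a square image, rounded to the nearest integer."""
    return round(image_side / compression)


def latent_positions(image_side: int, compression: float) -> int:
    """Number of spatial positions in the latent grid."""
    side = latent_side(image_side, compression)
    return side * side


IMAGE_SIDE = 1024

# Assumptions, not from the article: spatial compression factors.
SDXL_COMPRESSION = 8
CASCADE_COMPRESSION = 42

sdxl_positions = latent_positions(IMAGE_SIDE, SDXL_COMPRESSION)        # 128 x 128 grid
cascade_positions = latent_positions(IMAGE_SIDE, CASCADE_COMPRESSION)  # 24 x 24 grid

# Timings quoted in the article, in seconds.
SDXL_SECONDS = 22
CASCADE_SECONDS = 10
speedup = SDXL_SECONDS / CASCADE_SECONDS

print(f"SDXL latent positions: {sdxl_positions}")
print(f"Stable Cascade latent positions: {cascade_positions}")
print(f"Quoted speedup: {speedup:.1f}x")
```

Under these assumptions the most compressed stage works on a grid with far fewer positions than SDXL's latent, which is consistent with the lower memory and compute cost described, even though the later stages add work of their own.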

Moreover, Stable Cascade also improves the quality and diversity of the generated images, as it can better align the image with the text prompt and produce more variations of the same image.

Where can I find Stable Cascade and what can I do with it?

Stable Cascade is currently available on GitHub for research purposes only, and not for commercial use. Stability AI has provided a Colab notebook that demonstrates how to use Stable Cascade for various image generation and editing tasks. You can also explore some examples of images generated by Stable Cascade on their website.

Stable Cascade is a versatile model that can be used for various applications, such as content creation, design, education, entertainment, and more. For instance, you can use Stable Cascade to generate images of fictional characters, landscapes, animals, logos, or anything else you can describe with text.

You can also use Stable Cascade to enhance or modify existing images, such as increasing their resolution, changing their style, adding or removing objects, or creating new images from their edges.

What are the challenges and opportunities for Stable Cascade and Stability AI?

Stable Cascade is a remarkable achievement by Stability AI, as it shows the potential of text-to-image generation models to create realistic and diverse images from natural language. However, Stable Cascade also faces some challenges and limitations, such as the quality and availability of the training data, the ethical and legal implications of generating images, and the competition from other companies and models.

Stability AI has been at the forefront of text-to-image generation as the company behind Stable Diffusion, a latent diffusion model that generates images by iteratively denoising a compressed latent representation. However, Stability AI has also been the target of several lawsuits accusing it of using copyrighted data without permission from the owners.

For example, Getty Images, a stock photo agency, has filed a lawsuit against Stability AI in the UK, alleging that Stability AI used millions of Getty Images’ photos to train Stable Diffusion. The trial is expected to take place in December.

Stability AI has also faced criticism for its pricing and licensing policies, as it charges a subscription fee for commercial use of its models, which some users and developers have found to be too expensive or restrictive. Stability AI has defended its decision, saying that it needs to generate revenue to support its research and development.

Stability AI is not the only organization working on image generation models: OpenAI, for example, has released its own models, such as DALL-E and iGPT. These models use different approaches and architectures, such as transformers and autoregressive models, to generate images.

These models also offer impressive results and features, such as generating images from multiple text prompts or generating text from images.

Therefore, Stability AI will have to compete with these models while keeping up with the rapid advances in text-to-image generation. However, Stability AI also has the opportunity to collaborate with other researchers and developers, and to leverage its experience with Stable Diffusion to create more powerful and useful models for image generation and editing.