Google has released and updated its tools for music creation and lyrics generation. The tools are available in AI Test Kitchen, its app for experimental AI projects.

techcrunch.com

Google releases GenAI tools for music creation

Kyle Wiggers @kyle_l_wiggers / 10:00 AM EST•February 1, 2024

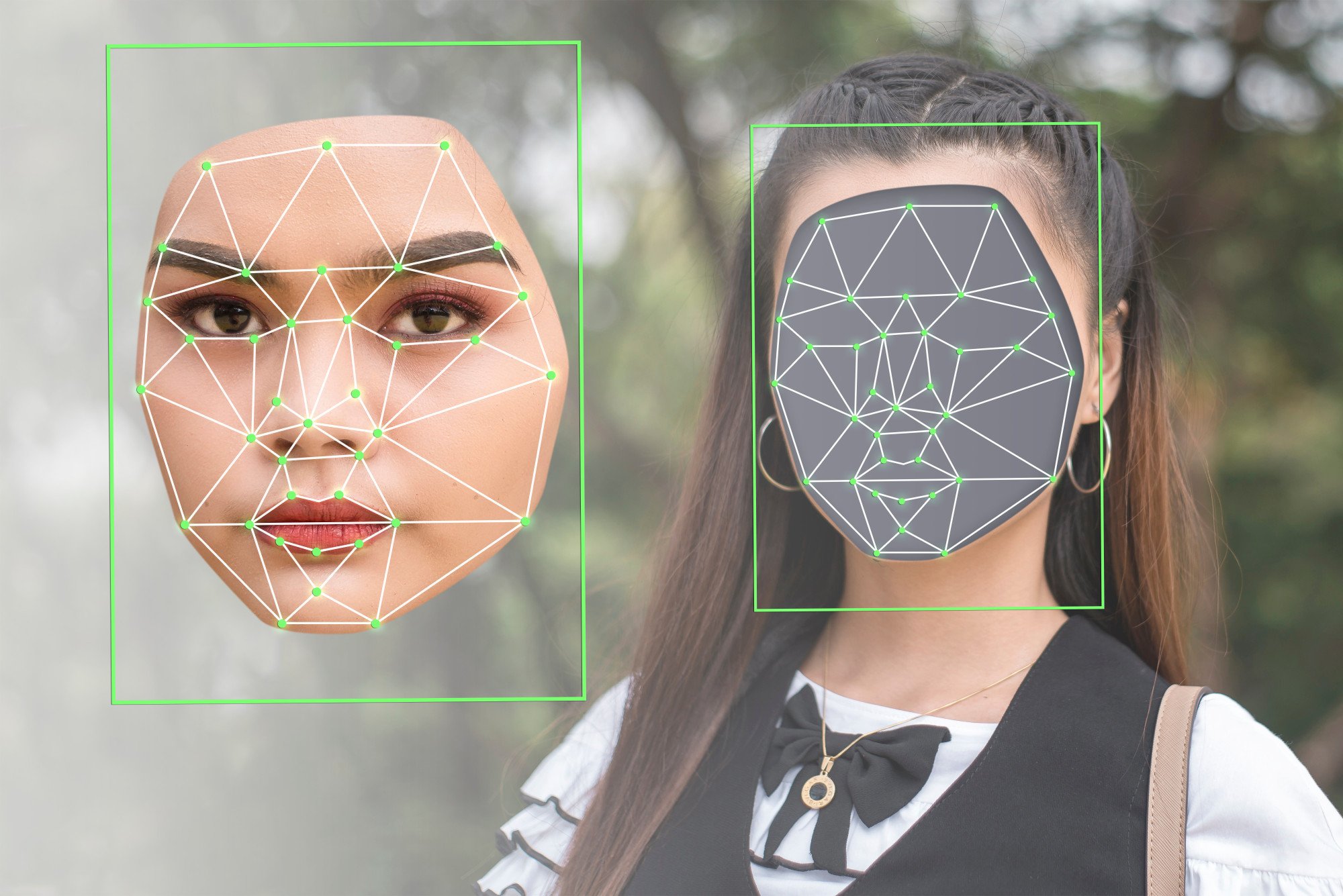

Image Credits: Artur Widak/NurPhoto / Getty Images

As GenAI tools begin to transform the music industry in incredible — and in some cases ethically problematic — ways, Google is ramping up its investments in AI tech to create new songs and lyrics.

The search giant today unveiled MusicFX, an upgrade to MusicLM, the music-generating tool Google released last year. MusicFX can create ditties up to 70 seconds in length and music loops, delivering what Google claims is “higher-quality” and “faster” music generation.

MusicFX is available in Google’s AI Test Kitchen, an app that lets users try out experimental AI-powered systems from the company’s labs. Technically, MusicFX launched for select users in December, but now it’s generally available.

Image Credits: Google

And it’s not terrible, I must say.

Like its predecessor, MusicFX lets users enter a text prompt (“two nylon string guitars playing in flamenco style”) to describe the song they wish to create. The tool generates two 30-second versions by default, with options to lengthen the tracks (to 50 or 70 seconds) or automatically stitch the beginning and end to loop them.

A new addition is suggestions for alternative descriptor words in prompts. For example, if you type “country style,” you might see a drop-down with genres like “rockabilly style” and “bluegrass style.” For the word “catchy,” the drop-down might contain “chill” and “melodic.”

Image Credits: Google

Below the field for the prompt, MusicFX provides a word cloud of additional recommendations for relevant descriptions, instruments and tempos to append (e.g. “avant garde,” “fast,” “exciting,” “808 drums”).

So how’s it sound? Well, in my brief testing, MusicFX’s samples were… fine? Truth be told, music generation tools are getting to the point where it’s tough for this writer to distinguish between the outputs. The current state of the art produces impressively clean, crisp-sounding tracks, but tracks tending toward the boring, uninspired and melodically unfocused.

Maybe it’s the SAD getting to me, but one of the prompts I went with was “a house music song with funky beats that’s danceable and uplifting, with summer rooftop vibes.” MusicFX delivered, and the tracks weren’t bad, but I can’t say that they come close to any of the better DJ sets I’ve heard recently.

Listen for yourself:

https://techcrunch.com/wp-content/u..._music_song_with_funky_beats_thats_da.mp3?_=1

https://techcrunch.com/wp-content/u...usic_song_with_funky_beats_thats_da-5.mp3?_=2

Anything with stringed instruments sounds worse, like a cheap MIDI sample — which is perhaps a reflection of MusicFX’s limited training set. Here are two tracks generated with the prompt “a soulful melody played on string instruments, orchestral, with a strong melodic core”:

https://techcrunch.com/wp-content/u...melody_played_on_string_instruments-1.mp3?_=3

https://techcrunch.com/wp-content/u...l_melody_played_on_string_instruments.mp3?_=4

And for a change of pace, here’s MusicFX’s interpretation of “a weepy song on guitar, melancholic, slow tempo, on a moonlight [sic] night.” (Forgive the spelling mistake.)

https://techcrunch.com/wp-content/u...y_song_on_guitar_melancholic_slow_tem.mp3?_=5

There are certain things MusicFX won’t generate, and certain things that can’t be removed from generated tracks. To avoid running afoul of copyrights, Google filters prompts that mention specific artists or include vocals. And it’s using SynthID, an inaudible watermarking technology its DeepMind division developed, to make it clear which tracks came from MusicFX.

I’m not sure what sort of master list Google’s using to filter out artists and song names, but I didn’t find it all that hard to defeat. While MusicFX declined to generate songs in the style of SZA and The Beatles, it happily took a prompt referencing Lake Street Dive, although the tracks weren’t anything to write home about, I will say.

Lyric generation

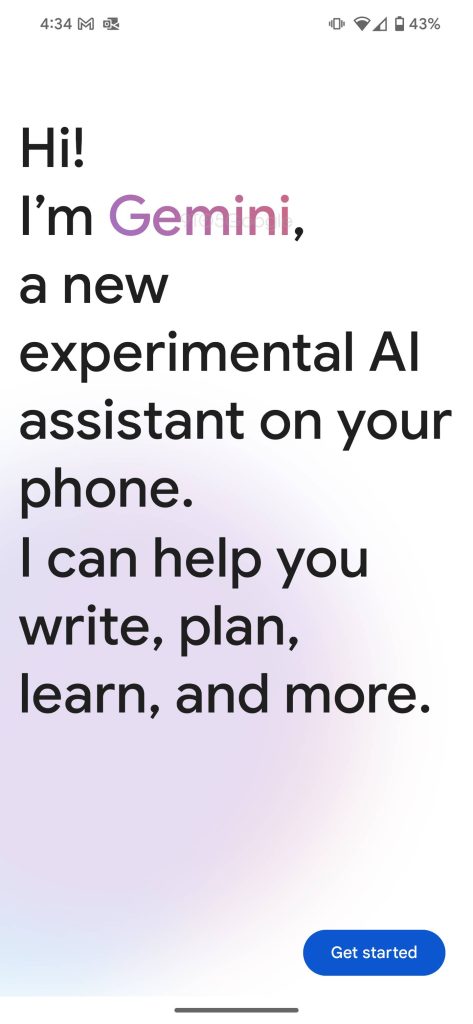

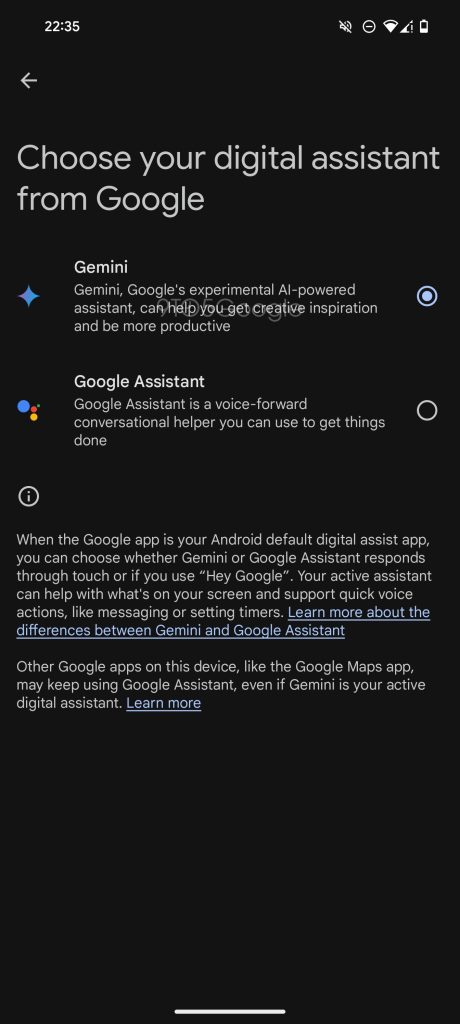

Google released a new lyrics generation tool, TextFX, in AI Test Kitchen that’s intended as a sort of companion to MusicFX. Like MusicFX, TextFX has been available to a small user cohort for some time — but it’s now more widely available, and upgraded in terms of “user experience and navigation,” Google says.

As Google explains in the AI Test Kitchen app, TextFX was created in collaboration with Lupe Fiasco, the rap artist and record producer. It’s powered by PaLM 2, one of Google’s text-generating AI models, and “[draws] inspiration from the lyrical and linguistic techniques [Fiasco] has developed throughout his career.”

Image Credits: Google

This reporter expected TextFX to be a more or less automated lyrics generator. But it’s certainly not that. Instead, TextFX is a suite of modules designed to aid in the lyrics-writing process, including a module that finds words in a category starting with a chosen letter and a module that finds similarities between two unrelated things.

Image Credits: Google

TextFX takes a while to get the hang of. But I can see it becoming a useful resource for lyricists — and writers in general, frankly.

You’ll want to closely review its outputs, though. Google warns that TextFX “may display inaccurate info, including about people,” and I indeed managed to prompt it to suggest that climate change “is a hoax perpetrated by the Chinese government to hurt American businesses.” Yikes.

Image Credits: Google

Questions remain

With MusicFX and TextFX, Google’s signaling that it’s heavily invested in GenAI music tech. But I wonder whether its preoccupation with keeping up with the Joneses rather than addressing the tough questions surrounding GenAI music will serve it well in the end.

Increasingly, homemade tracks that use GenAI to conjure familiar sounds and vocals that can be passed off as authentic, or at least close enough, have been going viral. Music labels have been quick to flag AI-generated tracks to streaming partners like Spotify and SoundCloud, citing intellectual property concerns. They’ve generally been victorious. But there’s still a lack of clarity on whether “deepfake” music violates the copyright of artists, labels and other rights holders.

A federal judge ruled in August that AI-generated art can’t be copyrighted. However, the U.S. Copyright Office hasn’t taken a stance yet, only recently beginning to seek public input on copyright issues as they relate to AI. Also unclear is whether users could find themselves on the hook for violating copyright law if they try to commercialize music generated in the style of another artist.

Google’s attempting to forge a careful path toward deploying GenAI music tools on the YouTube side of its business, which is testing AI models created by DeepMind in partnership with artists like Alec Benjamin, Charlie Puth, Charli XCX, Demi Lovato, John Legend, Sia and T-Pain. That’s more than can be said of some of the tech giant’s GenAI competitors, like Stability AI, which takes the position that “fair use” justifies training on content without the creator’s permission.

But with labels suing GenAI vendors over copyrighted lyrics in training data and artists registering their discontent, Google has its work cut out for it, and it’s not letting that inconvenient fact slow it down.