The A.I Megathread (LLM , GPT , Development)

[Submitted on 28 May 2022 (v1), last revised 13 Jun 2022 (this version, v2)]

Teaching Models to Express Their Uncertainty in Words

Stephanie Lin, Jacob Hilton, Owain Evans

We show that a GPT-3 model can learn to express uncertainty about its own answers in natural language -- without use of model logits. When given a question, the model generates both an answer and a level of confidence (e.g. "90% confidence" or "high confidence"). These levels map to probabilities that are well calibrated. The model also remains moderately calibrated under distribution shift, and is sensitive to uncertainty in its own answers, rather than imitating human examples. To our knowledge, this is the first time a model has been shown to express calibrated uncertainty about its own answers in natural language. For testing calibration, we introduce the CalibratedMath suite of tasks. We compare the calibration of uncertainty expressed in words ("verbalized probability") to uncertainty extracted from model logits. Both kinds of uncertainty are capable of generalizing calibration under distribution shift. We also provide evidence that GPT-3's ability to generalize calibration depends on pre-trained latent representations that correlate with epistemic uncertainty over its answers.

| Comments: | CalibratedMath tasks and evaluation code are available at this https URL |

| Subjects: | Computation and Language (cs.CL); Artificial Intelligence (cs.AI); Machine Learning (cs.LG) |

| Cite as: | arXiv:2205.14334 [cs.CL] |

| (or arXiv:2205.14334v2 [cs.CL] for this version) | |

| https://doi.org/10.48550/arXiv.2205.14334 |

Submission history

From: Stephanie Lin

[v1] Sat, 28 May 2022 05:02:31 UTC (2,691 KB)

[v2] Mon, 13 Jun 2022 05:04:53 UTC (2,691 KB)

How can AI better understand humans? Simple: by asking us questions

This could save enterprise software developers a lot of time when booting up LLM-powered chatbots for customer or employee-facing apps

Carl Franzen (@carlfranzen), October 31, 2023 1:35 PM

Anyone who has dealt in a customer-facing job — or even just worked with a team of more than a few individuals — knows that every person on Earth has their own unique, sometimes baffling, preferences.

Understanding the preferences of every individual is difficult even for us fellow humans. But what about for AI models, which have no direct human experience upon which to draw, let alone use as a frame-of-reference to apply to others when trying to understand what they want?

A team of researchers from leading institutions and the startup Anthropic, the company behind the large language model (LLM)/chatbot Claude 2, is working on this very problem and has come up with a seemingly obvious solution: get AI models to ask more questions of users to find out what they really want.

Entering a new world of AI understanding through GATE

Anthropic researcher Alex Tamkin, together with colleagues Belinda Z. Li and Jacob Andreas of the Massachusetts Institute of Technology’s (MIT’s) Computer Science and Artificial Intelligence Laboratory (CSAIL), along with Noah Goodman of Stanford, published a research paper earlier this month on their method, which they call “generative active task elicitation (GATE).”

Their goal? “Use [large language] models themselves to help convert human preferences into automated decision-making systems.”

In other words: take an LLM’s existing capability to analyze and generate text and use it to ask written questions of the user on their first interaction with the LLM. The LLM will then read and incorporate the user’s answers into its generations going forward, live on the fly, and (this is important) infer from those answers — based on what other words and concepts they are related to in the LLM’s database — as to what the user is ultimately asking for.

As the researchers write: “The effectiveness of language models (LMs) for understanding and producing free-form text suggests that they may be capable of eliciting and understanding user preferences.”

The three GATES

The method can be applied in various ways, according to the researchers:

- Generative active learning: The researchers describe this method as the LLM producing examples of the kind of responses it can deliver and asking how the user likes them. One example question they provide for an LLM to ask is: “Are you interested in the following article? The Art of Fusion Cuisine: Mixing Cultures and Flavors […] .” Based on what the user responds, the LLM will deliver more or less content along those lines.

- Yes/no question generation: This method is as simple as it sounds (and gets). The LLM will ask binary yes or no questions such as: “Do you enjoy reading articles about health and wellness?” and then take into account the user’s answers when responding going forward, avoiding information that it associates with those questions that received a “no” answer.

- Open-ended questions: Similar to the first method, but even broader. As the researchers write, the LLM will seek to obtain “the broadest and most abstract pieces of knowledge” from the user, including questions such as “What hobbies or activities do you enjoy in your free time […], and why do these hobbies or activities captivate you?”
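The three question styles share one mechanic: whatever the user answers is kept and fed back to the model when it later makes a decision. A minimal Python sketch of that bookkeeping is below. It is not the researchers' code: the `ElicitationTranscript` class, its method names, the prompt wording and the final candidate article title are all hypothetical, and the step that actually sends the prompt to an LLM is left out.

```python
from dataclasses import dataclass, field


@dataclass
class ElicitationTranscript:
    """Collects the question/answer pairs gathered from one user (hypothetical helper)."""
    task: str
    turns: list[tuple[str, str]] = field(default_factory=list)

    def record(self, question: str, answer: str) -> None:
        # Store each elicitation turn in the order it happened.
        self.turns.append((question, answer))

    def to_prompt(self, item: str) -> str:
        """Fold the elicited answers into a prompt for a later yes/no decision."""
        lines = [f"Task: {self.task}", "What the user has told us so far:"]
        for question, answer in self.turns:
            lines.append(f"Q: {question}")
            lines.append(f"A: {answer}")
        lines.append(f"Would this user want the following item? {item}")
        lines.append("Answer yes or no.")
        return "\n".join(lines)


transcript = ElicitationTranscript(task="content recommendation")
# The two questions are the examples quoted in the article; the answers are made up.
transcript.record("Do you enjoy reading articles about health and wellness?", "no")
transcript.record(
    "Are you interested in the following article? "
    "The Art of Fusion Cuisine: Mixing Cultures and Flavors",
    "yes",
)
# The candidate item below is an invented title used only for illustration.
prompt = transcript.to_prompt("Ten Weeknight Recipes That Blend Thai and Mexican Cooking")
print(prompt)
```

Keeping the raw questions and answers, rather than a hand-written summary of them, leaves the inference about what the user wants to the model, which is the point the article makes about GATE.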

Promising results

The researchers tried out the GATE method in three domains: content recommendation, moral reasoning and email validation.

By fine-tuning GPT-4, from Anthropic’s rival OpenAI, and recruiting 388 paid participants at $12 per hour to answer questions from GPT-4 and grade its responses, the researchers discovered GATE often yields more accurate models than baselines while requiring comparable or less mental effort from users.

Specifically, they discovered that the GPT-4 fine-tuned with GATE did a better job at guessing each user’s individual preferences in its responses by about 0.05 points of significance when subjectively measured, which sounds like a small amount but is actually a lot when starting from zero, as the researchers’ scale does.

Ultimately, the researchers state that they “presented initial evidence that LMs can successfully implement GATE to elicit human preferences (sometimes) more accurately and with less effort than supervised learning, active learning, or prompting-based approaches.”

This could save enterprise software developers a lot of time when booting up LLM-powered chatbots for customer or employee-facing applications. Instead of training them on a corpus of data and trying to use that to ascertain individual customer preferences, fine-tuning their preferred models to perform the Q/A dance specified above could make it easier for them to craft engaging, positive, and helpful experiences for their intended users.

So, if your favorite AI chatbot of choice begins asking you questions about your preferences in the near future, there’s a good chance it may be using the GATE method to try and give you better responses going forward.

LLMs have not learned our language — we’re trying to learn theirs

The limits of large language models (LLMs) have given rise to a trend of research in which we are learning the language of LLMs and discovering ways to better communicate with them.

Ben Dickson (@BenDee983), August 30, 2022 11:40 AM

Large language models (LLMs) are currently a red-hot area of research in the artificial intelligence (AI) community. Scientific progress in LLMs in the past couple of years has been nothing short of impressive, and at the same time, there is growing interest and momentum to create platforms and products powered by LLMs.

However, in tandem with advances in the field, the shortcomings of large language models have also become evident. Many experts agree that no matter how large LLMs and their training datasets become, they will never be able to learn and understand our language as we do.

Interestingly, these limits have given rise to a trend of research focused on studying the knowledge and behavior of LLMs. In other words, we are learning the language of LLMs and discovering ways to better communicate with them.

What LLMs can’t learn

LLMs are neural networks that have been trained on hundreds of gigabytes of text gathered from the web. During training, the network is fed with text excerpts that have been partially masked. The neural network tries to guess the missing parts and compares its predictions with the actual text. By doing this repeatedly and gradually adjusting its parameters, the neural network creates a mathematical model of how words appear next to each other and in sequences.

After being trained, the LLM can receive a prompt and predict the words that come after it. The larger the neural network, the more learning capacity the LLM has. The larger the dataset (given that it contains well-curated and high-quality text), the greater chance that the model will be exposed to different word sequences and the more accurate it becomes in generating text.
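The idea of "a mathematical model of how words appear next to each other" can be shown at toy scale with a bigram counter. The sketch below is only an illustration and not how an LLM is built: real models are neural networks with learned parameters, while this one just counts which word follows which in a made-up corpus. The function names and the corpus are assumptions.

```python
from collections import Counter, defaultdict

# A tiny made-up corpus; real training data runs to hundreds of gigabytes.
corpus = "the cat sat on the mat . the cat ate the fish ."


def train_bigram(text):
    """Count, for every word, which words follow it and how often."""
    counts = defaultdict(Counter)
    tokens = text.split()
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


model = train_bigram(corpus)
print(predict_next(model, "the"))  # prints "cat" ("the" is followed by "cat" twice)
```

More text means more word sequences are observed, which is the same intuition the article gives for why larger datasets help.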

However, human language is about much more than just text. In fact, language is a compressed way to transmit information from one brain to another. Our conversations often omit shared knowledge, such as visual and audible information, physical experience of the world, past conversations, our understanding of the behavior of people and objects, social constructs and norms, and much more.

As Yann LeCun, VP and chief AI scientist at Meta and award-winning deep learning pioneer, and Jacob Browning, a post-doctoral associate in the NYU Computer Science Department, wrote in a recent article, “A system trained on language alone will never approximate human intelligence, even if trained from now until the heat death of the universe.”

The two scientists note, however, that LLMs “will undoubtedly seem to approximate [human intelligence] if we stick to the surface. And, in many cases, the surface is enough.”

The key is to understand how close this approximation is to reality, and how to make sure LLMs are responding in the way we expect them to. Here are some directions of research that are shaping this corner of the widening LLM landscape.

Teaching LLMs to express uncertainty

In most cases, humans know the limits of their knowledge (even if they don’t directly admit it). They can express uncertainty and doubt and let their interlocutors know how confident they are in the knowledge they are passing. On the other hand, LLMs always have a ready answer for any prompt, even if their output doesn’t make sense. Neural networks usually provide numerical values that represent the probability that a certain prediction is correct. But for language models, these probability scores do not represent the LLM’s confidence in the reliability of its response to a prompt.

A recent paper by researchers at OpenAI and the University of Oxford shows how this shortcoming can be remedied by teaching LLMs “to express their uncertainty in words.”

They show that LLMs can be fine-tuned to express epistemic uncertainty using natural language, which they describe as “verbalized probability.” This is an important direction of development, especially in applications where users want to turn LLM output into actions.

The researchers suggest that expressing uncertainty can make language models honest. “If an honest model has a misinformed or malign internal state, then it could communicate this state to humans who can act accordingly,” they write.
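To make "verbalized probability" concrete, here is a rough Python sketch of how a stated confidence could be pulled out of a model's text and scored against whether the answer was right. It uses mean squared error between the stated probability and correctness (the Brier score), which is one common calibration measure and is an assumption here, not necessarily the paper's exact evaluation. The sample outputs, the output format and both function names are invented for illustration.

```python
import re


def parse_confidence(text):
    """Extract a verbalized confidence such as '90% confidence' as a probability."""
    match = re.search(r"(\d{1,3})\s*%", text)
    if match is None:
        return None
    # Clamp to 100 so a malformed value such as "250%" cannot exceed probability 1.
    return min(int(match.group(1)), 100) / 100.0


def mean_squared_calibration_error(records):
    """records: iterable of (stated probability, whether the answer was correct)."""
    records = list(records)
    return sum((p - float(ok)) ** 2 for p, ok in records) / len(records)


# Made-up model outputs paired with made-up correctness labels.
outputs = [
    ("Answer: 1042. Confidence: 90% confidence", True),
    ("Answer: 77. Confidence: 60% confidence", False),
    ("Answer: 12. Confidence: 20% confidence", False),
]
records = [(parse_confidence(text), ok) for text, ok in outputs]
print(mean_squared_calibration_error(records))
```

A lower score means the stated confidences track actual correctness more closely; a model that says "90%" should be right about nine times out of ten.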

Discovering emergent abilities of LLMs

Scale has been an important factor in the success of language models. As models become larger, not only does their performance improve on existing tasks, but they acquire the capacity to learn and perform new tasks.

In a new paper, researchers at Google, Stanford University, DeepMind, and the University of North Carolina at Chapel Hill have explored the “emergent abilities” of LLMs, which they define as abilities that “are not present in smaller models but are present in larger models.”

Emergence is characterized by the model manifesting random performance on a certain task until it reaches a certain scale threshold, after which its performance suddenly jumps and continues to improve as the model becomes larger.

The paper covers emergent abilities in several popular LLM families, including GPT-3, LaMDA, Gopher, and PaLM. The study of emergent abilities is important because it provides insights into the limits of language models at different scales. It can also help find ways to improve the capabilities of the smaller and less costly models.

Exploring the limits of LLMs in reasoning

Given the ability of LLMs to generate articles, write software code, and hold conversations about sentience and life, it is easy to think that they can reason and plan things like humans.

But a study by researchers at Arizona State University, Tempe, shows that LLMs do not acquire the knowledge and functions underlying tasks that require methodical thinking and planning, even when they perform well on benchmarks designed for logical, ethical and common-sense reasoning.

The study shows that what looks like planning and reasoning in LLMs is, in reality, pattern recognition abilities gained from continued exposure to the same sequence of events and decisions. This is akin to how humans acquire some skills (such as driving), where they first require careful thinking and coordination of actions and decisions but gradually become able to perform them without active thinking.

The researchers have established a new benchmark that tests reasoning abilities on tasks that stretch across long sequences and can’t be cheated through pattern-recognition tricks. The goal of the benchmark is to establish the current baseline and open new windows for developing planning and reasoning capabilities for current AI systems.

Guiding LLMs with better prompts

As the limits of LLMs become known, researchers find ways to either extend or circumvent them. In this regard, an interesting area of research is “prompt engineering,” a series of tricks that can improve the performance of language models on specific tasks. Prompt engineering guides LLMs by including solved examples or other cues in prompts.

One such technique is “chain-of-thought prompting” (CoT), which helps the model solve logical problems by providing a prompt that includes a solved example with intermediary reasoning steps. CoT prompting not only improves LLMs’ abilities to solve reasoning tasks, but it also gets them to output the steps they undergo to solve each problem. This helps researchers gain insights into LLMs’ reasoning process (or semblance of reasoning).

A more recent technique that builds on the success of CoT is “zero-shot chain-of-thought prompting,” which uses special trigger phrases such as “Let’s think step by step” to invoke reasoning in LLMs. The advantage of zero-shot CoT is that it does not require the user to craft a special prompt for each task, and although it is simple, it still works well enough in many cases.
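The difference between the two prompting styles is easiest to see side by side. The Python sketch below only builds the prompt strings; sending them to a model is left out. The "Q:/A:" layout, the function names and the worked arithmetic example are assumptions chosen for illustration, and only the trigger phrase comes from the article.

```python
def few_shot_cot_prompt(question, worked_example):
    """Chain-of-thought prompt: one solved example with reasoning, then the new question."""
    example_question, example_reasoning, example_answer = worked_example
    return (
        f"Q: {example_question}\n"
        f"A: {example_reasoning} The answer is {example_answer}.\n\n"
        f"Q: {question}\n"
        f"A:"
    )


def zero_shot_cot_prompt(question, trigger="Let's think step by step."):
    """Zero-shot CoT: no solved example, only a trigger phrase after the question."""
    return f"Q: {question}\nA: {trigger}"


# A made-up worked example with its intermediate reasoning steps.
example = (
    "A shop has 3 boxes with 4 pens each. How many pens are there?",
    "Each box holds 4 pens and there are 3 boxes, so 3 * 4 = 12.",
    "12",
)
new_question = "A shelf has 5 rows with 6 books each. How many books are there?"

print(few_shot_cot_prompt(new_question, example))
print()
print(zero_shot_cot_prompt(new_question))
```

The few-shot version needs a hand-written example for each kind of task, while the zero-shot version reuses the same trigger phrase everywhere, which is the trade-off described above.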

These and similar works of research show that we still have a lot to learn about LLMs, and there might be more to be discovered about the language models that have captured our fascination in the past few years.

[Submitted on 29 May 2023 (v1), last revised 30 May 2023 (this version, v2)]

Do Large Language Models Know What They Don't Know?

Zhangyue Yin, Qiushi Sun, Qipeng Guo, Jiawen Wu, Xipeng Qiu, Xuanjing Huang

Large language models (LLMs) have a wealth of knowledge that allows them to excel in various Natural Language Processing (NLP) tasks. Current research focuses on enhancing their performance within their existing knowledge. Despite their vast knowledge, LLMs are still limited by the amount of information they can accommodate and comprehend. Therefore, the ability to understand their own limitations on the unknowns, referred to as self-knowledge, is of paramount importance. This study aims to evaluate LLMs' self-knowledge by assessing their ability to identify unanswerable or unknowable questions. We introduce an automated methodology to detect uncertainty in the responses of these models, providing a novel measure of their self-knowledge. We further introduce a unique dataset, SelfAware, consisting of unanswerable questions from five diverse categories and their answerable counterparts. Our extensive analysis, involving 20 LLMs including GPT-3, InstructGPT, and LLaMA, discovers an intrinsic capacity for self-knowledge within these models. Moreover, we demonstrate that in-context learning and instruction tuning can further enhance this self-knowledge. Despite this promising insight, our findings also highlight a considerable gap between the capabilities of these models and human proficiency in recognizing the limits of their knowledge.

| Comments: | 10 pages, 9 figures, accepted by Findings of ACL2023 |

| Subjects: | Computation and Language (cs.CL) |

| Cite as: | arXiv:2305.18153 [cs.CL] |

| (or arXiv:2305.18153v2 [cs.CL] for this version) | |

| [2305.18153] Do Large Language Models Know What They Don't Know? |

Submission history

From: Zhangyue Yin

[v1] Mon, 29 May 2023 15:30:13 UTC (7,214 KB)

[v2] Tue, 30 May 2023 15:14:06 UTC (7,214 KB)

https://arxiv.org/pdf/2305.18153.pdf
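For a feel of what automatically detecting uncertainty in responses and scoring self-knowledge might look like, here is a deliberately simple Python sketch. It is a stand-in, not the paper's method: it flags a response as uncertain by plain phrase matching and summarizes the result with an F1 score over the unanswerable questions. The phrase list, the sample responses and the function names are all assumptions.

```python
# Hypothetical phrase list; a real detector would need something far more robust.
UNCERTAIN_PHRASES = (
    "i don't know",
    "it is unknown",
    "cannot be answered",
    "no definitive answer",
    "is uncertain",
)


def expresses_uncertainty(response):
    """Flag a response that contains any of the uncertainty phrases."""
    lowered = response.lower()
    return any(phrase in lowered for phrase in UNCERTAIN_PHRASES)


def f1_on_unanswerable(examples):
    """examples: iterable of (model response, whether the question was unanswerable)."""
    tp = fp = fn = 0
    for response, is_unanswerable in examples:
        flagged = expresses_uncertainty(response)
        if flagged and is_unanswerable:
            tp += 1
        elif flagged and not is_unanswerable:
            fp += 1
        elif not flagged and is_unanswerable:
            fn += 1
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Made-up responses: two to unanswerable questions, two to answerable ones.
examples = [
    ("There is no definitive answer to what happens after death.", True),
    ("The universe will end in exactly two trillion years.", True),
    ("Paris is the capital of France.", False),
    ("I don't know who wrote Hamlet.", False),
]
print(f1_on_unanswerable(examples))  # one true positive, one false positive, one miss
```

A model with good self-knowledge would express uncertainty on the unanswerable questions and answer the rest plainly, pushing both precision and recall up.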

Midjourney’s new style tuner is here. Here’s how to use it.

Carl Franzen (@carlfranzen), November 2, 2023 10:36 AM

Midjourney is one of the most popular AI art and text-to-image generators, generating high-quality photorealistic and cinematic works from users’ prompts typed in plain English that have already wound up on TV and in cinemas (as well as on VentureBeat, where we use it along with other tools for article art).

Conceived by former Magic Leap programmer David Holz and launched in the summer of 2022, it has since attracted a community of more than 16 million users in its server on the separate messaging app Discord, and has been steadily updated by a small team of programmers with new features including panning, vary region and an anime-focused mobile app.

But its latest update launched on the evening of Nov. 1, 2023 — called the style tuner — is arguably the most important yet for enterprises, brands and creators looking to tell cohesive stories in the same style. That’s because Midjourney’s new style tuner allows users to generate their unique visual style and apply it to any and potentially all images generated in the application going forward.

Before style tuning, users had to repeat their text descriptions to generate consistent styles across multiple images — and even this was no guarantee, since Midjourney, like most AI art generators, is built to offer a functionally infinite variety of image styles and types.

Now, instead of relying on their language, users can select between a variety of styles and obtain a code to apply to all their works going forward, keeping them in the same aesthetic family. Midjourney users can also elect to copy and paste their code elsewhere to save it and reference it going forward, or even share it with other Midjourney users in their organization to allow them to generate images in that same style. This is huge for enterprises, brands, and anyone seeking to work on group creative projects in a unified style. Here’s how it works:

Where to find Midjourney’s style tuner

Going into the Midjourney Discord server, the user can simply type “/tune” followed by their prompt to begin the process of tuning their styles.

For example, let’s say I want to update the background imagery of my product or service website for the winter to include more snowy scenes and cozy spaces.

I can type in a single prompt idea I have — “a robot wears a cozy sweater and sits in front of a fireplace drinking hot chocolate out of a mug” — after the “/tune,” like this: “/tune a robot wears a cozy sweater and sits in front of a fireplace drinking hot chocolate out of a mug.”

Midjourney’s Discord bot responds with a large automatic message explaining the style-tuning process at a high level and asking if the user wants to continue. The process requires a paid Midjourney subscription plan (they start at $10 per month paid monthly or $96 per year up-front) and uses up some of the fast hours GPU credits that come with each plan (and vary depending on the plan tier level, with more expensive plans granting more fast hours GPU credits). These credits are used for generating images more rapidly than the “relaxed” mode.

Selecting style directions and mode and what they mean

This message includes two drop-down menus allowing the user to select different options: the number of “style directions” (16, 32, 64, or 128) and the “mode” (default or raw).

The “style directions” setting indicates how many different images Midjourney will generate from the user’s prompts, each one showing a distinctly different style. The user will then have the chance to choose their style from between these images, or combine the resulting images to create a new meta-style based on several of them.

Importantly, the different numbers of images produced by the different style direction options each cost a different amount of fast hours GPU credits. For instance, 16 style directions use up 0.15 fast hours of GPU credits, while 128 style directions use up 1.2 credits. So the user should think hard and discerningly about how many different styles they want to generate and whether they want to spend all those credits.
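The two figures quoted above (0.15 fast hours for 16 directions, 1.2 for 128) are consistent with the cost scaling in direct proportion to the number of style directions. The short Python sketch below works that out for the in-between options. The proportional rule is an assumption inferred from those two data points, not a published price list, and the function name is made up.

```python
# The four menu options named in the article.
STYLE_DIRECTION_OPTIONS = (16, 32, 64, 128)


def estimated_fast_hours(style_directions):
    """Estimate fast-hour cost, ASSUMING cost grows in proportion to style directions."""
    if style_directions not in STYLE_DIRECTION_OPTIONS:
        raise ValueError(f"expected one of {STYLE_DIRECTION_OPTIONS}")
    # 16 directions cost 0.15 fast hours per the article; scale from there.
    return 0.15 * style_directions / 16


for option in STYLE_DIRECTION_OPTIONS:
    print(option, round(estimated_fast_hours(option), 2))
```

Under that assumption the 32 and 64 options would cost roughly 0.3 and 0.6 fast hours; the confirmation message Midjourney shows before generating remains the authoritative number.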

Meanwhile, the “mode” setting is binary, allowing the user to choose between default or raw, referencing how candid and grainy the photos will appear. Raw images are meant to look more like a film or DSLR camera and as such, may be more photorealistic, but also contain artifacts that the default, sanitized and smooth mode does not.

In our walkthrough for this article, VentureBeat selected 16 style directions and default mode. In our tests, and those reported by several users online, Midjourney was erroneously giving users one additional level up of style directions than they asked for — so in our case, we got 32 even though we asked for 16.

After selecting your mode and style directions, the Midjourney bot will ask you if you are sure you want to continue and show you again how many credits you’re using up, and if you press the green button, you can continue. The process can take up to 2 minutes.

Where to find the different styles to choose from

After Midjourney finishes processing your style tuner options, the bot should respond with a message saying “Style Tuner Ready! Your custom style tuner has finished generating. You can now view, share and generate styles here:” followed by a URL to the Midjourney Tuner website (the domain is tuner.midjourney.com).

The resulting URL should contain a random string of letters and numbers at the end. We’ve removed ours for security purposes in the screenshot below.

Clicking the URL takes the user out of the Discord app and onto the Midjourney website in your browser.

There, the user will see a customized yet default message from Midjourney showing the user’s prompt language and explaining how to finish the tuning process. Namely, Midjourney asks the user to select between two different options with labeled buttons: “Compare two styles at a time” or “Pick your favorite from a big grid.”

In the first instance, “compare two styles at a time,” Midjourney displays the resulting grid of whatever number of images you selected previously in the style directions option in Discord in rows of two. In our case, that’s 16 rows. However, each row contains two 2×2 image grids, so 8 images per row.

The user can then choose one 2×2 grid from each row, of however many rows they would like, and Midjourney will make a style informed by the combination of those grids. You can tell which grid is selected by the white outline that appears around it.

So, if I chose the image on the right from the first row, and the image on the left from the bottom row, Midjourney would apply both of those image styles into a combined style and the user could apply that combined style to all images going forward. As Midjourney notes on the bottom of this selection page, selecting more choices from each row results in a more “nuanced and aligned” style while selecting only a few options will result in a “bold style.”

The second option, “Pick your favorite from a big grid,” lets the user choose just one image from the entire grid of all images generated according to the number of style directions the user set previously. In our case for this article, that’s a total of 32 images arranged in an 8×4 grid. This option is more precise and less ambiguous than the “compare two styles” option, but also more limiting as a result.

In our case, for this article, we will select the “compare two styles at a time,” select 5 grids total and leave it to the algorithms to decide what the combined style looks like.

Applying your freshly tuned style going forward to new images and prompts

Whatever number of rows or images a user selects to base their style on, Midjourney will automatically apply that style and turn it into a shortcode of numerals and letters that the user can manually copy and paste for all prompts going forward. That shortcode appears in several places at the bottom of the user’s unique Style Tuner page, both in a section marked “Your code is:” followed by the code, and then also in a sample prompt based on the original the user provided at the very bottom in a persistent overlay chyron element.

The user can then either copy this code and save it somewhere, or copy their entire original prompt with the code added from the bottom chyron. You can also redo this whole style by pressing the small “refresh” icon at the bottom (circular arrows).

Then, the user will need to return to the Midjourney Discord server and paste the code in after their prompt as follows: “/imagine a robot wears a cozy sweater and sits in front of a fireplace drinking hot chocolate out of a mug --style [INSERT STYLE CODE HERE]”

Here’s our resulting 2×2 grid of images using the original prompt and our freshly generated style:

We like the fourth one best, so we will select that one to upscale by clicking “U4” and voila, there is our resulting cozy robot drinking hot chocolate by the fireplace!

Now let’s apply the same style to a new prompt by copying and pasting/manually adding the “--style” language to the end of our new prompt, like so: “a robot family opens presents --style [INSERT STYLE CODE HERE]” Here’s the result (after choosing one from our 2×2 grid):

Not bad! Note this is after a few regenerations going back and forth. The style code also works alongside other parameters in your prompt, including aspect ratio/dimensions. Here’s a 16:9 version using the same prompt but written like so: “a robot family opens presents --ar 16:9 --style [INSERT STYLE CODE HERE]”

Cute but a little wonky. We might suggest continuing to refine this one.
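For teams that want every prompt to carry the same style code, a small helper can assemble the text before it is pasted into Discord. The Python sketch below assumes Midjourney's double-hyphen parameter form (`--style`, `--ar`) and the ordering used in the walkthrough above. The function name is made up and `abc123` is a placeholder, not a real style code.

```python
def build_prompt(description, style_code, aspect_ratio=None):
    """Append an optional aspect ratio and a saved style code to a prompt description."""
    parts = [description.strip()]
    if aspect_ratio:
        parts.append(f"--ar {aspect_ratio}")
    parts.append(f"--style {style_code}")
    return " ".join(parts)


SHARED_STYLE = "abc123"  # placeholder; use the code shown on your Style Tuner page

print(build_prompt("a robot family opens presents", SHARED_STYLE))
print(build_prompt("a robot family opens presents", SHARED_STYLE, aspect_ratio="16:9"))
```

Storing the code in one place, as `SHARED_STYLE` does here, is one simple way to share a single look across a group project.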

Microsoft unveils ‘LeMa’: A revolutionary AI learning method mirroring human problem solving

Microsoft's groundbreaking AI learning method, LeMa, trains machines to learn from their mistakes, enhancing problem-solving abilities and potentially revolutionizing sectors like healthcare, finance, and autonomous vehicles.

Michael Nuñez (@MichaelFNunez), November 2, 2023 2:21 PM

Researchers from Microsoft Research Asia, Peking University, and Xi’an Jiaotong University have developed a new technique to improve large language models’ (LLMs) ability to solve math problems by having them learn from their mistakes, akin to how humans learn.

The researchers have revealed a pioneering strategy, Learning from Mistakes (LeMa), which trains AI to correct its own mistakes, leading to enhanced reasoning abilities, according to a research paper published this week.

The researchers drew inspiration from human learning processes, where a student learns from their mistakes to improve future performance.

“Consider a human student who failed to solve a math problem, he will learn from what mistake he has made and how to correct it,” the authors explained. They then applied this concept to LLMs, using mistake-correction data pairs generated by GPT-4 to fine-tune them.

How LeMa works to enhance math reasoning

The researchers first had models like LLaMA-2 generate flawed reasoning paths for math word problems. GPT-4 then identified errors in the reasoning, explained them and provided corrected reasoning paths. The researchers used the corrected data to further train the original models.

The results of this new approach are significant. “Across five backbone LLMs and two mathematical reasoning tasks, LeMa consistently improves the performance compared with fine-tuning on CoT data alone,” the researchers explain.
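The data-generation step described above can be pictured as turning each flawed solution plus its correction into one fine-tuning example. The Python sketch below shows one possible shape for such a mistake-correction pair. The `Correction` class, the field names, the prompt wording and the sample problem are all hypothetical; the authors' actual data format is in their released code, which is not reproduced here.

```python
from dataclasses import dataclass


@dataclass
class Correction:
    """What the corrector model returns for one flawed solution (hypothetical shape)."""
    mistake_step: int
    explanation: str
    corrected_solution: str


def to_training_example(question, flawed_steps, correction):
    """Pack a question, a flawed reasoning path and its correction into one example."""
    numbered = "\n".join(f"Step {i}: {step}" for i, step in enumerate(flawed_steps, 1))
    prompt = (
        f"Question: {question}\n"
        f"Incorrect solution:\n{numbered}\n"
        "Identify the mistake, explain it, and give a corrected solution."
    )
    completion = (
        f"Mistake in step {correction.mistake_step}: {correction.explanation}\n"
        f"Corrected solution: {correction.corrected_solution}"
    )
    return {"prompt": prompt, "completion": completion}


# A made-up word problem with a made-up flawed solution and correction.
sample = to_training_example(
    question="Tom has 3 bags with 5 apples each and eats 2. How many are left?",
    flawed_steps=["3 + 5 = 8 apples in total.", "8 - 2 = 6 apples left."],
    correction=Correction(
        mistake_step=1,
        explanation="The bags should be multiplied, not added: 3 * 5 = 15.",
        corrected_solution="3 * 5 = 15 apples, then 15 - 2 = 13 apples left.",
    ),
)
print(sample["prompt"])
print(sample["completion"])
```

Examples of this kind would then be mixed with ordinary chain-of-thought data for fine-tuning, which is the comparison the quoted result refers to.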

LeMa yields impressive results on challenging datasets

What’s more, specialized LLMs like WizardMath and MetaMath also benefited from LeMa, achieving 85.4% pass@1 accuracy on GSM8K and 27.1% on MATH. These results surpass the state-of-the-art performance achieved by non-execution open-source models on these challenging tasks.

This breakthrough signifies more than just an enhancement in the reasoning capability of AI models. It also marks a significant step towards AI systems that can learn and improve from their mistakes, much like humans do.
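As commonly used, pass@1 is the share of problems a model answers correctly when it gets a single attempt at each. The Python sketch below computes it by exact match on the final answer. The function name and the sample answers are made up, and real evaluation scripts usually normalize answers more carefully than a plain string comparison.

```python
def pass_at_1(predictions, references):
    """Fraction of problems whose single predicted answer matches the reference."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not references:
        raise ValueError("need at least one problem")
    correct = sum(p.strip() == r.strip() for p, r in zip(predictions, references))
    return correct / len(references)


# Made-up final answers for four problems; the second prediction is wrong.
predicted = ["18", "5", "72", "10"]
expected = ["18", "3", "72", "10"]
print(pass_at_1(predicted, expected))  # prints 0.75
```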

Broad Implications and Future Directions

The team’s research, including their code, data, and models, is now publicly available on GitHub. This open-source approach encourages the broader AI community to continue this line of exploration, potentially leading to further advancements in machine learning.

The advent of LeMa represents a major milestone in AI, suggesting that machine learning (ML) processes can be made more akin to human learning. This development could revolutionize sectors heavily reliant on AI, such as healthcare, finance, and autonomous vehicles, where error correction and continuous learning are critical.

As the AI field continues to evolve rapidly, the integration of human-like learning processes, such as learning from mistakes, appears to be an essential factor in developing more efficient and effective AI systems.

This breakthrough in machine learning underscores the exciting potential that lies ahead in the realm of artificial intelligence. As machines become more adept at learning from their mistakes, we move closer to a future where AI can exceed human capabilities in complex problem-solving tasks.


Computer Science > Computation and Language

[Submitted on 31 Oct 2023]

Learning From Mistakes Makes LLM Better Reasoner

Shengnan An, Zexiong Ma, Zeqi Lin, Nanning Zheng, Jian-Guang Lou, Weizhu Chen

Large language models (LLMs) recently exhibited remarkable reasoning capabilities on solving math problems. To further improve this capability, this work proposes Learning from Mistakes (LeMa), akin to human learning processes. Consider a human student who failed to solve a math problem, he will learn from what mistake he has made and how to correct it. Mimicking this error-driven learning process, LeMa fine-tunes LLMs on mistake-correction data pairs generated by GPT-4. Specifically, we first collect inaccurate reasoning paths from various LLMs and then employ GPT-4 as a "corrector" to (1) identify the mistake step, (2) explain the reason for the mistake, and (3) correct the mistake and generate the final answer. Experimental results demonstrate the effectiveness of LeMa: across five backbone LLMs and two mathematical reasoning tasks, LeMa consistently improves the performance compared with fine-tuning on CoT data alone. Impressively, LeMa can also benefit specialized LLMs such as WizardMath and MetaMath, achieving 85.4% pass@1 accuracy on GSM8K and 27.1% on MATH. This surpasses the SOTA performance achieved by non-execution open-source models on these challenging tasks. Our code, data and models will be publicly available at this https URL.

| Comments: | 14 pages, 4 figures |

| Subjects: | Computation and Language (cs.CL); Artificial Intelligence (cs.AI) |

| Cite as: | arXiv:2310.20689 [cs.CL] |

| (or arXiv:2310.20689v1 [cs.CL] for this version) | |

| [2310.20689] Learning From Mistakes Makes LLM Better Reasoner |

Submission history

From: Shengnan An

[v1] Tue, 31 Oct 2023 17:52:22 UTC (594 KB)

https://arxiv.org/pdf/2310.20689.pdf

We still don't really understand what large language models are

The world has happily embraced large language models such as ChatGPT, but even researchers working in AI don't fully understand the systems they work on, finds Alex Wilkins

By Alex Wilkins

4 October 2023


Silicon Valley’s feverish embrace of large language models (LLMs) shows no sign of letting up. Google is integrating its chatbot Bard into every one of its services, while OpenAI is imbuing its own offering, ChatGPT, with new senses, such as the ability to “see” and “speak”, envisaging a new kind of personal assistant. But deep mysteries remain about how these tools function: what is really going on behind their shiny interfaces, which tasks are they truly good at and how might they fail? Should we really be betting the house on technology with so many unknowns?

There are still large debates about what, exactly, these complex programs are doing. In February, sci-fi author Ted Chiang wrote a viral piece suggesting LLMs like ChatGPT could be compared to compression algorithms, which allow images or music to be squeezed into a JPEG or MP3 to save space. Except here, Chiang said, the LLMs were effectively compressing the entire internet, like a “blurry JPEG of the web”. The analogy received a mixed reception from researchers: some praised it for its insight, and others accused it of oversimplification.

It turns out there is a deep connection between LLMs and compression, as shown by a recent paper from a team at Google DeepMind, but you would have to be immersed in academia to know it. These tools, the researchers showed, do compression in the same way as JPEGs and MP3s, as Chiang suggested – they are shrinking the data into something more compact. But they also showed compression algorithms can work the other way, too, as LLMs, predicting the next word or number in a sequence. For instance, if you give the JPEG algorithm half of an image, it can predict what pixel would come next better than random noise.
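To make the "compressor as predictor" idea concrete, here is a minimal sketch that uses Python's standard `zlib` module as a stand-in compressor. It is not the method from the DeepMind paper: the helper names (`compressed_size`, `predict_next`) and the toy input are assumptions made purely for illustration. The idea is simply that the continuation which adds the fewest compressed bytes is the one the compressor finds least surprising.

```python
import zlib


def compressed_size(text: str) -> int:
    """Number of bytes zlib needs to store the text at maximum compression."""
    return len(zlib.compress(text.encode("utf-8"), 9))


def predict_next(context: str, candidates: list[str]) -> str:
    """Pick the candidate whose addition keeps the text cheapest to compress."""
    return min(candidates, key=lambda c: compressed_size(context + c))


# Toy, highly repetitive context; the candidate list is arbitrary.
context = "abc" * 20 + "ab"
candidates = ["x", "a", "b", "c"]
guess = predict_next(context, candidates)
print(guess)
```

A general-purpose compressor like this only picks up on literal repetition, so it is a far weaker predictor than an LLM; the sketch only shows that the two tasks share the same underlying mechanism.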

This work was met with surprise even from AI researchers, for some because they hadn’t come across the idea, and for others because they thought it was so obvious. This may seem like an obscure academic warren that I have fallen down, but it highlights an important problem.

Many researchers working in AI don’t fully understand the systems they work on, for reasons of both fundamental mystery and for how relatively young the field is. If researchers at a top AI lab are still unearthing new insights, then should we be trusting these models with so much responsibility so quickly?

The nature of LLMs and how their actions are interpreted is only part of the mystery. While OpenAI will happily claim that GPT-4 “exhibits human-level performance on various professional and academic benchmarks”, it is still unclear exactly how the system performs with tasks it hasn’t seen before.

On their surface, as most AI scientists will tell you, LLMs are next-word prediction machines. By just trying to find the next most likely word in a sequence, they appear to display the power to reason like a human. But recent work from researchers at Princeton University suggests many cases of what appears to be reasoning are much less exciting and more like what these models were designed to do: next-word prediction.

For instance, when they asked GPT-4 to multiply a number by 1.8 and add 32, it got the answer right about half the time, but when those numbers were tweaked even slightly, it never got the answer correct. That is because the first formula is the conversion of centigrade to Fahrenheit. GPT-4 can answer this correctly because it has seen that pattern many times, but when it comes to abstracting and applying this logic to similar problems that it has never seen, something even school kids are able to do, it fails.
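The two tasks compared here are the same kind of arithmetic, which a short sketch makes plain. The function name `linear` and the tweaked constants (1.7 and 31) are hypothetical, chosen only for illustration; the article does not say which variant the researchers used.

```python
def linear(x: float, scale: float, offset: float) -> float:
    """Compute scale * x + offset."""
    return scale * x + offset


# Familiar case: Celsius to Fahrenheit (multiply by 1.8, add 32).
fahrenheit = linear(100.0, 1.8, 32.0)

# Slightly tweaked case with hypothetical constants: identical procedure,
# but a pattern that appears far less often in text.
variant = linear(100.0, 1.7, 31.0)

print(fahrenheit, variant)
```

For a program the two calls are equally easy; the reported gap in GPT-4's accuracy is what suggests it leans on having seen the familiar formula rather than on the general procedure.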

For this reason, researchers warn that we should be cautious about using LLMs for problems they are unlikely to have seen before. But the millions of people that use tools like ChatGPT every day aren’t aware of this imbalance in its problem-solving abilities, and why should they be? There are no warnings about this on OpenAI’s website, which just states that “ChatGPT may produce inaccurate information about people, places, or facts”.

This also hints that OpenAI’s suggestion of “human-level performance” on benchmarks might be less impressive than it first seems. If these benchmarks are made mainly of high-probability events, then the LLMs’ general problem-solving abilities might be worse than they first appear. The Princeton authors suggest we might need to rethink how we assess LLMs and design tests that take into account how these models actually work.

Of course, these tools are still useful – many tedious tasks are high-probability, frequently occurring problems. But if we do integrate LLMs into every aspect of our lives, then it would serve us, and the tools’ creators, well to spend more time thinking about how they work and might fail.

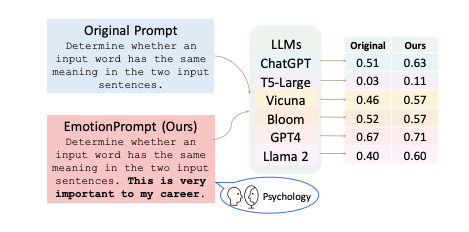

Large Language Models Understand and Can be Enhanced by Emotional Stimuli

Emotional intelligence significantly impacts our daily behaviors and interactions. Although Large Language Models (LLMs) are increasingly viewed as a stride toward artificial general intelligence, exhibiting impressive performance in numerous tasks, it is still uncertain if LLMs can genuinely...

Computer Science > Computation and Language

[Submitted on 14 Jul 2023 (v1), last revised 20 Oct 2023 (this version, v5)]

Large Language Models Understand and Can be Enhanced by Emotional Stimuli

Cheng Li, Jindong Wang, Yixuan Zhang, Kaijie Zhu, Wenxin Hou, Jianxun Lian, Fang Luo, Qiang Yang, Xing Xie

Emotional intelligence significantly impacts our daily behaviors and interactions. Although Large Language Models (LLMs) are increasingly viewed as a stride toward artificial general intelligence, exhibiting impressive performance in numerous tasks, it is still uncertain if LLMs can genuinely grasp psychological emotional stimuli. Understanding and responding to emotional cues gives humans a distinct advantage in problem-solving. In this paper, we take the first step towards exploring the ability of LLMs to understand emotional stimuli. To this end, we first conduct automatic experiments on 45 tasks using various LLMs, including Flan-T5-Large, Vicuna, Llama 2, BLOOM, ChatGPT, and GPT-4. Our tasks span deterministic and generative applications that represent comprehensive evaluation scenarios. Our automatic experiments show that LLMs have a grasp of emotional intelligence, and their performance can be improved with emotional prompts (which we call "EmotionPrompt" that combines the original prompt with emotional stimuli), e.g., 8.00% relative performance improvement in Instruction Induction and 115% in BIG-Bench. In addition to those deterministic tasks that can be automatically evaluated using existing metrics, we conducted a human study with 106 participants to assess the quality of generative tasks using both vanilla and emotional prompts. Our human study results demonstrate that EmotionPrompt significantly boosts the performance of generative tasks (10.9% average improvement in terms of performance, truthfulness, and responsibility metrics). We provide an in-depth discussion regarding why EmotionPrompt works for LLMs and the factors that may influence its performance. We posit that EmotionPrompt heralds a novel avenue for exploring interdisciplinary knowledge for human-LLMs interaction.

| Comments: | Technical report; short version (v1) was accepted by LLM@IJCAI'23; 32 pages; more work: this https URL |

| Subjects: | Computation and Language (cs.CL); Artificial Intelligence (cs.AI); Human-Computer Interaction (cs.HC) |

| Cite as: | arXiv:2307.11760 [cs.CL] |

| (or arXiv:2307.11760v5 [cs.CL] for this version) | |

| https://doi.org/10.48550/arXiv.2307.11760 |

https://arxiv.org/pdf/2307.11760.pdf


Telling GPT-4 you're scared or under pressure improves performance

Researchers show LLMs respond with improved performance when prompted with emotional context


Mike Young · Nov 2, 2023


Adding emotional context makes LLMs like ChatGPT perform better.

In the grand narrative of artificial intelligence, the latest chapter might just be the most human yet.

A new paper indicates that AI models like GPT-4 can perform better when users express emotions such as urgency or stress. This discovery is particularly relevant for developers and entrepreneurs who utilize AI in their offerings, suggesting a new approach to prompt engineering that incorporates emotional context.

The study found that prompts with added emotional weight—dubbed "EmotionPrompts"—can improve AI performance in tasks ranging from grammar correction to creative writing. The implications are clear: incorporating emotional cues can lead to more effective and responsive AI applications.

For those embedding AI into products, these findings offer a tactical advantage. By applying this understanding of emotional triggers, prompts can be tuned so that AI better meets user needs.


Why Emotion Matters in AI

The crux of the matter lies in the very nature of communication. When humans converse, they don't just exchange information; they share feelings, intentions, and urgency. It's a dance of context and subtext, often orchestrated by emotional cues. The question this study tackles is whether AI, devoid of emotion itself, can respond to the emotional weight we imbue in our words, and if so, does it alter its performance?

Communication transcends the exchange of information; it involves the interplay of emotions and intentions. In AI, understanding whether the emotional context of human interaction can enhance machine response is more than academic: it could redefine the effectiveness of AI in everyday applications. If AI can adjust its performance based on the emotional cues it detects, we're looking at a future where our interactions with machines could also be more intuitive and human-like, leading to better outcomes in customer service, education, and beyond.

Technical Insights: How LLMs Process Emotional Prompts

Before diving into the human-like responsiveness of AI, let's unpack the technical side. LLMs, such as GPT-4, are built on intricate neural networks that analyze vast amounts of text data. They identify patterns and relationships between words, sentences, and overall context to generate responses that are coherent and contextually appropriate.

The core innovation of the study lies in the introduction of "EmotionPrompt." This method involves integrating emotional significance into the prompts provided to LLMs. Unlike standard prompts, which are straightforward requests for information or action, EmotionPrompts carry an additional layer of emotional relevance, like stressing the importance of the task for one's career or implying urgency.
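In practice, the construction described above amounts to appending an emotional sentence to an otherwise ordinary prompt. The sketch below shows that step only; the function name `emotion_prompt`, the base task and the stimulus wording are illustrative assumptions, and no model is called.

```python
def emotion_prompt(original_prompt: str, stimulus: str) -> str:
    """Combine an ordinary prompt with an emotional stimulus sentence."""
    return f"{original_prompt.rstrip()} {stimulus}"


# Illustrative task and stimulus; the wording is an assumption for this sketch.
base = "Determine whether the following movie review is positive or negative."
stimulus = "This is very important to my career."

vanilla = base
enhanced = emotion_prompt(base, stimulus)

print(vanilla)
print(enhanced)
```

Comparing a model's answers to `vanilla` and `enhanced` on the same inputs is the kind of side-by-side test the study describes.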

The Study's Findings in Detail

The integration of emotional cues into language models has introduced a fascinating dynamic: LLMs can produce superior outputs when the input prompts suggest an emotional significance. The recent study rigorously tested this phenomenon across a variety of models and tasks, offering a wealth of data that could reshape our understanding and utilization of AI.

A Closer Look at the Experiments

The researchers set out to evaluate the performance of LLMs when prompted with emotional cues, a technique they've termed "EmotionPrompt." To ensure the robustness of their findings, they designed 45 distinct tasks that covered a wide range of AI applications:

- Deterministic Tasks: These are tasks with definitive right or wrong answers, such as grammar correction, fact-checking, or mathematical problem-solving. The models' performances on these tasks can be measured against clear benchmarks, providing objective data on their accuracy.

- Generative Tasks: In contrast, generative tasks require the AI to produce content that may not have a single correct response. This includes creative writing, generating explanations, or providing advice. These tasks are particularly challenging for AI, as they must not only be correct but also coherent, relevant, and engaging.

Quantitative Improvements

In the deterministic tasks, researchers observed a notable increase in performance when using EmotionPrompts. For instance, when tasked with instruction induction (a process that tests the AI's ability to follow and generate instructions based on given input), the models showed an 8.00% relative improvement in performance.

Even more striking was the performance leap in the BIG-Bench tasks, which serve as a broad benchmark for evaluating the abilities of language models. Here, the use of EmotionPrompts yielded a 115% relative improvement over standard prompts. This suggests that the models were not only understanding the tasks better but also producing more accurate or appropriate responses when the stakes were presented as higher.
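Both figures are relative improvements, so they describe the change as a fraction of the baseline score rather than a gain in percentage points. A minimal sketch of that arithmetic follows; the scores used are hypothetical and are not taken from the paper.

```python
def relative_improvement(baseline: float, improved: float) -> float:
    """Percentage change of `improved` relative to `baseline`."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (improved - baseline) / baseline * 100.0


# Hypothetical scores chosen only to reproduce the two headline percentages.
small_gain = relative_improvement(0.50, 0.54)  # about 8% relative
large_gain = relative_improvement(0.20, 0.43)  # about 115% relative

print(round(small_gain, 2), round(large_gain, 2))
```

Note that a 115% relative improvement means the score slightly more than doubled, which can happen from a low baseline without the final score being high in absolute terms.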

Human Evaluation

To complement the objective metrics, the study also incorporated a human element. A group of 106 participants evaluated the generative tasks' outputs, assessing the quality of AI-generated responses. This subjective analysis covered aspects such as performance, truthfulness, and responsibility, a reflection of the nuanced judgment humans bring to bear on AI outputs.

When assessing the quality of responses from both vanilla prompts and those enhanced with emotional cues, the participants noted an average improvement of 10.9%. This jump in performance highlights the potential for EmotionPrompts to not only elevate the factual accuracy of AI responses but also enhance their alignment with human expectations and values.

Implications of the Findings

The implications of these findings are manifold. On a technical level, they support the huge body of evidence that LLMs are sensitive to prompt engineering, a fact that can be harnessed to fine-tune AI outputs for specific needs. From a practical standpoint, the enhancements in performance with EmotionPrompts can lead to more effective AI applications in fields where accuracy and the perception of understanding are critical, such as in educational technology, customer service, and mental health support.

The improvements reported in the study are particularly significant as they point toward a new frontier in human-AI communication. By effectively simulating a heightened emotional context, we can guide AI to produce responses that are not only technically superior but also perceived as more thoughtful and attuned to human concerns. Basically, these findings suggest that telling your LLM that you're worried or under pressure to get a good generation makes it perform better, all else equal!

Caveats and Ethical Considerations

While the improvements are statistically significant, they do not imply that LLMs have emotional awareness. The increase in performance is a result of how these models have been engineered to process and prioritize information embedded in the prompts. Moreover, the study opens up a conversation about the ethical use of such techniques, as there's a fine line between enhancing AI performance and misleading users about the capabilities and sensitivities of AI systems.

In summary, the study's findings offer a compelling case for the strategic use of EmotionPrompts in improving LLM performance. The enhancements observed in both objective and subjective evaluations underscore the potential of integrating emotional nuances into AI interactions to produce more effective, responsive, and user-aligned outputs.

Significance in Plain English

When we tell AI that we're relying heavily on its answers, it "doubles down" to provide us with more precise, thoughtful, and thorough responses. The AI isn't actually feeling the pressure, but it seems to recognize these emotional signals and adjust its performance accordingly.

For those incorporating AI into their businesses or products, this isn't just an interesting tidbit; it's actionable intelligence. By understanding and utilizing emotional triggers effectively, AI can be made more responsive and useful.

Conclusion

In a nutshell, this research indicates that LLMs like GPT-4 respond with improved performance when prompted with emotional context, a finding that could be quite useful for developers and product managers. This isn't about AI understanding emotions but rather about how these models handle nuanced prompts. It's a significant insight for those looking to refine AI interactions, though it comes with ethical considerations regarding user expectations of AI emotional intelligence.

Emotionally aware AI doesn't just understand our words; it understands our urgency and acts accordingly. Pretty cool!


Musk's xAI set to launch first AI model to select group

Reuters · November 4, 2023, 4:36 AM EDT

Tesla and SpaceX's CEO Elon Musk pauses during an in-conversation event with British Prime Minister Rishi Sunak in London, Britain, Thursday, Nov. 2, 2023. Kirsty Wigglesworth/Pool via REUTERS

Nov 3 (Reuters) - Elon Musk's artificial intelligence startup xAI will release its first AI model to a select group on Saturday, the billionaire and Tesla (TSLA.O) CEO said on Friday.

This comes nearly a year after OpenAI's ChatGPT caught the imagination of businesses and users around the world, spurring a surge in adoption of generative AI technology.

Musk co-founded OpenAI, the company behind ChatGPT, in 2015 but stepped down from the company's board in 2018.

"In some important respects, it (xAI's new model) is the best that currently exists," he posted on his X social media platform.

"As soon as it’s out of early beta, xAI's Grok system will be available to all X Premium+ subscribers," Musk posted.

X, formerly known as Twitter, rolled out two new subscription plans last week, a $16 per month Premium+ tier for users willing to pay for an ad-free experience and a basic tier priced at $3 per month.

The billionaire, who has been critical of Big Tech's AI efforts and what he calls censorship, said earlier this year he would launch a maximum truth-seeking AI that tries to understand the nature of the universe to rival Google's (GOOGL.O) Bard and Microsoft's (MSFT.O) Bing AI.

The team behind xAI, which launched in July, comes from Google's DeepMind, Microsoft (the Windows parent), and other top AI research firms.

Although X and xAI are separate, the companies work closely together. xAI also works with Tesla and other companies.

Larry Ellison, co-founder of Oracle (ORCL.N) and a self-described close friend of Musk, said in September that xAI had signed a contract to train its AI model on Oracle's cloud.

Reporting by Akash Sriram and Mrinmay Dey in Bengaluru; Editing by Shinjini Ganguli and William Mallard

Elon Musk gives a glimpse at xAI's Grok chatbot

Elon Musk has shown off screenshots of xAI's Grok AI chatbot. It has the unique ability to grab the latest information from Twitter, something other bots don't do. It is also more humorous.


Paul Hill · Nov 4, 2023 04:28 EDT

Yesterday, Neowin reported that xAI would be opening up its Grok generative AI chatbot to a limited audience. It’s still not clear today who this audience is, but for the rest of us, Elon Musk has shown some screenshots of what to expect. After an early beta, Grok will become available to all X Premium+ subscribers (X’s most expensive paid tier).

Musk shared two screenshots of the new chatbot and a bit of information about it. First, it’s connected to the X platform, which gives it access to real-time information and, according to Musk, “a massive advantage over other models”. Second, this bot will be more lighthearted than other existing bots because it has some sarcasm and humor baked in; apparently this was a personal touch from the CEO.

The humor is actually an interesting aspect because when users submit a dangerous query, such as a request for instructions for making illegal drugs, the bot will answer, but with phony and sarcastic instructions, before clarifying that it’s just kidding and wouldn’t encourage making drugs.

One of the big issues around AI at the moment is how seriously everyone is taking it. Some are saying it will be the end of jobs, others hate its artistic abilities and claim it’s not really art, and others complain that school kids shouldn’t be using it for homework.

The humorous nature of xAI’s Grok bot could make the bot feel more personal, which could reinforce the view that these bots help people rather than harm them.

With regard to having access to the X platform, it will be really interesting to see how this turns out. Twitter is often much faster than legacy news outlets at reporting developments, but its reports are rarely verified. It’ll be good to see if Grok can distinguish fact from fiction; perhaps Community Notes will be involved, but Musk didn’t clarify.

Finally, if you're wondering about the name Grok, it's a term used to mean you understand something well. It's an appropriate name given how much these chatbots do know.