“Open-source AI is a tremendous innovation, and we appreciate that there are open questions and differing legal opinions. We expect them to be resolved over time, as AI becomes more ubiquitous and different groups come to a consensus as to how to balance individual rights and essential AI/ML research,” says Stability.AI’s Mason. “We strive to find the balance between innovating and helping the community.”

GREG RUTKOWSKI

MS TECH VIA STABLE DIFFUSION

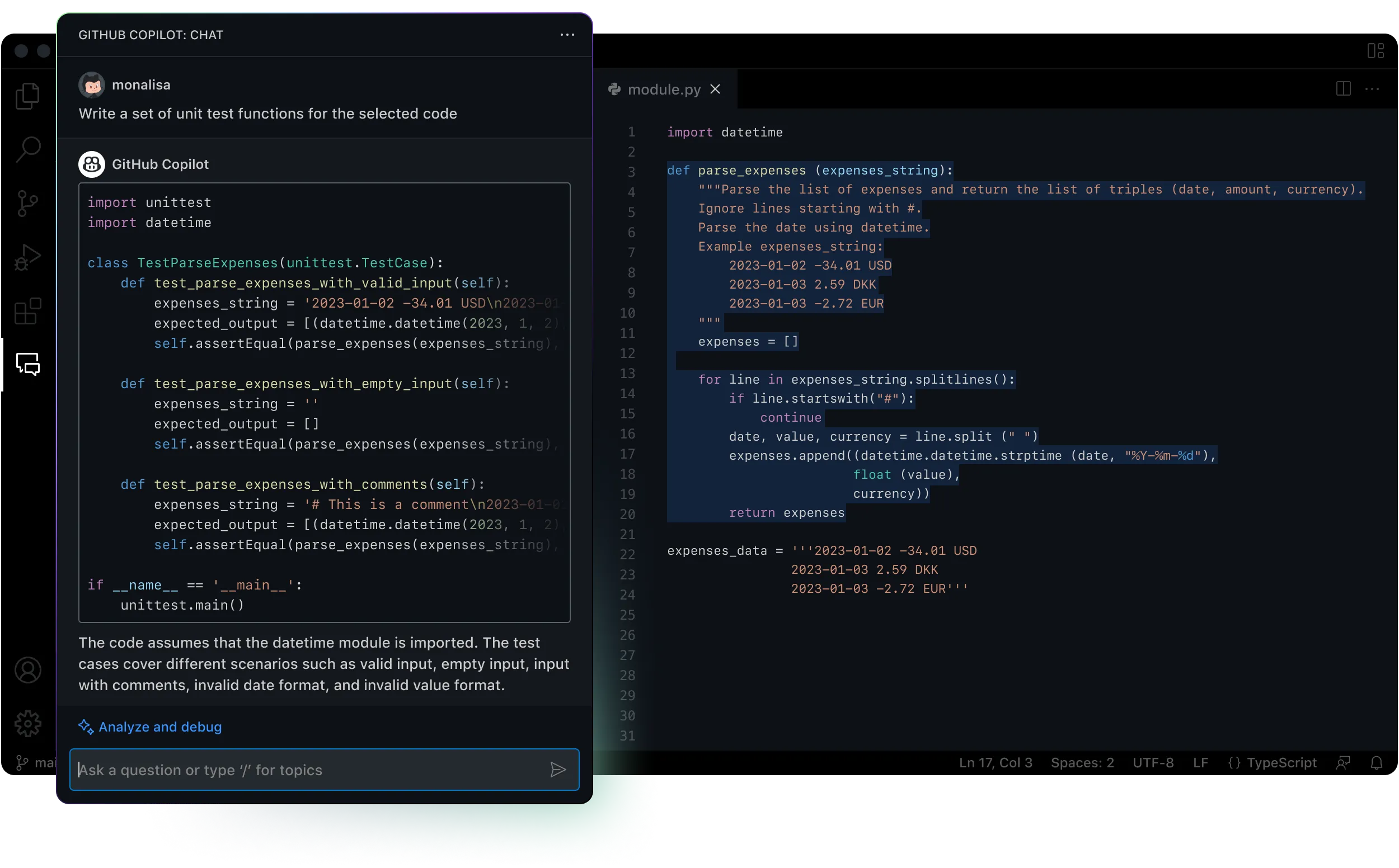

Rutkowski’s “Castle Defense, 2018” (left) and a Stable Diffusion prompted image.

Mason encourages any artists who don’t want their works in the data set to contact LAION, which is independent of the startup. LAION did not immediately respond to a request for comment.

Berlin-based artists Holly Herndon and Mat Dryhurst are working on tools to help artists opt out of being in training data sets. They launched a site called Have I Been Trained, which lets artists search to see whether their work is among the 5.8 billion images in the data set that was used to train Stable Diffusion and Midjourney. Some online art communities, such as Newgrounds, are already taking a stand and have explicitly banned AI-generated images.

An industry initiative called the Content Authenticity Initiative, whose members include Adobe, Nikon, and the New York Times, is developing an open standard that would create a sort of watermark on digital content to prove its authenticity. It could help fight disinformation as well as ensure that digital creators get proper attribution.

“It could also be a way in which creators or IP holders can assert ownership over media that belongs to them or synthesized media that's been created with something that belongs to them,” says Nina Schick, an expert on deepfakes and synthetic media.


Pay-per-play

AI-generated art poses tricky legal questions. In the UK, where Stability.AI is based, scraping images from the internet without the artist’s consent to train an AI tool could constitute copyright infringement, says Gill Dennis, a lawyer at the firm Pinsent Masons. Copyrighted works can be used to train an AI under “fair use,” but only for noncommercial purposes. While Stable Diffusion is free to use, Stability.AI also sells premium access to the model through a platform called DreamStudio.

The UK, which hopes to boost domestic AI development, wants to change its laws to give AI developers greater access to copyrighted data. Under these changes, developers would be able to scrape works protected by copyright to train their AI systems for both commercial and noncommercial purposes.

While artists and other rights holders would not be able to opt out of this regime, they would be able to choose where they make their works available. The art community could end up moving to a pay-per-play or subscription model like the one used in the film and music industries.

“The risk, of course, is that rights holders simply refuse to make their works available, which would undermine the very reason for extending fair use in the AI development space in the first place,” says Dennis.

In the US, LinkedIn lost a case in an appeals court, which ruled last spring that scraping publicly available data from sources on the internet is not a violation of the Computer Fraud and Abuse Act. Google also won a case against authors who objected to the company’s scraping their copyrighted works for Google Books.

Rutkowski says he doesn’t blame people who use his name as a prompt. For them, “it’s a cool experiment,” he says. “But for me and many other artists, it’s starting to look like a threat to our careers.”