1/2

The study introduces a new guided tree search algorithm aimed at improving LLM performance on mathematical reasoning tasks while reducing computational costs compared to existing methods. By incorporating dynamic node selection, exploration budget calculation,...

2/2

LiteSearch: Efficacious Tree Search for LLM

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

1/2

LiteSearch: Efficacious Tree Search for LLM. [2407.00320] LiteSearch: Efficacious Tree Search for LLM

2/2

AI Summary: The study introduces a new guided tree search algorithm aimed at improving LLM performance on mathematical reasoning tasks while reducing computational costs compared to existing methods. By inco...

[Submitted on 29 Jun 2024]

LiteSearch: Efficacious Tree Search for LLM

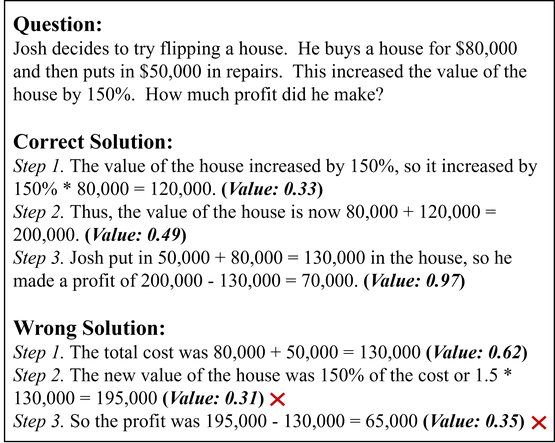

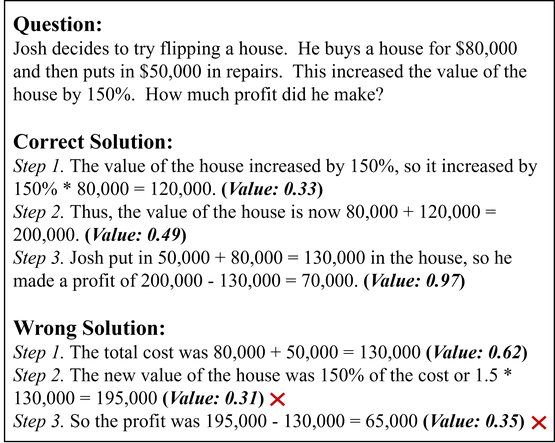

Ante Wang, Linfeng Song, Ye Tian, Baolin Peng, Dian Yu, Haitao Mi, Jinsong Su, Dong Yu

Recent research suggests that tree search algorithms (e.g., Monte Carlo Tree Search) can dramatically boost LLM performance on complex mathematical reasoning tasks. However, they often require more than 10 times the computational resources of greedy decoding due to wasteful search strategies, making them difficult to deploy in practical applications. This study introduces a novel guided tree search algorithm with dynamic node selection and node-level exploration budget (maximum number of children) calculation to tackle this issue. By considering the search progress towards the final answer (history) and the guidance from a value network (future) trained without any step-wise annotations, our algorithm iteratively selects the most promising tree node before expanding it within the boundaries of the allocated computational budget. Experiments conducted on the GSM8K and TabMWP datasets demonstrate that our approach not only offers competitive performance but also enjoys significantly lower computational costs compared to baseline methods.
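For anyone trying to picture what "select the most promising node, then expand it within a per-node budget" could look like, here is a rough Python sketch. It is not the paper's implementation: the budget rule in `child_budget`, the toy problem, and every name below are assumptions made up for illustration, and the score uses only the value estimate (the "future" part), leaving out the search-progress ("history") term the abstract mentions.

```python
import heapq
import math
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

State = Tuple[int, ...]  # toy state: the sequence of steps taken so far


@dataclass(order=True)
class Node:
    priority: float                      # negated value, so heapq pops the best node first
    state: State = field(compare=False)
    value: float = field(compare=False)  # stand-in for a value-network estimate in [0, 1]


def child_budget(value: float, max_children: int) -> int:
    """Hypothetical budget rule (an assumption, not the paper's formula):
    the higher the value estimate, the fewer children we pay for."""
    return max(1, math.ceil(max_children * (1.0 - value)))


def guided_search(
    root: State,
    expand: Callable[[State, int], List[State]],
    value_fn: Callable[[State], float],
    is_terminal: Callable[[State], bool],
    max_children: int = 4,
    max_expansions: int = 50,
) -> Tuple[Optional[State], int]:
    """Best-first search: pop the highest-value node, expand it within its budget."""
    root_value = value_fn(root)
    frontier = [Node(-root_value, root, root_value)]
    expansions = 0
    while frontier and expansions < max_expansions:
        node = heapq.heappop(frontier)
        if is_terminal(node.state):
            return node.state, expansions
        expansions += 1
        budget = child_budget(node.value, max_children)
        for child in expand(node.state, budget):
            v = value_fn(child)
            heapq.heappush(frontier, Node(-v, child, v))
    return None, expansions


# Toy problem (purely illustrative): reach TARGET by adding steps of 3, 2, or 1.
TARGET = 10


def expand(state: State, k: int) -> List[State]:
    # Propose at most k children, dropping any that overshoot the target.
    return [state + (s,) for s in (3, 2, 1)[:k] if sum(state) + s <= TARGET]


def value_fn(state: State) -> float:
    # Fraction of the target covered so far; a real system would call a trained model.
    return sum(state) / TARGET


def is_terminal(state: State) -> bool:
    return sum(state) == TARGET


result, expansions = guided_search((), expand, value_fn, is_terminal)
print(result, expansions)
```

In a real setup `expand` would sample reasoning steps from the LLM and `value_fn` would query the trained value network, so each child proposed costs model calls. That is why capping children per node matters for total compute.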

| Field | Value |
| --- | --- |
| Subjects: | Computation and Language (cs.CL); Artificial Intelligence (cs.AI); Machine Learning (cs.LG) |
| Cite as: | arXiv:2407.00320 [cs.CL] |
| | (or arXiv:2407.00320v1 [cs.CL] for this version) |

Submission history

From: Linfeng Song

[v1] Sat, 29 Jun 2024 05:14:04 UTC (640 KB)