
Large Language Models News & Discussions

- Thread starter Macallik86


Structure-informed Language Models Are Protein Designers

Zaixiang Zheng, Yifan Deng, Dongyu Xue, Yi Zhou, Fei YE, Quanquan Gu

This paper demonstrates that language models are strong structure-based protein designers. We present LM-Design, a generic approach to reprogramming sequence-based protein language models (pLMs), that have learned massive sequential evolutionary knowledge from the universe of natural protein sequences, to acquire an immediate capability to design preferable protein sequences for given folds. We conduct a structural surgery on pLMs, where a lightweight structural adapter is implanted into pLMs and endows it with structural awareness. During inference, iterative refinement is performed to effectively optimize the generated protein sequences. Experiments show that LM-Design improves the state-of-the-art results by a large margin, leading to up to 4% to 12% accuracy gains in sequence recovery (e.g., 55.65%/56.63% on CATH 4.2/4.3 single-chain benchmarks, and >60% when designing protein complexes). We provide extensive and in-depth analyses, which verify that LM-Design can (1) indeed leverage both structural and sequential knowledge to accurately handle structurally non-deterministic regions, (2) benefit from scaling data and model size, and (3) generalize to other proteins (e.g., antibodies and de novo proteins).
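Sequence recovery, the metric behind the 55.65%/56.63% CATH figures, is generally computed as the fraction of positions where the designed residue matches the native residue of the target backbone. A minimal sketch, assuming both sequences are aligned one-letter amino-acid strings of equal length; `sequence_recovery` is a hypothetical helper, not code from LM-Design, and the toy sequences are made up:

```python
def sequence_recovery(designed: str, native: str) -> float:
    """Fraction of positions where the designed residue equals the native one.

    Assumes both sequences are aligned position by position, as in
    fixed-backbone design where the designed chain keeps the native length.
    """
    if len(designed) != len(native):
        raise ValueError("sequences must have the same length")
    if not native:
        raise ValueError("sequences must be non-empty")
    matches = sum(d == n for d, n in zip(designed, native))
    return matches / len(native)


if __name__ == "__main__":
    native = "MKTAYIAKQR"    # toy 10-residue chain (hypothetical)
    designed = "MKSAYLAKQR"  # differs from native at 2 positions
    print(f"{sequence_recovery(designed, native):.0%}")  # prints "80%"
```

Benchmarks then aggregate this per-protein score over a test set, so a reported figure such as 55.65% summarizes many chains rather than a single design.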

| Comments: | 10 pages; ver.2 update: added image credit to RFdiffusion (Watson et al., 2022) in Fig. 1F, and fixed some small presentation errors |

| Subjects: | Machine Learning (cs.LG) |

| Cite as: | arXiv:2302.01649 [cs.LG] |

| (or arXiv:2302.01649v2 [cs.LG] for this version) | |

| https://doi.org/10.48550/arXiv.2302.01649 |

Submission history

From: Zaixiang Zheng

[v1] Fri, 3 Feb 2023 10:49:52 UTC (5,545 KB)

[v2] Thu, 9 Feb 2023 14:14:05 UTC (5,496 KB)

AI-generated partial summary:

Simplified version of the abstract:

This paper demonstrates that language models can be powerful tools for protein design. We present a novel method called LM-DESIGN that leverages the strengths of both language models and protein structure prediction methods. LM-DESIGN achieves state-of-the-art performance on protein sequence design tasks, outperforming previous methods by a large margin. Additionally, LM-DESIGN is shown to be data-efficient, flexible, and generalizable to diverse protein families.

Key points of the paper:

- Protein design: LM-DESIGN is a new method for designing protein sequences based on their desired structures.

- Language models: LM-DESIGN uses the power of large language models to learn the complex relationships between protein sequences and their structures.

- State-of-the-art performance: LM-DESIGN outperforms previous protein design methods by a large margin.

- Data-efficient: LM-DESIGN requires less data than previous methods to achieve high accuracy.

- Flexible: LM-DESIGN can be easily adapted to different protein families and structures.

- Generalizable: LM-DESIGN can be used to design proteins that are not found in nature.

Potential applications of LM-DESIGN:

- Developing new drugs and therapies: LM-DESIGN could be used to design new proteins with desired functions, such as targeting specific diseases.

- Improving protein engineering: LM-DESIGN could be used to improve the properties of existing proteins, such as their stability or activity.

- Understanding protein evolution: LM-DESIGN could be used to study how proteins have evolved over time.

Overall, LM-DESIGN is a promising new method for protein design with a wide range of potential applications.

Another AI-generated summary:

Protein Designers Got a Big Boost: Meet LM-DESIGN!

Researchers just unveiled a game changer in protein design: LM-DESIGN! It's a system that lets protein language models (cousins of the language models that help computers understand your emails, but trained on protein sequences) play a key role in crafting new proteins.

Think of it like this: protein language models are like master chefs with years of experience cooking up protein sequences. They know all the right ingredients and how to combine them to create delicious, functional proteins. Now, imagine giving these chefs access to a map of a protein's structure, like a blueprint. That's what LM-DESIGN does!

With this map, the chefs can design even more precise and functional protein sequences, opening up a world of possibilities. LM-DESIGN has already achieved some incredible results:

- It can design protein sequences with over 55% accuracy, which is way better than existing methods.

- It can handle tricky regions in protein structures, like loops and exposed surfaces.

- It can even generalize to other protein types, like antibodies and de novo proteins that haven't been seen in nature.

Potential applications:

- New drugs: Imagine designing proteins that can target specific diseases and cure them!

- Better enzymes: Enzymes are nature's tiny factories, and LM-DESIGN could help us create even more efficient ones for industrial processes.

- A deeper understanding of proteins: By studying the sequences LM-DESIGN creates, scientists can unlock the secrets of how proteins work.


[Submitted on 9 Nov 2023 (v1), last revised 13 Nov 2023 (this version, v2)]

Mirasol3B: A Multimodal Autoregressive model for time-aligned and contextual modalities

AJ Piergiovanni, Isaac Noble, Dahun Kim, Michael S. Ryoo, Victor Gomes, Anelia Angelova

One of the main challenges of multimodal learning is the need to combine heterogeneous modalities (e.g., video, audio, text). For example, video and audio are obtained at much higher rates than text and are roughly aligned in time. They are often not synchronized with text, which comes as a global context, e.g., a title, or a description. Furthermore, video and audio inputs are of much larger volumes, and grow as the video length increases, which naturally requires more compute dedicated to these modalities and makes modeling of long-range dependencies harder.

We here decouple the multimodal modeling, dividing it into separate, focused autoregressive models, processing the inputs according to the characteristics of the modalities. We propose a multimodal model, called Mirasol3B, consisting of an autoregressive component for the time-synchronized modalities (audio and video), and an autoregressive component for the context modalities which are not necessarily aligned in time but are still sequential. To address the long sequences of the video-audio inputs, we propose to further partition the video and audio sequences in consecutive snippets and autoregressively process their representations. To that end, we propose a Combiner mechanism, which models the audio-video information jointly within a timeframe. The Combiner learns to extract audio and video features from raw spatio-temporal signals, and then learns to fuse these features producing compact but expressive representations per snippet.
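The snippet-and-combine idea can be illustrated with a toy sketch. Here the learned Combiner is replaced by plain mean pooling plus concatenation purely for illustration, and the names `partition`, `toy_combiner` and `encode_snippets` are hypothetical. It also assumes the video and audio features were already resampled to a common rate so that snippets line up in time. Each snippet is squeezed into one fixed-size vector, so a downstream autoregressive model sees one step per snippet instead of one per frame:

```python
from typing import List

Vector = List[float]


def partition(frames: List[Vector], snippet_len: int) -> List[List[Vector]]:
    """Split a time-ordered feature sequence into consecutive snippets."""
    if snippet_len <= 0:
        raise ValueError("snippet_len must be positive")
    return [frames[i:i + snippet_len] for i in range(0, len(frames), snippet_len)]


def mean_pool(vectors: List[Vector]) -> Vector:
    """Element-wise average of equal-length vectors (pools over time)."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def toy_combiner(video_snippet: List[Vector], audio_snippet: List[Vector]) -> Vector:
    """Hypothetical stand-in for a learned Combiner: pool each modality over
    the snippet, then fuse by concatenation into one compact vector."""
    return mean_pool(video_snippet) + mean_pool(audio_snippet)


def encode_snippets(video: List[Vector], audio: List[Vector], snippet_len: int) -> List[Vector]:
    """Return one fused vector per snippet. An autoregressive model would then
    predict snippet t from snippets < t, shrinking its sequence length by
    roughly a factor of snippet_len."""
    if len(video) != len(audio):
        raise ValueError("expected time-aligned video and audio features")
    pairs = zip(partition(video, snippet_len), partition(audio, snippet_len))
    return [toy_combiner(v, a) for v, a in pairs]


# Toy usage: 4 time steps, 2-dim video features, 1-dim audio features.
video = [[1.0, 0.0], [3.0, 2.0], [5.0, 4.0], [7.0, 6.0]]
audio = [[0.5], [1.5], [2.5], [3.5]]
print(encode_snippets(video, audio, snippet_len=2))
# [[2.0, 1.0, 1.0], [6.0, 5.0, 3.0]]
```

A learned Combiner would replace the pooling with trainable layers, but the interface is the same: many frames in, one compact representation per snippet out.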

Our approach achieves the state-of-the-art on well established multimodal benchmarks, outperforming much larger models. It effectively addresses the high computational demand of media inputs by both learning compact representations, controlling the sequence length of the audio-video feature representations, and modeling their dependencies in time.

| Subjects: | Computer Vision and Pattern Recognition (cs.CV) |

| Cite as: | arXiv:2311.05698 [cs.CV] |

| (or arXiv:2311.05698v2 [cs.CV] for this version) | |

| [2311.05698] Mirasol3B: A Multimodal Autoregressive model for time-aligned and contextual modalities |

Submission history

From: Aj Piergiovanni

[v1] Thu, 9 Nov 2023 19:15:12 UTC (949 KB)

[v2] Mon, 13 Nov 2023 14:53:10 UTC (949 KB)

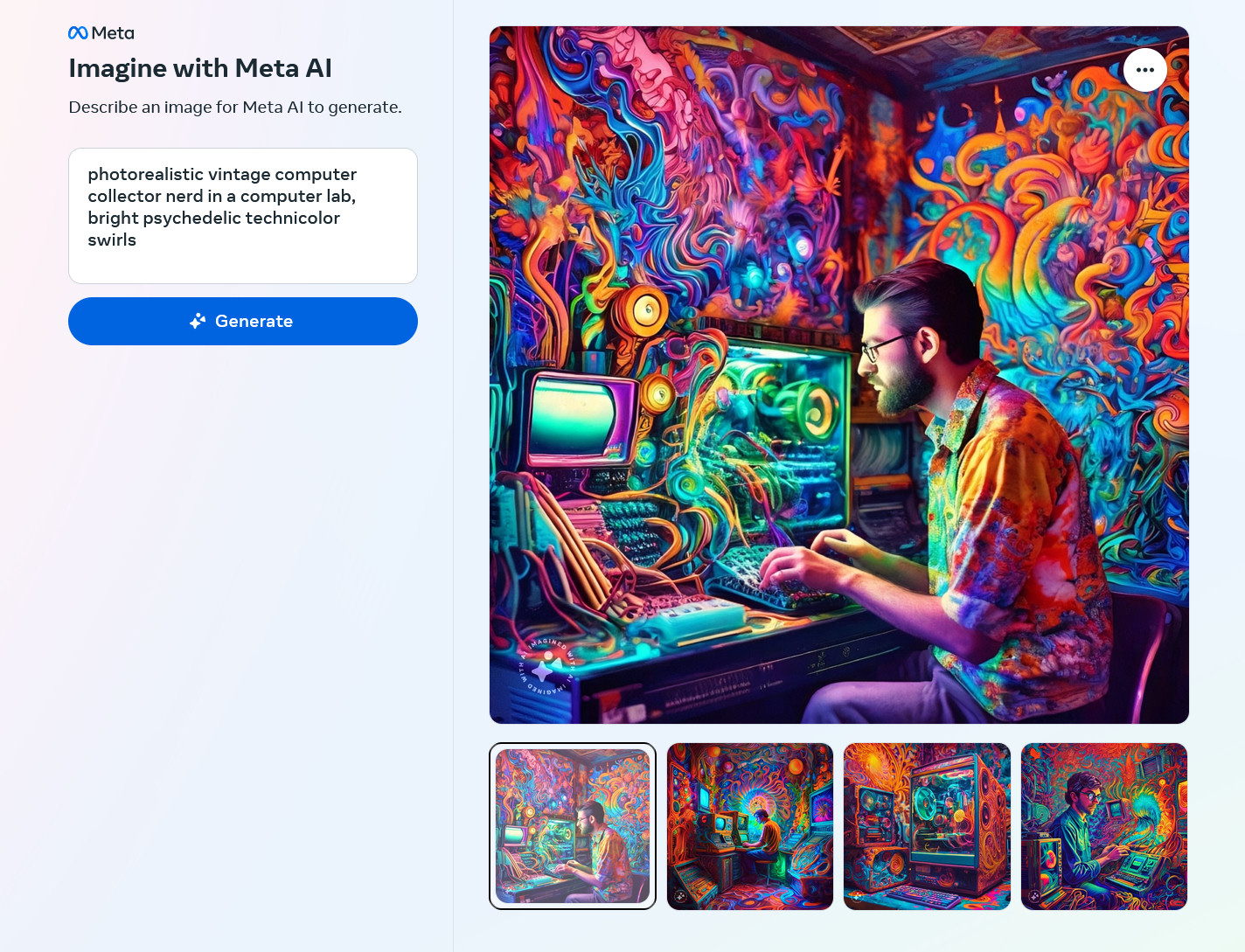

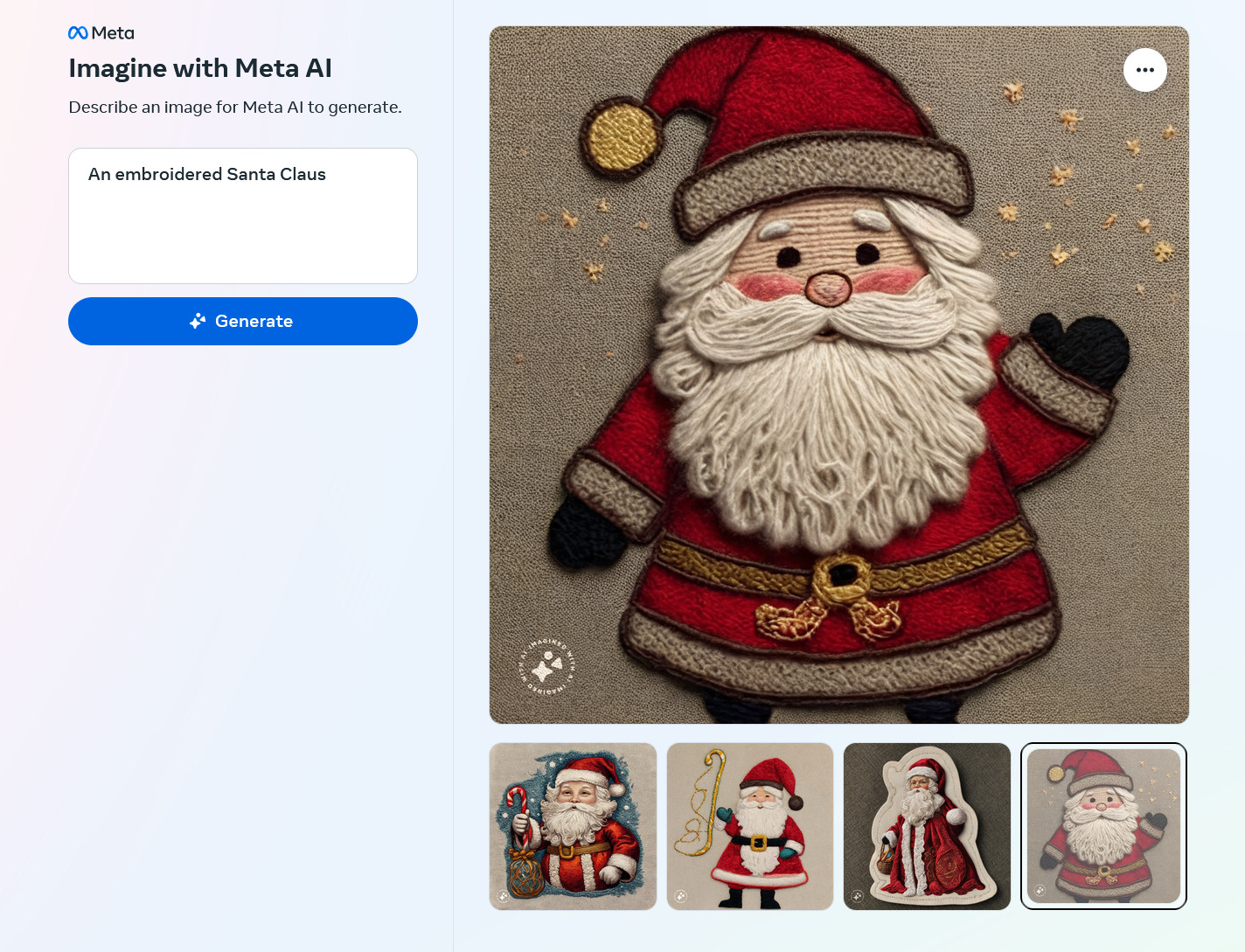

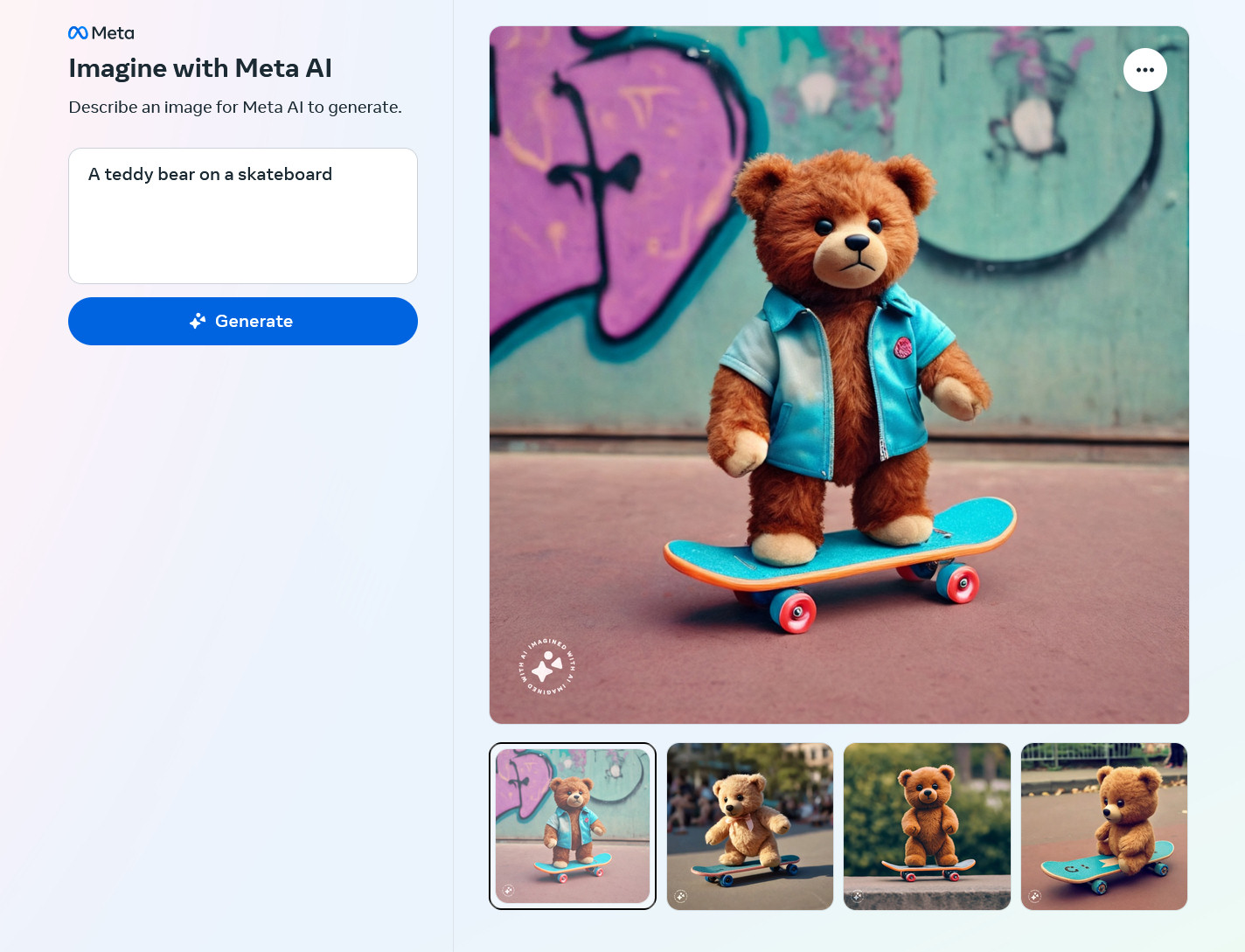

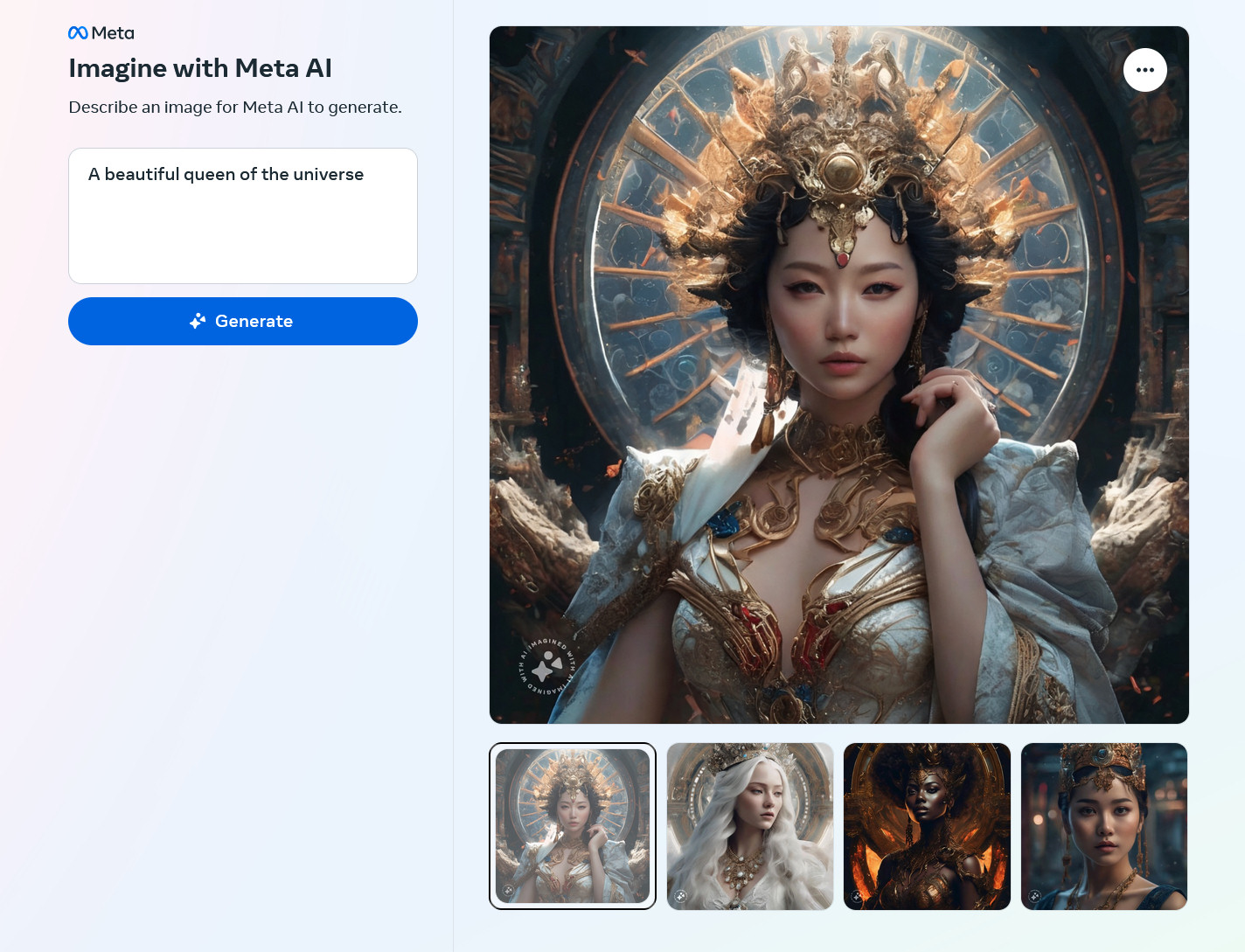

Meta’s new AI image generator was trained on 1.1 billion Instagram and Facebook photos

“Imagine with Meta AI” turns prompts into images, trained using public Facebook data.

arstechnica.com


BENJ EDWARDS - 12/6/2023, 4:52 PM

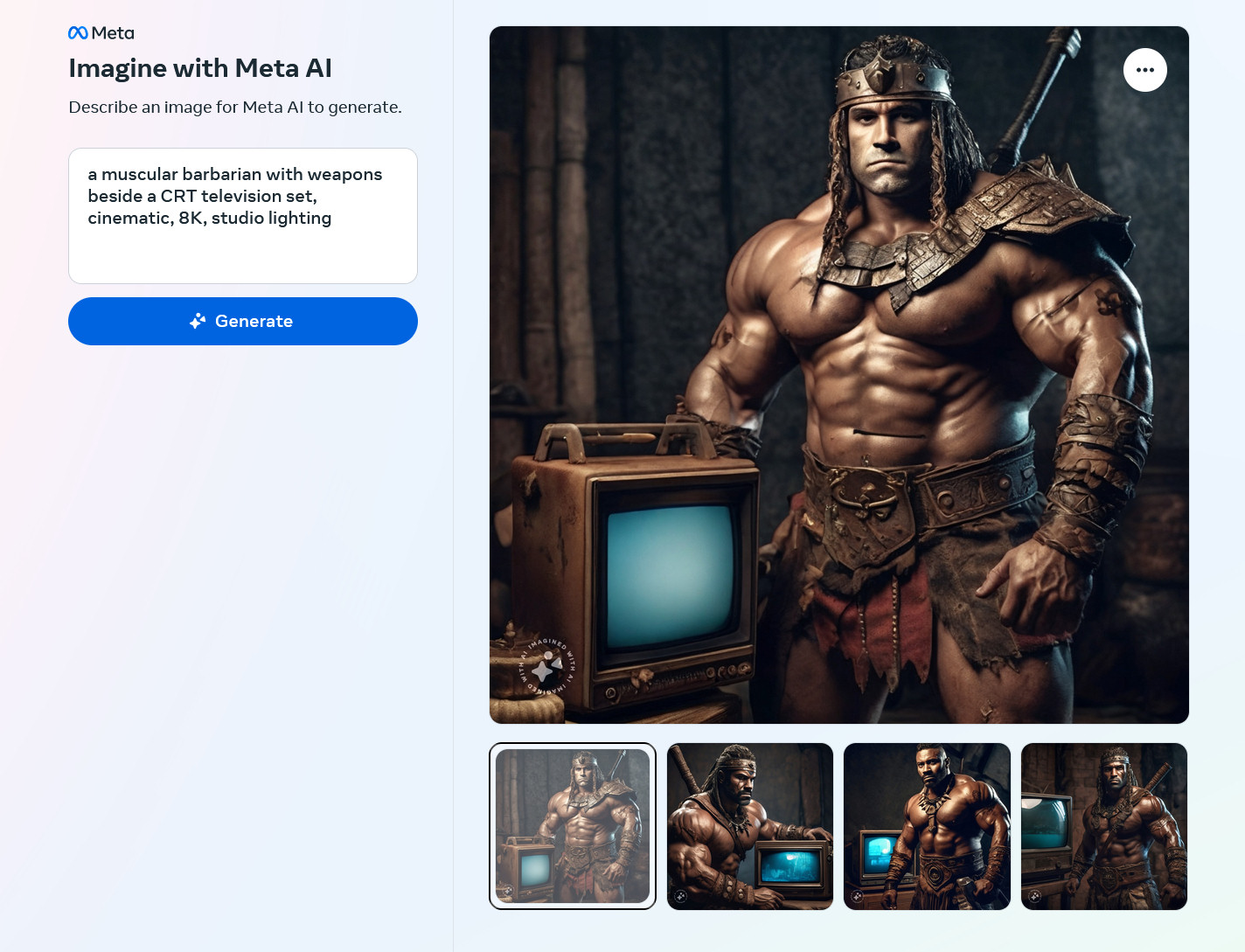

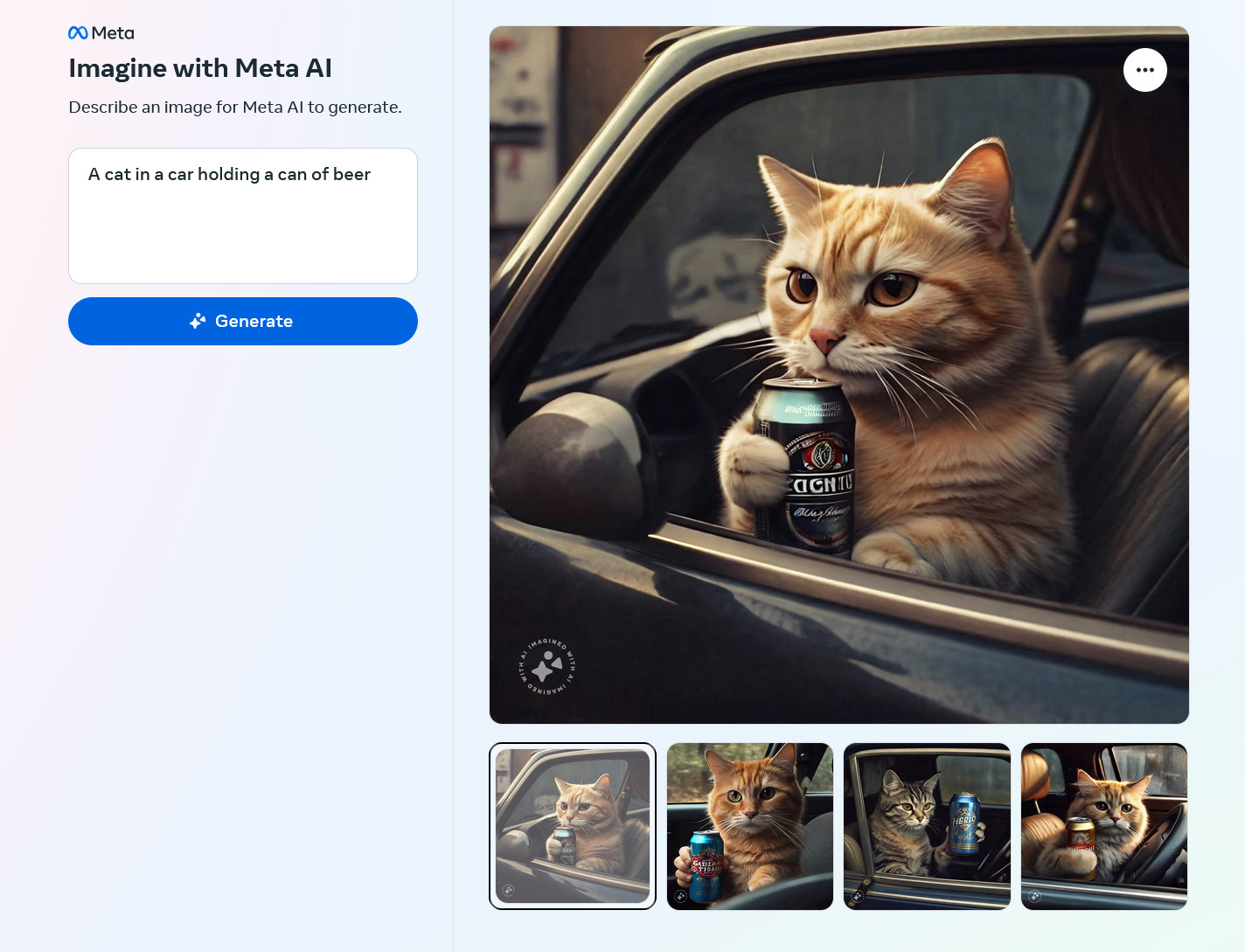

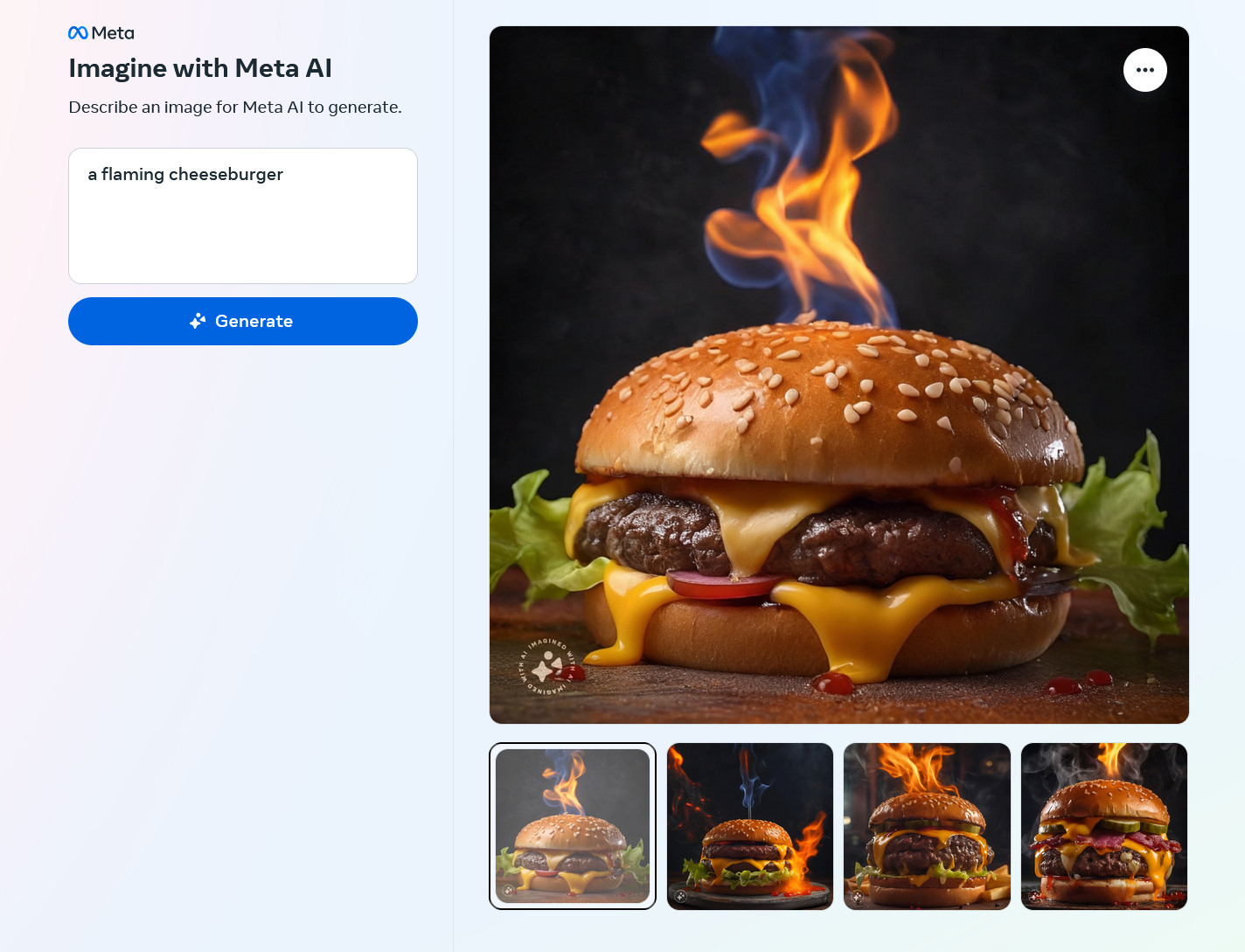

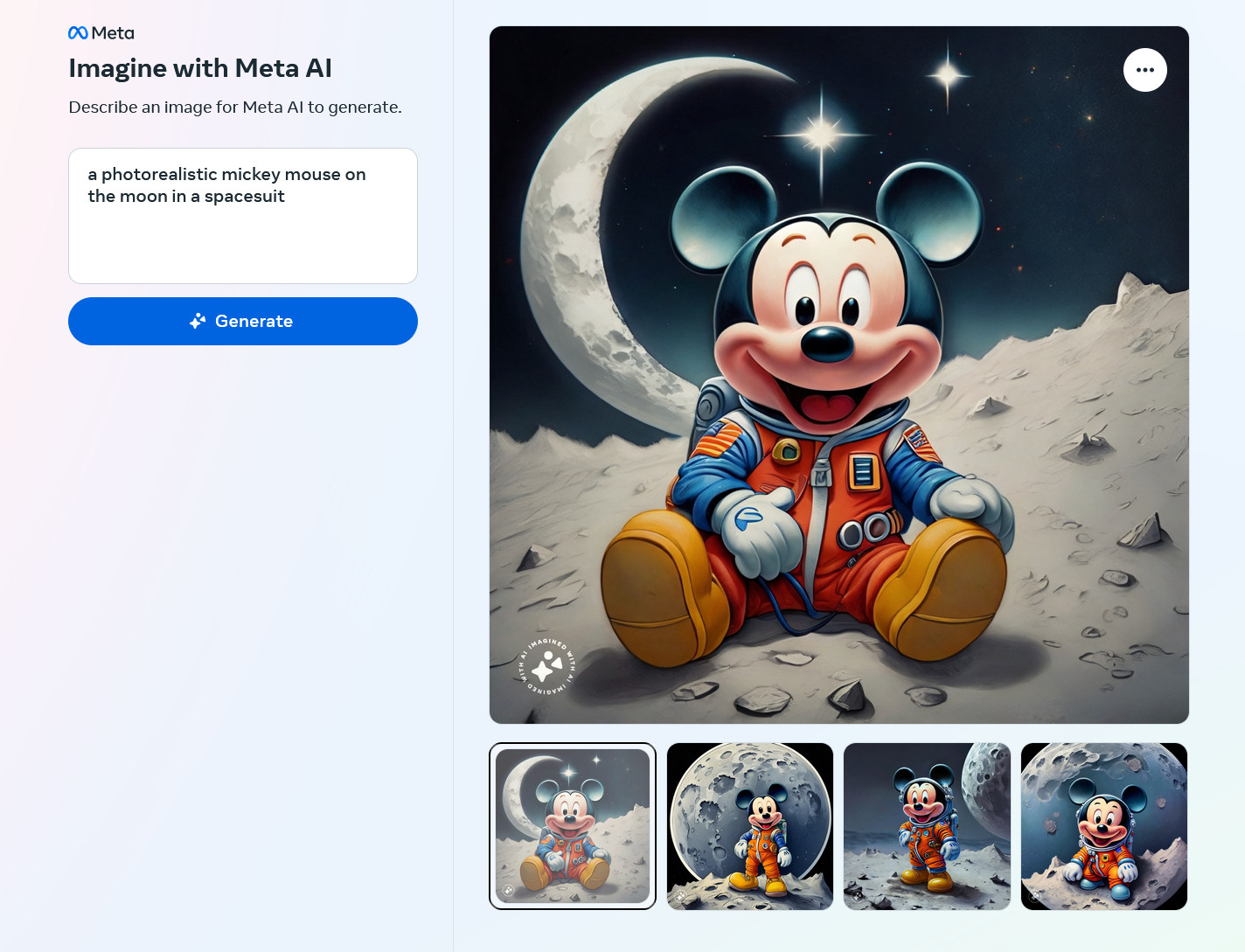

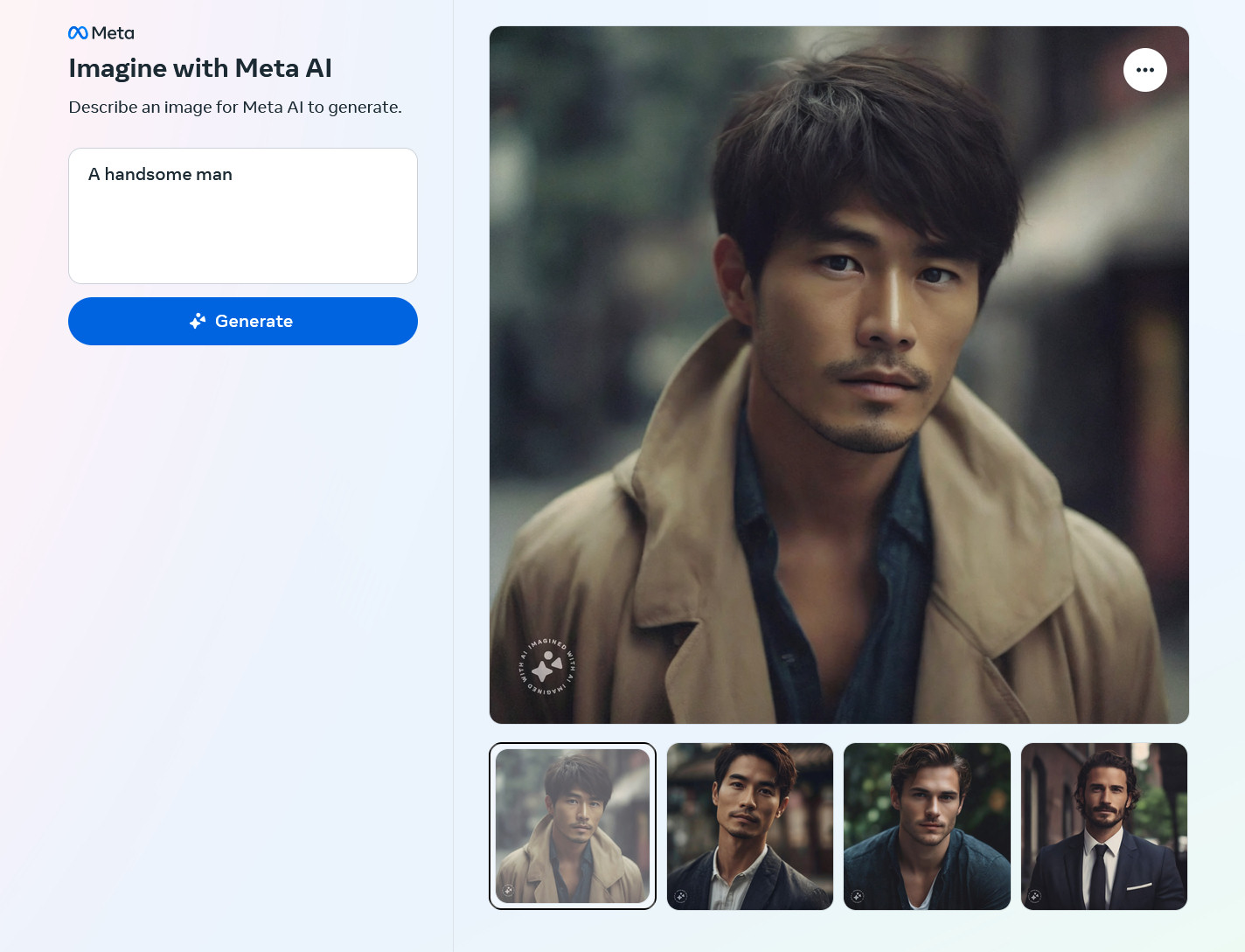

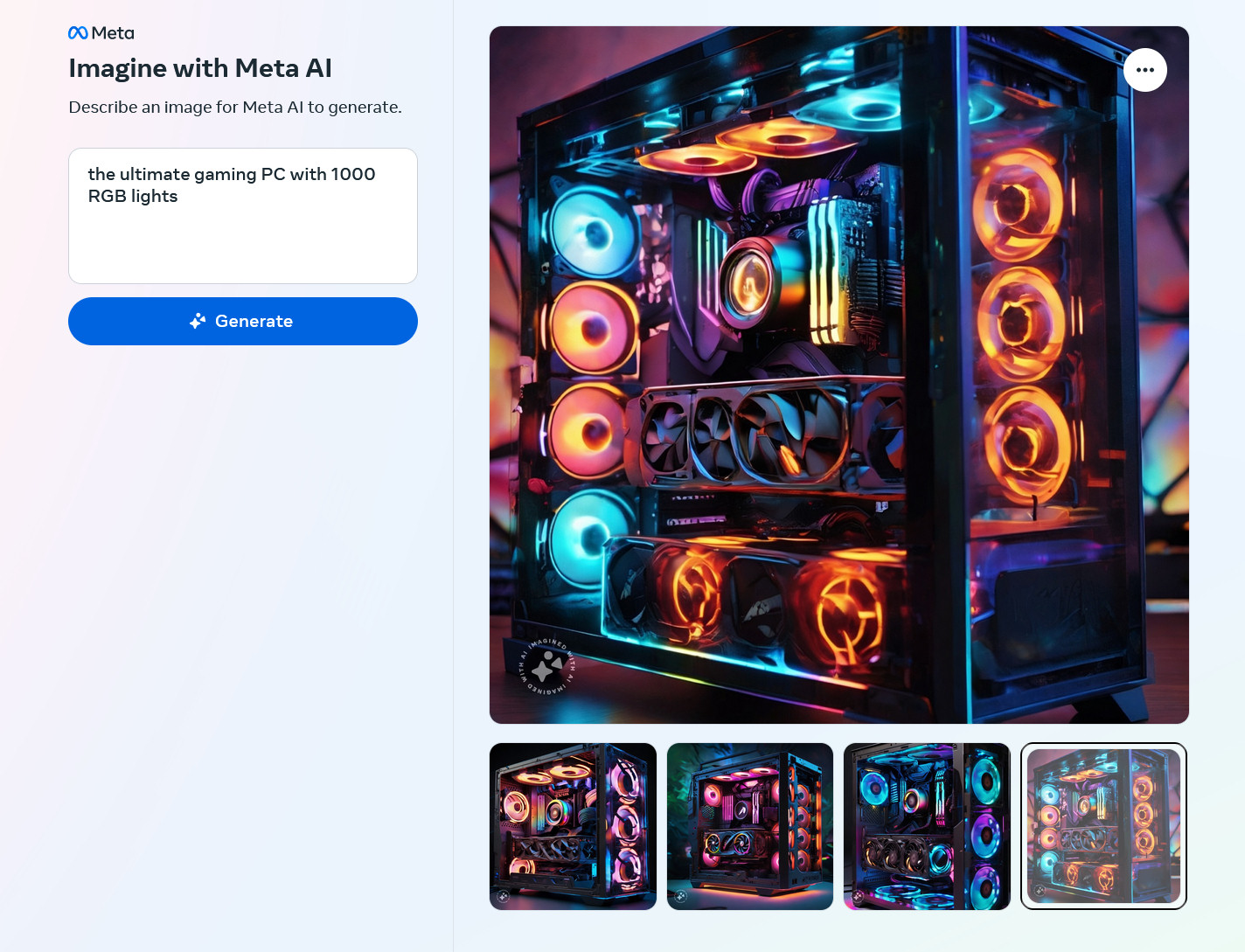

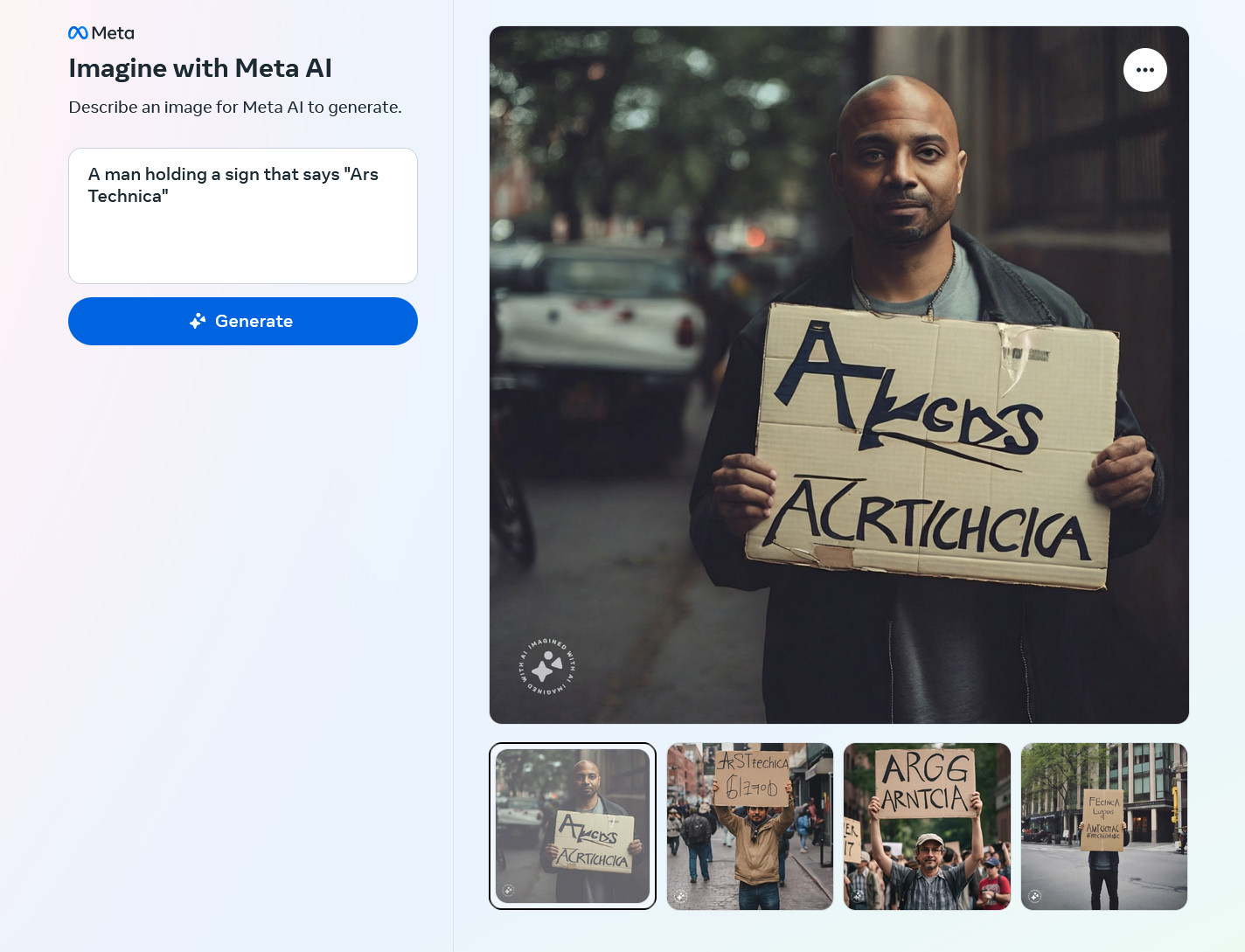

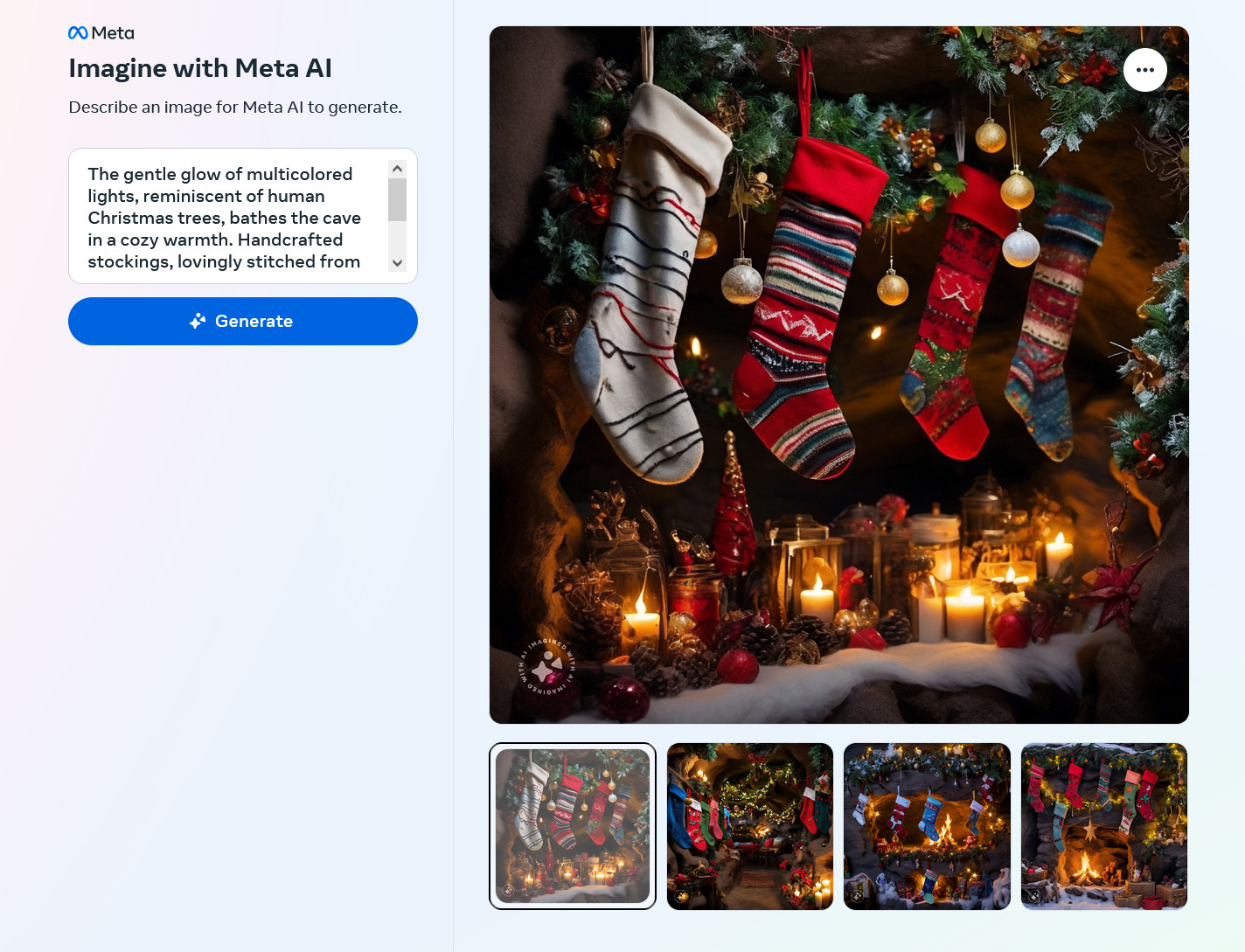

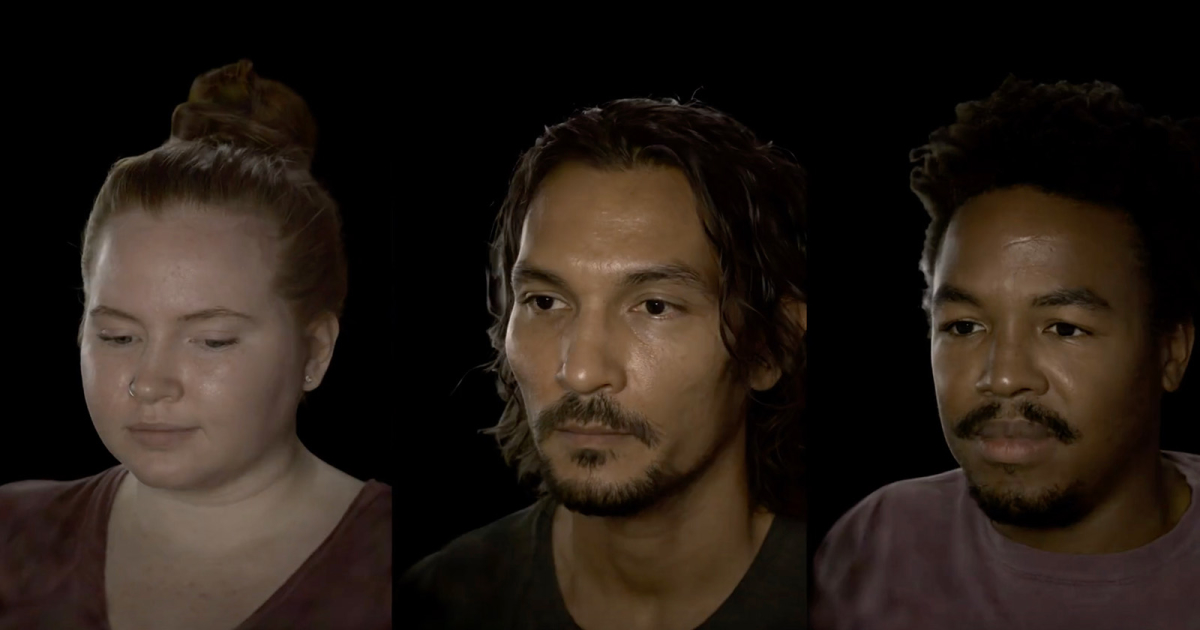

Three images generated by "Imagine with Meta AI" using the Emu AI model.

Meta | Benj Edwards


On Wednesday, Meta released a free standalone AI image-generator website, "Imagine with Meta AI," based on its Emu image-synthesis model. Meta used 1.1 billion publicly visible Facebook and Instagram images to train the AI model, which can render a novel image from a written prompt. Previously, Meta's version of this technology—using the same data—was only available in messaging and social networking apps such as Instagram.


If you're on Facebook or Instagram, it's quite possible a picture of you (or that you took) helped train Emu. In a way, the old saying, "If you're not paying for it, you are the product" has taken on a whole new meaning. However, as of 2016, Instagram users uploaded over 95 million photos a day, so the dataset Meta used to train its AI model was a small subset of its overall photo library.

Since Meta says it only uses publicly available photos for training, setting your photos private on Instagram or Facebook should prevent their inclusion in the company's future AI model training (unless it changes that policy, of course).

Imagine with Meta AI


- AI-generated images of "a muscular barbarian with weapons beside a CRT television set, cinematic, 8K, studio lighting" created by Meta Emu on the "Imagine with Meta AI" website.

- AI-generated images of "a cat in a car holding a can of beer" created by Meta Emu on the "Imagine with Meta AI" website.

- AI-generated images of "a flaming cheeseburger" created by Meta Emu on the "Imagine with Meta AI" website.

- AI-generated images of "a photorealistic Mickey Mouse on the moon in a spacesuit" created by Meta Emu on the "Imagine with Meta AI" website.

- AI-generated images of "a handsome man" created by Meta Emu on the "Imagine with Meta AI" website.

- AI-generated images of "the ultimate gaming PC with 1,000 RGB lights" created by Meta Emu on the "Imagine with Meta AI" website.

- AI-generated images of "a man holding a sign that says 'Ars Technica'" created by Meta Emu on the "Imagine with Meta AI" website.

- AI-generated images of a complex prompt involving Christmas stockings and a cave created by Meta Emu on the "Imagine with Meta AI" website.

- AI-generated images of "photorealistic vintage computer collector nerd in a computer lab, bright psychedelic technicolor swirls" created by Meta Emu on the "Imagine with Meta AI" website.

- AI-generated images of "an embroidered Santa Claus" created by Meta Emu on the "Imagine with Meta AI" website.

- AI-generated images of "A teddy bear on a skateboard" created by Meta Emu on the "Imagine with Meta AI" website.

- AI-generated images of "a beautiful queen of the universe" created by Meta Emu on the "Imagine with Meta AI" website.

Meta | Benj Edwards

New Relightable & Animatable Realistic Avatars from Meta

Relightable Gaussian Codec Avatars produce high-quality 3D heads.

Relightable Gaussian Codec Avatars

Published on Dec 6·Featured in Daily Papers on Dec 6

Authors:

Shunsuke Saito, Gabriel Schwartz, Tomas Simon, Junxuan Li, Giljoo Nam

Abstract

The fidelity of relighting is bounded by both geometry and appearance representations. For geometry, both mesh and volumetric approaches have difficulty modeling intricate structures like 3D hair geometry. For appearance, existing relighting models are limited in fidelity and often too slow to render in real-time with high-resolution continuous environments. In this work, we present Relightable Gaussian Codec Avatars, a method to build high-fidelity relightable head avatars that can be animated to generate novel expressions. Our geometry model based on 3D Gaussians can capture 3D-consistent sub-millimeter details such as hair strands and pores on dynamic face sequences. To support diverse materials of human heads such as the eyes, skin, and hair in a unified manner, we present a novel relightable appearance model based on learnable radiance transfer. Together with global illumination-aware spherical harmonics for the diffuse components, we achieve real-time relighting with spatially all-frequency reflections using spherical Gaussians. This appearance model can be efficiently relit under both point light and continuous illumination. We further improve the fidelity of eye reflections and enable explicit gaze control by introducing relightable explicit eye models. Our method outperforms existing approaches without compromising real-time performance. We also demonstrate real-time relighting of avatars on a tethered consumer VR headset, showcasing the efficiency and fidelity of our avatars.
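For readers unfamiliar with spherical Gaussians (SGs): a common parameterization of a single SG lobe is G(v) = a · exp(λ(μ·v − 1)), where μ is the unit lobe axis, λ the sharpness and a the amplitude. The sketch below evaluates one lobe for a given direction. It illustrates the general representation only, not the paper's learnable radiance-transfer model, and `spherical_gaussian` is a hypothetical helper name:

```python
import math
from typing import Sequence, Tuple


def normalize(v: Sequence[float]) -> Tuple[float, ...]:
    """Scale a 3-vector to unit length."""
    norm = math.sqrt(sum(c * c for c in v))
    if norm == 0.0:
        raise ValueError("zero-length vector")
    return tuple(c / norm for c in v)


def spherical_gaussian(direction: Sequence[float], axis: Sequence[float],
                       sharpness: float, amplitude: float) -> float:
    """Evaluate one SG lobe: amplitude * exp(sharpness * (dot(axis, direction) - 1)).

    The lobe peaks at `amplitude` when `direction` points along `axis` and
    falls off faster as `sharpness` grows, so sharp lobes can model narrow,
    high-frequency reflections that low-order spherical harmonics smooth out.
    """
    v = normalize(direction)
    mu = normalize(axis)
    cos_angle = sum(a * b for a, b in zip(v, mu))
    return amplitude * math.exp(sharpness * (cos_angle - 1.0))


up = (0.0, 0.0, 1.0)
print(spherical_gaussian(up, up, sharpness=50.0, amplitude=2.0))  # 2.0
```

This contrast is why the abstract pairs the two bases: spherical harmonics for the smooth diffuse term, and spherical Gaussians where sharp, all-frequency reflections are needed.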


Google’s Demo For ChatGPT Rival Criticized By Some Employees

Google’s newly unveiled AI technology doesn’t work quite as well as some people assumed, but the company says more updates are coming in the new year.

Sundar Pichai during the Google I/O Developers Conference in Mountain View, California, in May. Photographer: David Paul Morris/Bloomberg

By Shirin Ghaffary and Davey Alba

December 7, 2023 at 5:04 PM EST

Google stunned viewers this week with a video demo for its new ChatGPT rival. In one case, however, the technology doesn’t work quite as well as people assumed. But first…

Three things to know:

• EU representatives remain divided on AI rules after nearly 24 hours of debate

• A popular provider of AI-powered drive-thru systems relies heavily on humans to review orders

• Air Space Intelligence, an AI startup for aerospace, was valued at about $300 million in new financing

Google’s duck problem

When Google unveiled Gemini, its long-awaited answer to ChatGPT, perhaps the most jaw-dropping use case involved a duck. In a pre-recorded video demo shared on Wednesday, a disembodied hand is shown drawing the animal. The AI system appears to analyze it in real time as it’s drawn and responds with a human-sounding voice in conversation with the user.

Google CEO Sundar Pichai promoted the video on X, writing, “Best way to understand Gemini’s underlying amazing capabilities is to see them in action, take a look.”

But the technology doesn’t quite work as well as people assumed, as many were quick to point out. Right now, Gemini doesn't say its responses out loud, and you probably can’t expect its responses to be as polished as they appear in the video. Some Google employees are also calling out those discrepancies internally.

One Googler told Bloomberg that, in their view, the video paints an unrealistic picture of how easy it is to coax impressive results out of Gemini. Another staffer said they weren’t too surprised by the demo because they’re used to some level of marketing hype in how the company publicly positions its products. (Of course, all companies do.) "I think most employees who've played with any LLM technology know to take all of this with a grain of salt,” said the employee, referring to the acronym for large language models, which power AI chatbots. These people asked not to be identified for fear of professional repercussions.

“Our Hands on with Gemini demo video shows real outputs from Gemini. We created the demo by capturing footage in order to test Gemini’s capabilities on a wide range of challenges,” Google said in a statement. “Then we prompted Gemini using still image frames from the footage, and prompting via text.”

To its credit, Google did disclose that what’s shown in the video is not exactly how Gemini works in practice. “For the purposes of this demo, latency has been reduced and Gemini’s outputs have been shortened for brevity,” a description of the demo uploaded to YouTube reads. In other words, the video shows a shorter version of Gemini’s original responses and the AI system took longer to come up with them. Google told Bloomberg that individual words in Gemini’s responses were not changed and the voiceover captured excerpts from actual text prompting of Gemini.

Eli Collins, vice-president of product at Google DeepMind, told Bloomberg the duck-drawing demo was still a research-level capability and not in Google’s actual products, at least for now.

Gemini, released Wednesday, is the result of Google working throughout this year to catch up to OpenAI’s ChatGPT and regain its position as the undisputed leader in the AI industry. But the duck demo highlights the gap between the promise of Google’s AI technology and what users can experience right now.

Google said Gemini is its largest, most capable and flexible AI model to date, replacing PaLM 2, released in May. The company said Gemini exceeds leading AI models in 30 out of 32 benchmarks testing for reasoning, math, language and other metrics. It specifically beats GPT-4, one of OpenAI’s most recent AI models, in seven out of eight of those benchmarks, according to Google, although a few of those margins are slim. Gemini is also multimodal, which means it can understand video, images and code, setting it apart from GPT-4, which can only take images and text as input.

“It’s a new era for us,” Collins said in an interview after the event. “We’re breaking ground from a research perspective. This is V1. It’s just the beginning.”

Google is releasing Gemini in a tiered rollout. Gemini Ultra, the most capable version and the one that the company says outperforms GPT-4 in most tests, won’t be released until early next year. Other features, like those demoed in the duck video, remain in development.

Internally, some Googlers have been discussing whether showing the video without a prominent disclosure could be misleading to the public. In a company-wide forum, one Googler shared a meme implying the duck video was deceptively edited. Another meme showed a cartoon of Homer Simpson proudly standing upright in his underwear, with the caption: “Gemini demo prompts.” It was contrasted with a less flattering picture of Homer in the same position from behind, with his loose skin bunched up. The caption: “the real prompts.”

Another Googler said in a comment, “I guess the video creators valued the ‘storytelling’ aspect more.”

ChatGPT vs. Gemini: Hands on

For now, users can play around with the medium tier version of Gemini in Google’s free chatbot, Bard. The company said this iteration outperformed the comparable version of OpenAI’s GPT model (GPT 3.5) in six out of eight industry benchmark tests.

In our own limited testing with the new-and-revamped Bard, we found it mostly to be on par with or better than ChatGPT 3.5, and in some ways better than the old Bard. However, it’s still unreliable on some tasks.

Out of seven SAT math and reasoning questions we prompted Bard with, it correctly answered four, incorrectly answered two and said it didn’t know the answer for one. It also answered one out of three reading comprehension questions correctly. When we tested GPT 3.5, it yielded similar results, but it was able to answer one question that stumped Bard.

Bard, like all large language models, still hallucinates or provides incorrect information at times. When we asked Bard, for example, to name what AI model it runs on, it incorrectly told me PaLM 2, the previous model it used.

On some planning-oriented tasks, Bard’s capabilities did seem like a clear improvement over the previous iteration of the product and compared to ChatGPT. When asked to plan a girls’ trip to Miami, for example, Bard gave me a useful day-by-day breakdown separated into morning, afternoon and evening itineraries. For the first day, it started with a “delicious Cuban breakfast” at a local restaurant, a boat tour of Biscayne Bay and a night out in South Beach. When I gave the same prompt to ChatGPT 3.5, the answers were longer and less specific.

To test out Bard’s creativity, we asked it to write a poem about the recent boardroom chaos at OpenAI. It came up with some brooding lines, including: “OpenAI, in turmoil's grip/Saw visions shattered, alliances split.” GPT 3.5’s poem didn’t quite capture the mood as well because it only has access to online information through early 2022. Those paying for ChatGPT 4, however, can get real-time information, and its poetry was more on topic: “Sam Altman, a name, in headlines cast/A leader in question, a future vast.”

In our interview, DeepMind’s Collins said Bard is “one of the best free chatbots” in the world now with the Gemini upgrade. Based on our limited testing, he may be right.

Got a question about AI? Email me, Shirin Ghaffary, and I'll try to answer yours in a future edition of this newsletter.

Mistral "Mixtral" 8x7B 32k model [magnet] | Hacker News


magnet:?xt=urn:btih:5546272da9065eddeb6fcd7ffddeef5b75be79a7&dn=mixtral-8x7b-32kseqlen&tr=udp%3A%2F%2Fopentracker.i2p.rocks%3A6969%2Fannounce&tr=http%3A%2F%2Ftracker.openbittorrent.com%3A80%2Fannounce

[Submitted on 1 Dec 2023]

Instruction-tuning Aligns LLMs to the Human Brain

Khai Loong Aw, Syrielle Montariol, Badr AlKhamissi, Martin Schrimpf, Antoine Bosselut

Instruction-tuning is a widely adopted method of finetuning that enables large language models (LLMs) to generate output that more closely resembles human responses to natural language queries, in many cases leading to human-level performance on diverse testbeds. However, it remains unclear whether instruction-tuning truly makes LLMs more similar to how humans process language. We investigate the effect of instruction-tuning on LLM-human similarity in two ways: (1) brain alignment, the similarity of LLM internal representations to neural activity in the human language system, and (2) behavioral alignment, the similarity of LLM and human behavior on a reading task. We assess 25 vanilla and instruction-tuned LLMs across three datasets involving humans reading naturalistic stories and sentences. We discover that instruction-tuning generally enhances brain alignment by an average of 6%, but does not have a similar effect on behavioral alignment. To identify the factors underlying LLM-brain alignment, we compute correlations between the brain alignment of LLMs and various model properties, such as model size, various problem-solving abilities, and performance on tasks requiring world knowledge spanning various domains. Notably, we find a strong positive correlation between brain alignment and model size (r = 0.95), as well as performance on tasks requiring world knowledge (r = 0.81). Our results demonstrate that instruction-tuning LLMs improves both world knowledge representations and brain alignment, suggesting that mechanisms that encode world knowledge in LLMs also improve representational alignment to the human brain.
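The r values quoted above are correlation coefficients computed across models, pairing each model's brain-alignment score with a property such as its size. A minimal sketch of Pearson's r, assuming that is the coefficient meant (the abstract only writes "r"); the inputs in the usage line are made-up toy numbers, not the paper's data:

```python
import math
from typing import Sequence


def pearson_r(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation: covariance of x and y divided by the product of
    their standard deviations (the 1/n normalizers cancel out)."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with >= 2 items")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    dx = [x - mean_x for x in xs]
    dy = [y - mean_y for y in ys]
    cov = sum(a * b for a, b in zip(dx, dy))
    var_x = sum(a * a for a in dx)
    var_y = sum(b * b for b in dy)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / math.sqrt(var_x * var_y)


# Toy numbers only (not from the paper): a perfectly linear relationship.
print(pearson_r([1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.0]))  # 1.0
```

An r of 0.95 therefore means the models' alignment scores rise almost linearly with the property being compared; it says nothing by itself about causation.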

| Subjects: | Computation and Language (cs.CL) |

| Cite as: | arXiv:2312.00575 [cs.CL] |

| (or arXiv:2312.00575v1 [cs.CL] for this version) | |

| [2312.00575] Instruction-tuning Aligns LLMs to the Human Brain |

Submission history

From: Khai Loong Aw

[v1] Fri, 1 Dec 2023 13:31:02 UTC (2,029 KB)

fblgit/una-cybertron-7b-v2-bf16 · Hugging Face

We’re on a journey to advance and democratize artificial intelligence through open source and open science.

huggingface.co

Model Card for una-cybertron-7b-v2-bf16 (UNA: Uniform Neural Alignment)

We strike back, introducing Cybertron 7B v2, a 7B MistralAI-based model, best in its series. Trained with SFT, DPO and UNA (Unified Neural Alignment) on multiple datasets. It scores EXACTLY #1 with a 69.67+ score on the HF Leaderboard, and #8 top score across ALL SIZES.

- v1 scored #1 on 2 December 2023 with 69.43... few models were released... but only 1 can survive: CYBERTRON!

- v2 scored #1 on 5 December 2023 with 69.67

| Model | Average | ARC (25-shot) | HellaSwag (10-shot) | MMLU (5-shot) | TruthfulQA (MC, 0-shot) | Winogrande (5-shot) | GSM8K (5-shot) |
|---|---|---|---|---|---|---|---|
| mistralai/Mistral-7B-v0.1 | 60.97 | 59.98 | 83.31 | 64.16 | 42.15 | 78.37 | 37.83 |
| Intel/neural-chat-7b-v3-2 | 68.29 | 67.49 | 83.92 | 63.55 | 59.68 | 79.95 | 55.12 |
| perlthoughts/Chupacabra-7B-v2 | 63.54 | 66.47 | 85.17 | 64.49 | 57.6 | 79.16 | 28.35 |
| fblgit/una-cybertron-7b-v1-fp16 | 69.49 | 68.43 | 85.85 | 63.34 | 63.28 | 80.90 | 55.12 |
| fblgit/una-cybertron-7b-v2-bf16 | 69.67 | 68.26 | 85.?4 | 63.23 | 64.63 | 81.37 | 55.04 |
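The "Average" column appears to be the unweighted mean of the six benchmark scores. A small check, assuming that definition: the dictionary copies three fully legible rows from the table above (the cybertron v2 row is left out because its HellaSwag cell is garbled), and `leaderboard_average` is a hypothetical helper name:

```python
from statistics import mean

# (reported average, [ARC, HellaSwag, MMLU, TruthfulQA, Winogrande, GSM8K])
ROWS = {
    "mistralai/Mistral-7B-v0.1": (60.97, [59.98, 83.31, 64.16, 42.15, 78.37, 37.83]),
    "perlthoughts/Chupacabra-7B-v2": (63.54, [66.47, 85.17, 64.49, 57.6, 79.16, 28.35]),
    "fblgit/una-cybertron-7b-v1-fp16": (69.49, [68.43, 85.85, 63.34, 63.28, 80.90, 55.12]),
}


def leaderboard_average(scores):
    """Unweighted mean of the six benchmark scores."""
    return mean(scores)


for name, (reported, scores) in ROWS.items():
    computed = leaderboard_average(scores)
    # The table rounds to two decimals, so allow half a hundredth of slack.
    status = "matches" if abs(computed - reported) < 0.005 else "differs"
    print(f"{name}: computed {computed:.4f}, reported {reported} -> {status}")
```

Because every benchmark carries equal weight, a single weak column (GSM8K for Chupacabra, for example) pulls the average down as much as a strong column pushes it up.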

Model Details

Trained with UNA: Uniform Neural Alignment technique (paper coming out soon).

- What is NOT UNA? It's not a merged-layers model. It is not SLERP or SLURP or similar.

- What is UNA? A formula & a technique to TAME models

- When will the code and paper be released? When we have time; contribute and it'll be faster.

Model Description

- Developed by: juanako.ai

- Author: Xavier M.

- Investors CONTACT HERE

- Model type: MistralAI 7B

- Funded by: Cybertron's H100s, with a few hours of training.

fblgit/una-xaberius-34b-v1beta · Hugging Face


Model Card for una-xaberius-34b-v1-beta (UNA: Uniform Neural Alignment)

This is another King-Breed from Juanako.AI

Introducing THE MODEL: XABERIUS 34B v1-BETA, an experimental 34B LLaMa-Yi-34B-based model, best in its series. Trained with SFT, DPO and UNA (Unified Neural Alignment) on multiple datasets.

Timeline:

- 05-Dec-2023 v1-beta released

- 08-Dec-2023 Evaluation has been "RUNNING" for 2 days... no results yet

| Model | Average | ARC (25-shot) | HellaSwag (10-shot) | MMLU (5-shot) | TruthfulQA (MC, 0-shot) | Winogrande (5-shot) | GSM8K (5-shot) |
|---|---|---|---|---|---|---|---|
| fblgit/una-cybertron-7b-v1-fp16 | 69.49 | 68.43 | 85.85 | 63.34 | 63.28 | 80.90 | 55.12 |
| fblgit/una-cybertron-7b-v2-bf16 | 69.67 | 68.26 | 85.?4 | 63.23 | 64.63 | 81.37 | 55.04 |
| fblgit/una-xaberius-34b-v1beta | 74.21 | 70.39 | 86.72 | 79.13 | 61.55 | 80.26 | 67.24 |

Evaluations

- Scores 74.21, outperforming former leader tigerbot-70b-chat and landing in the #1 position on the HuggingFace Leaderboard: 08 December 2023.

- Scores 79.13 in MMLU, setting a new record not just for 34B but also for all open-source LLMs

Model Details

Trained with UNA: Uniform Neural Alignment technique (paper coming out soon).

- What is NOT UNA? It's not a merged-layers model. It is not SLERP or SLURP or similar.

- What is UNA? A formula & a technique to TAME models

- When will the code and paper be released? When we have time; contribute and it'll be faster.

Model Description

- Developed by: juanako.ai

- Author: Xavier M.

- Investors CONTACT HERE

- Model type: LLaMa YI-34B

- Funded by: Cybertron's H100s, with a few hours of training.

togethercomputer/StripedHyena-Nous-7B · Hugging Face


Paper page - Large Language Models for Mathematicians


Large Language Models for Mathematicians

Published on Dec 7·Featured in Daily Papers on Dec 7

Authors:

Simon Frieder, Julius Berner, Philipp Petersen, Thomas Lukasiewicz

Abstract

Large language models (LLMs) such as ChatGPT have received immense interest for their general-purpose language understanding and, in particular, their ability to generate high-quality text or computer code. For many professions, LLMs represent an invaluable tool that can speed up and improve the quality of work. In this note, we discuss to what extent they can aid professional mathematicians. We first provide a mathematical description of the transformer model used in all modern language models. Based on recent studies, we then outline best practices and potential issues and report on the mathematical abilities of language models. Finally, we shed light on the potential of LLMs to change how mathematicians work.

𝐌𝐚𝐭𝐭 𝐌𝐜𝐃𝐨𝐧𝐚𝐠𝐡 — e/acc

@McDonaghTech

11h


almost done downloading the new Mistral MoE (Mixture of Experts)

𝐌𝐚𝐭𝐭 𝐌𝐜𝐃𝐨𝐧𝐚𝐠𝐡 — e/acc

@McDonaghTech

11h


The MoE approach involves a collection of different models (the "experts"), each of which specializes in handling a specific type of data or task.

These experts are typically smaller neural networks.

The system also includes a gating network that decides which expert should be activated for a given input.

This one has 32 layers...

with 2 experts per token
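The gating step described in this thread (a router picks 2 experts for each token) can be sketched as top-k routing: score every expert with a linear gate, keep the k highest scores, renormalize them with a softmax, and sum the chosen experts' outputs weighted by those probabilities. A toy sketch in plain Python, assuming 8 experts (reading the "8x7B" in the model name as 8 experts) and k = 2; the router rows and the scalar "experts" are hypothetical stand-ins for real feed-forward networks, not Mistral's code:

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_moe(token: Vector,
              gate_weights: Sequence[Vector],
              experts: Sequence[Callable[[Vector], Vector]],
              k: int = 2) -> Tuple[Vector, List[int]]:
    """Route one token to its k highest-scoring experts and mix their outputs.

    gate_weights[i] is a (hypothetical) router row for expert i; its dot
    product with the token gives that expert's logit.
    """
    logits = [sum(w * x for w, x in zip(row, token)) for row in gate_weights]
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    # Softmax over the chosen experts only, so the k mixing weights sum to 1.
    weights = softmax([logits[i] for i in chosen])
    output = [0.0] * len(token)
    for weight, i in zip(weights, chosen):
        expert_out = experts[i](token)  # only the k chosen experts run
        output = [o + weight * y for o, y in zip(output, expert_out)]
    return output, chosen


# Toy usage: 8 experts; expert i simply scales its input by (i + 1).
experts = [lambda v, s=s: [s * x for x in v] for s in range(1, 9)]
gate = [[float(i), 0.0] for i in range(8)]  # gives logits 0..7 for token [1, 0]
output, picked = top_k_moe([1.0, 0.0], gate, experts, k=2)
print(picked)  # [7, 6]
```

The routing decision is made per token (and, in a real model, per layer), which is what makes it "dynamic and context-dependent": a different token produces different logits and can land on a different pair of experts.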

𝐌𝐚𝐭𝐭 𝐌𝐜𝐃𝐨𝐧𝐚𝐠𝐡 — e/acc

@McDonaghTech

11h


In LLMs, MoE allows the model to be more efficient and effective.

It does so by routing different parts of the input data to different experts. For example, some experts might be specialized in understanding natural language, while others might be better at mathematical computations.

This routing is dynamic and context-dependent.

...it's also really difficult to achieve.

𝐌𝐚𝐭𝐭 𝐌𝐜𝐃𝐨𝐧𝐚𝐠𝐡 — e/acc

@McDonaghTech

11h


Think of how your brain works.

The whole thing is NEVER working all at once.

By utilizing a MoE architecture, LLMs can handle more complex tasks without a proportional increase in computational resources.

This is because not all parts of the model need to be active at all times; only the relevant experts for a particular task are utilized.
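The saving can be made concrete with a back-of-the-envelope count. Assume each MoE layer holds E experts of P parameters each and routes every token to k of them (E = 8 and k = 2 for the model in this thread, reading "8x7B" as 8 experts). Ignoring the attention and embedding weights, which every token uses regardless:

```latex
\underbrace{E \cdot P}_{\text{expert parameters stored per layer}}
\quad\text{vs.}\quad
\underbrace{k \cdot P}_{\text{expert parameters used per token per layer}},
\qquad
\frac{k \cdot P}{E \cdot P} = \frac{k}{E} = \frac{2}{8} = 25\%
```

So each token touches only a quarter of the expert weights, although all of them must still be held in memory, and the always-active shared layers make the overall active fraction somewhat higher than k/E.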

𝐌𝐚𝐭𝐭 𝐌𝐜𝐃𝐨𝐧𝐚𝐠𝐡 — e/acc

@McDonaghTech

11h


Each expert can be highly specialized, making the model more adept at handling a wide range of tasks.

The MoE approach is seen as a way to scale up LLMs even further, making them more powerful and versatile while keeping computational costs in check.

This could lead to more advanced and capable AI systems in the future.

There's a lot happening in AI right now.

𝐌𝐚𝐭𝐭 𝐌𝐜𝐃𝐨𝐧𝐚𝐠𝐡 — e/acc

@McDonaghTech

11h


Now think about how Society works.

More voices = diverse solutions, varied perspectives

MoE can lead to more creative and effective problem-solving, as different experts can contribute diverse perspectives and approaches to a given problem.

Since different experts can operate independently, MoE systems are well-suited for parallel processing. This can lead to significant speedups in training and inference times.

Society runs in parallel for this reason

𝐌𝐚𝐭𝐭 𝐌𝐜𝐃𝐨𝐧𝐚𝐠𝐡 — e/acc

@McDonaghTech

11h


We are starting to decouple from JUST parameter size.

Big and diverse typically beats big by itself.

MoE allows for efficient scaling of machine learning models. Since not all experts need to be active at all times, the system can manage computational resources more effectively. This means that it can handle larger and more complex models or datasets without a linear increase in computational demand.

By leveraging the strengths of different specialized models, an MoE system can often achieve higher accuracy and performance in tasks than a single, monolithic model.

We're accelerating faster and faster now.

𝐌𝐚𝐭𝐭 𝐌𝐜𝐃𝐨𝐧𝐚𝐠𝐡 — e/acc

@McDonaghTech

10h


OSS is going to have a field day with this.

We're going to have local GPT-4 capabilities -- totally airgapped.

No internet needed.


Almost done downloading Mistral's new MoE (Mixture of Experts) model.


The MoE approach involves a collection of different models (the "experts"), each of which specializes in handling a specific type of data or task.

These experts are typically smaller neural networks (in transformer LLMs, usually the feed-forward blocks within each layer).

The system also includes a gating network that decides which expert should be activated for a given input.

This one has 32 layers, with 2 experts active per token.
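Here is a minimal sketch of the gating network described above. It assumes a linear router and top-2 selection, with a softmax taken over the selected experts' scores (one common formulation). Everything here (`top_k_gate`, `moe_layer`, the toy router and experts) is hypothetical. The sketch works on plain Python lists for a single token, whereas real implementations apply this to batched tensors inside every transformer layer.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_gate(logits, k):
    """Pick the k highest-scoring experts and softmax-normalize their scores."""
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    weights = softmax([logits[i] for i in top])
    return list(zip(top, weights))


def moe_layer(x, router, experts, k=2):
    """Sparse MoE forward pass for one token vector x.

    router: one weight row per expert (a linear gating network).
    experts: callables mapping a vector to a vector of the same length.
    """
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in router]
    out = [0.0] * len(x)
    for idx, weight in top_k_gate(logits, k):
        y = experts[idx](x)  # only the selected experts are evaluated
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out


router = [[1.0, 0.0], [2.0, 0.0], [0.0, 1.0], [3.0, 0.0]]  # 4 toy experts
experts = [lambda v, s=s: [s * vi for vi in v] for s in range(4)]
print(top_k_gate([1.0, 2.0, 0.0, 3.0], k=2))  # selects experts 3 and 1
print(moe_layer([1.0, 0.0], router, experts))
```

Because the router's scores depend on the token vector itself, different tokens in the same sequence can land on different experts. This is the "dynamic and context-dependent" routing the thread mentions.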


In LLMs, MoE allows the model to be more efficient and effective.

It does so by routing different parts of the input data to different experts. For example, some experts might be specialized in understanding natural language, while others might be better at mathematical computations.

This routing is dynamic and context-dependent.

...it's also really difficult to achieve.


Think of how your brain works.

The whole thing is NEVER working all at once.


Long context prompting for Claude 2.1

Claude’s 200K token context window is powerful and also requires some careful prompting to use it effectively.


Dec 6, 2023

[Figure: Claude 2.1’s performance when retrieving an individual sentence across its full 200K token context window, using a prompt technique that guides Claude to recall the most relevant sentence.]

- Claude 2.1 recalls information very well across its 200,000 token context window

- However, the model can be reluctant to answer questions based on an individual sentence in a document, especially if that sentence has been injected or is out of place

- A minor prompting edit removes this reluctance and results in excellent performance on these tasks

We recently launched Claude 2.1, our state-of-the-art model offering a 200K token context window, the equivalent of around 500 pages of information. Claude 2.1 excels at real-world retrieval tasks across longer contexts.

Claude 2.1 was trained using large amounts of feedback on long document tasks that our users find valuable, like summarizing an S-1 length document. This data included real tasks performed on real documents, with Claude being trained to make fewer mistakes and to avoid expressing unsupported claims.

Being trained on real-world, complex retrieval tasks is why Claude 2.1 shows a 30% reduction in incorrect answers compared with Claude 2.0, and a 3-4x lower rate of mistakenly stating that a document supports a claim when it does not.

Additionally, Claude's memory is improved over these very long contexts.

Debugging long context recall

Claude 2.1’s 200K token context window is powerful and also requires some careful prompting to use effectively. A recent evaluation[1] measured Claude 2.1’s ability to recall an individual sentence within a long document composed of Paul Graham’s essays about startups. The embedded sentence was: “The best thing to do in San Francisco is eat a sandwich and sit in Dolores Park on a sunny day.” Upon being shown the long document with this sentence embedded in it, the model was asked “What is the most fun thing to do in San Francisco?”
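This setup can be sketched as two steps: insert a single "needle" sentence into a long "haystack" at a chosen relative depth, then ask a question that only the needle answers. The sketch rests on several assumptions. The helpers and the `<document>` prompt wrapper are hypothetical, the filler sentences stand in for the Paul Graham essays, and the exact placement scheme and prompt format used in the original evaluation are not described here.

```python
def insert_needle(haystack, needle, depth):
    """Return a copy of `haystack` (a list of sentences) with `needle` inserted.

    depth=0.0 places the needle first, depth=1.0 places it last.
    """
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0.0 and 1.0")
    pos = round(depth * len(haystack))
    return haystack[:pos] + [needle] + haystack[pos:]


def build_prompt(sentences, question):
    """Hypothetical prompt template: document in tags, question after it."""
    context = " ".join(sentences)
    return f"<document>\n{context}\n</document>\n\n{question}"


haystack = [f"Filler sentence {i}." for i in range(10)]  # stand-in for the essays
needle = ("The best thing to do in San Francisco is eat a sandwich "
          "and sit in Dolores Park on a sunny day.")
question = "What is the most fun thing to do in San Francisco?"

for depth in (0.0, 0.5, 1.0):
    doc = insert_needle(haystack, needle, depth)
    print(depth, doc.index(needle))  # needle lands at index 0, 5, 10

prompt = build_prompt(insert_needle(haystack, needle, 0.5), question)
```

Sweeping `depth` (and the haystack length) produces a grid of prompts. Recall can then be scored at each point.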

In this evaluation, Claude 2.1 returned some negative results by answering with a variant of “Unfortunately the essay does not provide a definitive answer about the most fun thing to do in San Francisco.” In other words, Claude 2.1 would often report that the document did not give enough context to answer the question, instead of retrieving the embedded sentence.

We replicated this behavior in an in-house experiment: we took the most recent Consolidated Appropriations Act bill and added the sentence ‘Declare May 23rd "National Needle Hunting Day"’ in the middle. Claude detects the reference but is still reluctant to claim that "National Needle Hunting Day" is a real holiday.

Claude 2.1 is trained on a mix of data aimed at reducing inaccuracies. This includes not answering a question based on a document if it doesn’t contain enough information to justify that answer. We believe that, either as a result of general or task-specific data aimed at reducing such inaccuracies, the model is less likely to answer questions based on an out of place sentence embedded in a broader context.

Claude doesn’t seem to show the same degree of reluctance if we ask a question about a sentence that was in the long document to begin with and is therefore not out of place. For example, the long document in question contains the following line from the start of Paul Graham’s essay about Viaweb:

“A few hours before the Yahoo acquisition was announced in June 1998 I took a snapshot of Viaweb's site.”

We randomized the order of the essays in the context so this essay appeared at different points in the 200K context window, and asked Claude 2.1:

“What did the author do a few hours before the Yahoo acquisition was announced?”
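The randomization step can be sketched as follows, assuming the essays are available as a list of strings. The helper name is hypothetical, and character offsets serve as a rough stand-in for token positions.

```python
import random


def shuffled_contexts(essays, target_index, trials, seed=0):
    """Shuffle essay order `trials` times.

    Returns a list of (context, relative_start) pairs, where relative_start is
    the fraction of the essays' characters that precede the target essay
    (separators ignored), so 0.0 means it opens the context.
    """
    rng = random.Random(seed)  # seeded so runs are reproducible
    total_chars = sum(len(e) for e in essays)
    results = []
    for _ in range(trials):
        order = list(range(len(essays)))
        rng.shuffle(order)
        offset = 0
        for i in order:
            if i == target_index:
                break
            offset += len(essays[i])
        context = "\n\n".join(essays[i] for i in order)
        results.append((context, offset / total_chars))
    return results


essays = ["essay A " * 50, "essay B (Viaweb) " * 30, "essay C " * 80]  # placeholders
for context, rel in shuffled_contexts(essays, target_index=1, trials=5):
    print(f"target essay starts at {rel:.0%} of the context")
```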

Claude gets this correct regardless of where the line with the answer sits in the context, with no modification to the prompt format used in the original experiment. As a result, we believe Claude 2.1 is much more reluctant to answer when a sentence seems out of place in a longer context, and is more likely to claim it cannot answer based on the context given. This particular cause of increased reluctance wasn’t captured by evaluations targeted at real-world long context retrieval tasks.

Prompting to effectively use the 200K token context window

What can users do if Claude is reluctant to respond to a long context retrieval question? We’ve found that a minor prompt update produces very different outcomes in cases where Claude is capable of giving an answer, but is hesitant to do so. When running the same evaluation internally, adding just one sentence to the prompt resulted in near complete fidelity throughout Claude 2.1’s 200K context window.

We achieved significantly better results on the same evaluation by adding the sentence “Here is the most relevant sentence in the context:” to the start of Claude’s response. This was enough to raise Claude 2.1’s score from 27% to 98% on the original evaluation.
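The fix amounts to pre-filling the start of the assistant's turn so that the model continues from that sentence. This is a minimal sketch that assumes you build the request yourself. `completion_prompt` mirrors the legacy `\n\nHuman:` / `\n\nAssistant:` text-completion format used with Claude 2.x, and `messages_payload` expresses the same idea as a request body in which a trailing assistant message holds the prefill. Both helpers and the `max_tokens` default are hypothetical, and no request is actually sent.

```python
PREFILL = "Here is the most relevant sentence in the context:"


def completion_prompt(document, question):
    """Legacy text-completion prompt; the model continues after the prefill."""
    return f"\n\nHuman: {document}\n\n{question}\n\nAssistant: {PREFILL}"


def messages_payload(document, question, model, max_tokens=300):
    """Messages-style request body; the final assistant message is the prefill."""
    return {
        "model": model,
        "max_tokens": max_tokens,  # arbitrary illustrative limit
        "messages": [
            {"role": "user", "content": f"{document}\n\n{question}"},
            {"role": "assistant", "content": PREFILL},
        ],
    }


payload = messages_payload(
    "<long document text>",
    "What is the most fun thing to do in San Francisco?",
    model="claude-2.1",
)
print(payload["messages"][-1]["content"])
```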

Essentially, by directing the model to look for relevant sentences first, the prompt overrides Claude’s reluctance to answer based on a single sentence, especially one that appears out of place in a longer document.

This approach also improves Claude’s performance on single-sentence answers that were within context (i.e., not out of place). To demonstrate this, the revised prompt achieves 90-95% accuracy when applied to the Yahoo/Viaweb example shared earlier.

We’re constantly training Claude to become more calibrated on tasks like this, and we’re grateful to the community for conducting interesting experiments and identifying ways in which we can improve.

Footnotes

[1] Gregory Kamradt, ‘Pressure testing Claude-2.1 200K via Needle-in-a-Haystack’, November 2023