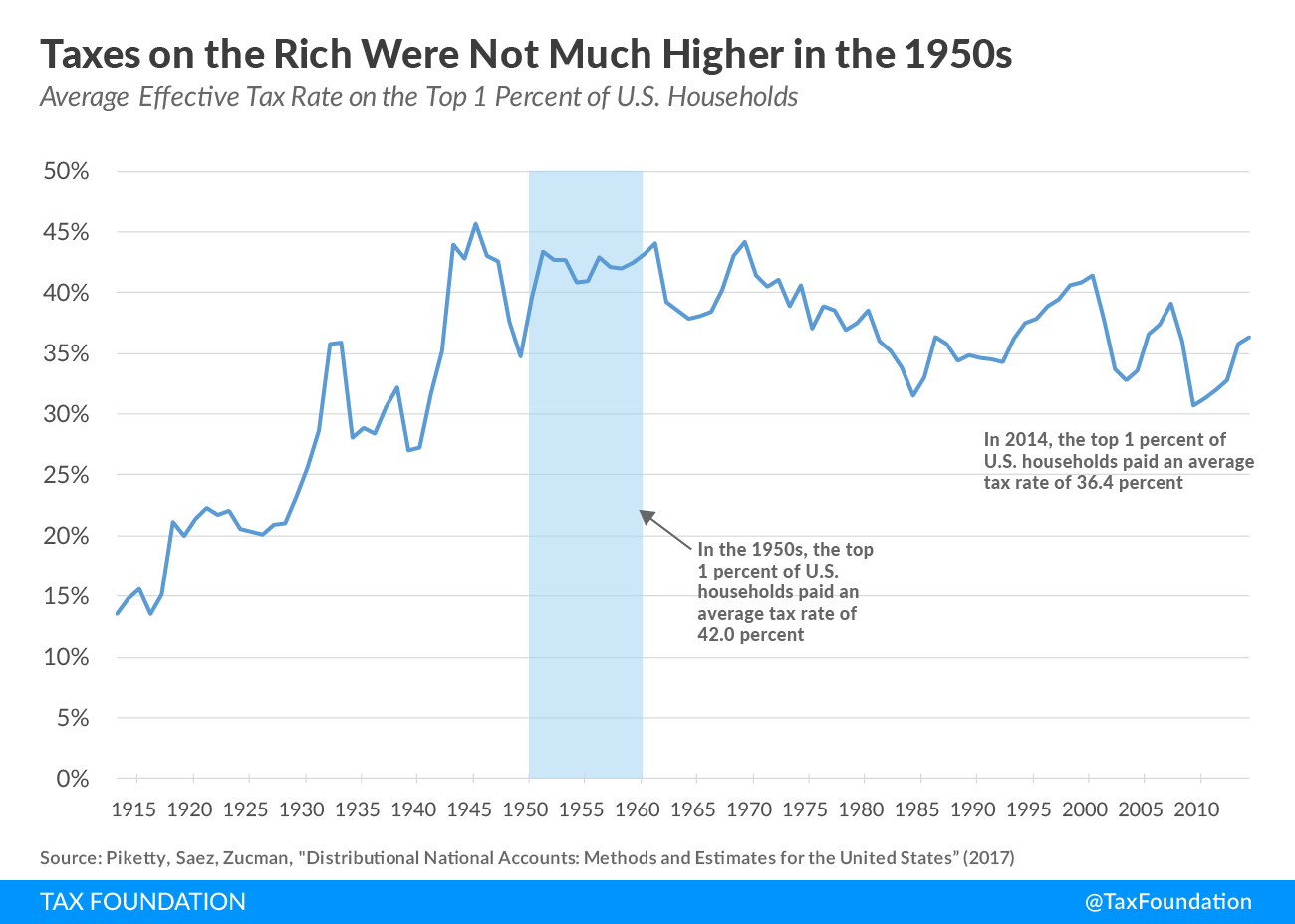

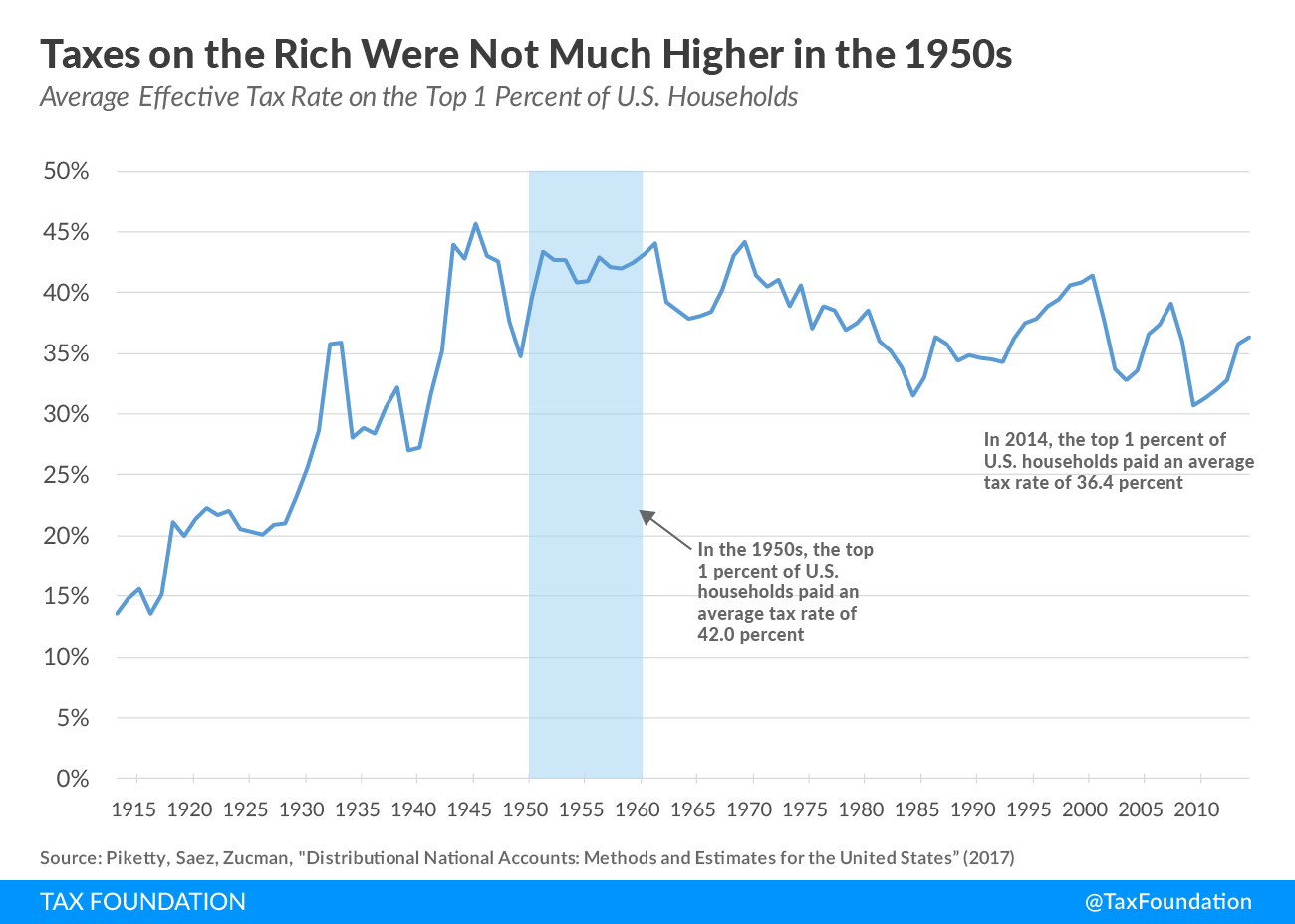

There is a common misconception that high-income Americans pay far less in taxes than they used to. Proponents of this view often point to the 1950s, when the top federal income tax rate was 91 percent for most of the decade.[1]

However, despite these high marginal rates, the top 1 percent of taxpayers in the 1950s paid only about 42 percent of their income in taxes. This means that the tax burden on high-income households today is only slightly lower than the one these households faced in the 1950s.

The graph below shows the average tax rate that the top 1 percent of Americans have faced over the last century. The data comes from a recent paper by Thomas Piketty, Emmanuel Saez, and Gabriel Zucman that attempts to account for all federal, state, and local taxes paid by different groups of Americans over the last 100 years.[2]

The data shows that, between 1950 and 1959, the top 1 percent of taxpayers paid an average of 42.0 percent of their income in federal, state, and local taxes. Since then, the average effective tax rate of the top 1 percent has declined slightly overall. In 2014, the top 1 percent of taxpayers paid an average tax rate of 36.4 percent.

All things considered, this is not a very large change. In other words, the average effective tax rate on the highest-income 1 percent of households is about 5.6 percentage points lower today than it was in the 1950s (36.4 percent versus 42.0 percent). That’s a noticeable change, but not a radical shift.[3]

How could the tax code of the 1950s have a top marginal tax rate of 91 percent, yet produce an effective tax rate of only 42 percent on the wealthiest taxpayers? In fact, the situation is even stranger. The 42.0 percent rate on the top 1 percent accounts for all taxes levied by federal, state, and local governments, including income, payroll, corporate, excise, property, and estate taxes. Looking at income taxes alone, the top 1 percent of taxpayers paid an average effective income tax rate of only 16.9 percent during the 1950s.[4]

There are a few reasons for the discrepancy between the 91 percent top marginal income tax rate and the 16.9 percent effective income tax rate of the 1950s.

- The 91 percent bracket of 1950 applied only to households with income over $200,000 (about $2 million in today’s dollars). Only a small number of taxpayers had enough income to fall into the top bracket: fewer than 10,000 households, according to an article in The Wall Street Journal. Many households in the top 1 percent in the 1950s probably never reached the 91 percent bracket at all.

- Even for households that did fall into the 91 percent bracket, most of their income was not necessarily taxed at that rate. The 91 percent rate applied only to income above $200,000, not to every dollar a household earned.

- Finally, the 91 percent bracket very likely led to significant tax avoidance and lower reported income. Many studies show that as marginal tax rates rise, the income taxpayers report goes down. As a result, the 91 percent bracket did not necessarily produce significantly higher revenue from the top 1 percent.
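The first two points come down to how progressive brackets work: each rate applies only to the slice of income inside its bracket, so a household's average (effective) rate sits below its top marginal rate. The sketch below illustrates this with a hypothetical schedule. Only the 91 percent rate above $200,000 comes from the text; the lower brackets are made-up placeholders, not the actual 1950 schedule, and the function names are illustrative.

```python
# Hypothetical bracket schedule: only the 91 percent rate above $200,000 is
# taken from the text; the lower brackets are illustrative placeholders.
HYPOTHETICAL_BRACKETS = [
    # (income threshold in dollars, marginal rate on income above it)
    (0, 0.20),
    (50_000, 0.40),
    (100_000, 0.60),
    (200_000, 0.91),
]


def income_tax(income, brackets=HYPOTHETICAL_BRACKETS):
    """Return tax owed when each rate applies only to income within its bracket."""
    tax = 0.0
    for i, (lower, rate) in enumerate(brackets):
        if income <= lower:
            break
        # A bracket ends where the next one begins; the top bracket is open-ended.
        upper = brackets[i + 1][0] if i + 1 < len(brackets) else float("inf")
        tax += (min(income, upper) - lower) * rate
    return tax


def effective_rate(income):
    """Average tax rate: total tax divided by total income."""
    return income_tax(income) / income


def share_in_top_bracket(income, top_threshold=200_000):
    """Fraction of income that is actually taxed at the top marginal rate."""
    return max(income - top_threshold, 0) / income


if __name__ == "__main__":
    for income in (150_000, 250_000, 1_000_000):
        print(
            f"${income:>9,}: {share_in_top_bracket(income):.0%} of income in the "
            f"91% bracket, effective rate {effective_rate(income):.1%}"
        )
```

Under these made-up brackets, a $150,000 household pays nothing at 91 percent, and a $250,000 household has only a fifth of its income in the top bracket, for an effective rate of about 54 percent. The sketch captures bracket arithmetic only; it ignores the tax avoidance and lower reported income described in the third point, which pushed actual effective rates lower still.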

All in all, the idea that high-income Americans in the 1950s paid a much larger share of their income in taxes should be abandoned. By historical standards, the top 1 percent of Americans today do not face an unusually low tax burden.