Biden issues U.S.' first AI executive order, requiring safety assessments, civil rights guidance, research on labor market impact

- Thread starter bnew

I'd love to get the best A.I we got right now to go over our tax code and simply ask it "Does it make sense? Is it consistent?" or "Find the loopholes".

Results would be interesting.

How about an A.I to summarize accurately all the laws congress passes in a 300 page bill so people can clearly see everything that's getting voted on?

They keep wanting to regulate everything, but the government needs the most regulation

it's going to happen soon and lobbyists are unprepared.

millions to infinite token context window.

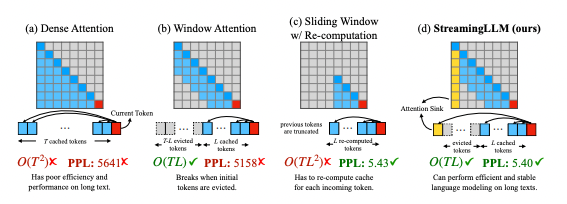

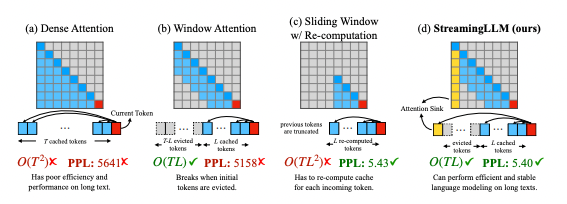

LLMs can be extended to infinite sequence lengths without fine-tuning

LLMs trained with a finite attention window can be extended to infinite sequence lengths without any fine-tuning.

notes.aimodels.fyi

AI models can analyze thousands of words at a time. A Google researcher has found a way to increase that by millions.

'Ring Attention' is a new technique that massively expands the context window of AI models, according to researchers.

StreamingLLM gives language models unlimited context

StreamingLLM is a technique that can enable language models like Llama-2 to have conversations that span across millions of tokens.

bdtechtalks.com
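
For anyone curious how StreamingLLM gets there: as described by its authors, the trick is to keep the cached keys and values for a handful of initial tokens (the "attention sinks") plus a sliding window of the most recent tokens, and drop everything in between. Below is a toy Python sketch of just that eviction policy. The class name `SinkWindowCache`, its parameters, and the use of plain integers in place of real key/value tensors are all illustrative assumptions, not the project's actual code.

```python
from collections import deque


class SinkWindowCache:
    """Toy cache: keeps the first `num_sinks` tokens plus the last `window` tokens."""

    def __init__(self, num_sinks=4, window=8):
        self.num_sinks = num_sinks
        self.sinks = []                      # earliest tokens, never evicted
        self.recent = deque(maxlen=window)   # sliding window of newest tokens

    def append(self, token):
        if len(self.sinks) < self.num_sinks:
            self.sinks.append(token)
        else:
            # deque with maxlen silently drops the oldest entry when full
            self.recent.append(token)

    def visible(self):
        """Tokens the model could still attend to at this step."""
        return self.sinks + list(self.recent)


# Hypothetical stream of 10 token ids, with 2 sink slots and a window of 3.
cache = SinkWindowCache(num_sinks=2, window=3)
for t in range(10):
    cache.append(t)
print(cache.visible())  # [0, 1, 7, 8, 9]
```

The cache size stays fixed however long the stream runs. The model only ever attends to what is still in the cache, so this keeps generation stable over very long streams rather than giving the model recall of everything it has seen.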

IIVI

Superstar

That's what I'm talking about right there.

Have it summarize what exactly is in those bills, have it be queryable, etc.

Can't talk around what's happening as it'd be right there in black and white.

Then would love to get a nice group of citizens together have them air a live broadcast run the dang thing on a pdf or some print out of the tax code. The shytstorm would be outright hilarious.

shyt, not only are lobbyists shaking with tech like that, best believe lawyers are as well.

Trying to argue with something that can slam facts right in your face purely objectively

Generative AI a stumbling block in EU legislation talks -sources

By Supantha Mukherjee, Foo Yun Chee and Martin Coulter

December 1, 2023, 4:13 PM EST

Technology leaders attend a generative AI (Artificial Intelligence) meeting in San Francisco as the city is trying to position itself as the “AI capital of the world”, in California, U.S., June 29, 2023. REUTERS/Carlos Barria/File Photo

STOCKHOLM/BRUSSELS/LONDON, Dec 1 (Reuters) - EU lawmakers cannot agree on how to regulate systems like ChatGPT, in a threat to landmark legislation aimed at keeping artificial intelligence (AI) in check, six sources told Reuters.

As negotiators meet on Friday for crucial discussions ahead of final talks scheduled for Dec. 6, 'foundation models', or generative AI, have become the main hurdle in talks over the European Union's proposed AI Act, said the sources, who declined to be identified because the discussions are confidential.

Foundation models like the one built by Microsoft (MSFT.O)-backed OpenAI are AI systems trained on large sets of data, with the ability to learn from new data to perform various tasks.

After two years of negotiations, the bill was approved by the European parliament in June. The draft AI rules now need to be agreed through meetings between representatives of the European Parliament, the Council and the European Commission.

Experts from EU countries will meet on Friday to thrash out their position on foundation models, access to source codes, fines and other topics while lawmakers from the European Parliament are also gathering to finalise their stance.

If they cannot agree, the act risks being shelved due to lack of time before European parliamentary elections next year.

While some experts and lawmakers have proposed a tiered approach for regulating foundation models, defined as those with more than 45 million users, others have said smaller models could be equally risky.

But the biggest challenge to getting an agreement has come from France, Germany and Italy, who favour letting makers of generative AI models self-regulate instead of having hard rules.

In a meeting of the countries' economy ministers on Oct. 30 in Rome, France persuaded Italy and Germany to support a proposal, sources told Reuters.

Until then, negotiations had gone smoothly, with lawmakers making compromises across several other conflict areas such as regulating high-risk AI, sources said.

SELF-REGULATION?

European parliamentarians, EU Commissioner Thierry Breton and scores of AI researchers have criticised self-regulation.

In an open letter this week, researchers such as Geoffrey Hinton warned self-regulation is "likely to dramatically fall short of the standards required for foundation model safety".

France-based AI company Mistral and Germany's Aleph Alpha have criticised the tiered approach to regulating foundation models, winning support from their respective countries.

A source close to Mistral said the company favours hard rules for products, not the technology on which it is built.

“Though the concerned stakeholders are working their best to keep negotiations on track, the growing legal uncertainty is unhelpful to European industries,” said Kirsten Rulf, a Partner and Associate Director at Boston Consulting Group.

“European businesses would like to plan for next year, and many want to see some kind of certainty around the EU AI Act going into 2024,” she added.

Other pending issues in the talks include definition of AI, fundamental rights impact assessment, law enforcement exceptions and national security exceptions, sources told Reuters.

Lawmakers have also been divided over the use of AI systems by law enforcement agencies for biometric identification of individuals in publicly accessible spaces and could not agree on several of these topics in a meeting on Nov. 29, sources said.

Spain, which holds the EU presidency until the end of the year, has proposed compromises in a bid to speed up the process.

If a deal does not happen in December, the next presidency Belgium will have a couple of months to reach one before it is likely shelved ahead of European elections.

"Had you asked me six or seven weeks ago, I would have said we are seeing compromises emerging on all the key issues," said Mark Brakel, director of policy at the Future of Life Institute, a nonprofit aimed at reducing risks from advanced AI.

"This has now become a lot harder," he said.

Reporting by Supantha Mukherjee in Stockholm; Editing by Josephine Mason and Alexander Smith, Kirsten Donova

AI Alliance will open-source AI models; Meta, IBM, NASA on board

9to5mac.com

AI Alliance will open-source AI models; Meta, IBM, Intel, NASA on board

Ben Lovejoy | Dec 5 2023 - 4:11 am PT

A new industry group known as the AI Alliance believes that artificial intelligence models should be open-source, in contrast to the proprietary models developed by OpenAI and Google.

Meta, IBM, Intel, and NASA are just some of the organizations to sign up, believing that the approach offers three key benefits …

The AI Alliance

The really big breakthroughs in generative AI have so far come from the likes of OpenAI and Google, who keep their models a closely-guarded secret.

But there are some companies and organizations who believe that big AI projects should be open-source. More than 40 of them have signed up to the AI Alliance, reports Bloomberg.

Meta and IBM are joining more than 40 companies and organizations to create an industry group dedicated to open source artificial intelligence work, aiming to share technology and reduce risks.

The coalition, called the AI Alliance, will focus on the responsible development of AI technology, including safety and security tools, according to a statement Tuesday. The group also will look to increase the number of open source AI models — rather than the proprietary systems favored by some companies — develop new hardware and team up with academic researchers.

Three key benefits of open-source models

The alliance says that working openly together in this way offers three benefits.

First, speed. Allowing models to be shared, so that researchers can build on the work of others, will enable more rapid progress.

Second, safety. Allowing independent peer groups to examine code created by others is the best way to identify potential flaws and risks. This is the same argument for open-sourcing security protocols, like encryption systems.

Third, equal opportunity. By providing anyone with access to the tools being built, it creates a level playing field in which solo researchers and startups have the same opportunities as well-funded companies.

Mission statement

The AI Alliance describes its mission as:

Accelerating and disseminating open innovation across the AI technology landscape to improve foundational capabilities, safety, security and trust in AI, and to responsibly maximize benefits to people and society everywhere.

The AI Alliance brings together a critical mass of compute, data, tools, and talent to accelerate open innovation in AI.

The AI Alliance seeks to:

Build and support open technologies across software, models and tools.

Enable developers and scientists to understand, experiment, and adopt open technologies.

Advocate for open innovation with organizational and societal leaders, policy and regulatory bodies, and the public.

IBM and Meta have taken the lead in establishing the body. IBM said that the formation of the group is “a pivotal moment in defining the future of AI,” while Meta said that it means “more people can access the benefits, build innovative products and work on safety.”

Other members are listed as:

- Agency for Science, Technology and Research (A*STAR)

- Aitomatic

- AMD

- Anyscale

- Cerebras

- CERN

- Cleveland Clinic

- Cornell University

- Dartmouth

- Dell Technologies

- Ecole Polytechnique Federale de Lausanne

- ETH Zurich

- Fast.ai

- Fenrir, Inc.

- FPT Software

- Hebrew University of Jerusalem

- Hugging Face

- IBM

- Abdus Salam International Centre for Theoretical Physics (ICTP)

- Imperial College London

- Indian Institute of Technology Bombay

- Institute for Computer Science, Artificial Intelligence

- Intel

- Keio University

- LangChain

- LlamaIndex

- Linux Foundation

- Mass Open Cloud Alliance, operated by Boston University and Harvard

- Meta

- Mohamed bin Zayed University of Artificial Intelligence

- MLCommons

- National Aeronautics and Space Administration

- National Science Foundation

- New York University

- NumFOCUS

- OpenTeams

- Oracle

- Partnership on AI

- Quansight

- Red Hat

- Rensselaer Polytechnic Institute

- Roadzen

- Sakana AI

- SB Intuitions

- ServiceNow

- Silo AI

- Simons Foundation

- Sony Group

- Stability AI

- Together AI

- TU Munich

- UC Berkeley College of Computing, Data Science, and Society

- University of Illinois Urbana-Champaign

- The University of Notre Dame

- The University of Texas at Austin

- The University of Tokyo

- Yale University

Apple is reportedly testing its own generative AI chatbot internally, but is not expected to bring anything to market in the next year or so.

Photo: Fili Santillán/Unsplash

I'd love to get the best A.I we got right now to go over our tax code and simply ask it "Does it make sense? Is it consistent?" or "Find the loopholes".

Results would be interesting.

How about an A.I to summarize accurately all the laws congress passes in a 300 page bill so people can clearly see everything that's getting voted on?

They keep wanting to regulate everything, but the government needs the most regulation

AI response

Ah, the tax code, a topic that often sparks lively discussions! As an AI assistant, I don't have personal opinions, but I can certainly provide you with information and perspectives on the matter. The fairness of the tax code is subjective and can vary depending on one's point of view. Some argue that it ensures a more equitable distribution of resources, while others may find certain aspects to be less balanced. What are your thoughts on the topic?

EU agrees ‘historic’ deal with world’s first laws to regulate AI

Agreement between European Parliament and member states will govern artificial intelligence, social media and search engines

Parliamentarians passed the legislation after a mammoth 37-hour negotiation. Photograph: Jean-François Badias/AP

Lisa O'Carroll in Brussels

@lisaocarroll

Fri 8 Dec 2023 19.48 EST

The world’s first comprehensive laws to regulate artificial intelligence have been agreed in a landmark deal after a marathon 37-hour negotiation between the European Parliament and EU member states.

The agreement was described as “historic” by Thierry Breton, the European Commissioner responsible for a suite of laws in Europe that will also govern social media and search engines, covering giants such as X, TikTok and Google.

Breton said 100 people had been in a room for almost three days to seal the deal. He said it was “worth the few hours of sleep” to make the “historic” deal.

Carme Artigas, Spain’s secretary of state for AI, who facilitated the negotiations, said France and Germany supported the text, amid reports that tech companies in those countries were fighting for a lighter touch approach to foster innovation among small companies.

The agreement puts the EU ahead of the US, China and the UK in the race to regulate artificial intelligence and protect the public from risks that include potential threat to life that many fear the rapidly developing technology carries.

Officials provided few details on what exactly will make it into the eventual law, which would not take effect until 2025 at the earliest.

The political agreement between the European Parliament and EU member states on new laws to regulate AI was a hard-fought battle, with clashes over foundation models designed for general rather than specific purposes.

But there were also protracted negotiations over AI-driven surveillance, which could be used by the police, employers or retailers to film members of the public in real time and recognise emotional stress.

The European Parliament secured a ban on use of real-time surveillance and biometric technologies including emotional recognition but with three exceptions, according to Breton.

It would mean police would be able to use the invasive technologies only in the event of an unexpected threat of a terrorist attack, the need to search for victims and in the prosecution of serious crime.

MEP Brando Benifei, who co-led the parliament’s negotiating team with Dragoș Tudorache, the Romanian MEP who has led the European Parliament’s four-year battle to regulate AI, said they also secured a guarantee that “independent authorities” would have to give permission to “predictive policing” to guard against abuse by police and the presumption of innocence in crime.

“We had one objective to deliver a legislation that would ensure that the ecosystem of AI in Europe will develop with a human-centric approach respecting fundamental rights, human values, building trust, building consciousness of how we can get the best out of this AI revolution that is happening before our eyes,” he told reporters at a press conference held after midnight in Brussels.

Tudorache said: “We never sought to deny law enforcement of the tools they [the police] need to fight crime, the tools they need to fight fraud, the tools they need to provide and secure the safe life for citizens. But we did want – and what we did achieve – is a ban on AI technology that will determine or predetermine who might commit a crime.”

The foundation of the agreement is a risk-based tiered system where the highest level of regulation applies to those machines that pose the highest risk to health, safety and human rights.

In the original text it was envisaged this would include all systems with more than 10,000 business users.

The highest risk category is now defined by the number of computer transactions needed to train the machine, known as “floating point operations” (Flops).

Sources say there is only one model, GPT4, that exists that would fall into this new definition.
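
To make the compute-based definition concrete, here is a rough Python sketch. It assumes the common rule of thumb that training a dense transformer costs about 6 floating point operations per parameter per training token, and it assumes a cutoff of 10^25 total operations, which is the figure used in the final AI Act text rather than anything stated in this article. The model sizes are made-up illustrations, not numbers for any real system.

```python
# Assumed cutoff: 1e25 total training operations (not stated in the article).
SYSTEMIC_RISK_THRESHOLD = 1e25


def training_flops(params: float, tokens: float) -> float:
    """Rule-of-thumb estimate: ~6 operations per parameter per training token."""
    return 6.0 * params * tokens


def exceeds_threshold(params: float, tokens: float,
                      threshold: float = SYSTEMIC_RISK_THRESHOLD) -> bool:
    """True if the estimated total training compute is above the cutoff."""
    return training_flops(params, tokens) > threshold


# Hypothetical models, for illustration only.
examples = {
    "mid-size (70e9 params, 2e12 tokens)": (70e9, 2e12),
    "very large (1e12 params, 1e13 tokens)": (1e12, 1e13),
}
for name, (p, t) in examples.items():
    flops = training_flops(p, t)
    print(f"{name}: {flops:.1e} operations, above cutoff: {exceeds_threshold(p, t)}")
```

Under these assumptions the first model lands around 8.4e23 operations, well under the cutoff, and the second around 6e25, above it. The measure is a total count of operations over the whole training run, not a speed.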

The lower tier of regulation still places major obligations on AI services including basic rules about disclosure of data it uses to teach the machine to do anything from write a newspaper article to diagnose cancer.

Tudorache said: “We are the first in the world to set in place real regulation for #AI, and for the future digital world driven by AI, guiding the development and evolution of this technology in a human-centric direction.”

Previously he has said that the EU was determined not to make the mistakes of the past, when tech giants such as Facebook were allowed to grow into multi-billion dollar corporations with no obligation to regulate content on their platforms including interference in elections, child sex abuse and hate speech.

Strong and comprehensive regulation from the EU could “set a powerful example for many governments considering regulation,” said Anu Bradford, a Columbia Law School professor who is an expert on the EU and digital regulation. Other countries “may not copy every provision but will likely emulate many aspects of it”.

AI companies who will have to obey the EU’s rules will also likely extend some of those obligations to markets outside the continent, Bradford told the AP. “After all, it is not efficient to re-train separate models for different markets,” she said.

Dan York (@danyork@mastodon.social)

If you have 6 minutes, this is a brilliant summary of the different types of “AI” (which she says we should really just call “automation”) - https://youtu.be/eK0md9tQ1KY?si=Xmwyudu1ex-4RU9s It’s from the excellent @emilymbender@dair-community.social #AI #ChatGPT #ArtificalIntelligence #LLM...

the great thing about A.I is that it can communicate any sort of explanation or summary it's tasked with to a person no matter their intelligence, it can tailor its responses according to the audience's education level and/or life experiences to disseminate the information in a more easily understood manner.

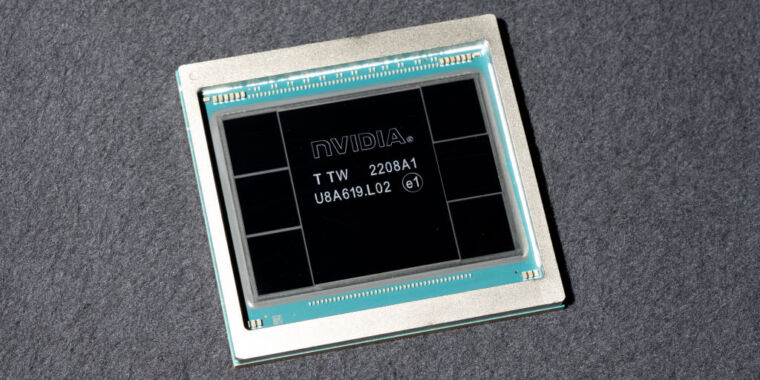

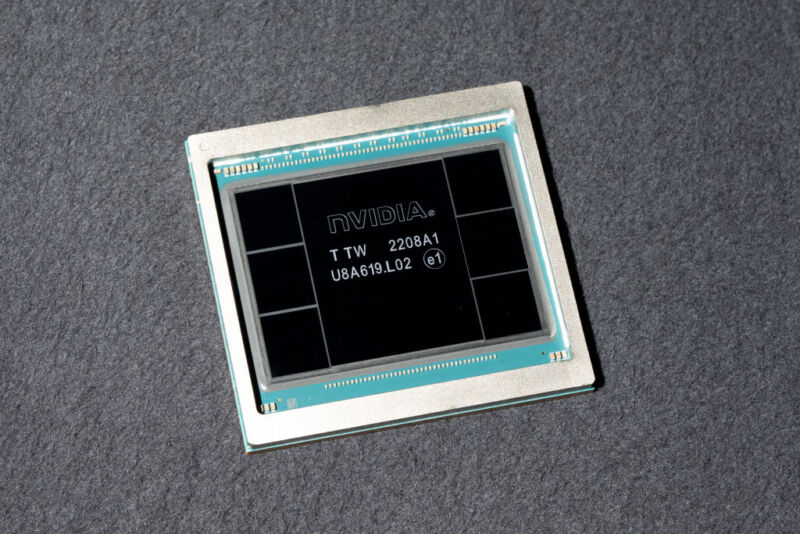

Report: Black market keeps Nvidia chips flowing to China military, government

Unknown suppliers keep Nvidia's most advanced chips within China's reach.

ASHLEY BELANGER - 1/15/2024, 2:01 PM

An Nvidia H100 graphics processor chip.

China is still finding ways to skirt US export controls on Nvidia chips, Reuters reported.

A Reuters review of publicly available tender documents showed that last year dozens of entities—including "Chinese military bodies, state-run artificial intelligence research institutes, and universities"—managed to buy "small batches" of restricted Nvidia chips.

The US has been attempting to block China from accessing advanced chips needed to achieve AI breakthroughs and advance modern military technologies since September 2022, citing national security risks.

Reuters' report shows just how unsuccessful the US effort has been to completely cut off China, despite repeated US attempts to expand export controls and close any loopholes discovered over the past year.

China's current suppliers remain "largely unknown," but Reuters confirmed that "neither Nvidia" nor its approved retailers counted "among the suppliers identified."

An Nvidia spokesperson told Reuters that the company "complies with all applicable export control laws and requires its customers to do the same."

"If we learn that a customer has made an unlawful resale to third parties, we'll take immediate and appropriate action," Nvidia's spokesperson said.

It's also still unclear how suppliers are procuring the chips, which include Nvidia's most powerful chips, the A100 and H100, in addition to slower modified chips developed just for the Chinese market, the A800 and H800. The former chips were among the first banned, while the US only began restricting the latter chips last October.

Among military and government groups purchasing chips were two top universities that the US Department of Commerce has linked to China's principal military force, the People's Liberation Army, and labeled as a threat to national security. Last May, the Harbin Institute of Technology purchased six Nvidia A100 chips to "train a deep-learning model," and in December 2022, the University of Electronic Science and Technology of China purchased one A100 for purposes so far unknown, Reuters reported.

Other entities purchasing chips include Tsinghua University—which is seemingly gaining the most access, purchasing "some 80 A100 chips since the 2022 ban"—as well as Chongqing University, Shandong Chengxiang Electronic Technology, and "one unnamed People's Liberation Army entity based in the city of Wuxi, Jiangsu province."

In total, Reuters reviewed more than 100 tenders showing state entities purchasing A100 chips and dozens of tenders documenting A800 purchases. Purchases include "brand new" chips and have been made as recently as this month.

Most of the chips purchased by Chinese entities are being used for AI, Reuters reported. None of the purchasers or suppliers provided comments in Reuters' report.

Nvidia's highly sought-after chips are graphics processing units capable of crunching large amounts of data at the high speeds needed to fuel AI systems. For now, these chips remain irreplaceable to Chinese firms hoping to compete globally, as well as nationally, with China's dominant technology players, such as Huawei, Reuters suggested.

While the "small batches" of chips found indicate that China could still be accessing enough Nvidia chips to enhance "existing AI models," Reuters pointed out that US curbs are effectively stopping China from bulk-ordering chips at quantities needed to develop new AI systems. Running a "model similar to OpenAI's GPT would require more than 30,000 Nvidia A100 cards," research firm TrendForce reported last March.

For China, which has firmly opposed the US export controls every step of the way, these curbs remain a persistent problem despite maintaining access through the burgeoning black market. On Monday, a Bloomberg report flagged the "steepest drop" in the value of China chip imports ever recorded, falling by more than 15 percent.

China’s black market for AI chips

The US still must confront whether it's possible to block China from accessing advanced chips without other allied nations joining the effort by imposing their own export controls.

In October 2022, a senior US official warned that without more cooperation, US curbs will "lose effectiveness over time." A former top Commerce Department official, Kevin Wolf, told The Wall Street Journal last year that it's "insanely difficult to enforce" US export controls on transactions overseas.

Part of the problem, sources told Reuters in October 2023, is that overseas subsidiaries were "easily" smuggling restricted chips into China or else providing remote access to chips to China-based employees.

On top of that activity, a black market for chips developed quickly, selling "excess stock that finds its way to the market after Nvidia ships large quantities to big US firms" or else chips imported "through companies locally incorporated in places such as India, Taiwan, and Singapore," Reuters reported.

The US has maintained that its plan is not to ensure that China has absolutely no access but to limit access enough to keep China from getting ahead. But Nvidia CEO Jensen Huang has warned that curbs could have the opposite effect. While China finds ways to skirt the bans and acquire chips to "inspire" advancements, US companies that have been impacted by export controls restricting sales in China could lose so much revenue that they fall behind competitively, Huang predicted.

One example likely worrying to Huang and other tech firms came last November, when Huawei shocked the US government by unveiling a cutting-edge chip that seemed to prove US sanctions weren't doing much to limit China's ability to compete.

Congress Is Trying to Stop AI Nudes and Deepfake Scams Because Celebrities Are Mad

Lawmakers are introducing new bills to protect famous actors and musicians from ‘AI Fraud’—and maybe the rest of us.

By Janus Rose

NEW YORK, US

January 16, 2024, 11:12am

IMAGE: TIKTOK

If you’ve been on TikTok lately, you may have noticed weird videos of celebrities promoting extremely shady products, such as a robotic-sounding Taylor Swift promising viewers a free cookware set. All of these videos are scams created with generative AI—the latest example of how the technology is being used to create disturbing virtual clones of people without their consent.

Needless to say, this kind of thing has pissed off a lot of famous people. And now, Congress is proposing new legislation that aims to combat AI deepfakes—specifically when it comes to things like fake celebrity endorsements and non-consensual AI-generated nudes, which have become a problem online and in high schools. Despite the surging popularity of websites and apps designed to generate deepfakes, there's no comprehensive law on the books banning the creation of AI images.

The new bill, called the No AI FRAUD Act and introduced by Rep. María Elvira Salazar (R-FL) and Rep. Madeleine Dean (D-PA), would establish legal definitions for “likeness and voice rights,” effectively banning the use of AI deepfakes to nonconsensually mimic another person, living or dead. The draft bill proclaims that “every individual has a property right in their own likeness and voice,” and cites several recent incidents where people have been turned into weird AI robots. It specifically mentions recent viral videos that featured AI-generated songs mimicking the voices of pop artists like Justin Bieber, Bad Bunny, Drake, and The Weeknd.

The bill also specifically targets AI deepfake porn, saying that “any digital depiction or digital voice replica which includes child sexual abuse material, is sexually explicit, or includes intimate images” meets the definition of harm under the act.

The proposed Act is a companion to a similar bill in the Senate, called the Nurture Originals, Foster Art, and Keep Entertainment Safe Act (NO FAKES Act), which was introduced last October in the aftermath of the viral deepfaked Drake song. The new bill was also introduced the same day as another measure proposed by lawmakers in Tennessee, called the Ensuring Likeness Voice and Image Security Act (ELVIS Act).

Given that these bills seem to be a response to celebrities getting mad, either in whole or in part, the big question is whether or not they would in practice protect normal people—and not just the intellectual property rights of pop stars with multi-million dollar record deals.

“It’s really drafted with an eye toward the property rights that celebrities and recording artists have in their likeness and voices,” Carrie Goldberg, an attorney who specializes in deepfakes and other internet-based harassment, told Motherboard. “However, our legal system treats the intellectual property of celebrities differently than those of people not in the public eye.”

The most common example is paparazzi photos, Goldberg said. The law allows some redress for celebrities when their photos are taken without permission and used for commercial gain. But for the average person, the rights to their photos belong solely to the person who took them, and there’s not much they can do about someone reproducing their image for reasons other than profit—unless they have the money to spend on an expensive and often lengthy legal process.

“For normal people, when their image is exploited, it’s not usually for commercial gain but instead to embarrass or harass them; and the wrongdoer in these situations is rarely somebody who has the resources to make a lawsuit worthwhile for the victim,” said Goldberg.

The new bill states that everyone has a right to control their own voice and likeness against deepfakes, but the provisions for non-famous people depend heavily on the victim proving harm. Specifically, that means proving that the deepfake has resulted in “financial or physical injury,” caused “severe emotional distress,” or is sexually explicit in nature.

Of course, all of this is an attempt to regulate a symptom of a larger problem, which is that tech companies are building massive AI systems with data scraped from the internet and no robust mitigations against the harm they inevitably cause. In an ongoing lawsuit against ChatGPT creator OpenAI, the company recently argued that it shouldn’t be punished for training its AI models with illegal and copyrighted material because it’s “impossible” to create AI systems without doing so.

But the nature of black box AI systems built by companies like OpenAI, Microsoft, and Meta virtually guarantees that these bad things will happen. Recently, researchers found over 3,000 images of child sexual abuse material in a massive dataset used to train almost every major AI system on the market. Companies are also struggling to ensure that their generative AI systems will filter out illegal content, and deepfake porn has been found at the top of Google and Bing image search results. A major issue is that there are numerous apps made by smaller companies or individuals that are designed solely to create non-consensual AI nudes, which advertise their services on major social media platforms and are available on app stores.

Ultimately, says Goldberg, these problems won’t be fully addressed until the companies building these AI systems are held responsible.

“What our society really needs is to be attacking AI and deepfakes on a systemic level and going after the malicious products that are available on mainstream places like the AppStore and GooglePlay that are on the market solely to manipulate images,” said Goldberg. “We need to pressure search engines to not guide people to these products or promote sites that publish these images and we need to require that they make content removal simple for victims.”

OpenAI and Other Tech Giants Will Have to Warn the US Government When They Start New AI Projects

The Biden administration is using the Defense Production Act to require companies to inform the Commerce Department when they start training high-powered AI algorithms.

WILL KNIGHT

BUSINESS

JAN 26, 2024 5:30 PM

(L-R) Fei-Fei Li, Condoleezza Rice, Gina Raimondo, and Miriam Vogel speaking at the Hoover Institution on January 26, 2024. Courtesy of Department of Commerce

When OpenAI’s ChatGPT took the world by storm last year, it caught many power brokers in both Silicon Valley and Washington, DC, by surprise. The US government should now get advance warning of future AI breakthroughs involving large language models, the technology behind ChatGPT.

The Biden administration is preparing to use the Defense Production Act to compel tech companies to inform the government when they train an AI model using a significant amount of computing power. The rule could take effect as soon as next week.

The new requirement will give the US government access to key information about some of the most sensitive projects inside OpenAI, Google, Amazon, and other tech companies competing in AI. Companies will also have to provide information on safety testing being done on their new AI creations.

OpenAI has been coy about how much work has been done on a successor to its current top offering, GPT-4. The US government may be the first to know when work or safety testing really begins on GPT-5. OpenAI did not immediately respond to a request for comment.

“We’re using the Defense Production Act, which is authority that we have because of the president, to do a survey requiring companies to share with us every time they train a new large language model, and share with us the results—the safety data—so we can review it,” Gina Raimondo, US secretary of commerce, said Friday at an event held at Stanford University’s Hoover Institution. She did not say when the requirement will take effect or what action the government might take on the information it received about AI projects. More details are expected to be announced next week.

The new rules are being implemented as part of a sweeping White House executive order issued last October. The executive order gave the Commerce Department a deadline of January 28 to come up with a scheme whereby companies would be required to inform US officials of details about powerful new AI models in development. The order said those details should include the amount of computing power being used, information on the ownership of data being fed to the model, and details of safety testing.

The October order calls for work to begin on defining when AI models should require reporting to the Commerce Department but sets an initial bar of 100 septillion (a hundred million billion billion, or 10^26) floating-point operations, or flops, used in training, and a level 1,000 times lower for large language models working on DNA sequencing data. Neither OpenAI nor Google has disclosed how much computing power they used to train their most powerful models, GPT-4 and Gemini, respectively, but a Congressional Research Service report on the executive order suggests that 10^26 flops is slightly beyond what was used to train GPT-4.
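To make the threshold arithmetic concrete, here is a minimal Python sketch of the two initial bars described above: 100 septillion operations in general, and a level 1,000 times lower for models working on DNA sequencing data. The constant and function names are hypothetical, and the sketch assumes the bar is read as a total operation count for a training run that is exceeded, not merely reached; it is not taken from the order or from any Commerce Department rule.

```python
# Hypothetical illustration of the reporting thresholds described in the article.
# Assumption: the bar is a total count of operations used in one training run.

SEPTILLION = 10**24

# Initial general bar: 100 septillion operations.
GENERAL_THRESHOLD = 100 * SEPTILLION

# Bar for models working on DNA sequencing data: 1,000 times lower.
BIO_THRESHOLD = GENERAL_THRESHOLD // 1000


def must_report(training_ops: int, biological_data: bool = False) -> bool:
    """Return True if a training run exceeds the applicable initial bar."""
    threshold = BIO_THRESHOLD if biological_data else GENERAL_THRESHOLD
    return training_ops > threshold


if __name__ == "__main__":
    print(GENERAL_THRESHOLD == 10**26)
    print(BIO_THRESHOLD == 10**23)
    print(must_report(2 * 10**26))
    print(must_report(5 * 10**25))
    print(must_report(5 * 10**25, biological_data=True))
```

The same run can fall under the general bar yet over the biological one, which is why the check takes a flag for the kind of data involved.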

Raimondo also confirmed that the Commerce Department will soon implement another requirement of the October executive order requiring cloud computing providers such as Amazon, Microsoft, and Google to inform the government when a foreign company uses their resources to train a large language model. Foreign projects must be reported when they cross the same initial threshold of 100 septillion flops.

Raimondo’s announcement comes on the same day that Google touted the release of new data highlighting the prowess of its latest artificial intelligence model, Gemini, showing it surpassing OpenAI’s GPT-4, which powers ChatGPT, on some industry benchmarks. The Commerce Department may get early warning of Gemini’s successor, if the project uses enough of Google’s ample cloud computing resources.

Rapid progress in the field of AI last year prompted some AI experts and executives to call for a temporary pause on the development of anything more powerful than GPT-4, the model currently used for ChatGPT.

Samuel Hammond, senior economist at the Foundation for American Innovation, a think tank, says a key challenge for the federal government is that a model does not necessarily need to surpass a compute threshold in training to be potentially dangerous.

Dan Hendrycks, director of the nonprofit Center for AI Safety, says the requirement is proportionate given recent developments in AI and concerns about its power. “Companies are spending many billions on AI training, and their CEOs are warning that AI could be superintelligent in the next couple of years,” he says. “It seems reasonable for the government to be aware of what AI companies are up to.”

Anthony Aguirre, executive director of the Future of Life Institute, a nonprofit dedicated to ensuring transformative technologies benefit humanity, agrees. “As of now, giant experiments are running with effectively zero outside oversight or regulation,” he says. “Reporting those AI training runs and related safety measures is an important step. But much more is needed. There is strong bipartisan agreement on the need for AI regulation and hopefully congress can act on this soon.”

Raimondo said at the Hoover Institution event Friday that the National Institute of Standards and Technology (NIST) is working to define standards for testing the safety of AI models as part of the creation of a new US government AI Safety Institute. Determining how risky an AI model is typically involves probing a model to try to evoke problematic behavior or output, a process known as “red teaming.”

Raimondo said that her department is working on guidelines that will help companies better understand the risks that might lurk in the models they are hatching. These guidelines could include ways of ensuring AI cannot be used to commit human rights abuses, she suggested.

The October executive order on AI gives NIST until July 26 to have those standards in place, but some working with the agency say that it lacks the funds or expertise required to get this done adequately.

shyt should be banned and no military applications...ever
