1/11

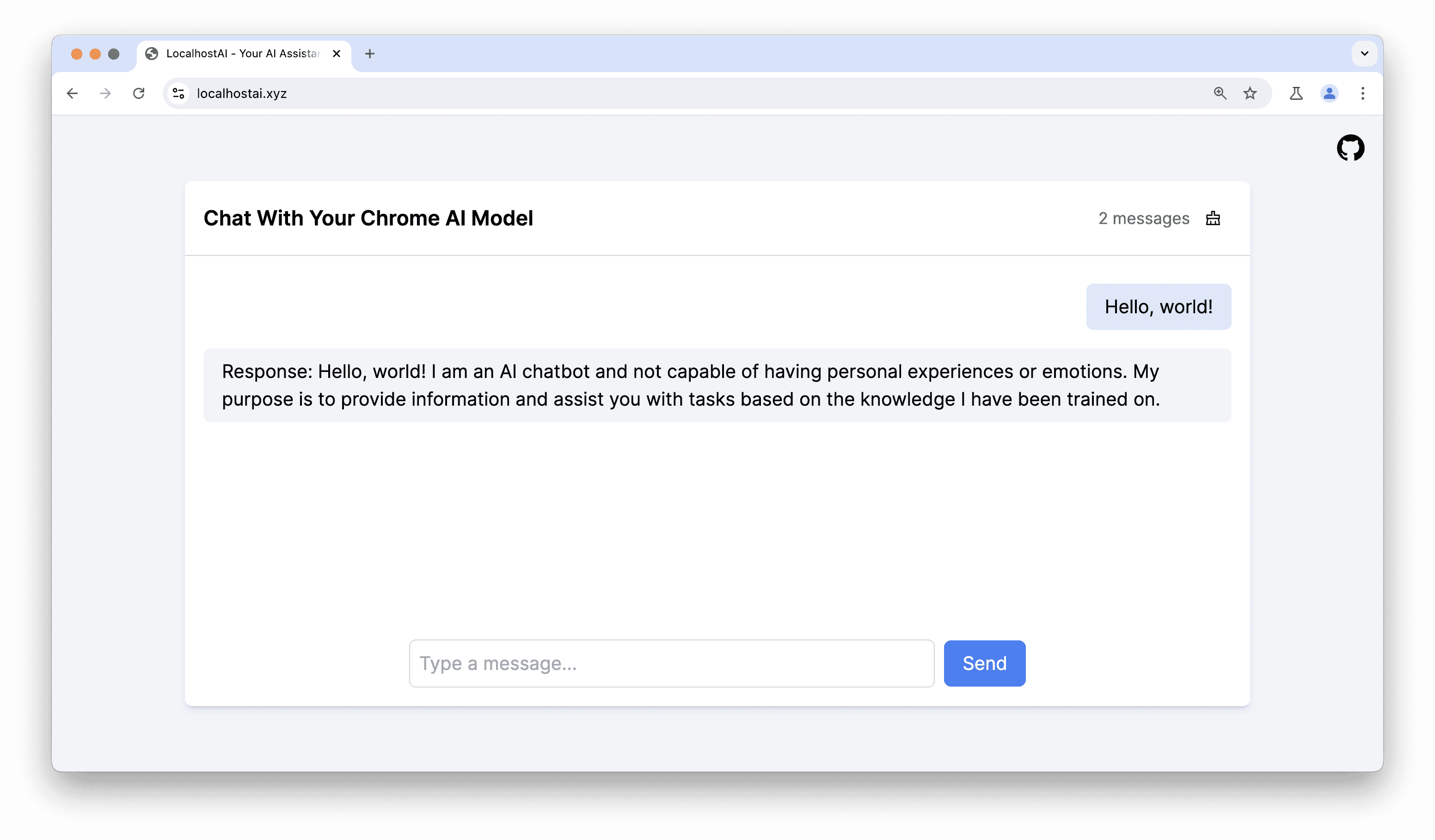

This is fast. Chrome running Gemini locally on my laptop. 2 lines of code.

2/11

No library or anything, it's a native part of some future version of Chrome

3/11

Does it work offline?

4/11

This is Chrome 128 Canary. You need to sign up for "Built-In AI proposal preview" to enable it

5/11

Seems very light on memory and CPU

6/11

wait why are they putting this into Chrome lol

are they trying to push this as a web standard of sorts or are they just going to keep this for themselves?

7/11

It's a proposal for all browsers

8/11

Query expansion like this could be promising

9/11

This is a great point!

10/11

No API key required? That would be great, I can run tons of instances of Chrome on the server as the back end of my wrapper apps.

11/11

Free, fast and private for everyone

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196


Get Access to Gemini Nano Locally Using Chrome Canary

Learn how to run Gemini Nano locally with Chrome Canary for enhanced privacy and speed.

Jun 25, 2024


You can access Gemini Nano locally using Chrome Canary. It lets you use cutting-edge AI in your browser.

Explore the power of Gemini Nano that is now available in Chrome Canary. While the official release is coming soon, you can already use Gemini Nano on your computer using Chrome Canary.

What is Gemini Nano?

Gemini Nano is a streamlined version of the larger Gemini model, designed to run locally. It uses the same datasets as its predecessors and keeps the original models' multimodal capabilities in a smaller form. Google had promised this for Chrome 126, and it has now appeared in Chrome Canary, which hints that an official release is near.

Benefits of Using Nano Locally

Running Gemini Nano locally offers several benefits:

- Privacy: Local processing means data doesn't have to leave your device. This provides an extra layer of security and privacy.

- Speed and Responsiveness: You don't need to send data to a server. So, interactions can be quicker, improving user experience.

- Accessibility: Developers can add large language model capabilities to applications. Users don't need constant internet access.

What is Chrome Canary?

It's the most experimental version of the Google Chrome web browser, designed primarily for developers and tech enthusiasts who want to test the latest features and APIs before they are widely available. While it offers cutting-edge functionality, it is also more prone to crashes and instability due to its experimental nature.

- Canary is updated daily with the latest changes, often with minimal or no testing from Google.

- It is always three versions ahead of the Stable channel.

- Canary includes all features of normal Chrome, plus experimental functionality.

- It can run alongside other Chrome versions and is available for Windows, macOS, and Android.

Launching Gemini Nano Locally with Chrome Canary

To get started with Gemini Nano locally using Chrome Canary, follow these steps:

- Download and set up Chrome Canary, ensuring the language is set to English (United States).

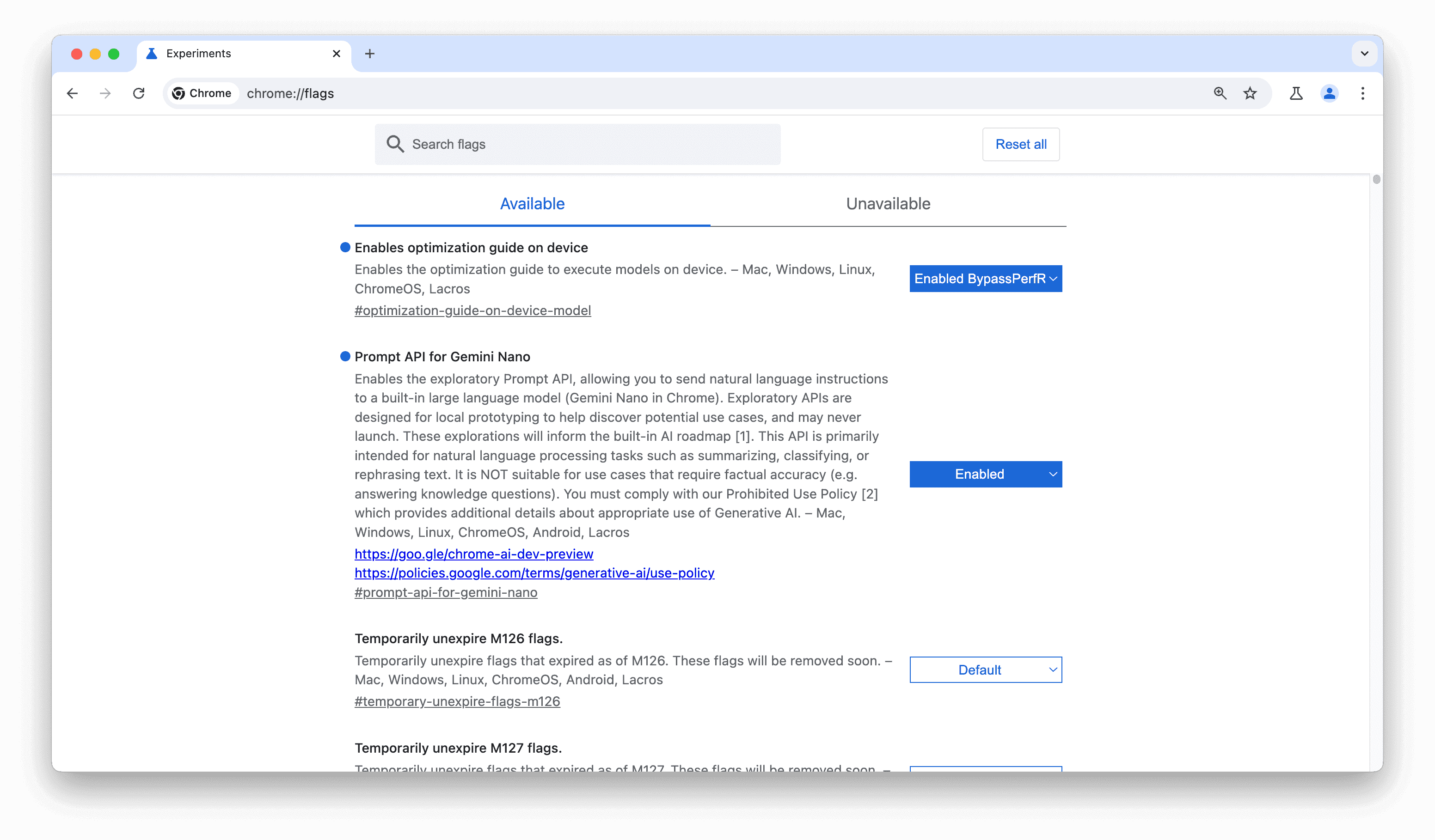

- In the address bar, enter chrome://flags

- Set the following flags:

  - 'Enables optimization guide on device' to Enabled BypassPerfRequirement

  - 'Prompt API for Gemini Nano' to Enabled

- Restart Chrome.

- Wait for Gemini Nano to download. To check the status, navigate to chrome://components and ensure that the Optimization Guide On Device Model component shows version 2024.6.5.2205 or higher. If it does not, click 'Check for updates'.

- Congratulations! You're all set to explore Gemini Nano for chat applications. Although the model is significantly simpler than the larger Gemini models, it's a major stride for website developers, who now have access to a local LLM for inference.

- You can chat with the Chrome AI model here: https://www.localhostai.xyz
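Once the flags are on and the model has downloaded, a page can talk to it from script. Below is a minimal sketch of what that might look like, not an official snippet. It assumes the early-preview shape of the Prompt API from the Chrome 128 Canary era: an `ai` object on the global scope with `canCreateTextSession()` and `createTextSession()`, and a session with `prompt()` and `destroy()`. Those names are assumptions here and the experimental API has been renamed in later builds, so check what your Canary actually exposes. `findBuiltInAI` and `askLocalModel` are hypothetical helper names made up for this example.

```typescript
// ASSUMPTION: shape of the early-preview Prompt API (Chrome 128 Canary era).
// These names are not guaranteed and have changed in later builds.
interface TextSession {
  prompt(input: string): Promise<string>;
  destroy?(): void;
}

interface BuiltInAI {
  // Assumed to resolve to a status string such as "readily",
  // "after-download", or "no".
  canCreateTextSession?(): Promise<string>;
  createTextSession(): Promise<TextSession>;
}

// Hypothetical helper: look for the experimental `ai` object on a scope
// (the global scope by default) without assuming it exists.
function findBuiltInAI(scope: unknown = globalThis): BuiltInAI | undefined {
  if (typeof scope !== "object" || scope === null) return undefined;
  const candidate = (scope as { ai?: Partial<BuiltInAI> }).ai;
  return typeof candidate?.createTextSession === "function"
    ? (candidate as BuiltInAI)
    : undefined;
}

// Hypothetical helper: send one prompt to the on-device model.
// Resolves to null when the API is missing or reports the model as unavailable.
async function askLocalModel(
  question: string,
  ai?: BuiltInAI
): Promise<string | null> {
  const api = ai ?? findBuiltInAI();
  if (!api) return null;

  if (api.canCreateTextSession) {
    const status = await api.canCreateTextSession();
    if (status === "no") return null;
  }

  const session = await api.createTextSession();
  try {
    return await session.prompt(question);
  } finally {
    // Release the session if the build exposes a destroy() method.
    session.destroy?.();
  }
}
```

In a Canary tab with the flags enabled, something like `askLocalModel("Suggest three search terms for local LLMs").then(console.log)` would log the model's reply, or `null` if the API is not there. Passing the `ai` object in as a parameter keeps the feature detection in one place and lets the helper be exercised with a fake object outside the browser.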

Conclusion

Gemini Nano is now available on Chrome Canary, a big step forward for local AI. It processes data on your device, which increases privacy and speeds things up. This also makes advanced technology easier for more people to use. Gemini Nano gives developers and tech fans a new way to try out AI. This helps create a stronger and more efficient local tech community and shows what the future of independent digital projects might look like.
