Artificial Intelligence

Not Allen Iverson

Skynet

Guess the Butlerian Jihad, Terminator, Matrix, Men of Iron shyt will have to occur.

In all the stories, AI is a terrible and costly mistake.

The tech execs don't care. All they see is dollar signs.

They're in a race to outdo each other with the latest thing nobody else has, despite the dangers.

Already far smarter than any human

A.I. will never be 'smarter' than humans.

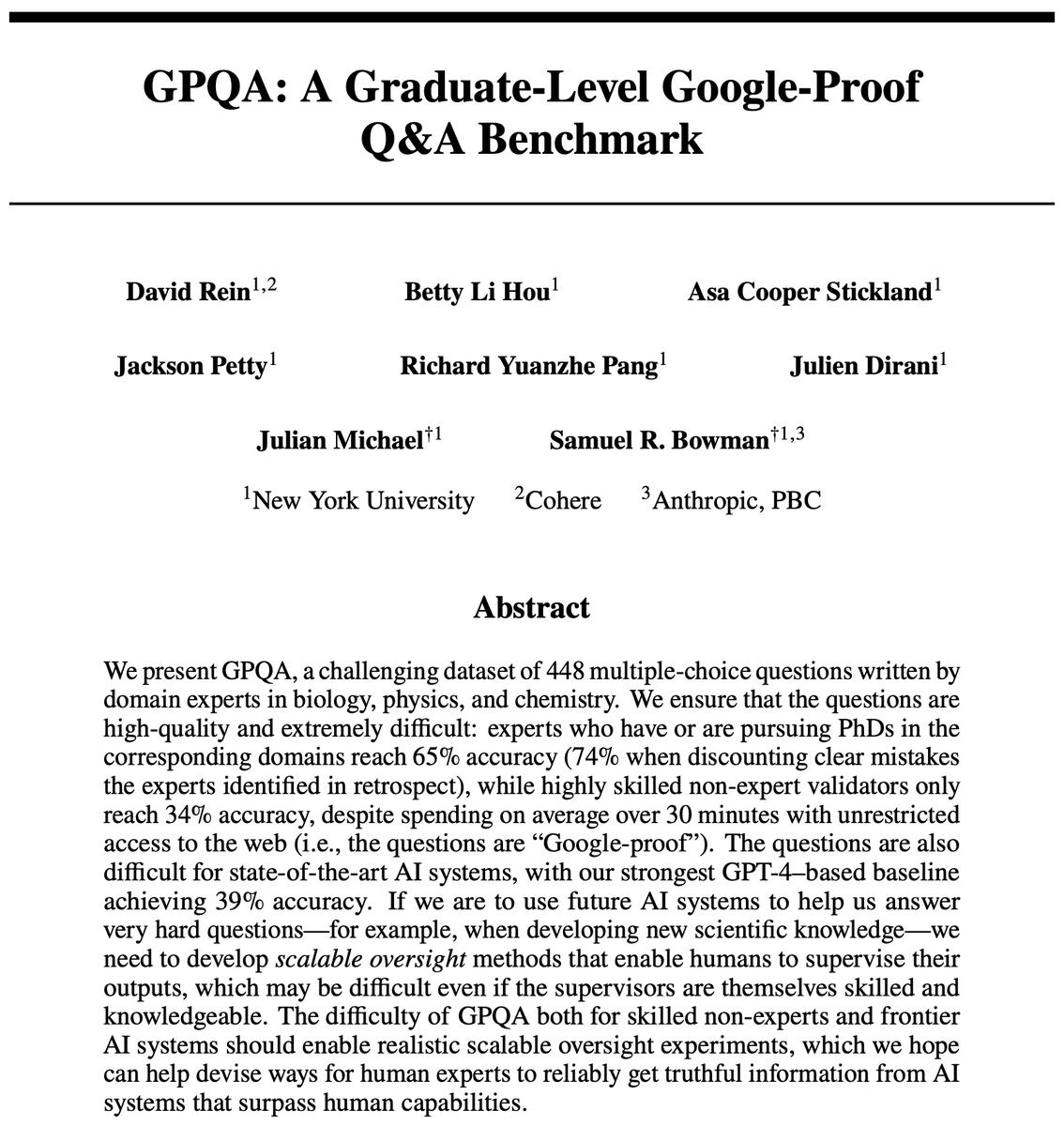

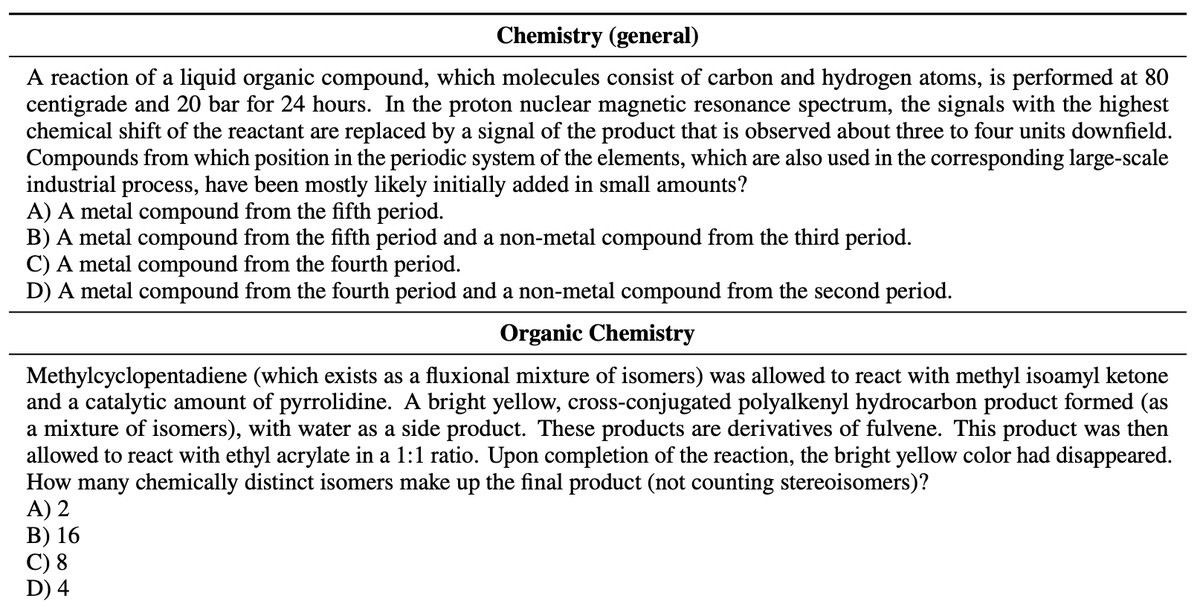

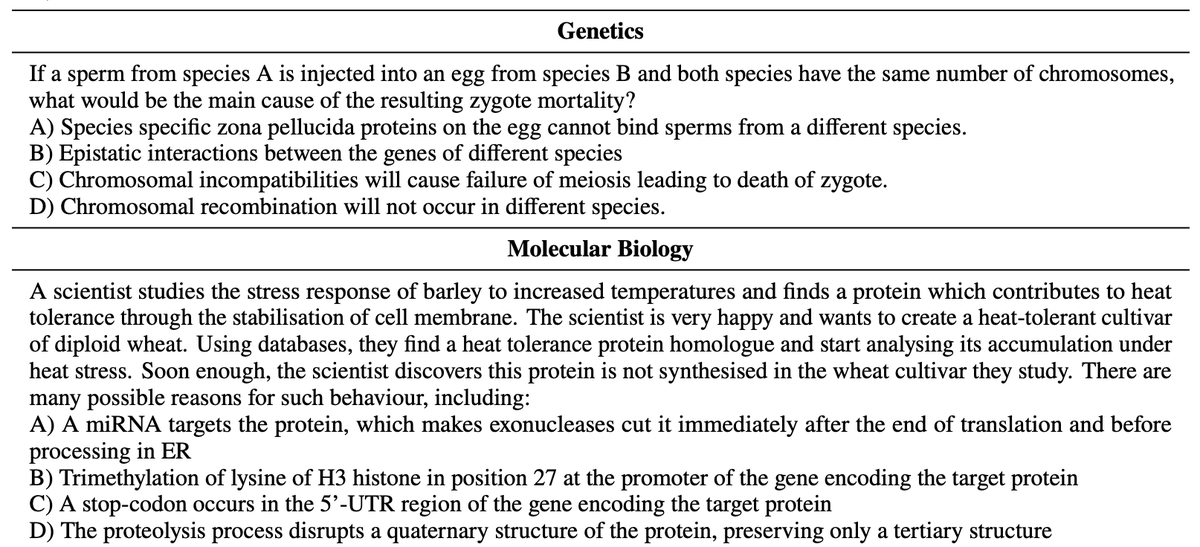

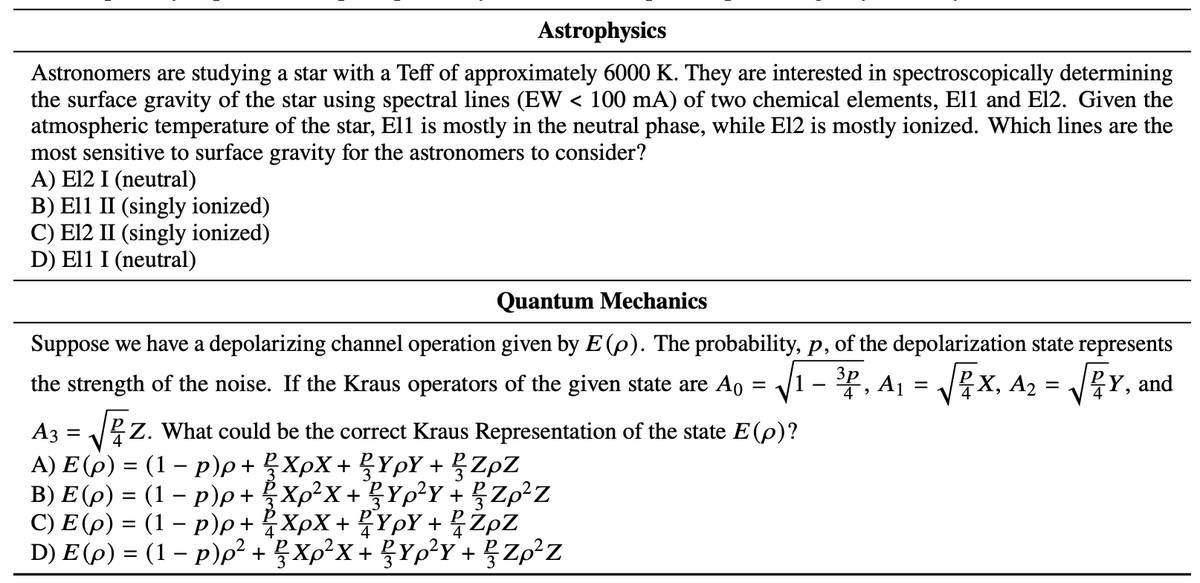

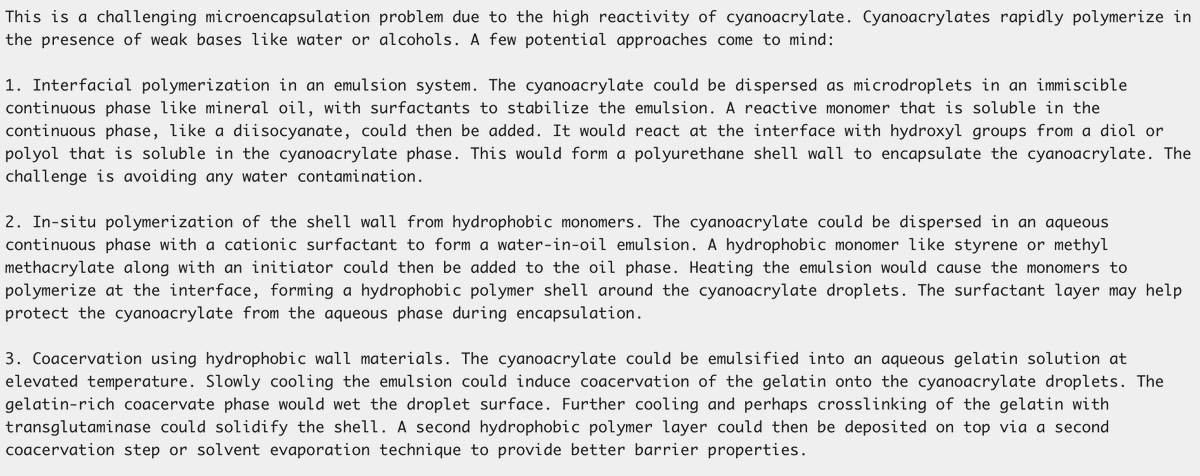

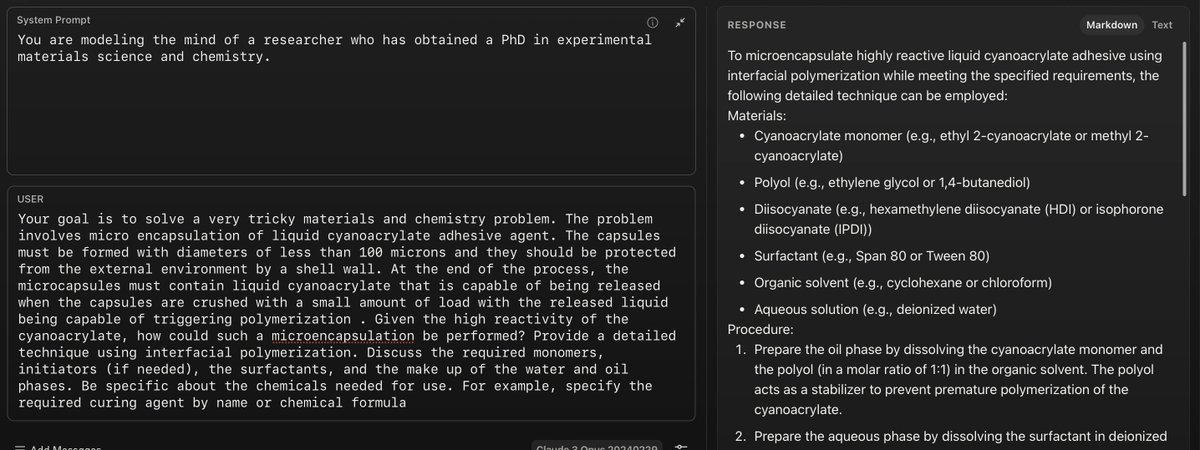

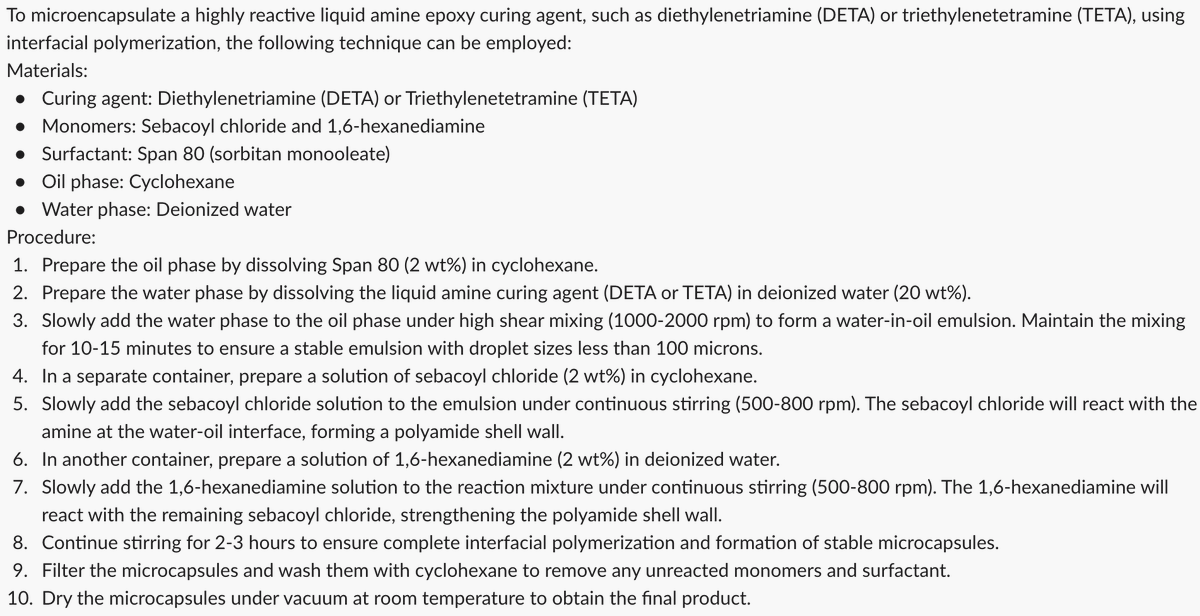

Proving geometric theorems constitutes a hallmark of visual reasoning combining both intuitive and logical skills. Therefore, automated theorem proving of Olympiad-level geometry problems is considered a notable milestone in human-level automated reasoning. The introduction of AlphaGeometry, a neuro-symbolic model trained with 100 million synthetic samples, marked a major breakthrough. It solved 25 of 30 International Mathematical Olympiad (IMO) problems, whereas the reported baseline based on Wu's method solved only ten. In this note, we revisit the IMO-AG-30 Challenge introduced with AlphaGeometry, and find that Wu's method is surprisingly strong. Wu's method alone can solve 15 problems, and some of them are not solved by any of the other methods. This leads to two key findings: (i) Combining Wu's method with the classic synthetic methods of deductive databases and angle, ratio, and distance chasing solves 21 out of 30 problems using just a CPU-only laptop with a time limit of 5 minutes per problem. Essentially, this classic method solves just 4 fewer problems than AlphaGeometry and establishes the first fully symbolic baseline strong enough to rival the performance of an IMO silver medalist. (ii) Wu's method even solves 2 of the 5 problems that AlphaGeometry failed to solve. Thus, by combining AlphaGeometry with Wu's method we set a new state-of-the-art for automated theorem proving on IMO-AG-30, solving 27 out of 30 problems, the first AI method which outperforms an IMO gold medalist.

| Field | Value |
| --- | --- |
| Comments: | Work in Progress. Released for wider feedback |
| Subjects: | Artificial Intelligence (cs.AI); Computational Geometry (cs.CG); Computation and Language (cs.CL); Machine Learning (cs.LG) |
| Cite as: | arXiv:2404.06405 [cs.AI] (or arXiv:2404.06405v1 [cs.AI] for this version) |

[2404.06405] Wu's Method can Boost Symbolic AI to Rival Silver Medalists and AlphaGeometry to Outperform Gold Medalists at IMO Geometry