The UK's competition watchdog, the CMA, has sounded a warning over Big Tech's grip on the advanced AI market, with CEO Sarah Cardell expressing "real concerns" over how the sector is developing.

techcrunch.com

UK’s antitrust enforcer sounds the alarm over Big Tech’s grip on GenAI

Natasha Lomas @riptari / 1:21 PM EDT•April 11, 2024

Image Credits: Justin Sullivan / Getty Images

The U.K.’s competition watchdog, the Competition and Markets Authority (CMA), has sounded a warning over Big Tech’s entrenching grip on the advanced AI market, with CEO Sarah Cardell expressing “real concerns” over how the sector is developing.

In an

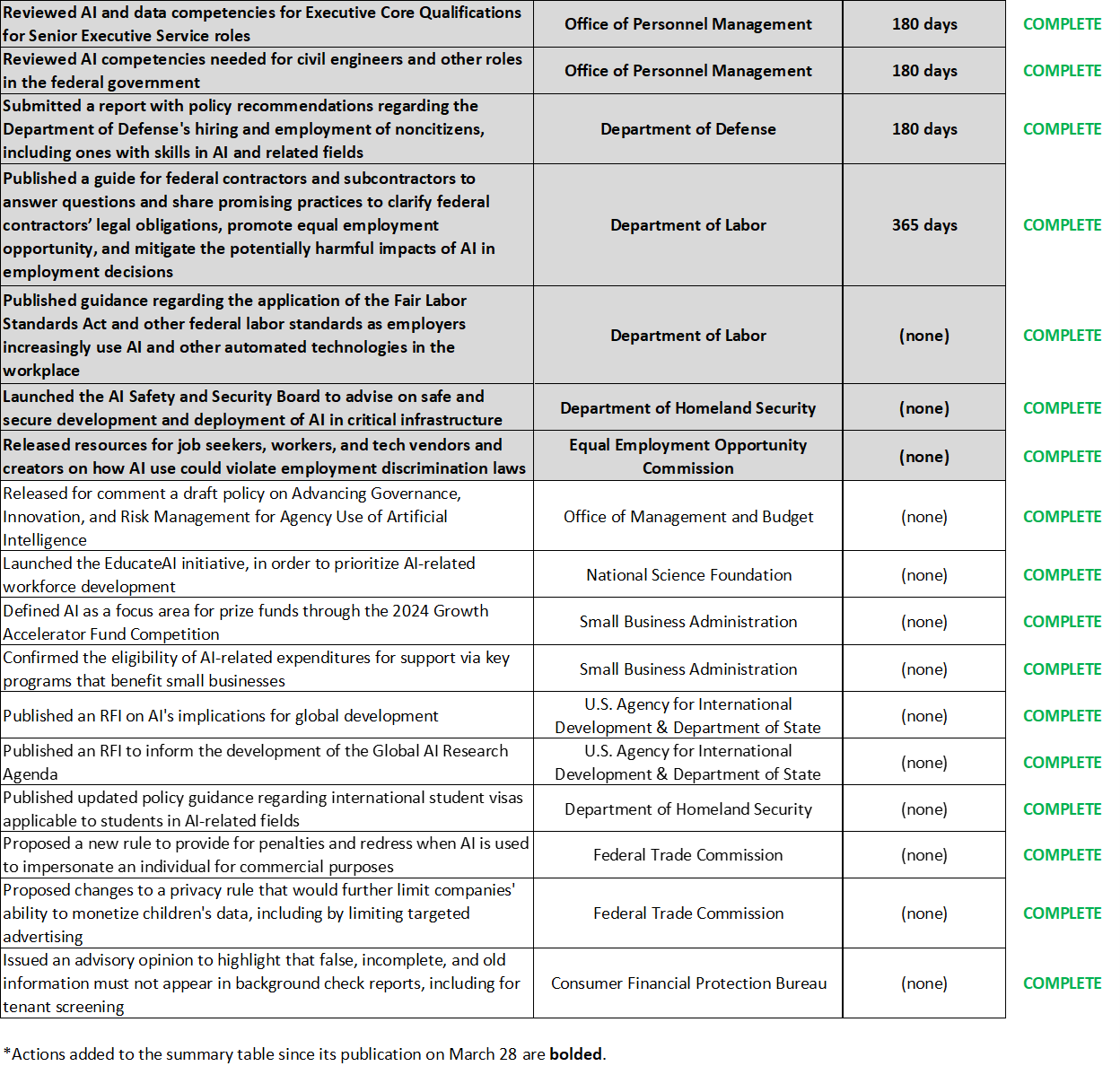

Update Paper on foundational AI models published Thursday, the CMA cautioned over increasing interconnection and concentration between developers in the cutting-edge tech sector responsible for the boom in generative AI tools.

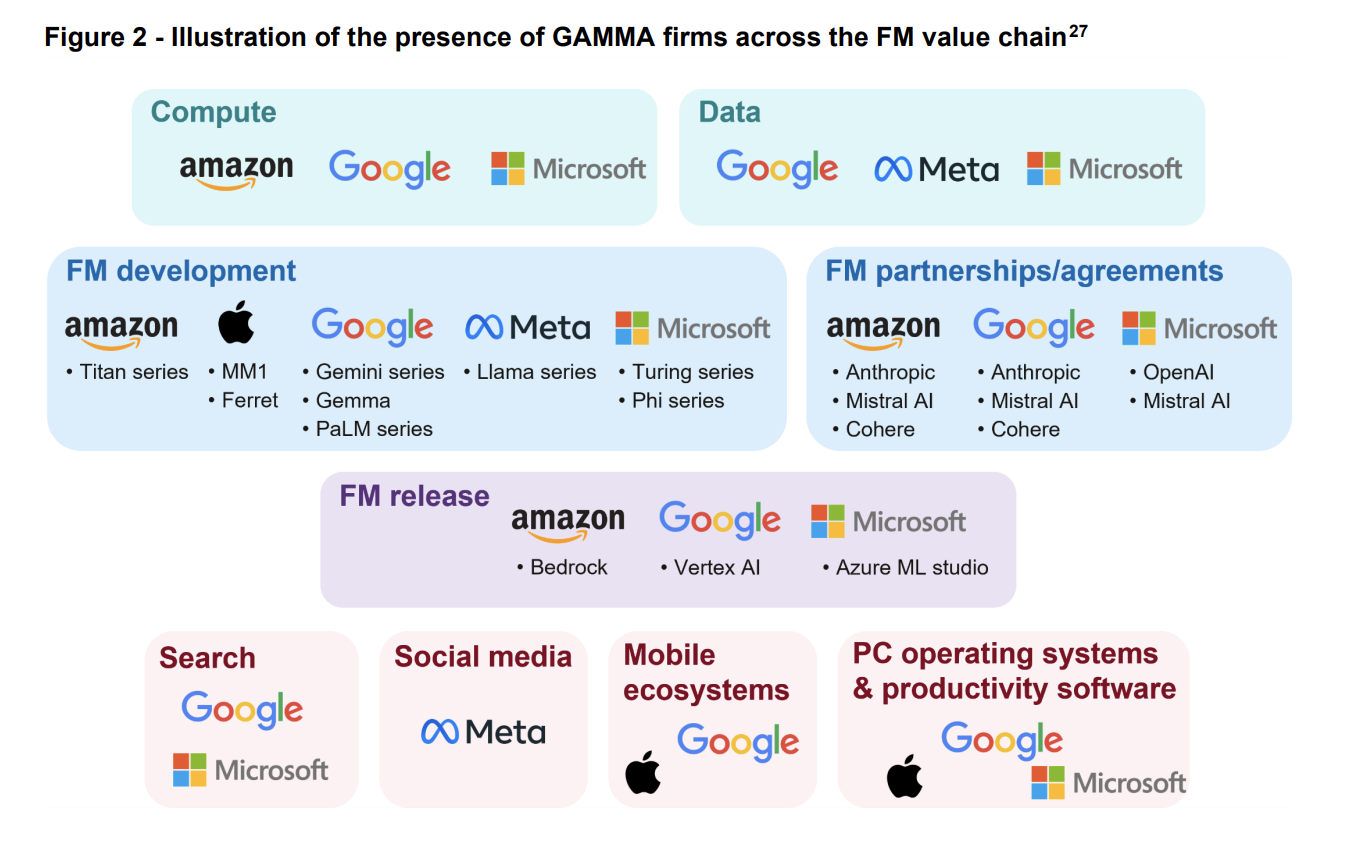

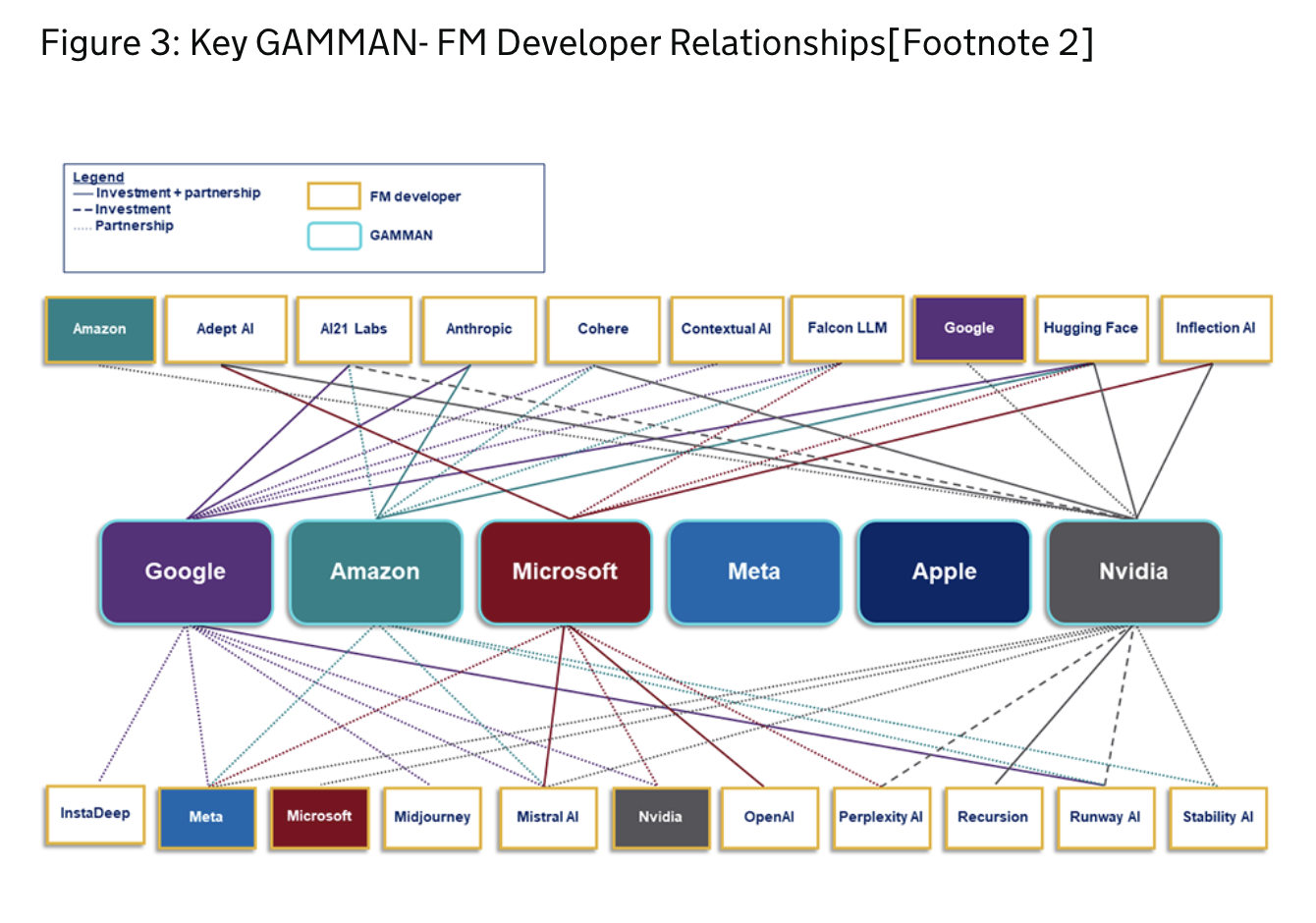

The CMA’s paper points to the recurring presence of Google, Amazon, Microsoft, Meta and Apple (aka GAMMA) across the AI value chain: compute, data, model development, partnerships, release and distribution platforms. And while the regulator also emphasized that it recognizes that partnership arrangements “can play a pro-competitive role in the technology ecosystem,” it coupled that with a warning that “powerful partnerships and integrated firms” can pose risks to competition that run counter to open markets.

Image Credits: CMA’s Foundation Models Update Paper

“We are concerned that the FM [foundational model] sector is developing in ways that risk negative market outcomes,” the CMA wrote, referencing a type of AI that’s developed with large amounts of data and compute power and may be used to underpin a variety of applications.

“In particular, the growing presence across the FM value chain of a small number of incumbent technology firms, which already hold positions of market power in many of today’s most important digital markets, could profoundly shape FM-related markets to the detriment of fair, open and effective competition, ultimately harming businesses and consumers, for example by reducing choice and quality, and by raising prices,” it warned.

The CMA undertook an initial review of the top end of the AI market last May and went on to publish a set of principles for “responsible” generative AI development that it said would guide its oversight of the fast-moving market. However, Will Hayter, senior director of the CMA’s Digital Markets Unit, told TechCrunch last fall that it was not in a rush to regulate advanced AI because it wanted to give the market a chance to develop.

Since then, the watchdog has

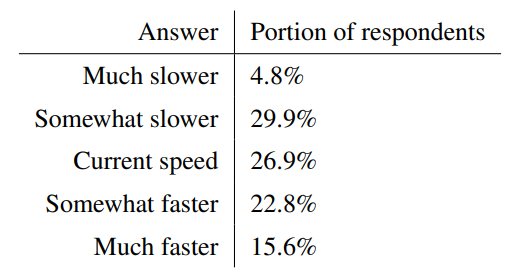

stepped in to scrutinize the cozy relationship between OpenAI, the developer behind the viral AI chatbot ChatGPT, and Microsoft, a major investor in OpenAI. Its update paper remarks on the giddy pace of change in the market. For example, it flagged research by the U.K.’s internet regulator, Ofcom, in

a report last year that found 31% of adults and 79% of 13- to 17-year-olds in the U.K. have used a generative AI tool, such as ChatGPT, Snapchat My AI or Bing Chat (aka Copilot). So there are signs the CMA is revising its initial chillaxed position on the GenAI market amid the commercial “whirlwind” sucking up compute, data and talent.

Its Update Paper identifies three “key interlinked risks to fair, effective, and open competition,” as it puts it, which the omnipresence of GAMMA speaks to: (1) firms controlling “critical inputs” for developing foundational models (also known as general-purpose AI models), which might allow them to restrict access and build a moat against competition; (2) tech giants’ ability to exploit dominant positions in consumer- or business-facing markets to distort choice for GenAI services and restrict competition in deployment of these tools; and (3) partnerships involving key players, which the CMA says “could exacerbate existing positions of market power through the value chain.”

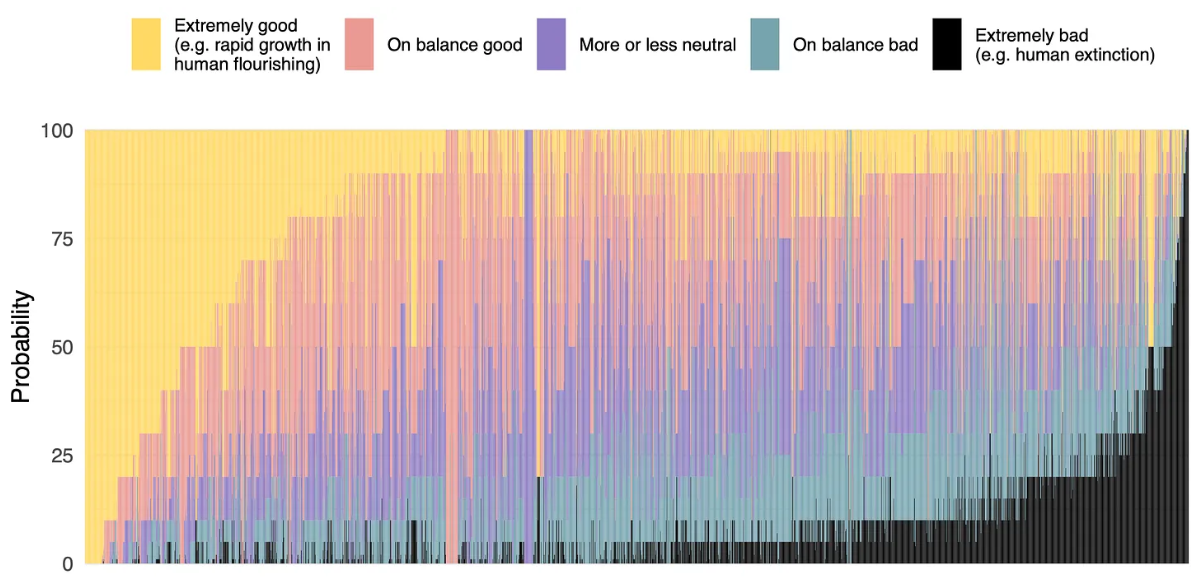

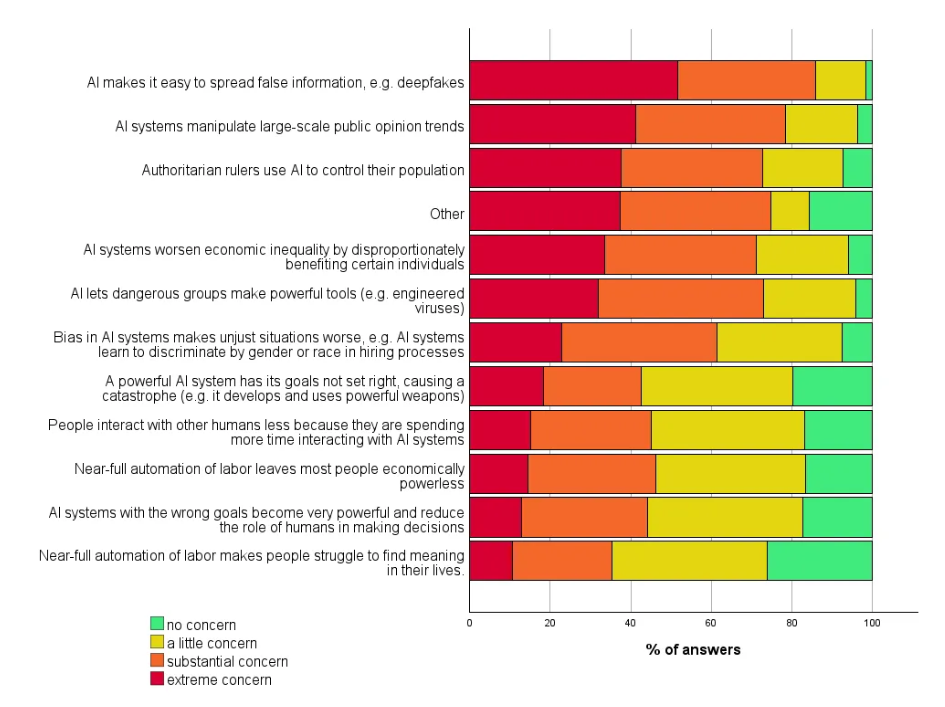

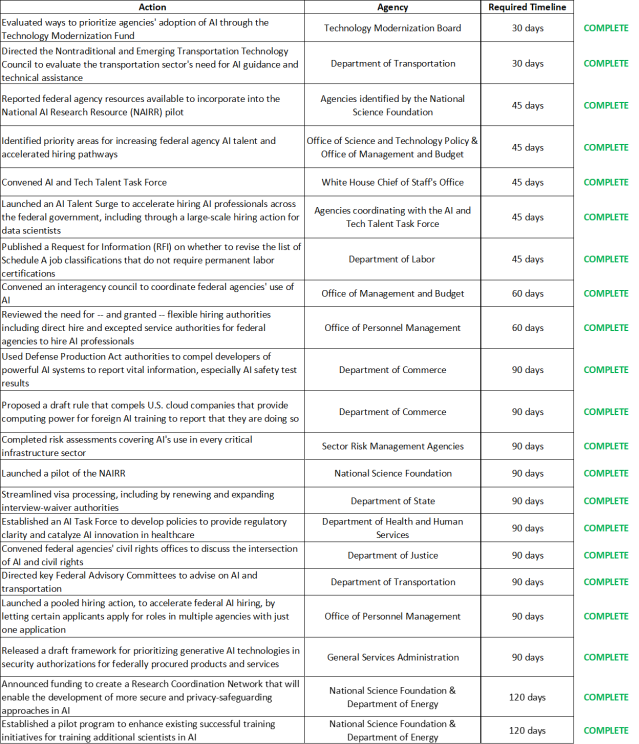

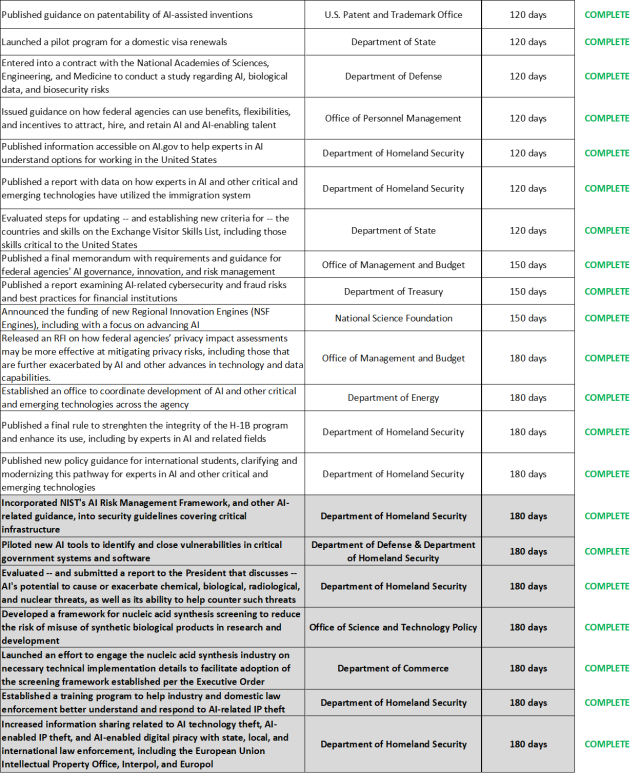

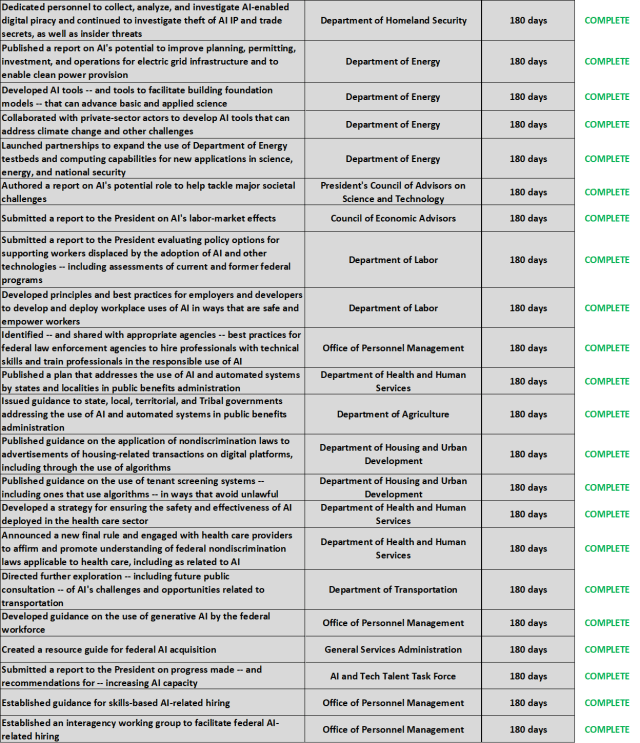

Image Credits: CMA

In a speech delivered Thursday in Washington, D.C., at a legal event focused on generative AI, Cardell pointed to the “winner-take-all dynamics” seen in earlier eras of web development, when Big Tech built and entrenched its Web 2.0 empires while regulators sat on their hands. She said it’s important that competition enforcers don’t repeat the same mistakes with this next generation of digital development.

“The benefits we wish to see flowing from [advanced AI], for businesses and consumers, in terms of quality, choice and price, and the very best innovations, are much more likely in a world where those firms are themselves subject to fair, open and effective competition, rather than one where they are simply able to leverage foundation models to further entrench and extend their existing positions of power in digital markets,” she said, adding: “So we believe it is important to act now to ensure that a small number of firms with unprecedented market power don’t end up in a position to control not just how the most powerful models are designed and built, but also how they are embedded and used across all parts of our economy and our lives.”

How is the CMA going to intervene at the top end of the AI market? It does not have concrete measures to announce, as yet, but Cardell said it’s closely tracking GAMMA’s partnerships and stepping up its use of merger review to see whether any of these arrangements fall within existing merger rules.

That would unlock formal powers of investigation, and even the ability to block connections it deems anti-competitive. But for now the CMA has not gone that far, despite clear and growing concerns about cozy GAMMA GenAI ties. Its review of the links between OpenAI and Microsoft, which aims to determine whether the partnership constitutes a “relevant merger situation,” continues.

“Some of these arrangements are quite complex and opaque, meaning we may not have sufficient information to assess this risk without using our merger control powers to build that understanding,” Cardell also told the audience, explaining the challenges of trying to understand the power dynamics of the AI market without unlocking formal merger review powers. “It may be that some arrangements falling outside the merger rules are problematic, even if not ultimately remediable through merger control. They may even have been structured by the parties to seek to avoid the scope of merger rules. Equally some arrangements may not give rise to competition concerns.”

“By stepping up our merger review, we hope to gain more clarity over which types of partnerships and arrangements may fall within the merger rules, and under what circumstances competition concerns may arise — and that clarity will also benefit the businesses themselves,” she added.

The CMA’s Update Paper sets out some “indicative factors,” which Cardell said may trigger greater concern about, and attention to, FM partnerships, such as the partners’ upstream power over AI inputs and their downstream power over distribution channels. She also said the watchdog will be looking closely at the nature of the partnership and the level of “influence and alignment of incentives” between partners.

Meanwhile, the U.K. regulator is urging AI giants to follow the seven development principles it set out last fall to steer market developments onto responsible rails where competition and consumer protection are baked in. (The short version of what it wants to see is: accountability, access, diversity, choice, flexibility, fair dealing, and transparency.)

“We’re committed to applying the principles we have developed and to using all legal powers at our disposal — now and in the future — to ensure that this transformational and structurally critical technology delivers on its promise,” Cardell said in a statement.