1/27

@EccentrismArt

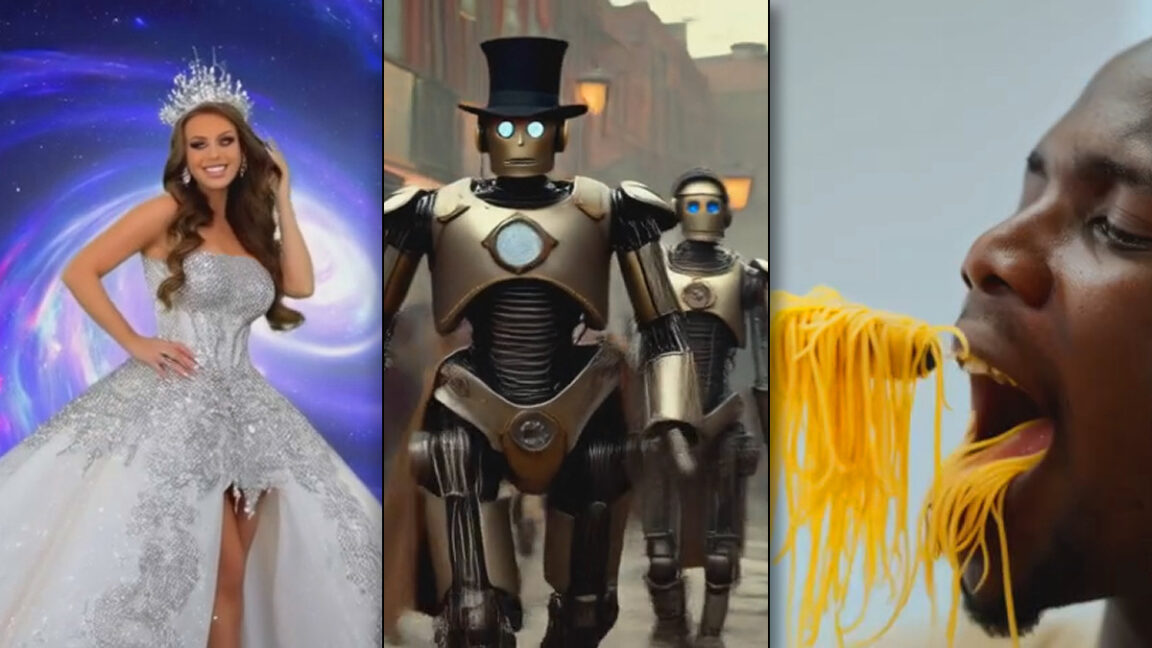

It’s here, “Castle Mates - Part 2”!!

Part 2 of my comedy mockumentary. If you haven’t seen Part 1, see it

Please like and share

https://video.twimg.com/amplify_video/1861791580732145664/vid/avc1/1280x720/QEwKrnDyVLbeiaZb.mp4

2/27

@EccentrismArt

Part 1 here:

[Quoted tweet]

“Castle Mates - Part 1”

A comedy mockumentary and spinoff to my “Monster Flatmates”

Part 2 coming soon. Please like and share

#lotr #skyrim #Witcher

https://video.twimg.com/amplify_video/1859272340473085952/vid/avc1/1280x720/6tF1jQBzfzM64bFY.mp4

3/27

@EccentrismArt

Workflow:

Midjourney and @freepik

@freepik & @KaiberAI and @runwayml (Act-One)

🫦Lip sync by Runway Act-One and @hedra_labs with my facial capture as well.

Suno V4

(Titles): @ideogram_ai

4/27

@EccentrismArt

My comedy friends! Castle Mates Part 2 is here!! Let me all know if you want a Part 3

@steviemac03

@koba_1975

@iamtomblake

@madpencil

@ikewagh

@pressmanc

@alexgnewmedia

@blvcklightai

@captainhahaa

@Stonekaiju

@empath_angry

@_lev3lup

@jeepersmedia

@Uncanny_Harry

@TheReelRobot

@NeuralViz

5/27

@dlennard

awesome job!

6/27

@EccentrismArt

Thank you!

7/27

@TashaCaufield

Lol, interviewing next to each other is a legit complaint

... Very nice...

8/27

@EccentrismArt

Haha right!? Thanks Tasha

9/27

@dankchungusYT

youtube?

10/27

@EccentrismArt

Working on it. I will probably combine part 1&2 and post it on YouTube. Also do an upscale with topaz

11/27

@Iamtomblake

Another one well done!

12/27

@EccentrismArt

Thanks Tom! Appreciate it. Hope someday Runway has Eleven Labs speech change built right into Act-One

13/27

@sergiosuave23

This is awesome and hilarious!

14/27

@EccentrismArt

Haha thanks! Glad you like it. Did you watch Part 1?

15/27

@HBCoop_

I like part 2

16/27

@EccentrismArt

Thanks again and thank you for the repost

Working on a Christmas one now stay tuned

17/27

@madpencil_

Well done my friend

18/27

@EccentrismArt

Thought I replied to you! Thank you appreciate it my friend

19/27

@MrDavids1

RIP Stabby The Ass Kicker, hahaha had a good laugh, nice one Jer!

20/27

@EccentrismArt

Thanks Travis!

21/27

@JyeBeats

That's glorious bro, well done.

22/27

@DreamStarter_1

Haha,good one!

23/27

@empath_angry

This is just so fabulous.

I don't laugh this much at proper TV sitcoms.

24/27

@levaiteart

What ai you used

25/27

@BLVCKLIGHTai

Lfg!!!

26/27

@Alterverse_AI

Yep, that's a series alright!

27/27

@stonekaiju

So good!!!! You kept to your word, the King got his revenge

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196
