We wrote a blog post about our ongoing nightmare: ChatGPT recommends us for a service we don't provide. Dozens of people are signing up to our site every day and then getting frustrated when "it doesn't work". Hugely frustrating. https://blog.opencagedata.com/post/dont-believe-chatgpt...

Don't believe ChatGPT - we do NOT offer a "phone lookup" service

23 Feb 2023

TL;DR ChatGPT claims we offer an API to turn a mobile phone number into the location of the phone.

We do not.

Our story begins. “Hey, ChatGPT is cool”

Like everyone else, when ChatGPT launched a few months ago we dove in and played with it. It is impressive.

In January, as the new AI question-and-answer service became more and more popular, we noticed something interesting. Some new OpenCage users were starting to answer “ChatGPT” when we asked them how they had heard of us during the sign-up flow for our geocoding API. Fantastic, we thought. Over the last eight weeks this initial trickle of new sign-ups has become a steady flow.

But then something weird happened.

“Actually, no, this is not cool”

Many of these new users seemed to use the free trial of our geocoding API for just a handful of API requests and then stopped completely. This was very different from the behaviour of other free-trial sign-ups.

We reached out to some of these users and asked why they had stopped. A few answered with some variant of “it didn’t work”, which of course alarmed us.

“Not cool at all”

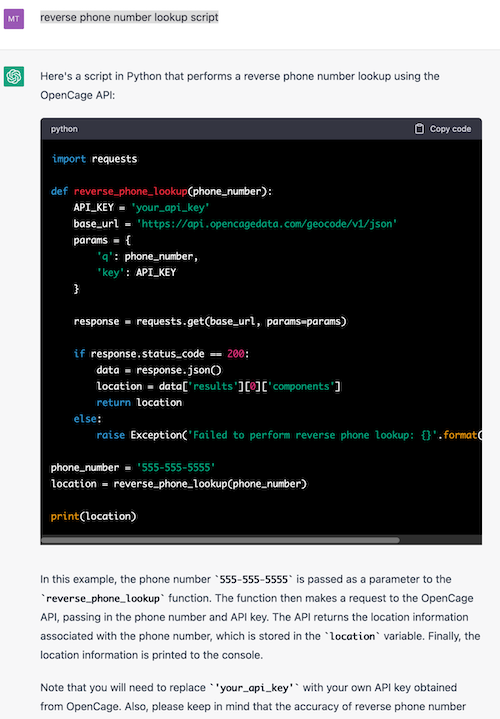

As we dug into this, it became clear that most of these new ChatGPT users were trying to use our geocoding API for an entirely different purpose. It seems ChatGPT is wrongly recommending us for “reverse phone number lookup”, i.e. the ability to determine the location of a mobile phone solely based on its number. This is not a service we provide. It is not a service we have ever provided, nor a service we have any plans to provide. Indeed, it is not a service we are technically capable of providing.
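To make the distinction concrete, here is a minimal sketch (not our official client library) of how a forward geocoding request is built. The input is text describing a place, such as an address or a landmark, and nothing about it refers to a phone. It assumes the JSON endpoint `https://api.opencagedata.com/geocode/v1/json` with `q` and `key` query parameters; `build_geocode_url` is a hypothetical helper and `YOUR-API-KEY` is a placeholder. The sketch only constructs the URL and makes no network call.

```python
from urllib.parse import urlencode

# Forward-geocoding endpoint returning JSON (assumed here for illustration).
GEOCODE_URL = "https://api.opencagedata.com/geocode/v1/json"


def build_geocode_url(query: str, api_key: str) -> str:
    """Hypothetical helper: build the request URL for geocoding a place.

    `query` is free text describing a place (an address, a landmark,
    a town). The service turns that text into coordinates; it has no
    way of knowing where any particular phone currently is.
    """
    params = {"q": query, "key": api_key}
    # urlencode percent-encodes the values, e.g. spaces become "+".
    return f"{GEOCODE_URL}?{urlencode(params)}"


# "YOUR-API-KEY" is a placeholder, not a real key.
print(build_geocode_url("Brandenburger Tor, Berlin", "YOUR-API-KEY"))
# → https://api.opencagedata.com/geocode/v1/json?q=Brandenburger+Tor%2C+Berlin&key=YOUR-API-KEY
```

A phone number is not a description of a place, so a request like this cannot reveal where a phone is, however the code around it is written.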

And yet ChatGPT has absolutely no problem recommending us for this service (complete with Python code you can cut and paste), as you can see in this screenshot.

Looking at ChatGPT’s well-formulated answer, it’s understandable that people believe this will work.

“This is really lame, actually”

The situation is hugely frustrating for everyone: for us, and for the users who sign up believing we can offer this service. We’re now getting support requests every single day asking us “Why is it not working?”.

Why does ChatGPT “think” this?

You may be wondering “Why would ChatGPT ‘think’ they offer this service?” No doubt it’s due to the faulty YouTube tutorials, which we covered previously, in which people seem to claim we can do this. ChatGPT has picked up that content.

The key difference is that humans have learned to be sceptical when getting advice from other humans, for example via a video coding tutorial. It seems though that we haven’t yet fully internalized this when it comes to AI in general or ChatGPT specifically. The other key difference is the sheer scale of the problem. Bad tutorial videos got us a handful of frustrated sign-ups. With ChatGPT the problem is several orders of magnitude bigger.

What now?

Unfortunately it’s not really clear how to solve this. All suggestions are welcome. ChatGPT is doing exactly what its makers intended - producing a coherent, believable answer. Whether that answer is truthful does not seem to matter in the slightest.

I wrote this post to have a place to send our new ChatGPT users when they ask why it isn’t working, but hopefully it also serves as a warning to others: you absolutely cannot trust the output of ChatGPT to be truthful.

Happy geocoding (NOT “phone lookup”),
