You are using an out of date browser. It may not display this or other websites correctly.

You should upgrade or use an alternative browser.

You should upgrade or use an alternative browser.

The A.I Megathread (LLM , GPT , Development)

More options

Who Replied?EvalPlus Leaderboard

evalplus.github.io

evalplus.github.io

Computer Science > Computation and Language

[Submitted on 20 Dec 2023 (v1), last revised 11 Jan 2024 (this version, v3)]WaveCoder: Widespread And Versatile Enhanced Instruction Tuning with Refined Data Generation

Zhaojian Yu, Xin Zhang, Ning Shang, Yangyu Huang, Can Xu, Yishujie Zhao, Wenxiang Hu, Qiufeng YinRecent work demonstrates that, after being fine-tuned on a high-quality instruction dataset, the resulting model can obtain impressive capabilities to address a wide range of tasks. However, existing methods for instruction data generation often produce duplicate data and are not controllable enough on data quality. In this paper, we extend the generalization of instruction tuning by classifying the instruction data to 4 code-related tasks and propose a LLM-based Generator-Discriminator data process framework to generate diverse, high-quality instruction data from open source code. Hence, we introduce CodeOcean, a dataset comprising 20,000 instruction instances across 4 universal code-related tasks,which is aimed at augmenting the effectiveness of instruction tuning and improving the generalization ability of fine-tuned model. Subsequently, we present WaveCoder, a fine-tuned Code LLM with Widespread And Versatile Enhanced instruction tuning. This model is specifically designed for enhancing instruction tuning of Code Language Models (LLMs). Our experiments demonstrate that Wavecoder models outperform other open-source models in terms of generalization ability across different code-related tasks at the same level of fine-tuning scale. Moreover, Wavecoder exhibits high efficiency in previous code generation tasks. This paper thus offers a significant contribution to the field of instruction data generation and fine-tuning models, providing new insights and tools for enhancing performance in code-related tasks.

| Subjects: | Computation and Language (cs.CL); Artificial Intelligence (cs.AI); Software Engineering (cs.SE) |

| Cite as: | arXiv:2312.14187 [cs.CL] |

| (or arXiv:2312.14187v3 [cs.CL] for this version) | |

| [2312.14187] WaveCoder: Widespread And Versatile Enhanced Instruction Tuning with Refined Data Generation Focus to learn more |

Submission history

From: Zhaojian Yu [view email][v1] Wed, 20 Dec 2023 09:02:29 UTC (1,336 KB)

[v2] Tue, 26 Dec 2023 13:51:38 UTC (1,336 KB)

[v3] Thu, 11 Jan 2024 07:44:55 UTC (1,463 KB)

Last edited:

22h

If this is true it is over: Unlimited context length is here.

Activation Beacon,

New method for extending LLMs context.

TL;DR: Add "global state" tokens before the prompt and predict auto-regressively (sliding window). Train to always condense to and read from the new tokens.

This way you get:

1. Fixed memory consumption.

2. Inference time grow linearly.

3. Train to use this with short windows: but then it works on long window generations.

3. The perplexity remain constant

[This is the amazing part!]

Results:

The authors trained LLaMA 2 for 10K-steps / 4K ctx_len

Then it generalized to 400K (!!!) ctx_len.

You should be able train any model you want to do this, the code provided is very very simple and clean.

If this will be reproducible for all models than ctx_len just got close to be considered "solved".

---

- Paper: - Code: FlagEmbedding/Long_LLM/activation_beacon at master · FlagOpen/FlagEmbedding

Soaring from 4K to 400K: Extending LLM’s Context with Activation Beacon

Long Context Compression with Activation Beacon

Long context compression is a critical research problem due to its significance in reducing the high computational and memory costs associated with LLMs. In this paper, we propose Activation Beacon, a plug-in module for transformer-based LLMs that targets effective, efficient, and flexible...

Computer Science > Computation and Language

[Submitted on 7 Jan 2024]Soaring from 4K to 400K: Extending LLM's Context with Activation Beacon

Peitian Zhang, Zheng Liu, shytao Xiao, Ninglu Shao, Qiwei Ye, Zhicheng DouThe utilization of long contexts poses a big challenge for large language models due to their limited context window length. Although the context window can be extended through fine-tuning, it will result in a considerable cost at both training and inference time, and exert an unfavorable impact to the LLM's original capabilities. In this work, we propose Activation Beacon, which condenses LLM's raw activations into more compact forms such that it can perceive a much longer context with a limited context window. Activation Beacon is introduced as a plug-and-play module for the LLM. It fully preserves the LLM's original capability on short contexts while extending the new capability on processing longer contexts. Besides, it works with short sliding windows to process the long context, which achieves a competitive memory and time efficiency in both training and inference. Activation Beacon is learned by the auto-regression task conditioned on a mixture of beacons with diversified condensing ratios. Thanks to such a treatment, it can be efficiently trained purely with short-sequence data in just 10K steps, which consumes less than 9 hours on a single 8xA800 GPU machine. The experimental studies show that Activation Beacon is able to extend Llama-2-7B's context length by ×100 times (from 4K to 400K), meanwhile achieving a superior result on both long-context generation and understanding tasks. Our model and code will be available at the BGE repository.

| Subjects: | Computation and Language (cs.CL); Artificial Intelligence (cs.AI) |

| Cite as: | arXiv:2401.03462 [cs.CL] |

| (or arXiv:2401.03462v1 [cs.CL] for this version) | |

| [2401.03462] Soaring from 4K to 400K: Extending LLM's Context with Activation Beacon Focus to learn more |

Submission history

From: Peitian Zhang [view email][v1] Sun, 7 Jan 2024 11:57:40 UTC (492 KB)

Last edited:

RWKV-Gradio-2 - a Hugging Face Space by BlinkDL

Enter a prompt, and the application generates text based on your input. You can customize the length, creativity, and repetition penalties of the generated text.

huggingface.co

Introducing Eagle-7B

Based on the RWKV-v5 architecture, bringing into opensource space, the strongest

- multi-lingual model

(beating even mistral)

- attention-free transformer today

(10-100x+ lower inference)

With comparable English performance with the best 1T 7B models

Last edited:

SWA: Sliding Window Attention

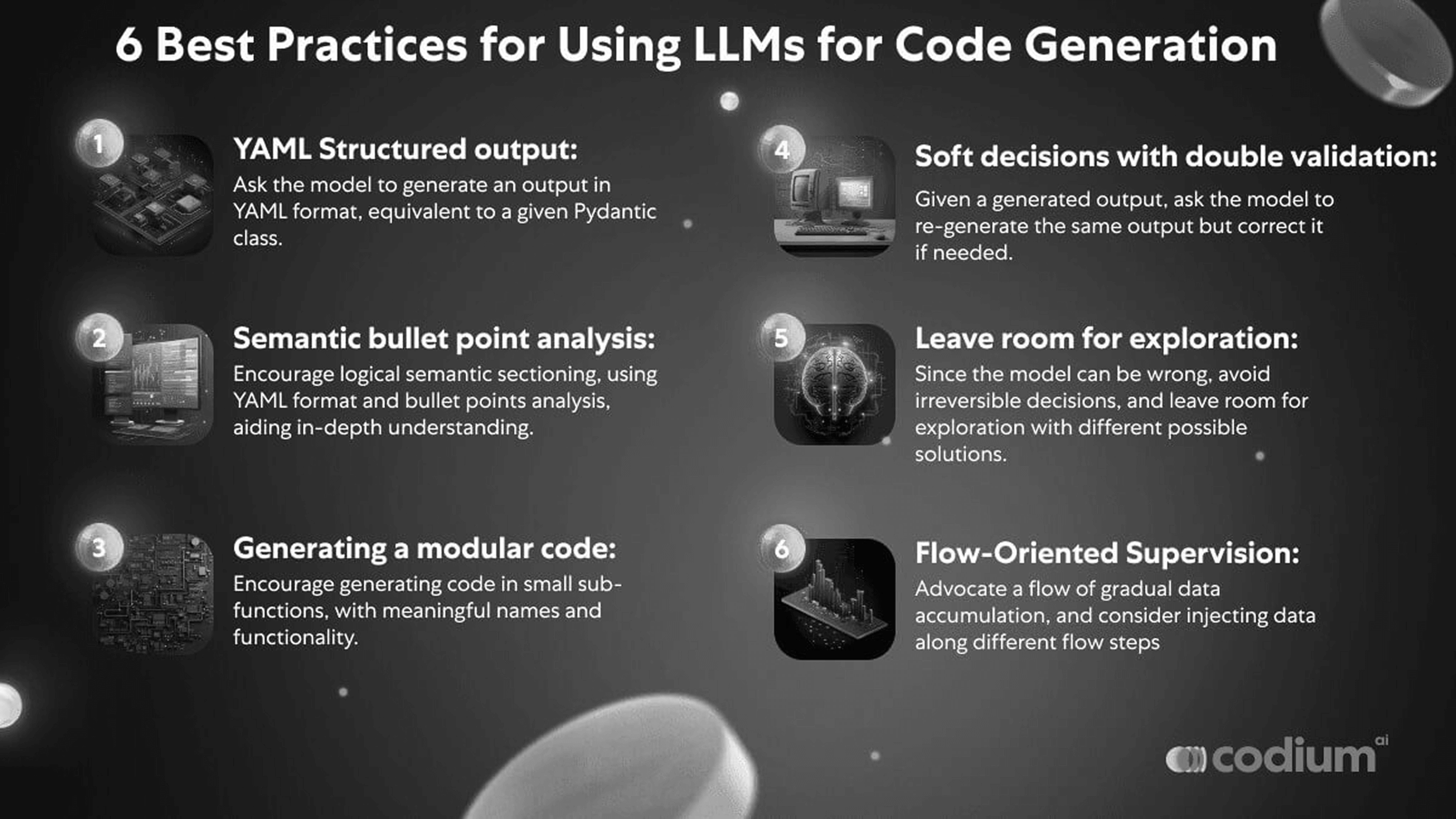

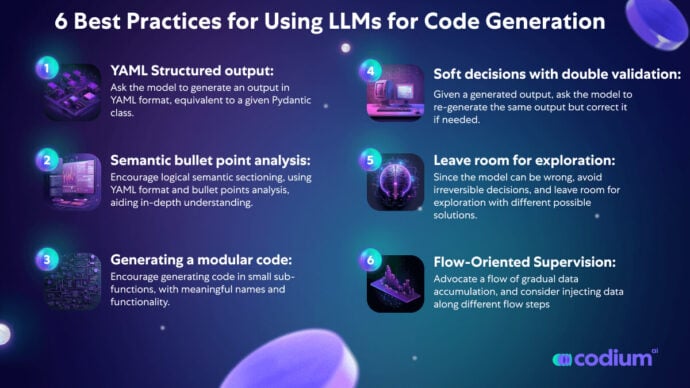

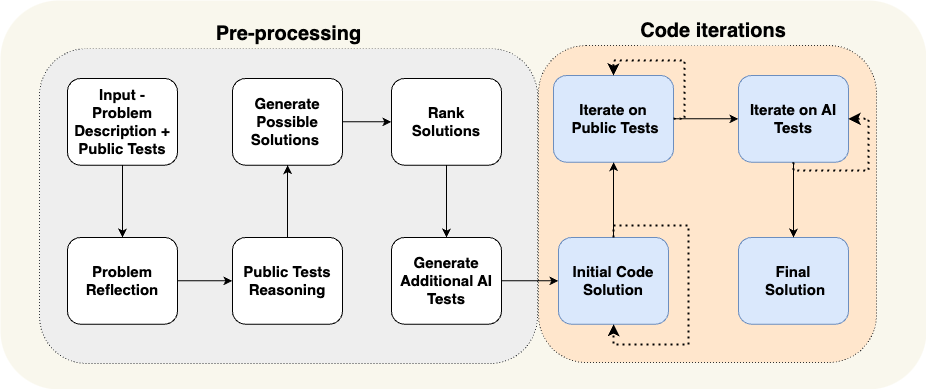

AlphaCodium - super interesting work that shows just how much alpha (no pun intended) is there from building complex prompt flows in this case for code generation.

It achieves better results than DeepMind's AlphaCode with 4 orders of magnitude fewer LLM calls! This is a direct consequence of the brute force approach that AlphaCode took generating ~100k solutions and then filtering them down (the recently announced AlphaCode2 is much more sample efficient though).

The framework is agnostic to the choice of the underlying LLM. On the validation set with GPT-4 they increase the pass@5 accuracy from 19 -> 44%.

There are 2 steps in the pipeline (and definitely reminds me of Meta's Self-Rewarding Language Models work I shared yesterday):

1) Pre-processing -> transforming the original problem statement into something more concise and parsable by the model, forcing the model to explain the results (why test input leads to a certain output), rank various solutions, etc.

2) Iterative loop where the model fixes its solution code against a set of tests

I feel ultimately we'll want this to be a part of the LLM training process as well and not something we append ad-hoc, but definitely exciting to see how much low hanging fruit is still out there!

Paper:

Code Generation with AlphaCodium: From Prompt Engineering to Flow Engineering

Code generation problems differ from common natural language problems - they require matching the exact syntax of the target language, identifying happy paths and edge cases, paying attention to numerous small details in the problem spec, and addressing other code-specific issues and...

GitHub - Codium-ai/AlphaCodium: Official implementation for the paper: "Code Generation with AlphaCodium: From Prompt Engineering to Flow Engineering""

Official implementation for the paper: "Code Generation with AlphaCodium: From Prompt Engineering to Flow Engineering"" - Codium-ai/AlphaCodium

State-of-the-art Code Generation with AlphaCodium - From Prompt Engineering to Flow Engineering

Read about State-of-the-art Code Generation with AlphaCodium - From Prompt Engineering to Flow Engineering in our blog.

www.codium.ai

www.codium.ai

State-of-the-art Code Generation with AlphaCodium – From Prompt Engineering to Flow Engineering

TECHNOLOGYTal Ridnik January 17, 2024 • 17 min read

TL;DR

Code generation problems differ from common natural language problems – they require matching the exact syntax of the target language, identifying happy paths and edge cases, paying attention to numerous small details in the problem spec, and addressing other code-specific issues and requirements. Hence, many of the optimizations and tricks that have been successful in natural language generation may not be effective for code tasks.In this work, we propose a new approach to code generation by LLMs, which we call AlphaCodium – a test-based, multi-stage, code-oriented iterative flow, that improves the performances of LLMs on code problems.

We tested AlphaCodium on a challenging code generation dataset called CodeContests, which includes competitive programming problems from platforms such as Codeforces. The proposed flow consistently and significantly improves results.

On the validation set, for example, GPT-4 accuracy (pass@5) increased from 19% with a single well-designed direct prompt to 44% with the AlphaCodium flow. AlphaCodium also outperforms previous works, such as AlphaCode, while having a significantly smaller computational budget.

Many of the principles and best practices acquired in this work, we believe, are broadly applicable to general code generation tasks.

In our very new open-source on AlphaCodium2.3K we share our AlphaCodium solution to CodeContests, along with a complete reproducible dataset evaluation and benchmarking scripts, to encourage further research in this area.

CodeContests dataset

CodeContests is a challenging code generation dataset introduced by Google’s Deepmind, involving problems curated from competitive programming platforms such as Codeforces. The dataset contains ~10K problems that can be used to train LLMs, as well as a validation and test set to assess the ability of LLMs to solve challenging code generation problems.In this work, instead of training a dedicated model, we focused on developing a code-oriented flow, that can be applied to any LLM pre-trained to support coding tasks, such as GPT or DeepSeek. Hence, we chose to ignore the train set, and focused on the validation and test sets of CodeContests, which contain 107 and 165 problems, respectively. Figure 1 depicts an example of a typical problem from CodeContests dataset:

Each problem consists of a description and public tests, available as inputs to the model. The goal is to generate a code solution that produces the correct output for any (legal) input. A private test set, which is not available to the model or contesters, is used to evaluate the submitted code solutions.

What makes CodeContests a good dataset for evaluating LLMs on code generation tasks?

1) CodeContests, unlike many other competitive programming datasets, utilizes a comprehensive private set of tests to avoid false positives – each problem contains ~200 private input-output tests the generated code solution must pass.

2) LLMs generally do not excel at paying attention to small details because they typically transform the problem description to some “average” description, similar to common cases on which they were trained. Real-world problems, on the other hand, frequently contain minor details that are critical to their proper resolution. A key feature of CodeContests dataset is that the problem descriptions are, by design, complicated and lengthy, with small details and nuances (see a typical problem description in Figure 1). We feel that adding this degree of freedom of problem understanding is beneficial since it simulates real-life problems, which are often complicated and involve multiple factors and considerations. This is in contrast to more common code datasets such as HumanEval, where the problems are easier and presented in a concise manner. An example of a typical HumanEval code problem appears in Appendix 1.

Figure 2 depicts the model’s introspection on the problem presented in Figure 1. Note that proper self-reflection makes the problem clearer and more coherent. This illustrates the importance of problem understanding as part of a flow that can lead with high probability to a correct code solution.

The proposed flow

Due to the complicated nature of code generation problems, we observed that single-prompt optimizations, or even chain-of-thought prompts, have not led to meaningful improvement in the solve ratio of LLMs on CodeContest. The model struggles to understand and “digest” the problem and continuously produces wrong code, or a code that fails to generalize to unseen private tests. Common flows, that are suitable for natural language tasks, may not be optimal for code-generation tasks, which include an untapped potential – repeatedly running the generated code, and validating it against known examples.Instead of common prompt engineering techniques used in NLP, we found that to solve CodeContest problems it was more beneficial to employ a dedicated code-generation and testing-oriented flow, that revolves around an iterative process where we repeatedly run and fix the generated code against input-output tests. Two key elements for this code-oriented flow are (a) generating additional data in a pre-processing stage, such as self-reflection and public tests reasoning, to aid the iterative process, and (b) enrichment of the public tests with additional AI-generated tests.

In Figure 3 we present our proposed flow for solving competitive programming problems:

continue reading on site

Santiago

@svpino

Jan 18

Jan 18

We are one step closer to having AI generate code better than humans!

There's a new open-source, state-of-the-art code generation tool. It's a new approach that improves the performance of Large Language Models generating code.

The paper's authors call the process "AlphaCodium" and tested it on the CodeContests dataset, which contains around 10,000 competitive programming problems.

The results put AlphaCodium as the best approach to generate code we've seen. It beats DeepMind's AlphaCode and their new AlphaCode2 without needing to fine-tune a model!

I'm linking to the paper, the GitHub repository, and a blog post below, but let me give you a 10-second summary of how the process works:

Instead of using a single prompt to solve problems, AlphaCodium relies on an iterative process that repeatedly runs and fixes the generated code using the testing data.

1. The first step is to have the model reason about the problem. They describe it using bullet points and focus on the goal, inputs, outputs, rules, constraints, and any other relevant details.

2. Then, they make the model reason about the public tests and come up with an explanation of why the input leads to that particular output.

3. The model generates two to three potential solutions in text and ranks them in terms of correctness, simplicity, and robustness.

4. Then, it generates more diverse tests for the problem, covering cases not part of the original public tests.

5. Iteratively, pick a solution, generate the code, and run it on a few test cases. If the tests fail, improve the code and repeat the process until the code passes every test.

Last edited:

miqudev/miqu-1-70b · Hugging Face

We’re on a journey to advance and democratize artificial intelligence through open source and open science.

huggingface.co

Nexesenex/Miqu-1-70b-Requant-iMat.GGUF · Hugging Face

We’re on a journey to advance and democratize artificial intelligence through open source and open science.

huggingface.co

alpindale/miqu-1-70b-pytorch · Hugging Face

We’re on a journey to advance and democratize artificial intelligence through open source and open science.

huggingface.co

Introducing Qwen-VL

Along with the rapid development of our large language model Qwen, we leveraged Qwen’s capabilities and unified multimodal pretraining to address the limitations of multimodal models in generalization, and we opensourced multimodal model Qwen-VL in Sep. 2023. Recently, the Qwen-VL series has...

Introducing Qwen-VL

January 25, 2024· 12 min · 2505 words · Qwen Team | Translations:Along with the rapid development of our large language model Qwen, we leveraged Qwen’s capabilities and unified multimodal pretraining to address the limitations of multimodal models in generalization, and we opensourced multimodal model Qwen-VL in Sep. 2023. Recently, the Qwen-VL series has undergone a significant upgrade with the launch of two enhanced versions, Qwen-VL-Plus and Qwen-VL-Max. The key technical advancements in these versions include:

- Substantially boost in image-related reasoning capabilities;

- Considerable enhancement in recognizing, extracting, and analyzing details within images and texts contained therein;

- Support for high-definition images with resolutions above one million pixels and images of various aspect ratios.

| Model Name | Model Description |

| qwen-vl-plus | Qwen's Enhanced Large Visual Language Model. Significantly upgraded for detailed recognition capabilities and text recognition abilities, supporting ultra-high pixel resolutions up to millions of pixels and arbitrary aspect ratios for image input. It delivers significant performance across a broad range of visual tasks. |

| qwen-vl-max | Qwen's Most Capable Large Visual Language Model. Compared to the enhanced version, further improvements have been made to visual reasoning and instruction-following capabilities, offering a higher level of visual perception and cognitive understanding. It delivers optimal performance on an even broader range of complex tasks. |

Compared to the open-source version of Qwen-VL, these two models perform on par with Gemini Ultra and GPT-4V in multiple text-image multimodal tasks, significantly surpassing the previous best results from open-source models.

Notably, Qwen-VL-Max outperforms both GPT-4V from OpenAI and Gemini from Google in tasks on Chinese question answering and Chinese text comprehension. This breakthrough underscores the model’s advanced capabilities and its potential to set new standards in the field of multimodal AI research and application.

| Model | DocVQA Document understanding | ChartQA Chart understanding | AI2D Science diagrams | TextVQA Text reading | MMMU College-level problems | MathVista Mathematical reasoning | MM-Bench-CN Natural image QA in Chinese |

|---|---|---|---|---|---|---|---|

| Other Best Open-source LVLM | 81.6% (CogAgent) | 68.4% (CogAgent) | 73.7% (Fuyu-Medium) | 76.1% (CogAgent) | 45.9% (Yi-VL-34B) | 36.7% (SPHINX-V2) | 72.4% (InternLM-XComposer-VL) |

| Gemini Pro | 88.1% | 74.1% | 73.9% | 74.6% | 47.9% | 45.2% | 74.3% |

| Gemini Ultra | 90.9% | 80.8% 1 | 79.5% 1 | 82.3% 1 | 59.4% 1 | 53.0% 1 | - |

| GPT-4V | 88.4% | 78.5% | 78.2% | 78.0% | 56.8% | 49.9% | 73.9% |

| Qwen-VL-Plus | 91.4% | 78.1% | 75.9% | 78.9% | 45.2% | 43.3% | 68.0% |

| Qwen-VL-Max | 93.1% 1 | 79.8% 2 | 79.3% 2 | 79.5% 2 | 51.4% 3 | 50.0% 2 | 75.1% 1 |

New versions of the Qwen-VL-Plus and Qwen-VL-Max models not only exhibit exceptional benchmark performance but also demonstrate a marked improvement in problem-solving capabilities within real-world scenarios. These advanced models effortlessly engage in dialogue, identify celebrities and landmarks, generate text, and notably, have significantly enhanced their abilities to describe and interpret visual content.

Here we present some practical examples:

1. Basic Recognition Capabilities

The latest Qwen-VL models are now more adept at accurately describing and identifying complex information within images, as well as providing detailed background and answering related questions. For instance, Qwen-VL can recognize not only common objects, but also celebrities and landmarks. Qwen-VL can write poetry in various languages inspired by visuals, and analyze everyday screenshots.Example: Celebrity Recognition (1/4)NEXT

User

who are they?

Qwen-VL

These are some of the most famous and highest-paid celebrities in the world. From left to right, top to bottom:

- Justin Bieber (Canadian singer)

- Lionel Messi (Argentine soccer player)

- Cristiano Ronaldo (Portuguese soccer player)

- Elon Musk (South African-born American entrepreneur and businessman)

- Kylie Jenner (American media personality and socialite)

- Taylor Swift (American singer-songwriter)

- Robert Downey Jr. (American actor)

- Scarlett Johansson (American actress)

It’s worth noting that this is just a small sample of the many talented and successful celebrities out there, and that fame and wealth are not the only measures of success or importance.

User

their chinese name?

Qwen-VL

Here are the Chinese names of the celebrities mentioned:

- Justin Bieber: 贾斯汀·比伯

- Lionel Messi: 莱昂内尔·梅西

- Cristiano Ronaldo: 克里斯蒂亚诺·罗纳尔多

- Elon Musk: 埃隆·马斯克

- Kylie Jenner: 凯莉·詹娜

- Taylor Swift: 泰勒·斯威夫特

- Robert Downey Jr.: 小罗伯特·唐尼

- Scarlett Johansson: 斯嘉丽·约翰逊

Note that these are just one possible translation of their names into Chinese, and there may be other variations used in different contexts or regions.

2. Visual Agent Capability: The Essential Role of Localization

Beyond its fundamental capabilities in description and recognition, Qwen-VL also has impressive abilities to pinpoint and query specific elements. For instance, it can accurately highlight the black cars within an image. Moreover, Qwen-VL is also equipped to make judgments, deductions, and decisions based on the prevailing context of a scene.Example: Locate Whatever You Desire (1/3)NEXT

User

Locate the red car

Qwen-VL

The red car is located in the bottom right corner of the image.

3. Visual Reasoning Capability: To Solve Real Problems

One of the most notable advancements in the latest Qwen-VL is its capacity for complex reasoning based on visual inputs. This enhanced visual reasoning capability goes well beyond mere content description, extending to the comprehension and interpretation of intricate representations such as flowcharts, diagrams, and other symbolic systems. In the realms of problem-solving and reasoning, Qwen-VL-Plus/Max excels not only in mathematical problem-solving and information organization but also in conducting more profound interpretations and analyses of charts and graphs.Example: Math (1/5)NEXT

User

read the image and solve it step by step

Qwen-VL

The image shows two geometric figures, a rectangular prism and a cube, with their dimensions labeled. The problem asks to calculate the surface area and volume of these figures.

- For the rectangular prism:

- Length (l) = 4 cm

- Width (w) = 2 cm

- Height (h) = 3 cm

Surface Area (SA) of a rectangular prism = 2lw + 2lh + 2wh Volume (V) of a rectangular prism = lwh

Substituting the given values: SA = 2(4cm)(2cm) + 2(4cm)(3cm) + 2(2cm)(3cm) SA = 16cm² + 24cm² + 12cm² SA = 52cm²

V = (4cm)(2cm)(3cm) V = 24cm³

- For the cube:

- Side length (a) = 3 dm

Surface Area (SA) of a cube = 6a² Volume (V) of a cube = a³

Substituting the given value: SA = 6(3dm)² SA = 6(9dm²) SA = 54dm²

V = (3dm)³ V = 27dm³

So, the surface area and volume for each figure are as follows:

For the rectangular prism:

- Surface Area = 52 cm²

- Volume = 24 cm³

For the cube:

- Surface Area = 54 dm²

- Volume = 27 dm³

4. Text Information Recognition & Processing

Text processing in images has also improved significantly, especially in terms of recognizing Chinese and English text. Qwen-VL-Plus/Max can now efficiently extract information from tables and documents and reformat this information to meet custom output requirements. In addition, it has an efficient mechanism for identifying and converting dense text, which is very effective in dealing with documents that contain a lot of information. It supports images with extreme aspect ratios, ensuring the flexibility to process diverse visual content.Example: Information Retrieval (1/3)NEXT

User

Please read the text in this image and return the information in the following JSON format (note xxx is placeholder, if the information is not available in the image, put “N/A” instead). {“class”: xxx, “DLN”: xxx, “DOB”: xxx, “Name”: xxx, “Address”: xxx, “EXP”: xxx, “ISS”: xxX, “SEX”: xxx, “HGT”: xxx, “WGT”: xxx, “EYES”: xxx, “HAIR”: xxX, “DONOR”: xxx}

Qwen-VL

{“class”: “C”, “DLN”: “I1234568”, “DOB”: “08/31/1977”, “Name”: “IMA CARDHOLDER”, “Address”: “2570 24TH STREET ANYTOWN, CA 95818”, “EXP”: “08/31/2014”, “ISS”: “08/31/2009”, “SEX”: “F”, “HGT”: “5’-05"”, “WGT”: “125 lb”, “EYES”: “BRN”, “HAIR”: “BRN”, “DONOR”: “YES”}

How to Use

Now you can access Qwen-VL-Plus and Qwen-VL-Max through the Huggingface Spaces, the Qwen website, and Dashscope APIs.- Try Qwen-VL-Plus ( Qwen-VL-Plus - a Hugging Face Space by Qwen) and Qwen-VL-Max ( Qwen-VL-Max - a Hugging Face Space by Qwen) in the Huggingface Spaces

GitHub - QwenLM/Qwen-VL: The official repo of Qwen-VL (通义千问-VL) chat & pretrained large vision language model proposed by Alibaba Cloud.

The official repo of Qwen-VL (通义千问-VL) chat & pretrained large vision language model proposed by Alibaba Cloud. - QwenLM/Qwen-VL

Last edited:

In case you are out of the loop:

𝚖𝚒𝚜𝚝𝚛𝚊𝚕-𝚖𝚎𝚍𝚒𝚞𝚖 might have been leaked.

(or we are all trolled by *****)

Why is this big?

𝚖𝚒𝚜𝚝𝚛𝚊𝚕-𝚖𝚎𝚍𝚒𝚞𝚖 is the best LLM ever after GPT-4

Everything is all still ongoing, people are benchmarking this model right now.

But so far it seems to be generating VERY similar texts to what you get by mistral's endpoint.

Let's see.

---

Links:

- Model: miqudev/miqu-1-70b · Hugging Face

- ***** thread: https://boards.4...chan.org/g/thread/98696032

Original thread starting the investigation by

@JagersbergKnut here: https://nitter.unixfox.eu/JagersbergKnut/status/1751733218498286077

Some investigation into the weights by @nisten here: https://nitter.unixfox.eu/nisten/status/1751812911226331294

DeepSeek-Coder: When the Large Language Model Meets Programming -- The Rise of Code Intelligence - DeepSeek-AI 2024 - SOTA open-source coding model that surpasses GPT-3.5 and Codex while being unrestricted in research and commercial use!

Paper: [2401.14196] DeepSeek-Coder: When the Large Language Model Meets Programming -- The Rise of Code Intelligence

Github: GitHub - deepseek-ai/DeepSeek-Coder: DeepSeek Coder: Let the Code Write Itself

Models: deepseek-ai (DeepSeek)

Abstract:

The rapid development of large language models has revolutionized code intelligence in software development. However, the predominance of closed-source models has restricted extensive research and development. To address this, we introduce the DeepSeek-Coder series, a range of open-source code models with sizes from 1.3B to 33B, trained from scratch on 2 trillion tokens. These models are pre-trained on a high-quality project-level code corpus and employ a fill-in-the-blank task with a 16K window to enhance code generation and infilling. Our extensive evaluations demonstrate that DeepSeek-Coder not only achieves state-of-the-art performance among open-source code models across multiple benchmarks but also surpasses existing closed-source models like Codex and GPT-3.5. Furthermore, DeepSeek-Coder models are under a permissive license that allows for both research and unrestricted commercial use.

deepseek-ai/deepseek-coder-7b-instruct-v1.5 · coding scores?

Please either update your github page with benchmarrks of this model or add them to the model card. Humaneval, humaneval+, ect.

huggingface.co

deepseek-ai/deepseek-coder-7b-instruct-v1.5 · Hugging Face

We’re on a journey to advance and democratize artificial intelligence through open source and open science.

huggingface.co

LoneStriker/deepseek-coder-7b-instruct-v1.5-GGUF · Hugging Face

We’re on a journey to advance and democratize artificial intelligence through open source and open science.

huggingface.co