The A.I Megathread (LLM , GPT , Development)

Get the Microsoft Copilot app for free for a limited time. The app gives you free access to ChatGPT Plus.

Android only

play.google.com

Microsoft Copilot - Apps on Google Play

Calm. Confident. Copilot. Here to help. A companion for every moment.

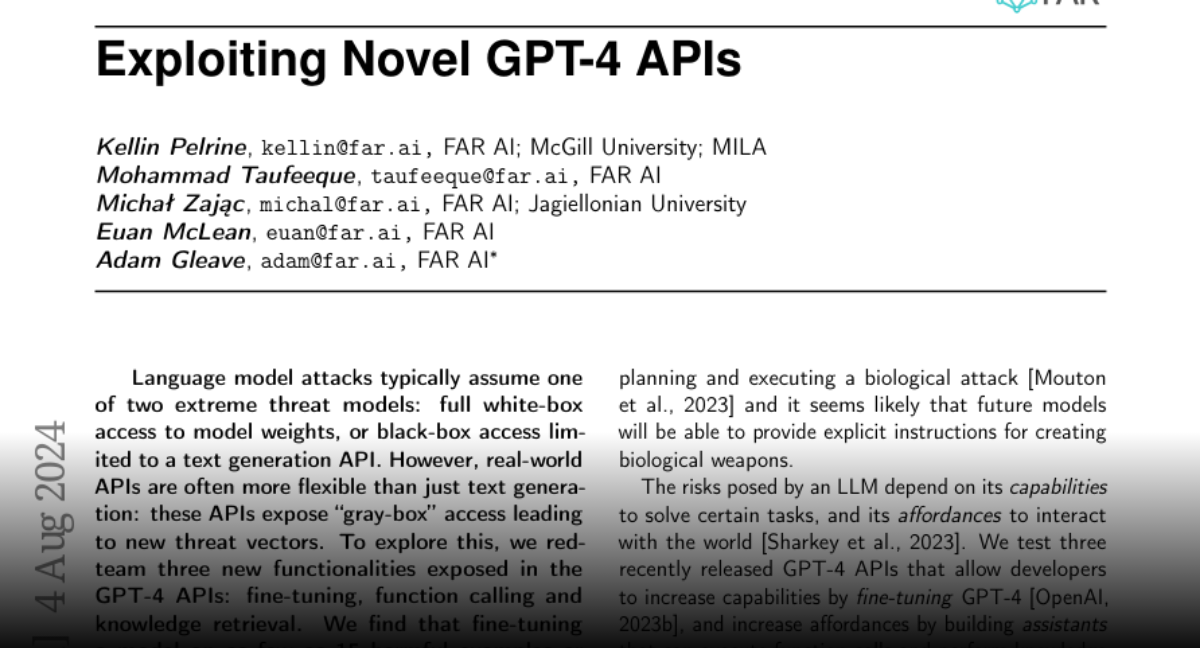

Language model attacks typically assume one of two extreme threat models: full white-box access to model weights, or black-box access limited to a text generation API. However, real-world APIs are often more flexible than just text generation: these APIs expose "gray-box" access leading to new threat vectors. To explore this, we red-team three new functionalities exposed in the GPT-4 APIs: fine-tuning, function calling and knowledge retrieval. We find that fine-tuning a model on as few as 15 harmful examples or 100 benign examples can remove core safeguards from GPT-4, enabling a range of harmful outputs. Furthermore, we find that GPT-4 Assistants readily divulge the function call schema and can be made to execute arbitrary function calls. Finally, we find that knowledge retrieval can be hijacked by injecting instructions into retrieval documents. These vulnerabilities highlight that any additions to the functionality exposed by an API can create new vulnerabilities.

Get the Microsoft Copilot app for free for a limited time. The app gives you free access to ChatGPT Plus.

Android only

Microsoft Copilot - Apps on Google Play

Calm. Confident. Copilot. Here to help. A companion for every moment.

play.google.com

it's not for a limited time, it's limited to android for now with no iOS version yet. been using bing chat/copilot for months now and microsoft has yet to signal if and when they'll charge users for access.

GitHub - vikhyat/moondream: tiny vision language model

moondream

a tiny vision language model

project goals

Build a high-quality, low-hallucination vision language model small enough to run on an edge device without a GPU.

moondream0

Initial prototype built using SigLIP, Phi-1.5, and the LLaVa training dataset. The model is for research purposes only, and is subject to the Phi and LLaVa license restrictions.

Examples

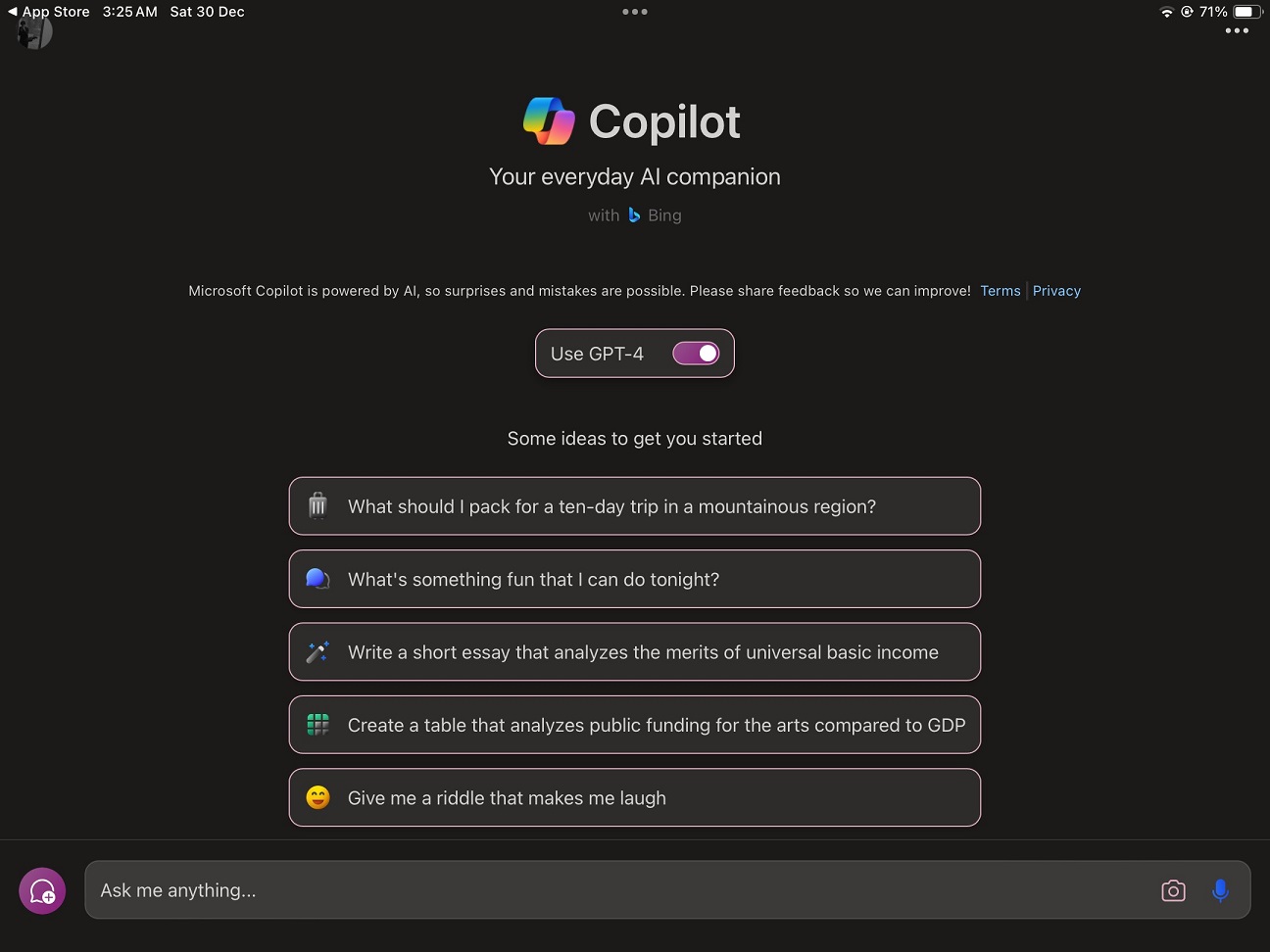

Microsoft Copilot app arrives on iOS, bringing free ChatGPT-4 to iPhone and iPad

By Mayank Parmar

December 30, 2023

Microsoft has released its Copilot app for iOS devices, just two days after I reported exclusively that the iOS version was almost ready. Now, iPhone and iPad users can download it from the Apple App Store. Copilot isn’t just another name for Bing Chat; it brings some cool new things to the table.

The Microsoft Copilot app is available via Apple’s App Store, and the tech giant clarified that the AI app is optimized for iPad. In our tests, we also noticed that the Copilot app offers a better experience than the dedicated ChatGPT app for iPad.

While Bing integrates search, rewards, and chat functionalities, Copilot streamlines its focus on an advanced chat interface, incorporating features like the innovative DALL-E 3. Unlike Bing, which combines search and AI, Copilot emerges as a ChatGPT-like app tailored to iOS devices.

Microsoft Copilot for iPad

Copilot maintains its versatility as a multi-functional assistant on Android, supporting GPT Vision, GPT-4, and DALL-E. The addition of ChatGPT-4 Turbo, available to select users, enhances its ability to provide information on recent events, especially with the search plugin disabled.

Copilot is ChatGPT-4 for iOS, but it’s absolutely free. You can write emails, stories, or even summarize tough texts. It’s great with languages too, helping you translate, proofread, and more. And it’s all free, which Microsoft says won’t change.

It also has plugin support, even for third-party plugins, at no extra cost. This can be super handy for planning trips or sprucing up your resume. Plus, there’s the Image Creator that turns your words into pictures right on your phone.

argilla/notux-8x7b-v1 · Hugging Face

Model Card for Notux 8x7B-v1

This model is a preference-tuned version of mistralai/Mixtral-8x7B-Instruct-v0.1 on the argilla/ultrafeedback-binarized-preferences-cleaned dataset using DPO (Direct Preference Optimization).

As of Dec 26th 2023, it outperforms Mixtral-8x7B-Instruct-v0.1 and is the top ranked MoE (Mixture of Experts) model on the Hugging Face Open LLM Leaderboard.

This is part of the Notus family of models and experiments, where the Argilla team investigates data-first and preference tuning methods like dDPO (distilled DPO). This model is the result of our first experiment at tuning a MoE model that has already been fine-tuned with DPO (i.e., Mixtral-8x7B-Instruct-v0.1).
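For anyone unfamiliar with DPO, a minimal sketch of the per-example loss may help when reading the Rewards/* columns in the training results. This is an illustration only, not Argilla's training code: the function name `dpo_loss`, the sample log-probabilities, and `beta=0.1` are all assumptions (the model card does not state which beta was used).

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """Return (loss, chosen_reward, rejected_reward) for one preference pair.

    Inputs are summed log-probabilities of the chosen / rejected completion
    under the policy being trained and under the frozen reference model.
    beta is a hypothetical value; the source does not give one.
    """
    # Implicit rewards: how far the policy has moved from the reference.
    chosen_reward = beta * (policy_chosen_logp - ref_chosen_logp)
    rejected_reward = beta * (policy_rejected_logp - ref_rejected_logp)
    margin = chosen_reward - rejected_reward

    # loss = -log(sigmoid(margin)), computed as a numerically stable softplus.
    if margin >= 0:
        loss = math.log1p(math.exp(-margin))
    else:
        loss = -margin + math.log1p(math.exp(margin))
    return loss, chosen_reward, rejected_reward


if __name__ == "__main__":
    # Made-up log-probabilities, purely for illustration.
    loss, r_chosen, r_rejected = dpo_loss(-395.0, -410.0, -396.0, -400.0)
    print(f"loss={loss:.4f} chosen={r_chosen:.4f} rejected={r_rejected:.4f}")
```

The loss shrinks as the policy raises the chosen completion's likelihood relative to the rejected one, measured against the reference model. When the policy equals the reference, the margin is zero and the loss is log 2.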

Model Details

Model Description

- Developed by: Argilla (based on MistralAI previous efforts)

- Shared by: Argilla

- Model type: Pretrained generative Sparse Mixture of Experts

- Language(s) (NLP): English, Spanish, Italian, German, and French

- License: MIT

- Finetuned from model: mistralai/Mixtral-8x7B-Instruct-v0.1

Model Sources

- Repository: GitHub - argilla-io/notus: Notus is a collection of fine-tuned LLMs using SFT, DPO, SFT+DPO, and/or any other RLHF techniques, while always keeping a data-first approach

- Paper: N/A

Training Details

Training Hardware

We used a VM with 8 x H100 80GB hosted in runpod.io for 1 epoch (~10hr).

Training Data

We used a new iteration of the Argilla UltraFeedback preferences dataset named argilla/ultrafeedback-binarized-preferences-cleaned.

Training procedure

Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-07

- train_batch_size: 8

- eval_batch_size: 4

- seed: 42

- distributed_type: multi-GPU

- num_devices: 8

- total_train_batch_size: 64

- total_eval_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 1
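As a quick sanity check on the list above, the total batch sizes follow from per-device size times device count, and the linear scheduler with a 0.1 warmup ratio ramps up and then decays to zero. The sketch below is a rough reconstruction under assumptions: the helper names are made up, `grad_accum_steps=1` is inferred (8 x 8 already gives 64), and `total_steps=1000` is a placeholder because the card does not list the step count.

```python
import math


def effective_batch_size(per_device, num_devices, grad_accum_steps=1):
    """Global batch size; grad_accum_steps=1 is an assumption, not from the card."""
    return per_device * num_devices * grad_accum_steps


def linear_warmup_decay_lr(step, total_steps, peak_lr=5e-7, warmup_ratio=0.1):
    """Linear warmup to peak_lr, then linear decay to zero at total_steps."""
    warmup_steps = math.ceil(total_steps * warmup_ratio)
    if step < warmup_steps:
        return peak_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return peak_lr * remaining / max(1, total_steps - warmup_steps)


if __name__ == "__main__":
    print(effective_batch_size(8, 8))  # train: per-device 8 on 8 GPUs
    print(effective_batch_size(4, 8))  # eval: per-device 4 on 8 GPUs
    total_steps = 1000                 # hypothetical step count
    for step in (0, 50, 100, 550, 1000):
        print(step, linear_warmup_decay_lr(step, total_steps))
```

With these numbers the learning rate peaks at 5e-07 once 10% of the steps are done, which is why the first logged losses in a run like this tend to move slowly.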

Training results

| Training Loss | Epoch | Step | Validation Loss | Rewards/chosen | Rewards/rejected | Rewards/accuracies | Rewards/margins | Logps/rejected | Logps/chosen | Logits/rejected | Logits/chosen |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0.4384 | 0.22 | 200 | 0.4556 | -0.3275 | -1.9448 | 0.7937 | 1.6174 | -405.7994 | -397.8617 | -1.3157 | -1.4511 |
| 0.4064 | 0.43 | 400 | 0.4286 | -0.2163 | -2.2090 | 0.8254 | 1.9927 | -408.4409 | -396.7496 | -0.7660 | -0.6539 |
| 0.3952 | 0.65 | 600 | 0.4275 | -0.1311 | -2.1603 | 0.8016 | 2.0291 | -407.9537 | -395.8982 | -0.6783 | -0.7206 |
| 0.3909 | 0.87 | 800 | 0.4167 | -0.2273 | -2.3146 | 0.8135 | 2.0872 | -409.4968 | -396.7496 | -0.8458 | -0.7738 |
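One way to read the results: if Rewards/margins is the mean of chosen minus rejected rewards (the usual definition for these DPO metrics, assumed here), each row's margin should match the difference of the two reward columns up to rounding. A small check with the numbers copied from the table; the helper name `check_margins` and the tolerance are made up for illustration.

```python
# (step, rewards_chosen, rewards_rejected, reported_margin), copied from the table
ROWS = [
    (200, -0.3275, -1.9448, 1.6174),
    (400, -0.2163, -2.2090, 1.9927),
    (600, -0.1311, -2.1603, 2.0291),
    (800, -0.2273, -2.3146, 2.0872),
]


def check_margins(rows, tol=2e-4):
    """Recompute chosen - rejected and compare with the reported margin.

    tol allows for the 4-decimal rounding of the logged averages.
    """
    results = []
    for step, chosen, rejected, reported in rows:
        recomputed = chosen - rejected
        results.append((step, round(recomputed, 4), abs(recomputed - reported) <= tol))
    return results


if __name__ == "__main__":
    for step, recomputed, ok in check_margins(ROWS):
        print(step, recomputed, "ok" if ok else "mismatch")
```

The margin grows from step 200 to step 800 mostly because the rejected reward keeps falling while the chosen reward stays close to zero.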

Framework versions

- Transformers 4.36.0

- Pytorch 2.1.0+cu118

- Datasets 2.14.6

- Tokenizers 0.15.0

DEMO:

Notux Chat - a Hugging Face Space by argilla

How AI-created fakes are taking business from online influencers

Hyper-realistic ‘virtual influencers’ are being used to promote leading brands — drawing ire from human content creators

Aitana Lopez, an AI-generated influencer, has convinced many social media users she is real © FT montage/TheClueless/GettyImages

Cristina Criddle in London

YESTERDAY

Pink-haired Aitana Lopez is followed by more than 200,000 people on social media. She posts selfies from concerts and her bedroom, while tagging brands such as haircare line Olaplex and lingerie giant Victoria’s Secret.

Brands have paid about $1,000 a post for her to promote their products on social media — despite the fact that she is entirely fictional.

Aitana is a “virtual influencer” created using artificial intelligence tools, one of the hundreds of digital avatars that have broken into the growing $21bn content creator economy.

Their emergence has led human influencers to worry that their income is being cannibalised by digital rivals. People in more established professions share a similar concern that their livelihoods are under threat from generative AI, technology that can spew out humanlike text, images and code in seconds.

But those behind the hyper-realistic AI creations argue they are merely disrupting an overinflated market.

“We were taken aback by the skyrocketing rates influencers charge nowadays. That got us thinking, ‘What if we just create our own influencer?’” said Diana Núñez, co-founder of the Barcelona-based agency The Clueless, which created Aitana. “The rest is history. We unintentionally created a monster. A beautiful one, though.”

Over the past few years, there have been high-profile partnerships between luxury brands and virtual influencers, including Kim Kardashian’s make-up line KKW Beauty with Noonoouri, and Louis Vuitton with Ayayi.

Instagram analysis of an H&M advert featuring virtual influencer Kuki found that it reached 11 times more people and resulted in a 91 per cent decrease in cost per person remembering the advert, compared with a traditional ad.

“It is not influencing purchase like a human influencer would, but it is driving awareness, favourability and recall for the brand,” said Becky Owen, global chief marketing and innovation officer at Billion Dollar Boy, and former head of Meta’s creator innovations team.

Brands have been quick to engage with virtual influencers as a new way to attract attention while reducing costs.

“Influencers themselves have a lot of negative associations related to being fake or superficial, which makes people feel less concerned about the concept of that being replaced with AI or virtual influencers,” said Rebecca McGrath, associate director for media and technology at Mintel.

“For a brand, they have total control versus a real person who comes with potential controversy, their own demands, their own opinions,” McGrath added.

Human influencers contend that their virtual counterparts should have to disclose that they are not real, however. “What freaks me out about these influencers is how hard it is to tell they’re fake,” said Danae Mercer, a content creator with more than 2mn followers.

The UK’s Advertising Standards Authority said it was “keenly aware of the rise of virtual influencers within this space” but said there was no rule requiring them to declare that they are generated by AI.

Maia is another model created by The Clueless using artificial intelligence © The Clueless

Many other markets are contending with the problem, with India being one country that forces virtual influencers to reveal their AI origins.

Although The Clueless discloses Aitana is fake through the hashtag #aimodel in her profile on Instagram, many others do not do so or use vague terms such as #digitalinfluencer.

“Even though we made it clear she was an AI-generated model . . . initially, most of her followers didn’t question her authenticity, they genuinely believed in her existence,” said Núñez, who added that Aitana has received multiple requests to meet followers in person.

One of the first virtual influencers, Lil Miquela, charges up to hundreds of thousands of dollars for any given deal and has worked with Burberry, Prada and Givenchy.

Although AI is used to generate content for Lil Miquela, the team behind the creation “strongly believe [the storytelling behind virtual creators] cannot be fully replicated by generative AI”, said Ridhima Kahn, vice-president of business development at Dapper Labs, who oversees Lil Miquela’s partnerships.

“A lot of companies are coming out with virtual influencers they have generated in a day, and they are not really putting that human element [into the messaging] . . . and I don’t think that is going to be the long-term strategy,” she added.

Lil Miquela is considered by many to be mixed race, and her audience of nearly 3mn followers ranges from the US to Asia and Latin America. Meanwhile, The Clueless now has another creation in development, which it calls a “curvy Mexican” named Laila.

Francesca Sobande, a senior lecturer in digital media studies at Cardiff University, has researched virtual influencers with racially ambiguous features and suggests that the motivations behind giving some of these characteristics are “simply another form of marketing” in order to target a broader audience, when “something has been created with a focus on profit”.

“[This] can be very convenient for brands wanting to identify global marketing strategies and trying to project a hollow image that might be perceived as progressive,” said Sobande, who added that “seldom does it seem to be black people” creating the virtual avatars.

Dapper Labs emphasised that the team behind Lil Miquela is diverse and reflects her audience. The Clueless said its creations were designed to “foster inclusivity and provide opportunities to collectives that have faced exclusion for an extended period”.

The Clueless’s creations, among other virtual influencers, have also been criticised for being overly sexualised, with Aitana regularly appearing in underwear. The agency said sexualisation is “prevalent with real models and influencers” and that its creations “merely mirror these established practices without deviating from the current norms in the industry”.

Mercer, the human influencer, argued: “It feels like women in recent years have been able to take back some agency, through OnlyFans, through social media, they have been able to take control of their bodies and say ‘for so long men have made money off me, I am going to make money for myself’.”

But she said AI-generated creations, often made by men, were once again profiting from female sexuality. “That is the reason behind growing these accounts. It is to make money.”

A New Kind of AI Copy Can Fully Replicate Famous People. The Law Is Powerless.

New AI-generated digital replicas of real experts expose an unnerving policy gray zone. Washington wants to fix it, but it’s not clear how.

Martin Seligman, the influential American psychologist, has an AI chatbot built after himself — which is part of a broader wave of AI chatbots modeled on real humans. | Juergen Frank

By MOHAR CHATTERJEE

12/30/2023 07:00 AM EST

Martin Seligman, the influential American psychologist, found himself pondering his legacy at a dinner party in San Francisco one late February evening. The guest list was shorter than it used to be: Seligman is 81, and six of his colleagues had died in the early Covid years. His thinking had already left a profound mark on the field of positive psychology, but the closer he came to his own death, the more compelled he felt to help his work survive.

The next morning he received an unexpected email from an old graduate student, Yukun Zhao. His message was as simple as it was astonishing: Zhao’s team had created a “virtual Seligman.”

Zhao wasn’t just bragging. Over two months, by feeding every word Seligman had ever written into cutting-edge AI software, he and his team had built an eerily accurate version of Seligman himself — a talking chatbot whose answers drew deeply from Seligman’s ideas, whose prose sounded like a folksier version of Seligman’s own speech, and whose wisdom anyone could access.

Impressed, Seligman circulated the chatbot to his closest friends and family to check whether the AI actually dispensed advice as well as he did. “I gave it to my wife and she was blown away by it,” Seligman said.

The bot, cheerfully nicknamed “Ask Martin,” had been built by researchers based in Beijing and Wuhan — originally without Seligman’s permission, or even awareness.

The Chinese-built virtual Seligman is part of a broader wave of AI chatbots modeled on real humans, using the powerful new systems known as large language models to simulate their personalities online. Meta is experimenting with licensed AI celebrity avatars; you can already find internet chatbots trained on publicly available material about dead historical figures.

But Seligman’s situation is also different, and in a way more unsettling. It has cousins in a small handful of projects that have effectively replicated living people without their consent. In Southern California, tech entrepreneur Alex Furmansky created a chatbot version of Belgian celebrity psychotherapist Esther Perel by scraping her podcasts off the internet. He used the bot to counsel himself through a recent heartbreak, documenting his journey in a blog post that a friend eventually forwarded to Perel herself.

Perel addressed AI Perel’s existence at the 2023 SXSW conference. Like Seligman, she was more astonished than angry about the replication of her personality. She called it “artificial intimacy.”

Both Seligman and Perel eventually decided to accept the bots rather than challenge their existence. But if they’d wanted to shut down their digital replicas, it’s not clear they would have had a way to do it. Training AI on copyrighted works isn’t actually illegal. If the real Martin had wanted to block access to the fake one — a replica trained on his own thinking, using his own words, to produce all-new answers — it’s not clear he could have done anything about it.

SAG-AFTRA members hold a rally on July 25, 2023 in New York City. Artists fear the dystopian possibility of vocalists, screenwriters and fashion models competing against AI models that look and sound like them. | Roy Rochlin/Getty Images

AI-generated digital replicas illuminate a new kind of policy gray zone created by powerful new “generative AI” platforms, where existing laws and old norms begin to fail.

In Washington, spurred mainly by actors and performers alarmed by AI’s capacity to mimic their image and voice, some members of Congress are already attempting to curb the rise of unauthorized digital replicas. In the Senate Judiciary Committee, a bipartisan group of senators — including the leaders of the intellectual property subcommittee — are circulating a draft bill titled the NO FAKES Act that would force the makers of AI-generated digital replicas to license their use from the original human.

If passed, the bill would allow individuals to authorize, and even profit from, the use of their AI-generated likeness — and bring lawsuits against cases of unauthorized use.

“More and more, we’re seeing AI used to replicate someone’s likeness and voice in novel ways without consent or compensation,” Sen. Amy Klobuchar (D-Minn.) wrote to POLITICO, in response to the stories of AI experimentation involving Seligman and Perel. She is one of the co-sponsors of the bill. “Our laws need to keep up with this quickly evolving technology,” she said.

But even if the NO FAKES Act did pass Congress, it would be largely powerless against the global tide of AI technology.

Neither Perel nor Seligman resides in the same country as the developers of their respective AI chatbots. Perel is Belgian; her replica is based in the U.S. And AI Seligman is trained in China, where U.S. laws have little traction.

“It really is one of those instances where the tools seem woefully inadequate to address the issue, even though you may have very strong intuitions about it,” said Tim Wu, a legal scholar who architected the Biden administration’s antitrust and competition policies.

A chatbot version of Belgian celebrity psychotherapist Esther Perel was created by scraping her podcasts off the internet. | Carolyn Kaster/AP

Neither AI Seligman nor AI Perel was built, at least originally, with a profit motive in mind, their creators say.

Contacted by POLITICO, Zhao said he built the AI Seligman to help fellow Chinese citizens through an epidemic of anxiety and depression. China’s mental health policies have made it very difficult for citizens to get confidential help from a therapist. And the demand for mental health services in China has soared over the past three years due to the stresses imposed by China’s now-abandoned draconian “zero Covid” policy.

As vice director of Tsinghua University’s Positive Psychology Research Center in China, Zhao now researches the same branches of psychology his graduate adviser had pioneered. The AI Seligman his team had built — originally trained on 15 of Seligman’s books — is a way to bring the benefits of positive psychology to millions in China, Zhao told his old teacher.

Similarly, when Perel the human wasn’t available, a public version of the AI software driving ChatGPT and Perel’s podcasts allowed Furmansky to build the next best thing. He saw the same potential in AI that Zhao did: a means to access the knowledge locked away in the brains of a few really brilliant people.

Furmansky said he and Perel’s team were on cordial terms regarding AI Perel, and had spoken about pursuing something more collaborative. Contacted by POLITICO, Perel’s representative said Perel has not “endorsed, encouraged, enabled Furmansky or waived any of her rights to take legal action against Furmansky.” They declined to provide further details about her stance on the AI Perel.

Others don’t necessarily share Seligman’s sanguine views about AI’s capacity to replicate the vocal and intellectual traits of real people — particularly without their knowing consent. In June, a shaken mother recounted the harrowing details of a scammer who used AI to mimic her child’s voice at a Senate hearing. In October, anxious artist unions beseeched the Federal Trade Commission to regulate the creative industry’s data-handling practices, pointing to the dystopian possibility of vocalists, screenwriters and fashion models competing against AI models that look and sound like them.

Even Wu — a seasoned scholar of America’s complicated tech economy — held a straightforward view on the unsanctioned use of AI to impersonate humans. “My instinct is strong and immediate on it,” Wu said. “I think it’s unethical and I think it is something close to body snatching.”

Lawmakers are also worried about who, ultimately, will reap the tangible and intangible profits of American intellectual property in the age of AI. “Neither Big Tech nor China should have the right to benefit from the work of American creators,” said Sen. Marsha Blackburn (R-Tenn.), another co-sponsor of the NO FAKES Act, in an email response to Seligman’s story.

After numerous experiments with his AI counterpart, Martin Seligman thinks his chatbot will do more good than harm in the world. | Florida International University College of Business

Seligman’s American citizenship has not stopped him from being something of a hero in the world of Chinese psychology. His theories on well-being — which carry the gravitas of global scientific credibility — are embedded in Chinese education policies from kindergarten onwards. Grade-school children there know who he is. And Zhao believes Seligman’s popularity will help his mental health AI “coach” stand out in the Chinese market. “Marty has a big brand name,” Zhao said. “With his endorsement, a lot of people would come to use it, at least out of curiosity.”

But even for the Chinese citizens who Zhao hopes to help, there’s a risk — one that exposes another facet of the new landscape of AI.

To talk to the chatbot, people type or speak into their computers, sharing confidential information about their lives. In the U.S., that would raise the question of which tech companies are listening and possibly selling that data about you.

In China, where the government is deeply concerned with monitoring citizens’ thoughts, there’s a far more immediate risk. The ruling Chinese Communist Party’s pervasive electronic surveillance policies mean Chinese users of the virtual Seligman may unwittingly be sharing their thoughts with authorities — who could access or interpret it as criticism of the one-party state.

Beijing has long wielded false diagnoses of mental illness to target dissidents with arbitrary detention in state-run institutions. The virtual Seligman could provide Chinese authorities a potential treasure trove of information on its citizens’ innermost thoughts.

With AI-generated digital replicas poised to enter the mainstream market from Hollywood to Chinese app stores, U.S. policymakers are running out of time to determine the rules surrounding their use. Since that first phone call with Zhao, Seligman says, other AI companies have reached out to him about licensing his library of work to them. “I told them, no, I’m not willing to do it because I don’t know you. I don’t trust you,” he said. Zhao is the exception, Seligman told POLITICO, because of their long friendship.

Seligman is no stranger to powerful government forces co-opting his work — his research on learned helplessness was used, without his knowledge, to develop the CIA’s “enhanced interrogation” techniques post 9/11. But after numerous experiments with his AI counterpart, he thinks virtual Seligman will do more good than harm in the world.

“It is enchanting to me,” he said, sitting in his study in Philadelphia, “that what I’ve written about and discovered over 60 years in psychology could be useful to people long after I was dead.”

Seligman leaned forward in his chair, as if divulging a secret. “That kind of legacy seems to me as close to immortality as a scientist can come.”

Phelim Kine contributed to this report.

GitHub makes Copilot Chat generally available, letting devs ask questions about code | TechCrunch

Copilot Chat, a feature of GitHub's gen AI coding tool Copilot that lets devs ask natural language questions about code, is now generally available.