The A.I Megathread (LLM , GPT , Development)

RoboVQA: Multimodal Long-Horizon Reasoning for Robotics

Project page for RoboVQA: Multimodal Long-Horizon Reasoning for Robotics.

robovqa.github.io

Code: https://github.com/MasterBin-IIAU/UNINEXT

Paper: https://arxiv.org/abs/2303.06674

Simplified explanation, generated by AI (zephyr-beta):

Imagine you're playing a game where you have to find different kinds of objects hidden in images or videos. These objects could be animals, cars, people, or anything else with distinct features. The task might vary depending on what information is given to you. For example, sometimes you might see a category name like "cat" or "car"; other times you might be asked to locate a specific object from a reference such as a detection box or a segmentation mask. You might also need to track multiple objects over time in video footage. All these tasks require understanding what objects look like and how they appear under various conditions.

The researchers behind UNINEXT simplify and unify many of these object perception challenges by viewing them all as variations on two core steps: first discovering candidate objects in the image or video, and then retrieving the ones that match the given input. That input is expressed as a prompt, such as a category name, a descriptive phrase, or a reference box or mask, so UNINEXT can handle a wide range of tasks with the same architecture.

So instead of training separate models for each type of object recognition problem, UNINEXT uses a flexible framework that lets you switch between tasks by modifying the input prompts alone. This not only saves computational resources but also helps improve overall performance across all the related tasks since the underlying algorithms are shared among them.
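UNINEXT frames these tasks as discovering candidate objects and then retrieving the ones a prompt asks for. As a rough illustration of that retrieval idea, here is a toy Python sketch in which only the prompt changes between tasks. It is not UNINEXT's actual architecture: the object names and embedding vectors are made up, and the plain dot-product score is a hypothetical stand-in for whatever matching the real model learns.

```python
from typing import Dict, Sequence

Vector = Sequence[float]


def score(obj: Vector, prompt: Vector) -> float:
    """Dot product used here as a stand-in prompt-to-object matching score."""
    return sum(o * p for o, p in zip(obj, prompt))


def retrieve(objects: Dict[str, Vector], prompt: Vector) -> str:
    """Return the candidate object that best matches the prompt."""
    return max(objects, key=lambda name: score(objects[name], prompt))


# Step 1, discovery: candidate objects found in the image (embeddings are made up).
objects = {
    "cat_box": [0.9, 0.1, 0.0],
    "car_box": [0.1, 0.9, 0.1],
    "person_box": [0.0, 0.2, 0.95],
}

# Step 2, retrieval: switching tasks only means switching prompts.
category_prompt = [1.0, 0.0, 0.0]    # e.g. an encoded category name such as "cat"
expression_prompt = [0.0, 0.3, 1.0]  # e.g. an encoded phrase such as "the person on the right"
print(retrieve(objects, category_prompt))    # cat_box
print(retrieve(objects, expression_prompt))  # person_box
```

The same `retrieve` call serves both a category query and a referring-expression query; in this toy, nothing but the prompt vector differs between the two tasks.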

In summary, UNINEXT offers a more efficient and versatile approach to object perception by breaking down complex visual searches into simpler building blocks and applying them consistently across a broad spectrum of use cases. Its success is demonstrated by impressive results on twenty difficult benchmark datasets covering everything from basic category recognition to sophisticated tracking and segmentation scenarios. If you want to learn more about UNINEXT, check out the research paper and code repository linked in the text.

OpenAI chief seeks new Microsoft funds to build ‘superintelligence’

Sam Altman expects big tech group will back start-up’s mission to create software as intelligent as humans

www.ft.com

OpenAI’s chief Sam Altman said the partnership with Microsoft would ensure ‘that we both make money on each other’s success, and everybody is happy’ © FT montage/Bloomberg

Madhumita Murgia in San Francisco 11 HOURS AGO

OpenAI plans to secure further financial backing from its biggest investor Microsoft as the ChatGPT maker’s chief executive Sam Altman pushes ahead with his vision to create artificial general intelligence (AGI) — computer software as intelligent as humans.

In an interview with the Financial Times, Altman said his company’s partnership with Microsoft’s chief executive Satya Nadella was “working really well” and that he expected “to raise a lot more over time” from the tech giant among other investors, to keep up with the punishing costs of building more sophisticated AI models.

Microsoft earlier this year invested $10bn in OpenAI as part of a “multiyear” agreement that valued the San Francisco-based company at $29bn, according to people familiar with the talks.

Asked if Microsoft would keep investing further, Altman said: “I’d hope so.” He added: “There’s a long way to go, and a lot of compute to build out between here and AGI . . . training expenses are just huge.”

Altman said “revenue growth had been good this year”, without providing financial details, and that the company remained unprofitable due to training costs. But he said the Microsoft partnership would ensure “that we both make money on each other’s success, and everybody is happy”.

In the latest sign of how OpenAI intends to build a business model on top of ChatGPT, the company announced a suite of new tools, and upgrades to its existing model GPT-4 for developers and companies at an event on November 6 attended by Nadella.

The tools include custom versions of ChatGPT that can be adapted and tailored for specific applications, and a GPT Store, or a marketplace of the best apps. The eventual aim will be to split revenues with the most popular GPT creators, in a business model similar to Apple’s App Store.

“Right now, people [say] ‘you have this research lab, you have this API [software], you have the partnership with Microsoft, you have this ChatGPT thing, now there is a GPT store’. But those aren’t really our products,” Altman said. “Those are channels into our one single product, which is intelligence, magic intelligence in the sky. I think that’s what we’re about.”

To build out the enterprise business, Altman said he hired executives such as Brad Lightcap, who previously worked at Dropbox and start-up accelerator Y Combinator, as his chief operating officer.

Altman, meanwhile, splits his time between two areas: research into “how to build superintelligence” and ways to build up computing power to do so. “The vision is to make AGI, figure out how to make it safe . . . and figure out the benefits,” he said.

Pointing to the launch of GPTs, he said OpenAI was working to build more autonomous agents that can perform tasks and actions, such as executing code, making payments, sending emails or filing claims.

“We will make these agents more and more powerful . . . and the actions will get more and more complex from here,” he said. “The amount of business value that will come from being able to do that in every category, I think, is pretty good.”

The company is also working on GPT-5, the next generation of its AI model, Altman said, although he did not commit to a timeline for its release.

It will require more data to train on, which Altman said would come from a combination of publicly available data sets on the internet, as well as proprietary data from companies.

OpenAI recently put out a call for large-scale data sets from organisations that “are not already easily accessible online to the public today”, particularly for long-form writing or conversations in any format.

While GPT-5 is likely to be more sophisticated than its predecessors, Altman said it was technically hard to predict exactly what new capabilities and skills the model might have.

“Until we go train that model, it’s like a fun guessing game for us,” he said. “We’re trying to get better at it, because I think it’s important from a safety perspective to predict the capabilities. But I can’t tell you here’s exactly what it’s going to do that GPT-4 didn’t.”

To train its models, OpenAI, like most other large AI companies, uses Nvidia’s advanced H100 chips, which became Silicon Valley’s hottest commodity over the past year as rival tech companies raced to secure the crucial semiconductors needed to build AI systems.

Altman said there had been “a brutal crunch” all year due to supply shortages of Nvidia’s $40,000-a-piece chips. He said his company had received H100s, and was expecting more soon, adding that “next year looks already like it’s going to be better”.

However, as other players such as Google, Microsoft, AMD and Intel prepare to release rival AI chips, the dependence on Nvidia is unlikely to last much longer. “I think the magic of capitalism is doing its thing here. And a lot of people would like to be Nvidia now,” Altman said.

OpenAI has already taken an early lead in the race to build generative AI — systems that can create text, images, code and other multimedia in seconds — with the release of ChatGPT almost a year ago.

Despite its consumer success, OpenAI seeks to make progress towards building artificial general intelligence, Altman said. Large language models (LLMs), which underpin ChatGPT, are “one of the core pieces . . . for how to build AGI, but there’ll be a lot of other pieces on top of it”.

While OpenAI has focused primarily on LLMs, its competitors have been pursuing alternative research strategies to advance AI.

Altman said his team believed that language was a “great way to compress information” and therefore a path to developing intelligence, something he thought the likes of Google DeepMind had missed.

“[Other companies] have a lot of smart people. But they did not do it. They did not do it even after I thought we kind of had proved it with GPT-3,” he said.

Ultimately, Altman said “the biggest missing piece” in the race to develop AGI is what is required for such systems to make fundamental leaps of understanding.

“There was a long period of time where the right thing for [Isaac] Newton to do was to read more math textbooks, and talk to professors and practice problems . . . that’s what our current models do,” said Altman, using an example a colleague had previously used.

But he added that Newton was never going to invent calculus by simply reading about geometry or algebra. “And neither are our models,” Altman said.

“And so the question is, what is the missing idea to go generate net new . . . knowledge for humanity? I think that’s the biggest thing to go work on.”

Introducing Visual Copilot: A Better Figma-to-Code Workflow

OCTOBER 12, 2023

WRITTEN BY STEVE SEWELL

A staggering 79% of frontend developers say that turning a Figma design into a webpage is more than a day's work.

Today, we're thrilled to launch Visual Copilot, a completely reimagined version of the Builder Figma-to-code plugin that will save developers 50-80% of the time they spend turning Figma designs into clean code.

With Visual Copilot, you can convert Figma designs into React, Vue, Svelte, Angular, Qwik, Solid, or HTML code in real time, with one click in the Figma plugin. It uses your choice of styling library, including plain CSS, Tailwind, Emotion, and Styled Components, and you can use AI to iterate on the code for your preferred CSS library or JavaScript meta-framework (such as Next.js).

Try Visual Copilot

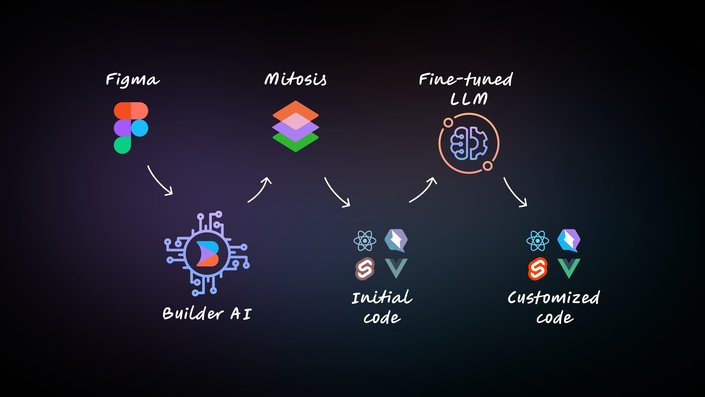

How Visual Copilot uses AI to output clean code

The major difference between Visual Copilot and previous design-to-code tools is that we trained a specialized AI model from scratch to solve this problem. This means there's no need for special alterations to your Figma design files or workflow to convert them into high-performance, responsive code that aligns with your style.

The heart of Visual Copilot lies in its AI models and a specialized compiler. The initial model, trained with over 2 million data points, transforms flat design structures into code hierarchies. Our open-source compiler, Mitosis, takes this structured hierarchy and compiles it into code. In the final pass, a finely tuned Large Language Model (LLM) refines the code to match your specific framework and styling preferences. This multi-stage process ensures that the generated code is high-quality and tailored to meet the requirements of your project.
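Visual Copilot's first stage is a trained model whose internals aren't described here. Purely to make "flat design structures into code hierarchies" concrete, the sketch below uses a hand-written geometric heuristic instead: each layer is nested under the smallest layer whose bounding box fully contains it, and `render` emits nested divs as a crude stand-in for a compile step (it is not Mitosis). The `Layer` class, layer names and coordinates are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Layer:
    name: str
    x: float
    y: float
    w: float
    h: float
    children: List["Layer"] = field(default_factory=list)

    def area(self) -> float:
        return self.w * self.h

    def contains(self, other: "Layer") -> bool:
        """True if other's bounding box lies entirely inside this one."""
        return (self.x <= other.x and self.y <= other.y
                and other.x + other.w <= self.x + self.w
                and other.y + other.h <= self.y + self.h)


def build_tree(layers: List[Layer]) -> List[Layer]:
    """Nest each flat layer under the smallest layer that fully contains it."""
    roots: List[Layer] = []
    # Largest first, so every possible parent is placed before its children.
    ordered = sorted(layers, key=lambda layer: layer.area(), reverse=True)
    for i, layer in enumerate(ordered):
        parents = [p for p in ordered[:i] if p.contains(layer)]
        if parents:
            min(parents, key=lambda p: p.area()).children.append(layer)
        else:
            roots.append(layer)
    return roots


def render(layer: Layer, depth: int = 0) -> str:
    """Emit the hierarchy as nested divs, a crude stand-in for the compile step."""
    pad = "  " * depth
    inner = "".join(render(child, depth + 1) for child in layer.children)
    return f'{pad}<div class="{layer.name}">\n{inner}{pad}</div>\n'


flat = [
    Layer("label", 20, 20, 60, 20),
    Layer("card", 0, 0, 300, 200),
    Layer("button", 10, 10, 100, 40),
]
for root in build_tree(flat):
    print(render(root), end="")
```

Here the flat list comes out as card > button > label. A real design file needs far more than containment (overlaps, auto-layout, repeated items), which is presumably why a learned model is used for this stage.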

Visual Copilot features

One-Click Conversion

With one click, Visual Copilot helps you convert a Figma design to high-quality components in your framework. This significantly speeds up the development process, making it much faster to get from design to a working webpage or mobile experience.

Automatic Responsiveness

Visual Copilot automatically adjusts components to fit all screen sizes, eliminating manual tweaks for mobile responsiveness. As you resize your screen, the design seamlessly adapts.

Extensive Framework and Library Support

Visual Copilot supports React, Vue, Svelte, Angular, Qwik, Solid, and HTML and seamlessly integrates with various styling libraries like plain CSS, Tailwind, Emotion, Styled Components, and Styled JSX. It supports many frameworks so you can feel confident that the code will be clean, easy to read, and integrate seamlessly into your codebase out-of-the-box today.

Customizable Code Structure

After code generation, you can restructure the code to your preferences and keep it consistent across the codebase, for example by using specific components or by turning hard-coded content into props.

Easy Integration with Your Codebase

Getting started is as easy as copying the code directly from Visual Copilot - no integration is required. To sync components without the back-and-forth copying and pasting, you can automatically sync the generated code to your codebase, making it a seamless part of your development workflow. It's about reducing the friction of integrating generated code and ensuring a smooth transition from design to a live, production-ready codebase.

Design to production in one click

The core essence of Visual Copilot lies not just in reducing the time spent translating designs into code, but in catapulting designs straight into live production with minimal friction. The true objective behind any design is to have users interact with it, and that's precisely what we've focused on. In a Builder.io integrated application, adding a single component to your code can now unlock the potential of deploying infinite designs live, all within the defined guardrails and workflows. This is not just about speed, but about an enriched, streamlined, and simplified workflow that eliminates numerous intermediary steps traditionally required to take a design live.

For instance, upon importing a design, with just a single click, you can publish it to your live production website. This process is devoid of any cumbersome steps such as naming or manual code adjustments. The live site gets updated in real time via our robust publishing API, without the need for any code deployment or commits. This is about as real-time as it gets, ensuring that the designs are not just quickly converted to code, but are immediately available for user interaction on the live platform.

Copy-Paste Designs to Builder

With a simple copy from Figma and paste into Builder, you can import either entire design sections or individual components. This feature is built for spontaneous design iterations, so your development workflow stays smooth and uninterrupted as your designs evolve.

Coming soon for Visual Copilot

AI uses your components

After gathering feedback from dozens of designers and developers in large organizations, we've found that even teams with well-maintained design systems, cross-platform design tokens, and component libraries in Storybook still need help developing experiences that match what the designers conceived. That's why we've launched a private beta for teams with components in Figma and their codebase. This feature in Visual Copilot uses AI to map reusable components in your Figma file to those in your code repository and generates code using your existing components when you have them. It's especially beneficial for teams with many components and strict design guidelines.

Automatic Figma to Builder sync

Another common piece of feedback we received during research and development for Visual Copilot was that designs often change in Figma. To streamline the design-to-development workflow even further, teams wanted a way to automatically update experiences in Builder when they change designs in Figma. Coming soon, you can connect a Figma artboard to an entry in Builder so you can “push” changes you make in Figma to your content in Builder.

Custom Components support and Automatic Figma to Builder Sync are currently in private beta. To get access to those two features, sign up here.

Try Visual Copilot today

Visual Copilot is free for a limited time while in beta, and you can try it today.

Generate images as fast as you can type:

Super Fast LCM-LoRA on SD1.5 - a Hugging Face Space by latent-consistency

huggingface.co

Latent Consistency Model Demos - a latent-consistency Collection

Latent Consistency Models for Stable Diffusion

huggingface.co

GitHub - 1rgs/tokenwiz: A clone of OpenAI's Tokenizer page for HuggingFace Models

Tokenwiz

Hugging Face Tokenizer Visualizer, based on the OpenAI Tokenizer page

tokenwiz.rahul.gs

TokenCounter: tokenize and estimate your LLM costs

TokenCounter provides an easy-to-use interface to tokenize your text and estimate your Large Language Model (LLM) costs. Understand how GPT models process your text into tokens and improve your usage efficiency.
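Once you have token counts, the cost estimate such tools produce is simple arithmetic: tokens divided by 1,000, times the per-1,000-token price, summed separately for input and output because they are usually priced differently. A minimal sketch; the token counts and prices below are placeholders, and the counts themselves would come from the model's tokenizer.

```python
def estimate_cost(input_tokens: int, output_tokens: int,
                  input_price_per_1k: float, output_price_per_1k: float) -> float:
    """Estimated cost of one request, given separate per-1,000-token input and output prices."""
    return (input_tokens / 1000) * input_price_per_1k + (output_tokens / 1000) * output_price_per_1k


# Placeholder prices in USD per 1,000 tokens; look up your provider's current rates.
cost = estimate_cost(input_tokens=1500, output_tokens=500,
                     input_price_per_1k=0.01, output_price_per_1k=0.03)
print(f"${cost:.4f}")  # $0.0300
```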

LLM verified with Monte Carlo Tree Search

This prototype synthesizes verified code with an LLM. Using Monte Carlo Tree Search (MCTS), it explores the space of possible generations of a verified program, and at every step it calls the verifier to check that it is still on the right track.

This prototype uses Dafny or Coq.
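The verifier-guided search described above can be sketched in Python on a toy problem. This is not the prototype's code: the "language" is balanced parentheses, `prefix_ok` and `verified` are hypothetical stand-ins for calls to Dafny or Coq, and uniform random sampling stands in for the LLM. The structure is standard MCTS with UCT selection, where the partial check prunes expansions and the full check scores rollouts.

```python
import math
import random
from typing import Dict, List, Optional

TOKENS = ["(", ")"]  # toy vocabulary; a real run would use the LLM's tokens
LENGTH = 6           # hypothetical fixed program length


def prefix_ok(prog: str) -> bool:
    """Partial check: reject a prefix as soon as it closes a paren that was never opened."""
    depth = 0
    for ch in prog:
        depth += 1 if ch == "(" else -1
        if depth < 0:
            return False
    return True


def verified(prog: str) -> bool:
    """Full check: a complete program must have the target length and be balanced."""
    return len(prog) == LENGTH and prefix_ok(prog) and prog.count("(") == prog.count(")")


class Node:
    def __init__(self, prog: str, parent: Optional["Node"] = None) -> None:
        self.prog = prog
        self.parent = parent
        self.children: Dict[str, "Node"] = {}
        self.visits = 0
        self.value = 0.0

    def untried(self) -> List[str]:
        """Tokens not yet expanded whose extended prefix still passes the partial check."""
        if len(self.prog) >= LENGTH:
            return []
        return [t for t in TOKENS if t not in self.children and prefix_ok(self.prog + t)]

    def best_child(self, c: float = 1.4) -> "Node":
        """UCT: trade off average reward against how rarely a child has been tried."""
        return max(
            self.children.values(),
            key=lambda n: n.value / n.visits + c * math.sqrt(math.log(self.visits) / n.visits),
        )


def rollout(prog: str, rng: random.Random) -> float:
    """Stand-in for sampling a completion from the LLM: uniform random tokens."""
    while len(prog) < LENGTH:
        prog += rng.choice(TOKENS)
    return 1.0 if verified(prog) else 0.0


def mcts(iterations: int = 1000, seed: int = 0) -> Optional[str]:
    rng = random.Random(seed)
    root = Node("")
    for _ in range(iterations):
        node = root
        # Selection: follow UCT while the node is fully expanded.
        while not node.untried() and node.children:
            node = node.best_child()
        # Expansion: add one child that passes the partial check.
        options = node.untried()
        if options:
            tok = rng.choice(options)
            node.children[tok] = Node(node.prog + tok, node)
            node = node.children[tok]
        # Stop as soon as the tree contains a fully verified program.
        if verified(node.prog):
            return node.prog
        # Simulation and backpropagation.
        reward = rollout(node.prog, rng)
        while node is not None:
            node.visits += 1
            node.value += reward
            node = node.parent
    return None


print(mcts())
```

A real system would bias expansion and rollouts by the LLM's token probabilities rather than sampling uniformly; the verifier's pruning is what lets even a weak proposer reach a correct program.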

Logs for example runs can be found in the log directory. Scroll to the very end of a log to see a chosen solution. Note that the linked solution is optimal for the problem.

By using this technique, weaker models that might not even know the generated language all that well can compete with stronger models.

We can also use PPO training to reinforce successful snippets positively and failing snippets negatively. After PPO, the model can solve the prompts without backtracking! See, for example, the log for solving the problem fact after PPO training on a different problem, opt0.

Planning with Large Language Models for Code Generation

This is the repository for Planning with Large Language Models for Code Generation (accepted at ICLR 2023). This codebase is modified from Dan Hendrycks's APPS repository, and the tree search algorithm is modified from the Dyna Gym repository. Note: the goal of this repository is to use LLMs for code generation and reproduce the results in our paper. If you are interested in applying Monte Carlo tree search to LLMs in general, you may also check out our mcts-for-llm repository.

Planning with Large Language Models for Code Generation

ICLR 2023

Shun Zhang, Zhenfang Chen, Yikang Shen, Mingyu Ding, Joshua B. Tenenbaum, and Chuang Gan

Existing large language model-based code generation pipelines typically use beam search or sampling algorithms during the decoding process. Although the programs they generate achieve high token-matching-based scores, they often fail to compile or generate incorrect outputs. The main reason is that conventional Transformer decoding algorithms may not be the best choice for code generation. In this work, we propose a novel Transformer decoding algorithm, Planning-Guided Transformer Decoding (PG-TD), that uses a planning algorithm to do lookahead search and guide the Transformer to generate better programs. Specifically, instead of simply optimizing the likelihood of the generated sequences, the Transformer makes use of a planner that generates complete programs and tests them using public test cases. The Transformer can therefore make more informed decisions and output tokens that will eventually lead to higher-quality programs. We also design a mechanism that shares information between the Transformer and the planner to make the overall framework computationally efficient. We empirically evaluate our framework with several large language models as backbones on public coding challenge benchmarks, showing that 1) it can generate programs that consistently achieve higher performance compared with competing baseline methods; and 2) it enables controllable code generation, such as concise code and highly commented code, by optimizing modified objectives.
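PG-TD's planner scores complete programs by running them on public test cases. A minimal sketch of that scoring step, assuming the reward is simply the fraction of tests passed, is below. It only reranks finished candidates, whereas PG-TD uses such a score inside a tree search over tokens. The `solve` entry point, the test cases and the candidate programs are hypothetical.

```python
from typing import Any, Callable, List, Tuple

# (arguments, expected output) pairs standing in for a problem's public test cases
TestCase = Tuple[tuple, Any]


def pass_rate(source: str, tests: List[TestCase], entry: str = "solve") -> float:
    """Fraction of public tests passed by a complete program; 0.0 if it doesn't even load."""
    if not tests:
        return 0.0
    namespace: dict = {}
    try:
        exec(source, namespace)  # fine for a toy; real systems run candidates in a sandbox
        fn: Callable = namespace[entry]
    except Exception:  # syntax errors, missing entry point, crashes at load time
        return 0.0
    passed = 0
    for args, expected in tests:
        try:
            if fn(*args) == expected:
                passed += 1
        except Exception:
            pass  # a runtime error counts as a failed test
    return passed / len(tests)


def best_program(candidates: List[str], tests: List[TestCase]) -> str:
    """Choose the complete program with the highest public-test pass rate."""
    return max(candidates, key=lambda src: pass_rate(src, tests))


tests = [((3,), 3), ((-2,), 2), ((0,), 0)]  # hypothetical problem: absolute value
candidates = [
    "def solve(x):\n    return x\n",
    "def solve(x):\n    return -x if x < 0 else x\n",
    "def solve(x) return x\n",  # does not compile
]
for src in candidates:
    print(round(pass_rate(src, tests), 2))
print(best_program(candidates, tests))
```

Using a graded pass rate rather than all-or-nothing success gives the search a signal even when no candidate is fully correct yet.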

teknium/dataforge-economics · Datasets at Hugging Face

huggingface.co

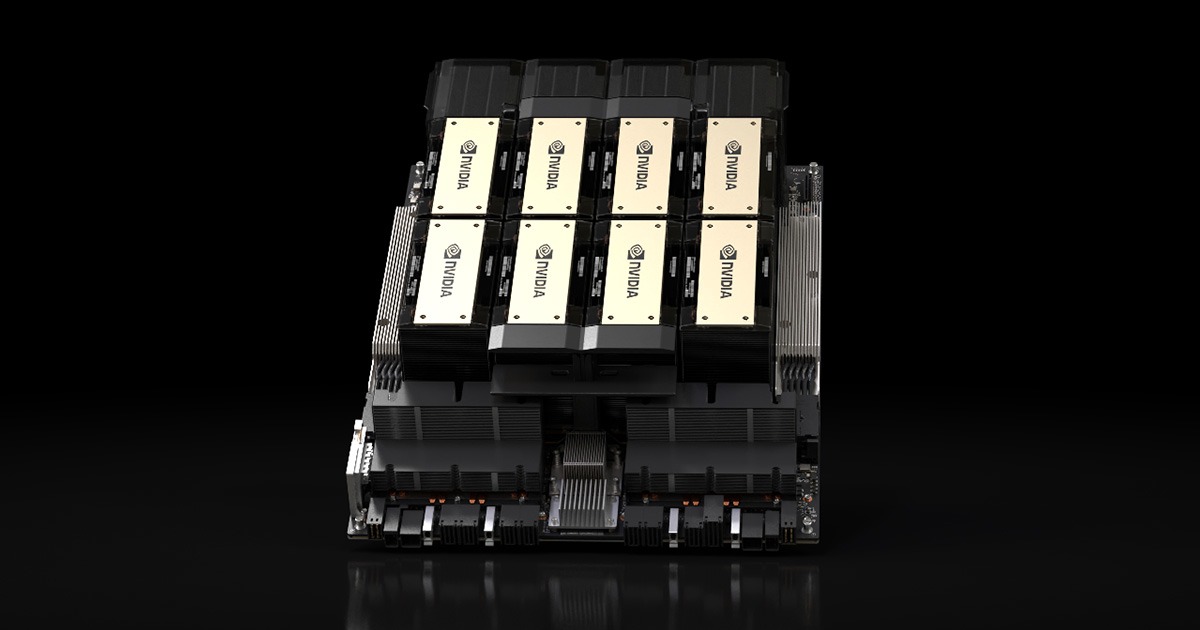

NVIDIA H200 Tensor Core GPU

The world’s most powerful GPU for supercharging AI and HPC workloads.

The World’s Most Powerful GPU

The NVIDIA H200 Tensor Core GPU supercharges generative AI and high-performance computing (HPC) workloads with game-changing performance and memory capabilities. As the first GPU with HBM3e, the H200’s larger and faster memory fuels the acceleration of generative AI and large language models (LLMs) while advancing scientific computing for HPC workloads.

NVIDIA Supercharges Hopper, the World’s Leading AI Computing Platform

Based on the NVIDIA Hopper™ architecture, the NVIDIA HGX H200 features the NVIDIA H200 Tensor Core GPU with advanced memory to handle massive amounts of data for generative AI and high-performance computing workloads.

Highlights

Experience Next-Level Performance

- Llama2 70B inference: 1.9X faster
- GPT-3 175B inference: 1.6X faster
- High-performance computing: 110X faster

TensorRT-LLM/docs/source/blogs/H200launch.md at release/0.5.0 · NVIDIA/TensorRT-LLM

TensorRT LLM provides users with an easy-to-use Python API to define Large Language Models (LLMs) and supports state-of-the-art optimizations to perform inference efficiently on NVIDIA GPUs. Tensor...

H200 achieves nearly 12,000 tokens/sec on Llama2-13B with TensorRT-LLM

TensorRT-LLM evaluation of the new H200 GPU achieves 11,819 tokens/s on Llama2-13B on a single GPU. H200 is up to 1.9x faster than H100. This performance is enabled by H200's larger, faster HBM3e memory.

H200 FP8 Max throughput

| Model | Batch Size (1) | TP (2) | Input Length | Output Length | Throughput (out tok/s) |
|---|---|---|---|---|---|
| llama_13b | 1024 | 1 | 128 | 128 | 11,819 |
| llama_13b | 128 | 1 | 128 | 2048 | 4,750 |
| llama_13b | 64 | 1 | 2048 | 128 | 1,349 |
| llama_70b | 512 | 1 | 128 | 128 | 3,014 |
| llama_70b | 512 | 4 | 128 | 2048 | 6,616 |
| llama_70b | 64 | 2 | 2048 | 128 | 682 |
| llama_70b | 32 | 1 | 2048 | 128 | 303 |

(1) Largest batch supported on given TP configuration by power of 2. (2) TP = Tensor Parallelism

Additional performance data is available on the NVIDIA Data Center Deep Learning Product Performance page, and will soon be available in TensorRT-LLM's Performance Documentation.

H200 vs H100

H200's larger and faster HBM3e memory enables up to 1.9x performance on LLMs compared to H100. Max throughput improves because it depends on memory capacity and bandwidth, both of which benefit from the new HBM3e. First-token latency is compute bound for most input sequence lengths (ISLs), meaning H200 retains a similar time to first token as H100.

For practical examples of H200's performance:

Max Throughput TP1: an offline summarization scenario (ISL/OSL=2048/128) with Llama-70B on a single H200 is 1.9x more performant than H100.

Max Throughput TP8: an online chat agent scenario (ISL/OSL=80/200) with GPT3-175B on a full HGX (TP8) H200 is 1.6x more performant than H100.

Preliminary measured performance, subject to change. TensorRT-LLM v0.5.0, TensorRT v9.1.0.4. | Llama-70B: H100 FP8 BS 8, H200 FP8 BS 32 | GPT3-175B: H100 FP8 BS 64, H200 FP8 BS 128

Max Throughput across TP/BS: Max throughput (3) on H200 vs H100 varies by model, sequence lengths, BS, and TP. Results are shown for the maximum throughput per GPU across all these variables.

Preliminary measured performance, subject to change. TensorRT-LLM v0.5.0, TensorRT v9.1.0.4 | H200, H100 FP8.

(3) Max Throughput per GPU is defined as the highest tok/s per GPU, swept across TP configurations & BS powers of 2.

Latest HBM Memory

H200 is the newest addition to NVIDIA’s data center GPU portfolio. To maximize compute performance, H200 is the first GPU with HBM3e memory, providing 4.8TB/s of memory bandwidth, a 1.4X increase over H100. H200 also expands GPU memory capacity nearly 2X, to 141 gigabytes (GB). The combination of faster and larger HBM memory accelerates LLM inference, raising throughput in tokens per second. These results are measured but preliminary; more updates are expected as optimizations for H200 continue in TensorRT-LLM.
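A back-of-the-envelope sketch of why both bandwidth and capacity matter for throughput. It assumes decoding is memory-bandwidth bound (each step reads all weights from HBM once), ignores KV-cache traffic, compute limits and overheads, and treats 1 GB as 1e9 bytes. The Llama 2 13B shape (40 layers, 40 heads, head dimension 128) is the published model config; FP8 weights and an FP16 KV cache are assumptions chosen for illustration. These are rough upper bounds, not NVIDIA's methodology.

```python
def decode_tokens_per_sec_bound(params: float, bytes_per_param: float,
                                bandwidth: float, batch_size: int = 1) -> float:
    """Upper bound on output tokens/s if every decode step streams all weights from HBM once.

    Ignores KV-cache reads, compute limits and kernel overheads, so real throughput is lower.
    """
    seconds_per_step = params * bytes_per_param / bandwidth
    return batch_size / seconds_per_step  # one new token per sequence per step


def kv_cache_bytes_per_token(layers: int, kv_heads: int, head_dim: int,
                             bytes_per_value: float) -> float:
    """Bytes of keys plus values (hence the factor 2) cached per token across all layers."""
    return 2 * layers * kv_heads * head_dim * bytes_per_value


HBM_BYTES = 141e9       # H200 capacity, taking 1 GB = 1e9 bytes
HBM_BANDWIDTH = 4.8e12  # H200 bandwidth in bytes/s

# Llama 2 13B: ~13e9 parameters, FP8 weights (1 byte each)
print(decode_tokens_per_sec_bound(13e9, 1, HBM_BANDWIDTH))  # ~369 tokens/s at batch size 1

# Llama 2 13B shape: 40 layers, 40 KV heads, head dimension 128; FP16 (2-byte) KV cache
per_token = kv_cache_bytes_per_token(40, 40, 128, 2)  # 819,200 bytes per token
free = HBM_BYTES - 13e9 * 1                            # memory left after FP8 weights
print(int(free // per_token))                          # tokens of KV cache that fit: 156250
```

In this memory-bound model, one weight read per step is shared by every sequence in the batch, so the bound grows roughly linearly with `batch_size`; how large a batch fits is limited by the KV-cache headroom estimated in the second calculation. That is the sense in which max throughput depends on both bandwidth and capacity.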

NousResearch/Nous-Capybara-34B · Hugging Face

huggingface.co

Nous-Capybara-34B V1.9

This model is trained on top of Yi-34B, which has a 200K context length, for 3 epochs on the Capybara dataset! It is the first 34B Nous model and the first 200K-context-length Nous model!

The Capybara series is the first Nous collection of models made by fine-tuning mostly on data created by Nous in-house.

We leverage our novel data synthesis technique called Amplify-Instruct (paper coming soon). Its seed distribution and synthesis method combine top-performing existing data synthesis techniques and distributions used for SOTA models such as Airoboros, Evol-Instruct (WizardLM), Orca, Vicuna, Know_Logic, Lamini, FLASK and others into one lean, holistic methodology for the dataset and model. The seed instructions used to start the synthesized conversations are largely based on highly regarded datasets like Airoboros, Know logic, EverythingLM and GPTeacher, plus entirely new seed instructions derived from posts on the website LessWrong, supplemented with certain in-house multi-turn datasets like Dove (a successor to Puffin).

While the model performs great in its current state, the dataset used for fine-tuning contains only 20K training examples, which is 10 times smaller than many similarly performing current models. This is significant for the scaling implications of our next generation of models, once we scale our novel synthesis methods to significantly more examples.

Process of creation and special thank yous!

This model was fine-tuned by Nous Research as part of the Capybara/Amplify-Instruct project led by Luigi D. (LDJ) (paper coming soon), with significant dataset formation contributions by J-Supha and general compute and experimentation management by Jeffrey Q. during ablations. Special thank you to A16Z for sponsoring our training, as well as Yield Protocol for their support in financially sponsoring resources during the R&D of this project.

Thank you to those of you that have indirectly contributed!

While most of the tokens within Capybara are newly synthesized and part of datasets like Puffin/Dove, we would like to credit the single-turn datasets we leveraged as seeds to generate the multi-turn data as part of the Amplify-Instruct synthesis. The datasets shown in green below are the datasets we sampled from to curate seeds used during Amplify-Instruct synthesis for this project.

Datasets in blue are in-house curations that existed prior to Capybara.

Prompt Format

The recommended prompt format is:

Prefix: USER:

Suffix: ASSISTANT:

Stop token: </s>
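A minimal sketch of assembling a prompt with this prefix and suffix and trimming the model's output at the stop token. The separators are assumptions: the card gives only the prefix, suffix and stop token, so check your inference library's chat template before relying on exact whitespace. `build_prompt` and `cut_at_stop` are hypothetical helper names.

```python
from typing import List, Tuple

STOP = "</s>"


def build_prompt(history: List[Tuple[str, str]], user_message: str) -> str:
    """Format a conversation with the USER:/ASSISTANT: prefix and suffix.

    Assumption: each role tag is followed by one space and turns are separated by newlines.
    """
    lines = []
    for user_turn, assistant_turn in history:
        lines.append(f"USER: {user_turn}")
        lines.append(f"ASSISTANT: {assistant_turn}")
    lines.append(f"USER: {user_message}")
    lines.append("ASSISTANT:")  # the model continues from here
    return "\n".join(lines)


def cut_at_stop(generated: str) -> str:
    """Keep only the text before the first stop token."""
    return generated.split(STOP, 1)[0].strip()


print(build_prompt([("Hi", "Hello! How can I help?")], "Summarize HBM3e in one line."))
print(cut_at_stop(" HBM3e is faster, denser GPU memory.</s>USER: thanks"))
```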

Multi-Modality!

- We currently have a multi-modal model based on Capybara V1.9: NousResearch/Obsidian-3B-V0.5 on Hugging Face! It is currently only available as a 3B-sized model, but larger versions are coming!

Notable Features:

- Uses Yi-34B model as the base which is trained for 200K context length!

- Over 60% of the dataset is comprised of multi-turn conversations. (Most models are still only trained on single-turn conversations with no back and forth!)

- Over 1,000 tokens average per conversation example! (Most models are trained on conversation data that is less than 300 tokens per example.)

- Able to effectively do complex summaries of advanced topics and studies. (trained on hundreds of advanced difficult summary tasks developed in-house)

- Ability to recall information up to late 2022 without internet access.

- Includes a portion of conversational data synthesized from LessWrong posts, discussing very in-depth details and philosophies about the nature of reality, reasoning, rationality, self-improvement and related concepts.

TheBloke/Nous-Capybara-34B-GGUF · Hugging Face

huggingface.co