OCTOBER 6, 2023

Two experiments make a case for using deepfakes in training videos

by University of Bath

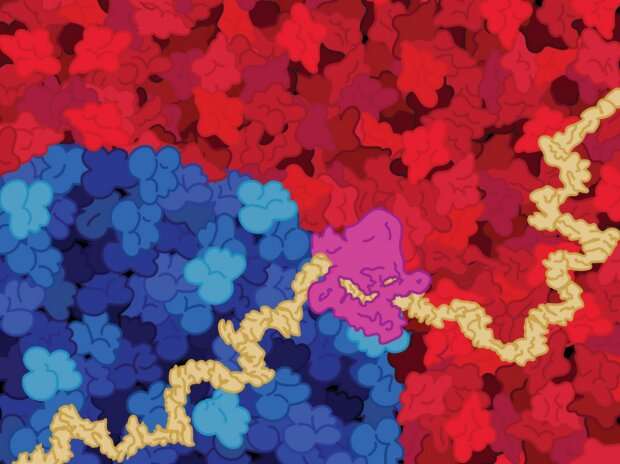

FakeForward wall squats: a study participant practises her wall squats. From left to right: the user, someone with better skills, and FakeForward. Credit: Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems (2023). DOI: 10.1145/3544548.3581100

Watching a training video featuring a deepfake version of yourself, as opposed to a clip featuring somebody else, makes learning faster, easier and more fun, according to new research led by the REVEAL research center at the University of Bath.

The finding held across two separate experiments, one exploring fitness training and the other involving public speaking. The work is published in the Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems.

A deepfake is a video where a person's face or body has been digitally altered so they appear to be someone else. This technology receives highly negative press due to its potential to spread political misinformation and to maliciously develop pornographic content that superimposes the face of one person on the body of another. It also poses an existential threat to professionals in the creative industries.

Dr. Christof Lutteroth and Dr. Christopher Clarke, both from the Department of Computer Science at Bath and co-authors of the new study, say their findings present two positive use cases for deepfakes, and hope the work will catalyze more research into ways deepfakes can be applied to do good in the world.

"Deepfakes are used a lot for nefarious purposes, but our research suggests that FakeForward (the name used by the researchers to describe the use of deepfake to teach a new skill) is an unexplored way of applying the technology so it adds value to people's lives by supporting and improving their performances," said Dr. Clarke.

Dr. Lutteroth added, "From this study, it's clear that deepfake has the potential to be really exciting for people. By following a tutorial where they act as their own tutor, people can immediately get better at a task—it's like magic."

More reps, greater enjoyment

For the fitness experiment, study participants were asked to watch a training video featuring a deepfake of their own face pasted over the body of a more advanced exerciser.

The researchers chose six exercises (planks, squats, wall squats, sit-ups, squat jumps and press-ups), each targeting a different muscle group and requiring different types of movement.

For each exercise, study participants first watched a video tutorial in which a model demonstrated the exercise, and then had a go at repeating the exercise themselves. The model was chosen both to resemble the participant and to outperform them, though the model's skill level was attainable for the participant.

The same process of watching the video and mimicking the exercise was also carried out with a deepfake instructor, in which the participant's own face was superimposed on the model's body.

For both conditions, the researchers measured the number of repetitions, or the time participants were able to hold an exercise.

For all exercises, regardless of the order in which the videos were watched, participants performed better after watching the video of "themselves" than after watching a video showing someone else.

"Deepfake was a really powerful tool," said Dr. Lutteroth. "Immediately people could do more press-ups or whatever it was they were being asked to do. Most also marked themselves as doing the exercise better than they did with the non-deepfake tutorial, and enjoyed it more."

Public speaking

The second FakeForward study by the same team found that deepfake can significantly boost a person's skills as a public speaker.

When the face of a proficient public speaker was replaced with a user's, learning was significantly amplified, with both confidence and perceived competence in public speaking growing after watching the FakeForward video.

Many participants felt moved and inspired by the FakeForward videos, saying things such as, "it gives me a lot of strength," "the deepfake video makes me feel that speaking is actually not that scary" and "when I saw myself standing there and speaking, I kinda felt proud of myself."

Ethical concerns

In principle it's already possible for individuals to create FakeForward videos through open-source applications such as Roop and DeepFaceLab, though in practice a degree of technical expertise is required.

To guard against potential misuse, the FakeForward research team has developed an ethical protocol to guide the development of 'selfie' deepfake videos.

"For this technology to be applied ethically, people should only create self-models of themselves, because the underpinning concept is that these are useful for private consumption," said Dr. Clarke.

Dr. Lutteroth added, "Just as deepfake can be used to improve 'good' activities, it can also be misused to amplify 'bad' activities; for instance, it can teach people to be more racist, more sexist and ruder. For example, watching a video of what appears to be you saying terrible things can influence you more than watching someone else saying these things."

He added, "Clearly, we need to ensure users don't learn negative or harmful behaviors from FakeForward. The obstacles are considerable but not insurmountable."

More information: Christopher Clarke et al, FakeForward: Using Deepfake Technology for Feedforward Learning, Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems (2023). DOI: 10.1145/3544548.3581100

Provided by University of Bath