Will Tencent’s “open source” HunyuanVideo launch an at-home “Stable Diffusion” moment for uncensored AI video?

arstechnica.com

A new, uncensored AI video model may spark a new AI hobbyist movement

Benj Edwards – Dec 19, 2024 10:50 AM

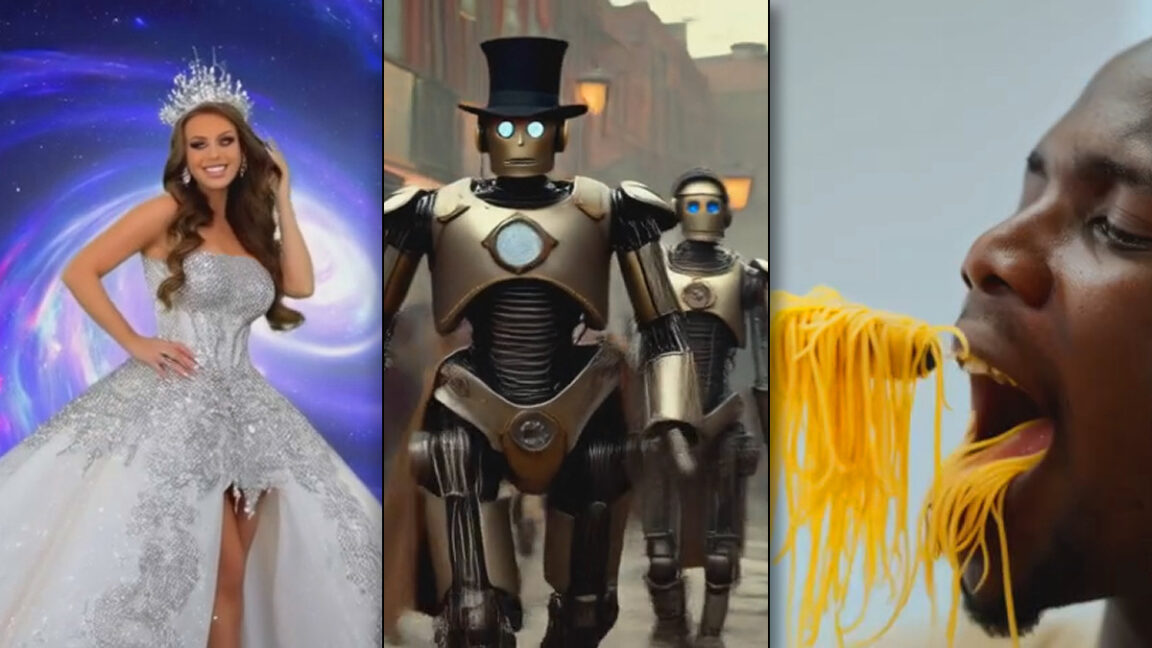

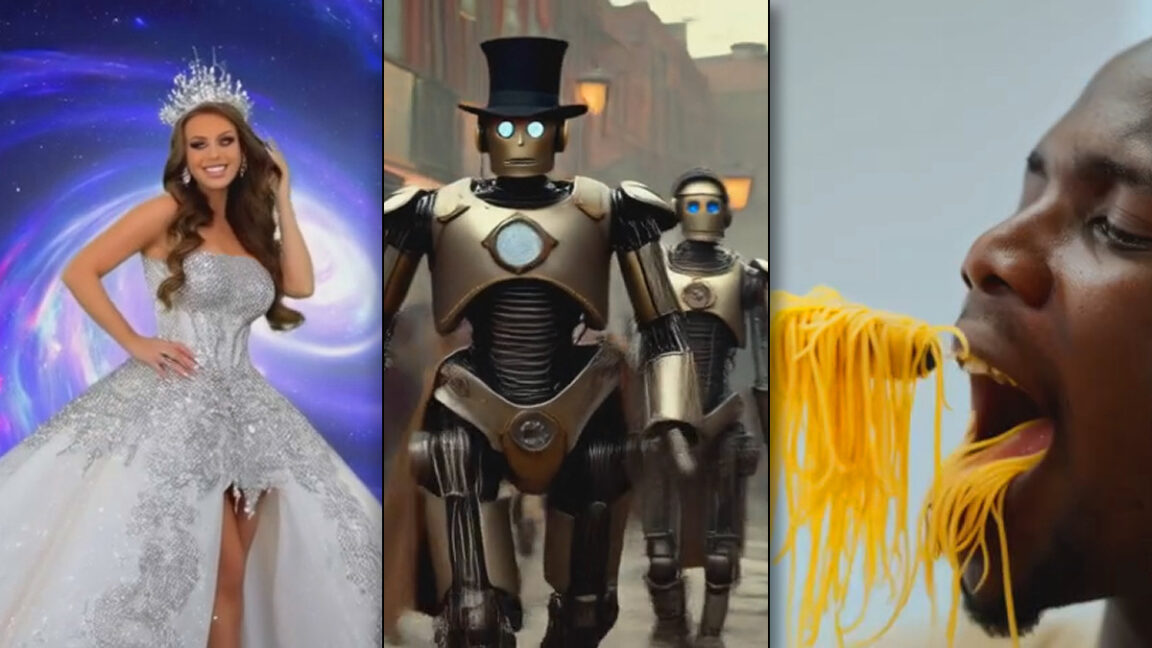

Still images from three videos generated with Tencent's HunyuanVideo. Credit: Tencent

The AI-generated video scene has been hopping this year (or twirling wildly, as the case may be). This past week alone we've seen releases or announcements of OpenAI's Sora, Pika AI's Pika 2, Google's Veo 2, and Minimax's video-01-live. It's frankly hard to keep up, and even tougher to test them all. But recently, we put a new open-weights AI video synthesis model, Tencent's HunyuanVideo, to the test, and it's surprisingly capable for being a "free" model.

Unlike those of the aforementioned models, HunyuanVideo's neural network weights are openly distributed, which means the model can be run locally under the right circumstances (people have already demonstrated it on a consumer GPU with 24 GB of VRAM), and it can be fine-tuned or used with LoRAs to teach it new concepts.

Notably, a few Chinese companies have been at the forefront of AI video for most of this year, and some experts speculate that the reason is less reticence to train on copyrighted materials, use images and names of famous celebrities, and incorporate some uncensored video sources. As we saw with Stable Diffusion 3's mangled release, including nudity or pornography in training data may allow these models to achieve better results by providing more information about human bodies. HunyuanVideo notably allows uncensored outputs, so unlike the commercial video models out there, it can generate videos of anatomically realistic, nude humans.

Putting HunyuanVideo to the test

To evaluate HunyuanVideo, we provided it with an array of prompts that we used on Runway's Gen-3 Alpha and Minimax's video-01 earlier this year. That way, it's easy to revisit those earlier articles and compare the results.

We generated each of the five-second-long 864 × 480 videos seen below using a commercial cloud AI provider. Each video generation took about seven to nine minutes to complete. Since the generations weren't free (each cost about $0.70 to make), we went with the first result for each prompt, so there's no cherry-picking below. Everything you see was the first generation for the prompt listed above it.

"A highly intelligent person reading 'Ars Technica' on their computer when the screen explodes"

"commercial for a new flaming cheeseburger from McDonald's"

"A cat in a car drinking a can of beer, beer commercial"

"Will Smith eating spaghetti"

"Robotic humanoid animals with vaudeville costumes roam the streets collecting protection money in tokens"

"A basketball player in a haunted passenger train car with a basketball court, and he is playing against a team of ghosts"

"A beautiful queen of the universe in a radiant dress smiling as a star field swirls around her"

"A herd of one million cats running on a hillside, aerial view"

"Video game footage of a dynamic 1990s third-person 3D platform game starring an anthropomorphic shark boy"

"A muscular barbarian breaking a CRT television set with a weapon, cinematic, 8K, studio lighting"

"A scared woman in a Victorian outfit running through a forest, dolly shot"

"Low angle static shot: A teddy bear sitting on a picnic blanket in a park, eating a slice of pizza. The teddy bear is brown and fluffy, with a red bowtie, and the pizza slice is gooey with cheese and pepperoni. The sun is setting, casting a golden glow over the scene"

"Aerial shot of a small American town getting deluged with liquid cheese after a massive cheese rainstorm where liquid cheese rained down and dripped all over the buildings"

Also, we added a new one: "A young woman doing a complex floor gymnastics routine at the Olympics, featuring running and flips."

Weighing the results

Overall, the results shown above seem fairly comparable to Gen-3 Alpha and Minimax video-01, and that's notable because HunyuanVideo can be downloaded for free, fine-tuned, and run locally in an uncensored way (given the appropriate hardware).

There are some flaws, of course. The vaudeville robots are not animals, the cat is drinking from a weird transparent beer can, and the man eating spaghetti is obviously not Will Smith. There appears to be some celebrity censorship in the metadata/labeling of the training data, which differs from Kling and Minimax's AI video offerings. And yes, the gymnast has some anatomical issues.

Right now, HunyuanVideo's results are fairly rough, especially compared to the state-of-the-art video synthesis model to beat at the moment, the newly unveiled Google Veo 2. We ran a few of these prompts through Sora as well (more on that in a future article), and Sora created more coherent results than HunyuanVideo but didn't deliver on the prompts with much fidelity. We are still in the early days of AI, but quality is rapidly improving while models are getting smaller and more efficient.

Even with these limitations, judging from the history of Stable Diffusion and its offshoots, HunyuanVideo may still have a significant impact: It could be fine-tuned at higher resolutions over time to eventually create higher-quality results for free that may be used in video productions, or it could lead to people making bespoke video pornography, which is already beginning to appear in trickles on Reddit.

As we've mentioned in previous AI video overviews, text-to-video models work by combining concepts from their training data (the existing video clips used to create the model). Every AI model on the market has some degree of trouble with new scenarios not found in its training data, and that limitation persists with HunyuanVideo.

Future versions of HunyuanVideo could improve with better prompt interpretation, different training data sets, increased computing power during training, or changes in the model design. As with all AI video synthesis models today, users still need to run multiple generations to get desired results. But it looks like the “open weights” AI video models are already here to stay.
