Microsoft wants to integrate chatGPT into Bing by the end of March

- Thread starter Why-Fi

Bing Search Gets Generative AI Captions Feature, Here's What It Does

The newly introduced Generative AI Captions feature is powered by OpenAI's latest LLM, GPT 4.

11/21/23 AT 8:25 AM GMT

Microsoft's latest update lets Bing Search results show AI-generated webpage summaries and snippets. WIKIMEDIA COMMONS

Microsoft has added a new feature dubbed Generative AI Captions to Bing Search. The feature offers customised snippets and summaries of individual web pages.

Microsoft recently rebranded Bing Chat to Copilot. Aside from this, the Redmond-based tech giant is rolling out new features to its Bing Search.

In a blog post, Microsoft said Generative Captions are powered by OpenAI's latest large language model (LLM), GPT-4. After analysing a search query, the feature extracts information from a webpage and presents it in what Microsoft describes as "easily digestible snippets".

Generative Captions will be different for every query and will probably generate unique snippets based on what the user is looking for. Microsoft also clarified that the feature isn't always likely to reflect the exact words used on the page since it generates these captions using a mixture of signals and techniques.

Furthermore, the company noted that website owners will have the option to opt out of Generative Captions by using the NOCACHE and NOARCHIVE tags. However, they might have to do this manually.
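For site owners who want to check whether a page already carries those opt-out directives, a small script can help. The sketch below is only an illustration: it assumes the directives are declared in a standard `<meta name="robots" ...>` (or `bingbot`) tag, which the article does not spell out, and the function and class names are hypothetical.

```python
from html.parser import HTMLParser

# Directives the article names as the opt-out switches.
OPT_OUT = {"nocache", "noarchive"}


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> / <meta name="bingbot"> tags.

    Assumption: the opt-out is declared via the usual robots meta tag convention.
    """

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {key: (value or "") for key, value in attrs}
        if attr.get("name", "").lower() in {"robots", "bingbot"}:
            for token in attr.get("content", "").split(","):
                self.directives.add(token.strip().lower())


def opt_out_directives(html):
    """Return which of the opt-out directives appear in the page's meta tags."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives & OPT_OUT
```

Calling `opt_out_directives(page_html)` on a page's markup returns an empty set when neither directive is present, which would suggest the tag still has to be added by hand.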

Microsoft also added a slew of new features to its Bing Webmaster tools. Now, website owners can see how much traffic they are getting from search queries and Bing Chat.

The latest Bing Search feature could turn out to be an alternative to Google's Notes feature for the search results and articles on Discover, which is part of Google Search.

Microsoft updates Bing Chat

According to an earlier report, Microsoft is planning to let users turn off Bing Chat's search engine. While the company has neither confirmed nor denied this speculation yet, it has updated the Bing AI chatbot service. This update will restrict people from generating fake film posters such as those containing Disney's logo and help the company avoid copyright infringement, as per a report by The Register.

Social media users have been using AI to generate images of dogs as Disney characters for a while now. Much to their chagrin, the trend caught the attention of the entertainment giant.

I saw this morning Disney AI Poster Trend Drives Microsoft To Alter Bing Image Creator: Illustrations 297677818, 283175153, 291280580 © Stockeeco | https://t.co/ByTZ5ROdyU

Microsoft is steering clear of the dog house after its… https://t.co/9gbzUMpLx1 👁

— alasdair lennox (@isawthismorning) November 20, 2023

As a result, Microsoft decided to temporarily block the word "Disney" in people's prompts, according to a Financial Times report. While the ban has been lifted, Bing has reportedly stopped recreating Disney's wording or logo.

This incident has reignited controversy surrounding AI text-to-image models' ability to violate copyright. Apparently, these models were trained on protected IP and trademarks and are capable of accurately generating the content.

In one of the examples, a person asked Midjourney AI to generate an image of an "average woman of Afghanistan". Notably, the AI tool came up with an almost carbon copy of the iconic 1984 photo of Sharbat Gula, taken by American photographer Steve McCurry.

This is the best example I've found yet of how derivative AI 'art' is. The person who generated the image on the left asked Midjourney to generate 'an average woman of Afghanistan'. It produced an almost carbon copy of the 1984 photo of Sharbat Gula, taken by Steve McCurry. 1/ pic.twitter.com/D0FDbqrcPA

— Anwen Kya(@Kyatic) November 16, 2023

Artists and illustrators who have sued companies building AI text-to-image tools argue that the software can directly rip off their work and copy their styles. The defendants, on the other hand, believe it is imperative to protect AI-generated images under fair use since the images simply transform original works rather than replace them.

Microsoft now lets you uninstall Edge, Bing, Cortana

It will be interesting to see whether Microsoft will introduce stricter rules for using its AI image creator. In the meantime, the company has rolled out a new update for Windows users in Europe, allowing them to uninstall some of its basic apps including Cortana, the Edge browser and Bing Search.

It is worth noting that Microsoft is not rolling out this update to provide a superior user experience or to let users decide which app they want to get rid of. Instead, the tech behemoth is making this change to comply with the Digital Markets Act (DMA).

DMA, which was announced by the European Union, urges Microsoft to give its users the option to remove all basic apps. This act is slated to come into effect in March 2024 in the European Economic Area (EEA).

Microsoft’s Edge Copilot AI can’t really summarize every YouTube video

It leans on text from subtitles and transcriptions.

The chatbot’s summarization feature relies on preprocessed video data or existing subtitles and transcripts.

By Amrita Khalid, one of the authors of audio industry newsletter Hot Pod. Khalid has covered tech, surveillance policy, consumer gadgets, and online communities for more than a decade.

Dec 8, 2023, 9:18 PM EST

One feature added to Microsoft’s AI Copilot in the Edge browser this week is the ability to generate text summaries of videos. But Edge Copilot’s time-saving feature is still fairly limited and only works on pre-processed videos or those with subtitles, as Mikhail Parakhin, Microsoft’s CEO of advertising and web services, explained.

As spotted by MSPowerUser, Parakhin writes, “In order for it to work, we need to pre-process the video. If the video has subtitles - we can always fallback on that, if it does not and we didn’t preprocess it yet - then it won’t work,” in response to a question.

In other words, on its own Edge Copilot doesn’t so much summarize videos as it summarizes the text transcripts of the videos. Copilot can also perform a similar function throughout Microsoft 365, including summarizing Teams video meetings and calls for customer service agents — and in both cases, the audio needs to be transcribed first by Microsoft. Copilot on Microsoft Stream can also summarize any video, but again, it requires users to generate a written transcript.
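Parakhin's description boils down to a simple fallback rule, which can be sketched as below. This is a reading of his quote and not Microsoft's actual implementation; the function name and return labels are hypothetical.

```python
from typing import Optional


def pick_summary_source(is_preprocessed: bool, has_subtitles: bool) -> Optional[str]:
    """Decide which text a video summary could be built from.

    Mirrors the quoted rule: use pre-processed data when it exists,
    fall back on subtitles otherwise, and give up if neither is available.
    """
    if is_preprocessed:
        return "preprocessed"
    if has_subtitles:
        return "subtitles"
    return None  # no text to summarize, so the feature "won't work"
```

In every branch the summary is built from text, which is the article's point: the model summarizes a transcript and does not read the video itself.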

The conversation started after designer Pietro Schirano posted a screen recording of Edge Copilot summarizing a YouTube video about the GTA VI trailer. In this case, Copilot appeared to be doing its job perfectly. The user in the recording presses the “Generate video summary” button in the Copilot sidebar, and mere seconds later, Copilot churns one out, complete with highlights and timestamps.

Of course, many platforms, including YouTube and Vimeo, can automatically generate transcripts and subtitles — if users enable the feature. After The Verge asked Parakhin on X if we could assume most publicly available videos (i.e. YouTube) weren’t pre-processed, he replied: “Should work for most videos.”

Copilot is just the latest example of the generative AI race Microsoft is competing in with Google (and others). Last month, Google upgraded the YouTube extension for its Bard chatbot to enable it to summarize the content of a video and surface specific information from it. Just this week, Google announced a major Gemini update that has its own issues — the company’s editing may have misrepresented some of the AI’s capabilities in a demo, and it doesn’t always have its facts straight.

Parakhin has been candid about the various stages of Copilot’s evolution on social media. While on a plane on Tuesday morning, the machine learning expert posted on X: “Adding ability for Edge Copilot to use information in videos - on a flight.”

Microsoft Copilot app arrives on iOS, bringing free ChatGPT-4 to iPhone and iPad

By Mayank Parmar

December 30, 2023

Microsoft has released its Copilot app for iOS devices, just two days after I reported exclusively that the iOS version was almost ready. Now, iPhone and iPad users can download it from the Apple App Store. Copilot isn’t just another name for Bing Chat; it brings some cool new things to the table.

The Microsoft Copilot app is available via Apple’s App Store, and the tech giant clarified that the AI app is optimized for iPad. In our tests, we also noticed that the Copilot app offers a better experience than the dedicated ChatGPT app for iPad.

While Bing integrates search, rewards, and chat functionalities, Copilot streamlines its focus on an advanced chat interface, incorporating features like the innovative DALL-E 3. Unlike Bing, which combines search and AI, Copilot emerges as a ChatGPT-like app tailored to iOS devices.

Microsoft Copilot for iPad

Copilot maintains its versatility as a multi-functional assistant on Android, supporting GPT Vision, GPT-4, and DALL-E. The addition of ChatGPT-4 Turbo, available to select users, enhances its ability to provide information on recent events, especially with the search plugin disabled.

Copilot is ChatGPT-4 for iOS, but it’s absolutely free. You can write emails, stories, or even summarize tough texts. It’s great with languages too, helping you translate, proofread, and more. And it’s all free, which Microsoft says won’t change.

It also has plugin support, including third-party plugins, at no extra cost. This can be super handy for planning trips or sprucing up your resume. Plus, there’s the Image Creator that turns your words into pictures right on your phone.

Microsoft launches Copilot Pro for $20 per month per user

Copilot Pro gives you the latest features and best models that Microsoft AI has to offer.

Barry Schwartz on January 15, 2024 at 2:00 pm

Copilot Pro, the most advanced and fastest version of Copilot, has been released today by Microsoft. Copilot, the new name for the new Bing Chat experience, now has a paid version that costs $20 per month per user. This brings “a new premium subscription for individuals that provides a higher tier of service for AI capabilities, brings Copilot AI capabilities to Microsoft 365 Personal and Family subscribers, and new capabilities, such as the ability to create Copilot GPTs,” Microsoft announced.

Features in Copilot Pro. Copilot Pro has these features that go above and beyond normal Copilot:

- A single AI experience that runs across your devices, understanding your context on the web, on your PC, across your apps and soon on your phone to bring the right skills to you when you need them.

- Access to Copilot in Word, Excel, PowerPoint, Outlook, and OneNote on PC, Mac, and iPad for Microsoft 365 Personal and Family subscribers.

- Priority access to the very latest models, including OpenAI’s new GPT-4 Turbo. With Copilot Pro you’ll have access to GPT-4 Turbo during peak times for faster performance and, coming soon, the ability to toggle between models to optimize your experience how you choose, Microsoft explained.

- Enhanced AI image creation with Image Creator from Designer (formerly Bing Image Creator) – ensuring it’s faster with 100 boosts per day while bringing you more detailed image quality as well as landscape image format.

- The ability to build your own Copilot GPT – a customized Copilot tailored for a specific topic.

What else is new. Microsoft also announced these general improvements around Copilot:

- Copilot GPTs. Copilot GPTs let you customize the behavior of Microsoft Copilot on a topic that is of particular interest to you.

- Copilot mobile app. The Copilot mobile app is now available for Android and iOS.

- Copilot in the Microsoft 365 mobile app. Copilot is being added to the Microsoft 365 mobile app for Android and iOS for individuals with a Microsoft account.

Why we care. If you love Copilot, aka Bing Chat, and want to get the best out of it, you may want to try Copilot Pro. It will give you the more advanced AI models, prioritize your prompts before others and give you more usage than the free version.

iceberg_is_on_fire

Wearing Lions gear when it wasn't cool

I've been using Bing for a minute now and yeah, my searches don't have me doubling back to Google like I used to when I first started using it.

interesting, good find.

Redguard

Hoonding

- A legal dispute involving Alphabet, Google's parent company, revealed Microsoft's unsuccessful attempts to have Apple switch to Bing, highlighting Bing's lack of appeal to users.

- Microsoft tried to sell Bing to Apple, but Apple declined due to Bing's lower search quality and investment.

- Given how Bing was an early home for Copilot, it raises questions about the impact of Bing on Microsoft's AI progress.

While Bing hasn't exactly made a big splash in the search engine market, one could argue that the service was a good stepping stone for Copilot. After all, Copilot for the Web took over Bing Chat's place, and it has only snowballed from there. However, recent news has revealed that Microsoft did try to sell Bing to Apple, but the deal was cut off due to the quality of Bing's search results.

The time when Microsoft Bing could have been Apple's

As reported by CNBC, the news broke during a legal battle with Alphabet, the holding company for Google. This was during a discussion on whether Google has a monopoly on web searches, with Google arguing that it's playing fair. As part of its argument, it revealed that Microsoft tried to convince Apple to use Bing as its default search engine in 2009, 2013, 2015, 2016, 2018, and 2020. However, each time, Microsoft got the same result:

“In each instance, Apple took a hard look at the relative quality of Bing versus Google and concluded that Google was the superior default choice for its Safari users. That is competition,” Google wrote in the filing.

Furthermore, during Microsoft's 2018 attempt, it offered Apple the chance to either buy Bing or enter a joint venture over it. And as you might expect, Apple wasn't impressed. As per Eddy Cue, Apple’s senior vice president of services:

“Microsoft search quality, their investment in search, everything was not significant at all. And so everything was lower. So the search quality itself wasn’t as good. They weren’t investing at any level comparable to Google or to what Microsoft could invest in. And their advertising organization and how they monetize was not very good either.”

Unfortunately, while Google is trying to argue its case that it doesn't have a monopoly, it is inflicting some collateral damage on Microsoft's reputation. However, it's an interesting topic to think about; if Bing did end up becoming part of Apple's product line, would Microsoft go on to release Copilot? Was the switch from Bing Chat to Copilot an important catalyst for Microsoft's continued development, or would it have gone full steam ahead even if it lost Bing? Perhaps we'll never know, but at least we have proof that Bing isn't impressing many people, no matter how much Microsoft pesters users to make the switch to Bing.

1/1

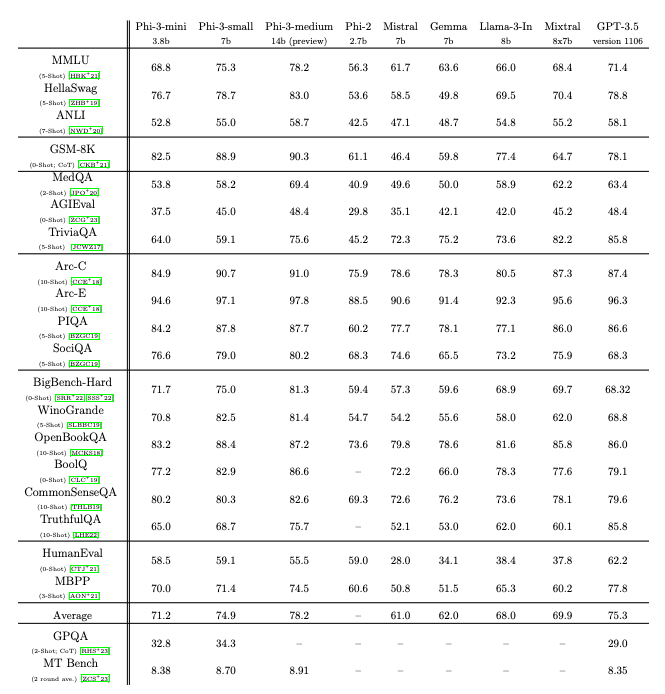

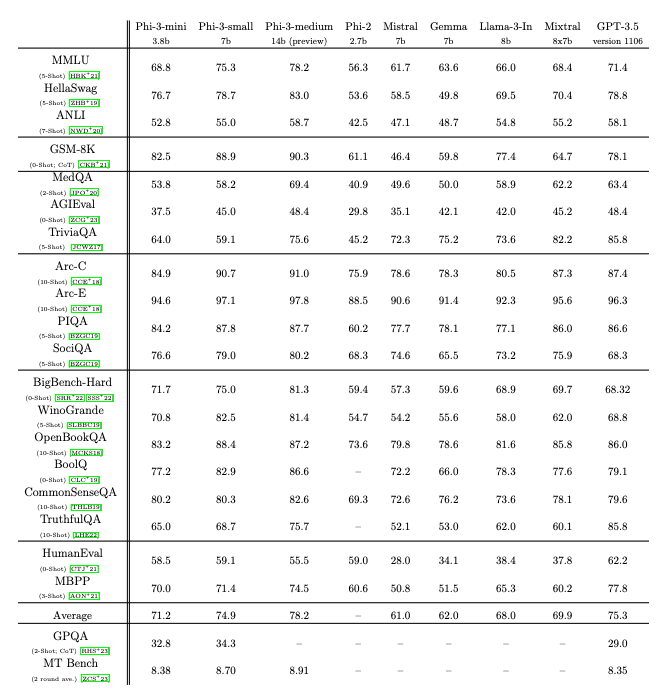

phi-3-mini: 3.8B model matching Mixtral 8x7B and GPT-3.5

Plus a 7B model that matches Llama 3 8B in many benchmarks.

Plus a 14B model.

arxiv.org

To post tweets in this format, more info here: https://www.thecoli.com/threads/tips-and-tricks-for-posting-the-coli-megathread.984734/post-52211196

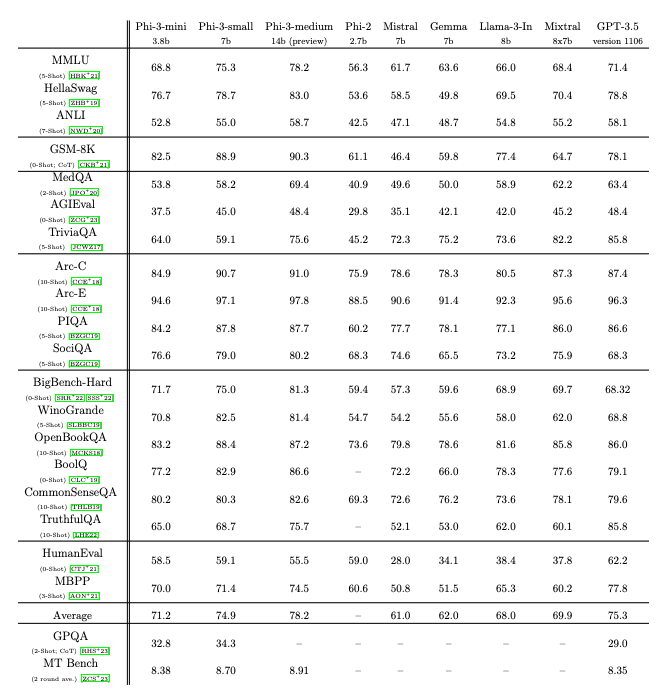

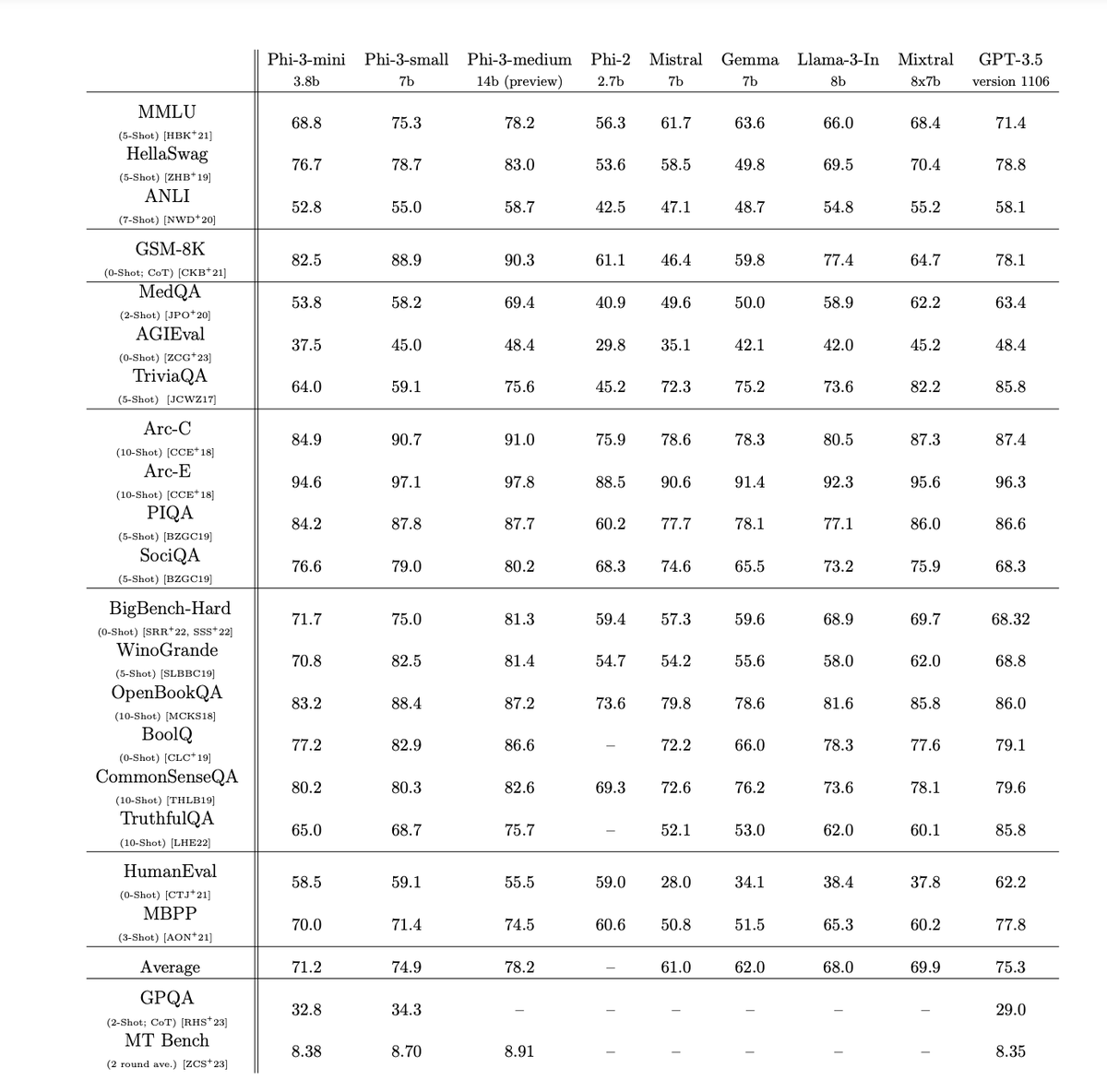

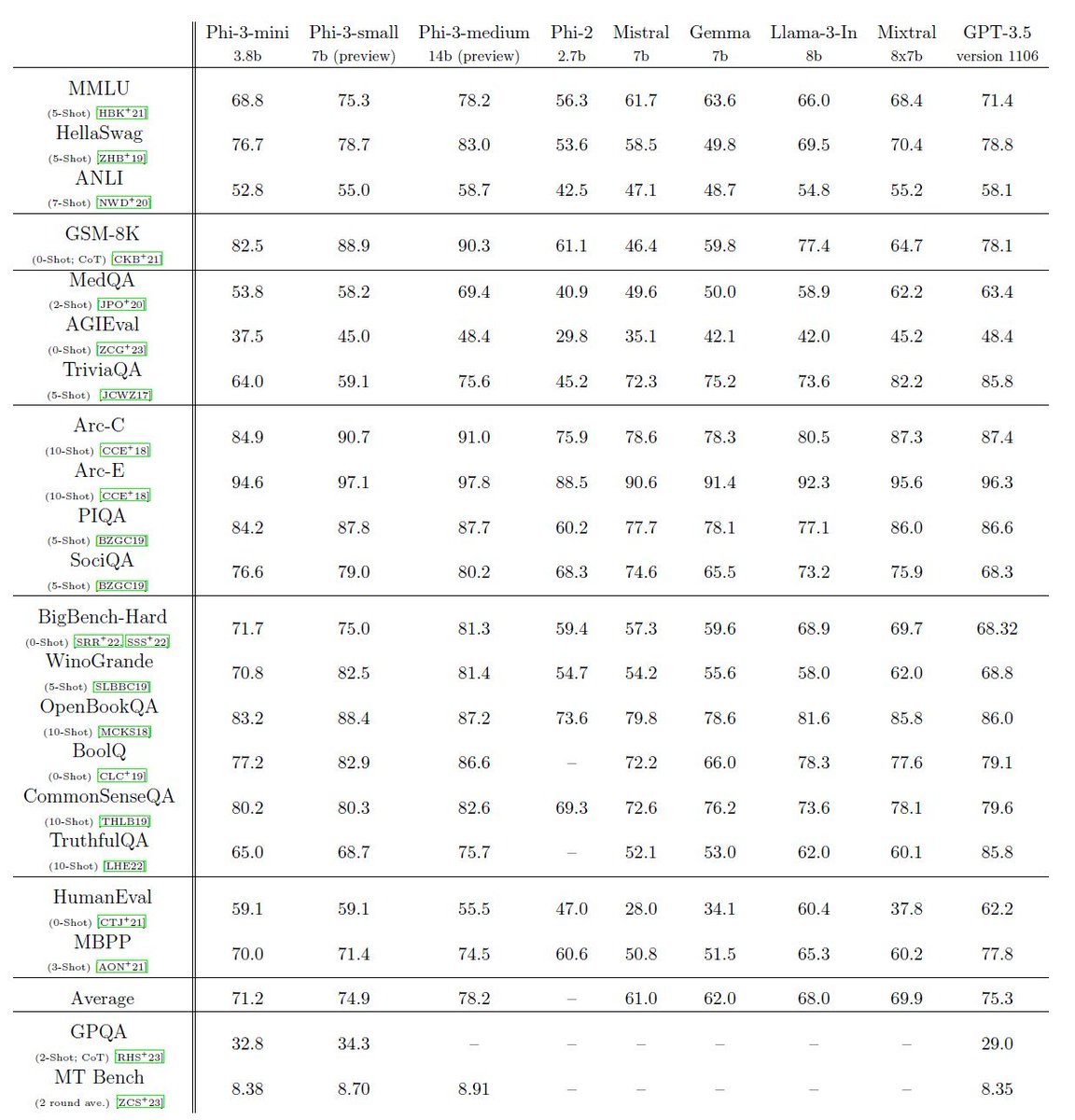

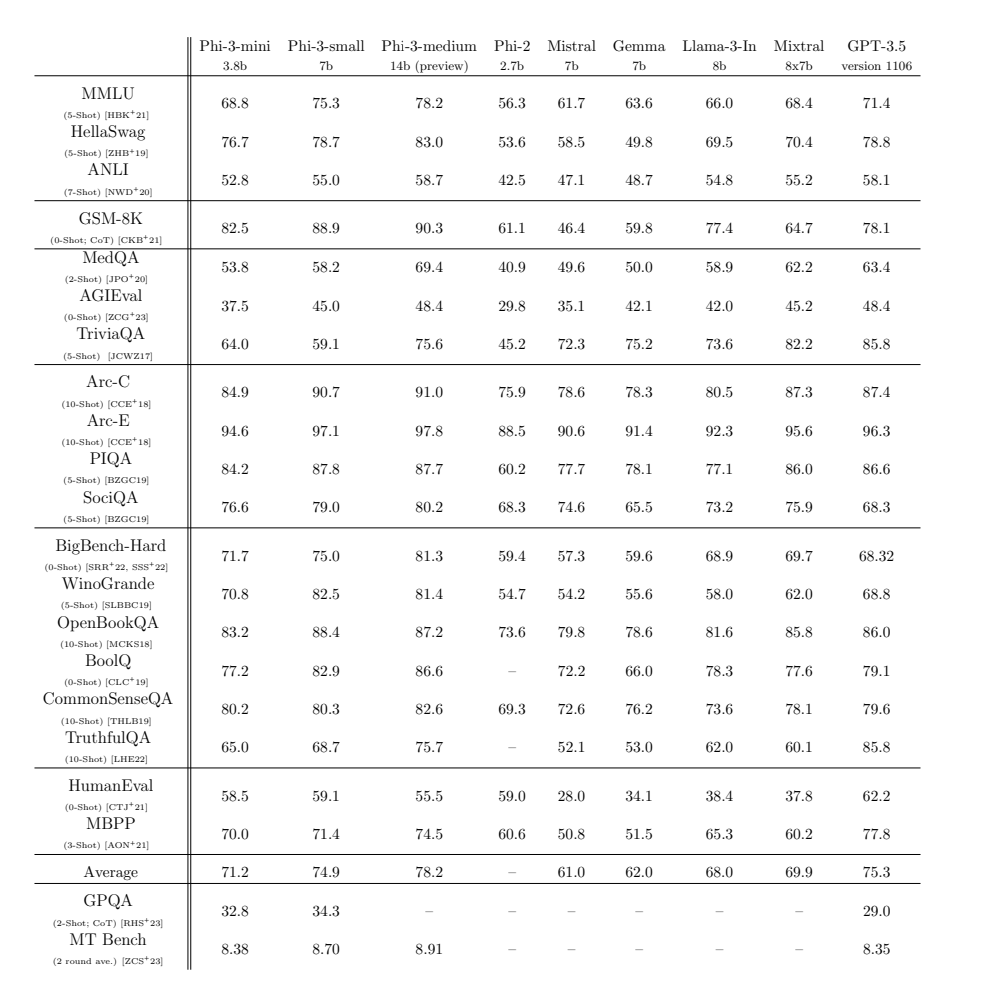

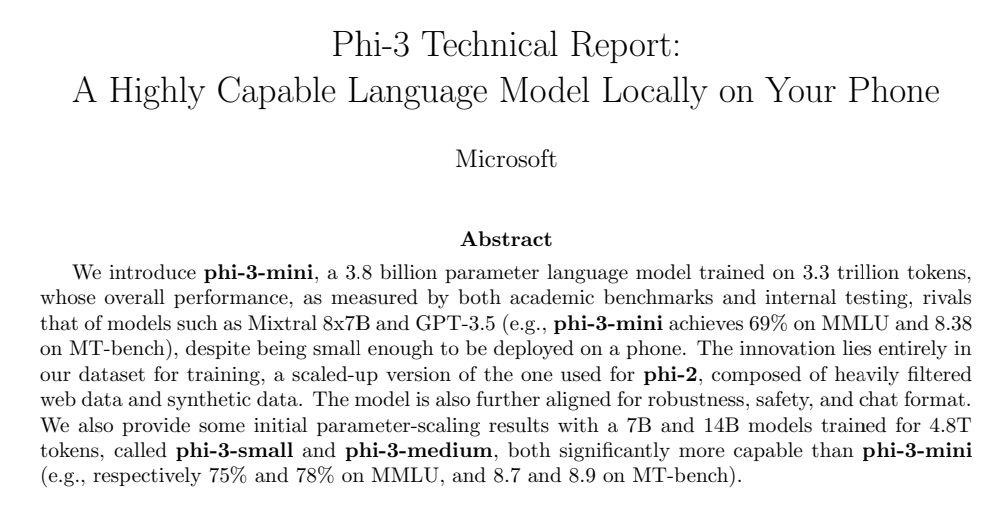

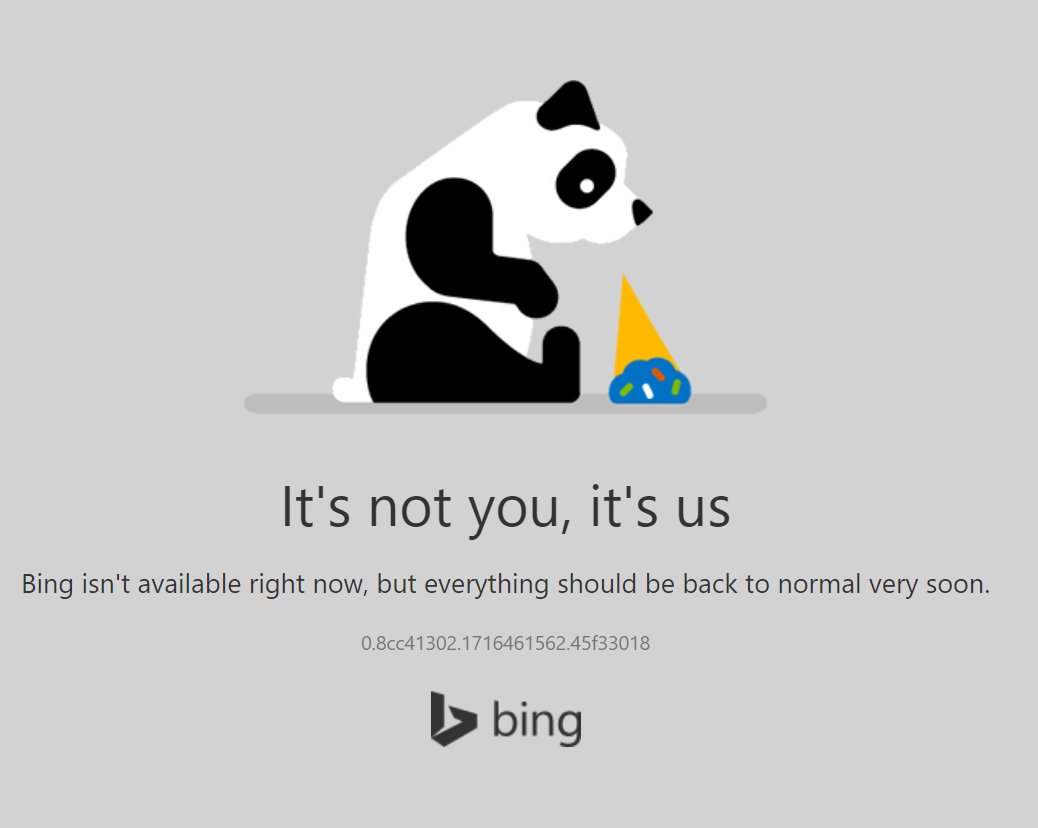

Phi-3 Technical Report: A Highly Capable Language Model Locally on Your Phone

We introduce phi-3-mini, a 3.8 billion parameter language model trained on 3.3 trillion tokens, whose overall performance, as measured by both academic benchmarks and internal testing, rivals that of models such as Mixtral 8x7B and GPT-3.5 (e.g., phi-3-mini achieves 69% on MMLU and 8.38 on...

1/5

Microsoft just released Phi-3

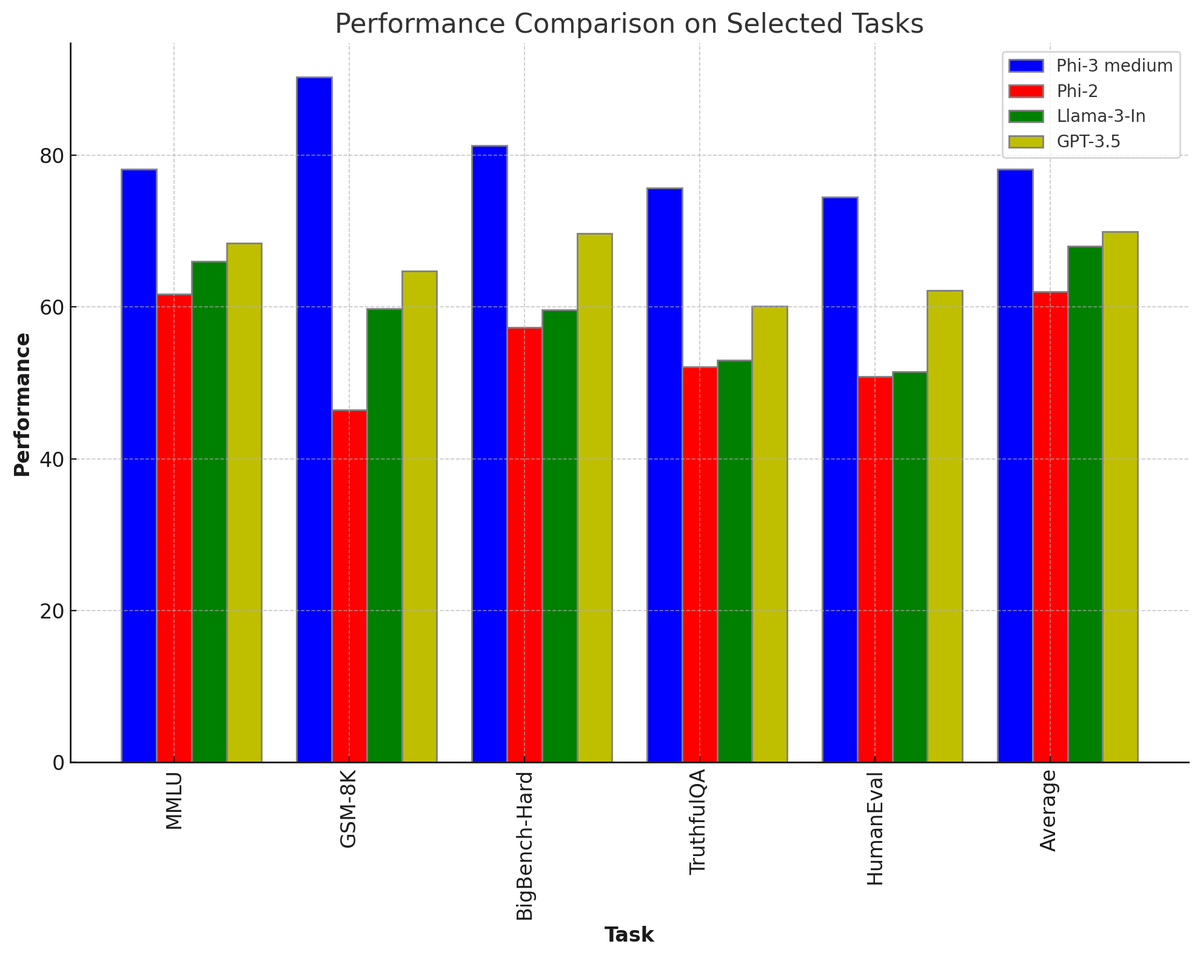

Phi-3 14B beats Llama-3 8B, GPT-3.5 and Mixtral 8x7B MoE in most of the benchmarks.

Even the Phi-3 mini beats Llama-3 8B in MMLU and HellaSwag.

2/5

More details and insights to follow in tomorrow's AI newsletter.

Subscribe now to get it delivered to your inbox first thing in the morning tomorrow: Unwind AI | Shubham Saboo | Substack

3/5

Research Paper:

4/5

This is absolutely insane speed of Opensource AI development.

5/5

True, all of this is happening so fast!!

1/2

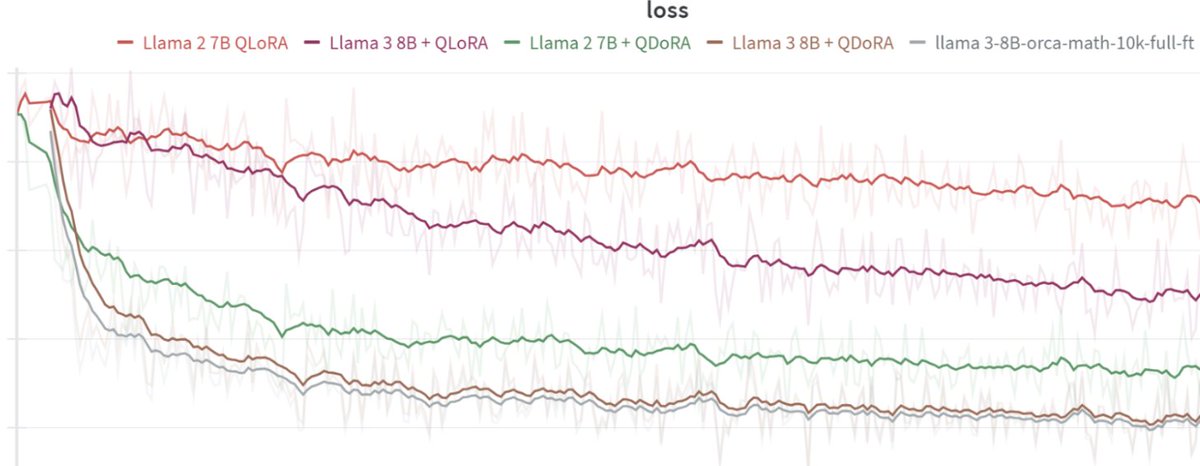

phi-3 is out! never would have guessed that our speculative attempt at creating synthetic python code for phi-1 (following TinyStories) would eventually lead to a gpt-3.5-level SLM. defly addicted to generating synth data by now...

2/2

hf:

https://langfuse.datastrain.io/project/cluve1io10001y0rnqesj5bz4/traces/484ec288-6a9d-480b-bcca-9c8dfd965325?observation=b86ac62c-9ac2-40d2-9916-63e4c3e11b17…

@Prince_Canuma

mlx-community (MLX Community)

@answerdotai

@AIatMeta

@huggingface

@MicrosoftAI

microsoft/Phi-3-mini-4k-instruct · Hugging Face

1/5

Amazing numbers. Phi-3 is topping GPT-3.5 on MMLU at 14B. Trained on 3.3 trillion tokens. They say in the paper 'The innovation lies entirely in our dataset for training - composed of heavily filtered web data and synthetic data.'

2/5

Small is so big right now!

3/5

phi-3-mini: 3.8B model matching Mixtral 8x7B and GPT-3.5

Plus a 7B model that matches Llama 3 8B in many benchmarks.

Plus a 14B model.

[2404.14219] Phi-3 Technical Report: A Highly Capable Language Model Locally on Your Phone

4/5

The prophecy has been fulfilled!

5/5

Wow.

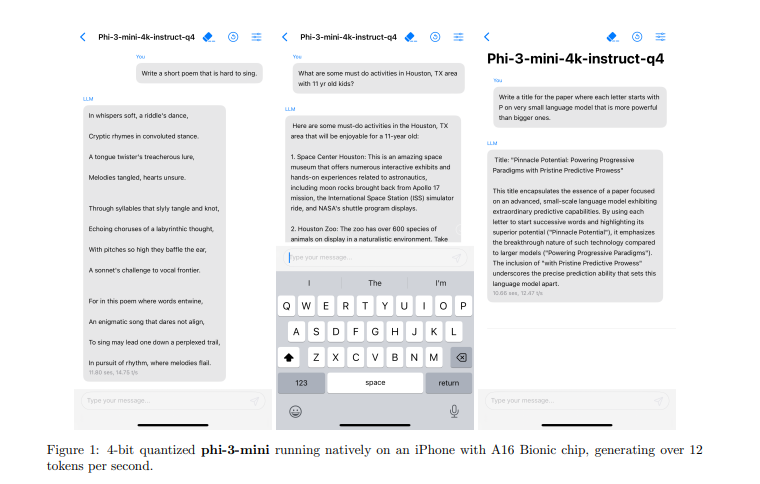

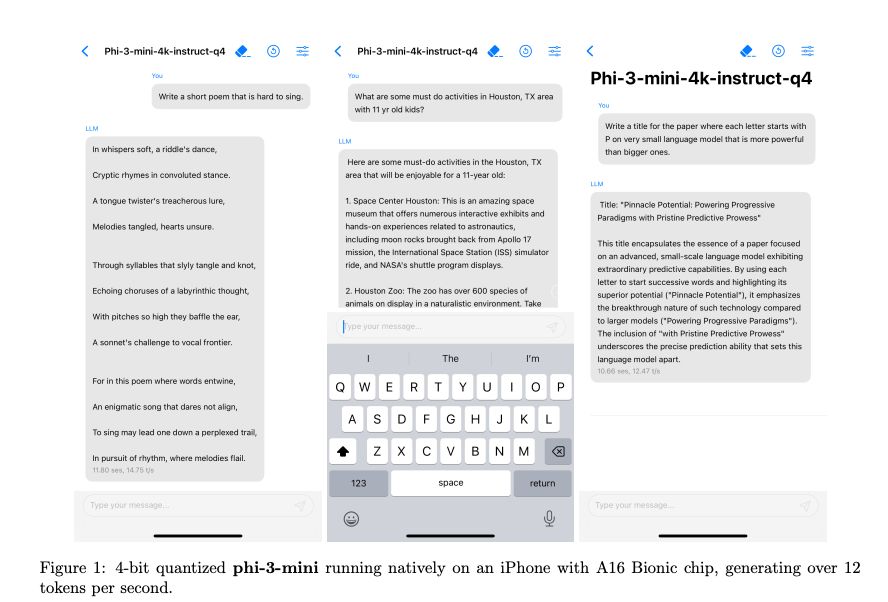

'Phi-3-mini can be quantized to 4-bits so that it only occupies ≈ 1.8GB of memory. We tested the quantized model by deploying phi-3-mini on iPhone 14 with A16 Bionic chip running natively on-device and fully offline achieving more than 12 tokens per second.'

1/9

Run Microsoft Phi-3 locally in 3 simple steps (100% free and without internet):

2/9

1. Install Ollama on your Desktop

- Go to https://ollama.com/

- Download Ollama on your computer (works on Mac, Windows and Linux)

- Open the terminal and type this command: 'ollama run phi3'

3/9

2. Install OpenWeb UI (ChatGPT like opensource UI)

- Go to https://docs.openwebui.com

- Install the docker image of Open Web UI with a single command

- Make sure Docker is installed and running on your computer

4/9

3. Run the model locally like ChatGPT

- Open the ChatGPT like UI locally by going to this link: http://localhost:3000

- Select the model from the top.

- Query and ask questions like ChatGPT

This is running on Macbook M1 Pro 16GB machine.

5/9

If you find this useful, RT to share it with your friends.

Don't forget to follow me

@Saboo_Shubham_ for more such LLMs tips and resources.

6/9

Run Microsoft Phi-3 locally in 3 simple steps (100% free and without internet):

7/9

Not checked yet.

@ollama was the first to push the updates!

8/9

That would be fine-tuning. You can try out

@monsterapis for nocode finetuning of LLMs.

9/9

Series of language models that pretty much outperformed llama-3 even with the small size.
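Once Ollama is serving `phi3` as described in the thread above, the model can also be queried from a script without the web UI. The sketch below is a minimal example, assuming Ollama is listening on its default local port (11434) and exposing its `/api/generate` endpoint; the helper names `build_request` and `ask` are made up for illustration.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt, model="phi3"):
    """Build a non-streaming generate request for a local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, model="phi3"):
    """Send the prompt and return the generated text (needs the server running)."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=120) as resp:
        return json.loads(resp.read())["response"]
```

With the server up, `print(ask("Explain what a small language model is in one sentence."))` should print the model's reply. Nothing leaves the machine, which matches the "without internet" claim in the thread.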

microsoft/Phi-3-mini-4k-instruct · Hugging Face

Edit: All versions: Phi-3 - a microsoft Collection

Meet Phi-3: Microsoft's New LLM That Can Run On Your Phone

Microsoft unveils Phi-3 model family, a collection of tiny LLMs that are highly powerful and can run on smartphones.

April 23, 2024

Who thought that one day we would have tiny LLMs that can keep up with highly powerful ones such as Mixtral, Gemma, and GPT? Microsoft Research announced a powerful family of small LLMs called the Phi-3 model family.

Highlights:

- Microsoft unveils Phi-3 model family, a collection of tiny LLMs that are highly powerful and can run on smartphones.

- The model family is composed of 3 models namely Phi-3-mini, Phi-3-small and Phi-3-medium.

- It shows impressive benchmark results, rivaling models like Mixtral 8x7B and GPT-3.5.

Microsoft’s Phi-3 LLM Family

Microsoft has leveled up its Generative AI game once again. It released Phi-2 back in December 2023, which had 2.7 billion parameters and provided state-of-the-art performance compared to base language models with less than 13 billion parameters. However, many LLMs released since then have outperformed Phi-2 on several benchmarks and evaluation metrics.

This is why Microsoft has released Phi-3, as the latest competitor in the Gen AI market, and the best thing about this model family is that you can run it on your smartphones!

So how powerful and efficient is this state-of-the-art model family? And what are its groundbreaking features? Let’s explore all these topics in-depth through this article.

Microsoft introduced the Model family in the form of three models: Phi-3-mini, Phi-3-small, and Phi-3-medium.

Let’s study all these models separately.

1) Phi-3-mini 3.8b

Phi-3-Mini is a 3.8 billion parameter language model that was trained on 3.3 trillion tokens. Despite its small size, its performance is comparable to that of larger models like Mixtral 8x7B and GPT-3.5.

Because Mini is so small, it can run locally on a mobile device. It can be quantized to 4 bits, requiring about 1.8GB of memory. Microsoft tested the quantized model by deploying Phi-3-Mini on an iPhone 14 with the A16 Bionic chip, where it ran natively on-device and fully offline at more than 12 tokens per second.
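The roughly 1.8GB figure follows from simple arithmetic on the parameter count. The sketch below is a back-of-the-envelope estimate that counts only the weights, treats the model as exactly 3.8 billion parameters, and ignores quantization metadata, activations, and the KV cache.

```python
def quantized_weight_bytes(num_params, bits_per_weight):
    """Bytes needed to store the weights alone at a given precision."""
    return num_params * bits_per_weight / 8


params = 3.8e9  # parameter count quoted for Phi-3-Mini

size_bytes = quantized_weight_bytes(params, 4)
size_gb = size_bytes / 1e9      # decimal gigabytes
size_gib = size_bytes / 2**30   # binary gibibytes
fp16_gb = quantized_weight_bytes(params, 16) / 1e9

print(f"4-bit: {size_gb:.2f} GB ({size_gib:.2f} GiB); fp16: {fp16_gb:.2f} GB")
# prints: 4-bit: 1.90 GB (1.77 GiB); fp16: 7.60 GB
```

At 16 bits per weight the same model would need about four times as much memory, which is why the 4-bit version is the one that fits comfortably on a phone.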

The phi-3-mini model uses a transformer decoder architecture with a default context length of 4K. It is built on a block structure similar to Llama 2 and uses the same tokenizer, with a vocabulary size of 32,064.

Thus, packages developed for the Llama-2 model family can be directly adapted to phi-3-mini. The model uses a hidden dimension of 3072, 32 heads, and 32 layers.
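As a rough sanity check, the architecture numbers above can be turned into a parameter estimate. The sketch below rests on several assumptions that are not in the article: a vocabulary of 32,064 entries (the figure given in the Phi-3 technical report), a Llama-style block with four square attention projections, a gated MLP with three weight matrices and an inner size of 8192, untied input and output embeddings, and no biases or normalization weights.

```python
hidden = 3072      # hidden dimension (from the article)
layers = 32        # number of layers (from the article)
heads = 32         # attention heads (from the article)
vocab = 32064      # vocabulary size (per the Phi-3 technical report)
mlp_inner = 8192   # ASSUMPTION: MLP inner size, not stated in the article

head_dim = hidden // heads               # size of each attention head
attn_params = 4 * hidden * hidden        # Q, K, V and output projections
mlp_params = 3 * hidden * mlp_inner      # gate, up and down projections
embed_params = 2 * vocab * hidden        # ASSUMPTION: untied in/out embeddings

total = layers * (attn_params + mlp_params) + embed_params
print(head_dim, f"{total / 1e9:.2f}B")
# prints: 96 3.82B
```

Under these assumptions the estimate comes out close to the quoted 3.8 billion parameters, so the reported dimensions are at least consistent with the model's stated size.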

What makes Phi-3-Mini innovative is its training dataset, a scaled-up version of the one used for Phi-2. This dataset combines heavily filtered web data with synthetic data. The model has also been further aligned for robustness, safety, and chat format.

2) Phi-3-Small and Phi-3-Medium

Microsoft has also released Phi-3-Small and Phi-3-Medium, both noticeably more capable than Phi-3-Mini. Phi-3-Small, with 7 billion parameters, uses the tiktoken tokenizer for better multilingual tokenization, with a vocabulary of 100,352 tokens and a default context length of 8K.

The Phi-3-small model follows the standard decoder architecture of the 7B model class, with 32 layers and a hidden size of 4096. To reduce the KV cache footprint, it uses grouped-query attention, in which four queries share a single key.

In addition, phi-3-small alternates layers of dense attention with a novel block-sparse attention, which further reduces the KV cache while maintaining long-context retrieval performance. An additional 10% of multilingual data was also used for this model.
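To see why sharing one key across four queries matters, it helps to estimate the KV cache size with and without grouping. The sketch below is illustrative only: it assumes 32 query heads (not stated in the article), 16-bit cache entries, a full 8K context of 8192 tokens, and it ignores the extra savings from block-sparse attention.

```python
def kv_cache_bytes(layers, kv_heads, head_dim, seq_len, bytes_per_value=2):
    """Size of the key and value caches for one sequence."""
    return 2 * layers * kv_heads * head_dim * seq_len * bytes_per_value


layers, hidden = 32, 4096         # from the article
query_heads = 32                  # ASSUMPTION: not stated in the article
head_dim = hidden // query_heads  # 128 under that assumption
seq_len = 8192                    # 8K context

# Standard multi-head attention: every query head has its own key/value head.
mha = kv_cache_bytes(layers, query_heads, head_dim, seq_len)
# Grouped-query attention: four query heads share one key/value head.
gqa = kv_cache_bytes(layers, query_heads // 4, head_dim, seq_len)

print(mha / 2**30, gqa / 2**30)
# prints: 4.0 1.0
```

Under these assumptions the cache for a full 8K context shrinks from about 4 GiB to about 1 GiB, a fourfold saving that comes directly from the four-to-one sharing ratio.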

Using the same tokenizer and architecture as phi-3-mini, Microsoft researchers also trained phi-3-medium, a 14B-parameter model, on the same data for slightly more epochs (4.8T tokens in total, the same as for phi-3-small). The model has 40 heads, 40 layers, and an embedding dimension of 5120.

Looking At the Benchmarks

The phi-3-mini, phi-3-small, and phi-3-medium models were evaluated on standard open-source benchmarks that measure reasoning ability (both common sense and logical reasoning). They are compared with GPT-3.5, phi-2, Mistral-7b-v0.1, Mixtral-8x7b, Gemma 7B, and Llama-3-instruct-8b.

Phi-3-Mini, the model suited for mobile phone deployment, scores 69% on MMLU and 8.38 on MT-bench.

With an MMLU score of 75.3, the 7 billion parameter Phi-3-small performs better than Meta’s newly released Llama 3 8B Instruct, which scores 66.

However, the biggest gap appeared with Phi-3-medium, which beat several models, including Mixtral 8x7B, GPT-3.5, and Meta’s newly launched Llama 3, on benchmarks such as MMLU, HellaSwag, ARC-C, and Big-Bench Hard.

This just goes to show how powerful these tiny mobile LLMs are compared to all these large language models which need powerful GPUs and CPUs to operate. The benchmarks give us an idea that the Phi-3 model family will do quite well in coding-related tasks, common sense reasoning tasks, and general knowledge capabilities.

Are there any Limitations?

Even though it is very powerful for its size and deployment device, the Phi-3 model family has one major limitation: its size fundamentally limits it for certain tasks, even though it shows language understanding and reasoning comparable to much larger models. For instance, it cannot store large amounts of factual knowledge, which causes it to perform worse on tests like TriviaQA.

“Exploring multilingual capabilities for Small Language Models is an important next step, with some initial promising results on phi-3-small by including more multilingual data. The use of carefully curated training data, targeted post-training, and improvements from red-teaming insights significantly mitigates these issues across all dimensions. However, there is significant work ahead to fully address these challenges.”

Microsoft also proposed a potential remedy for this drawback: it suggests that augmenting the model with a search engine can address the weakness. Furthermore, the model's language capabilities are mostly restricted to English, which underlines the need to explore multilingual capabilities for Small Language Models.

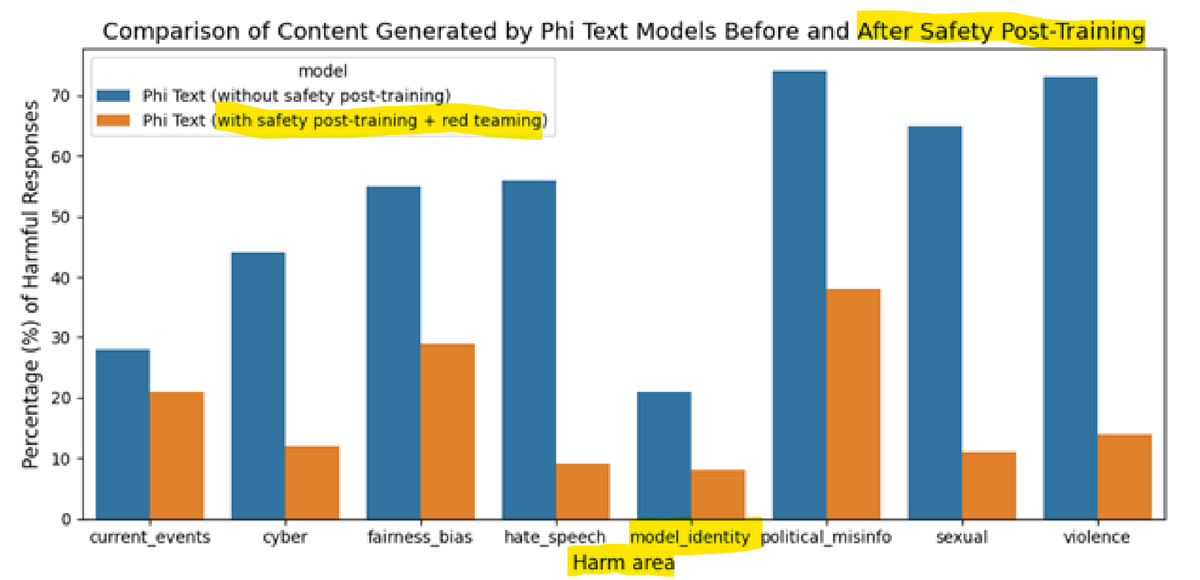

Is Phi-3 Safe?

Phi-3-mini was developed in accordance with Microsoft’s responsible AI (RAI) guidelines. The overall strategy included safety alignment in post-training, red-teaming, automated testing, and evaluations across hundreds of RAI harm categories. Several in-house datasets, along with datasets adjusted according to helpfulness and harmlessness preferences, were used to address the RAI harm categories in safety post-training.

To find further areas for improvement during the post-training phase, an independent red team at Microsoft iteratively examined phi-3-mini. Based on the team’s feedback, Microsoft refined the post-training dataset by curating additional datasets that addressed its findings. This process significantly reduced the rate of adverse responses.

Conclusion

Are we on the verge of a new era for mobile LLMs? Phi-3 is here to answer this question. The mobile developer community will benefit greatly from the Phi-3 models, especially Small and Medium. Microsoft has also recently been working on VASA-1, an image-to-video model, which is another big development in the gen AI space.

1/1

The same day OpenAI announced GPT-4o, we made the model available for testing on the Azure OpenAI Service. Today, we are excited to announce full API access to GPT-4o.

1/1

With Microsoft Copilot, Copilot stack, and Copilot+ PCs, we're creating new opportunity for developers at a time when AI is transforming every layer of the tech stack. Here are highlights from my keynote this morning at #MSBuild.

1/1

Here are some of the ways a more accessible world is being built using our platforms and tools. #MSBuild

1/2

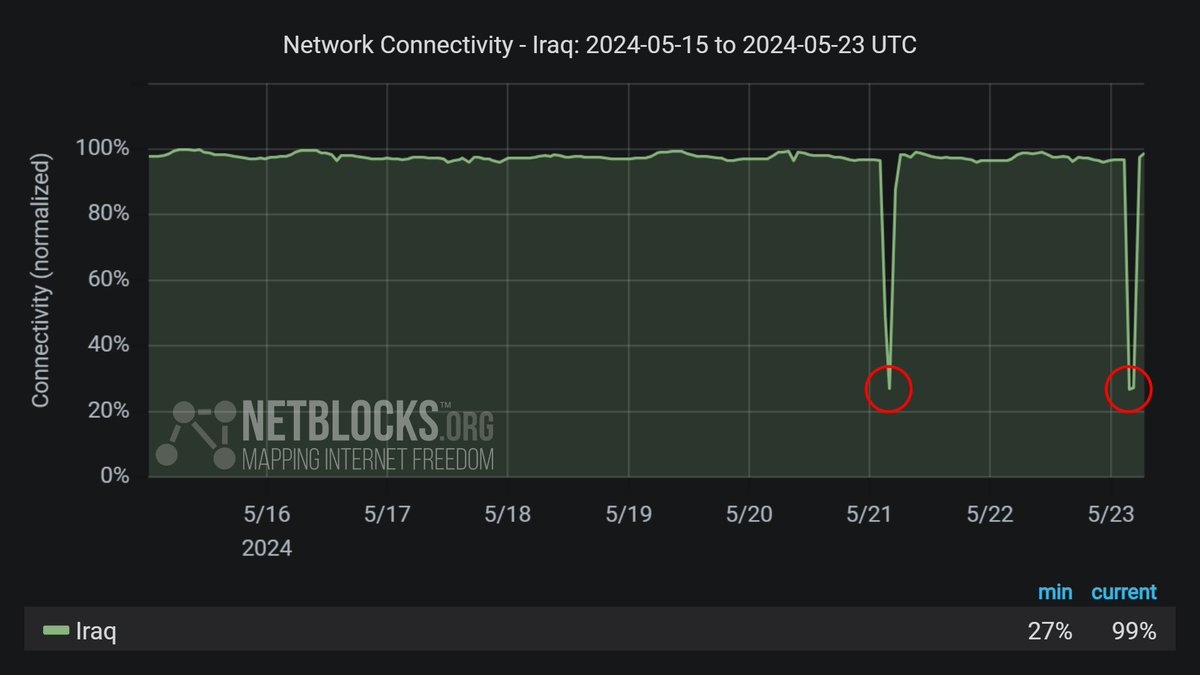

Note: Microsoft’s search engine Bing is currently experiencing international outages, particularly impacting CoPilot, ChatGPT and DuckDuckGo; incident not related to country-level internet disruptions or filtering #BingDown

2/2

Confirmed: Network data show a nation-scale disruption to internet connectivity in #Iraq for the second morning this week as authorities restrict service in a bid to prevent cheating and leaks during general school exams; incident duration ~2 hours

1/1

Bing Search is down, bringing down DuckDuckGo, Copilot in Windows, ChatGPT Search, Ecosia and more services

Bing Search Is Down; Taking Down DuckDuckGo, Ecosia, ChatGPT Search & More
