AutoDev, an AI-driven development framework, relegates human developers to the role of mere supervisors of AI doing software engineering.

vulcanpost.com

Michael Petraeus

April 15, 2024

Two months ago, Jensen Huang, Nvidia’s CEO, dismissed the past 15 years of global career advice and advised that it’s not a good idea to learn to code anymore, at least not for most people.

Last month, Microsoft added its own contribution to the argument by releasing a research paper detailing AutoDev: an automated AI-driven development framework, in which human developers are relegated to the role of mere supervisors of artificial intelligence doing all of the actual software engineering work.

Goodbye, developers?

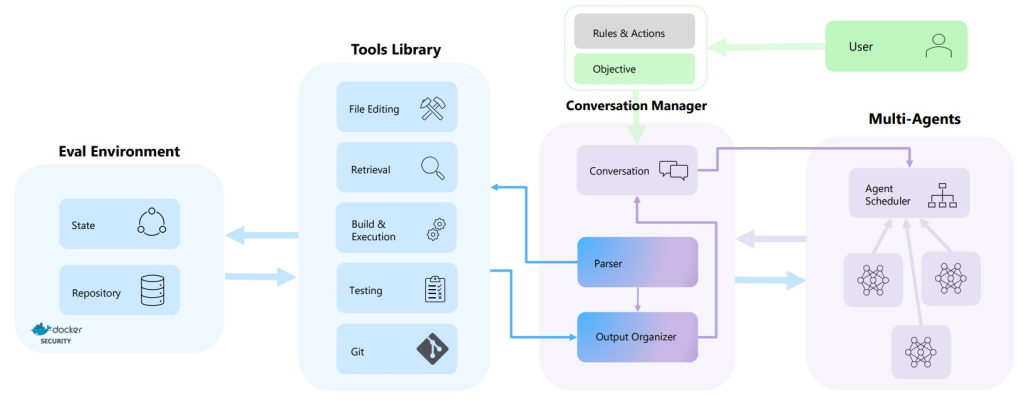

The authors have outlined, and successfully tested, a system of multiple AI agents that interact with each other and with the provided repositories, not only tackling complex software engineering tasks but also validating the outcomes on their own.

The role of humans, in their own words: “transforms from manual actions and validation of AI suggestions to a supervisor overseeing multi-agent collaboration on tasks, with the option to provide feedback. Developers can monitor AutoDev’s progress toward goals by observing the ongoing conversation used for communication among agents and the repository.”

In other words, instead of writing code, human developers would become spectators to the work of AI, interjecting whenever deemed necessary.

It’s really more akin to a management role, where you work with a team of people, guiding them towards the goals set for a project.

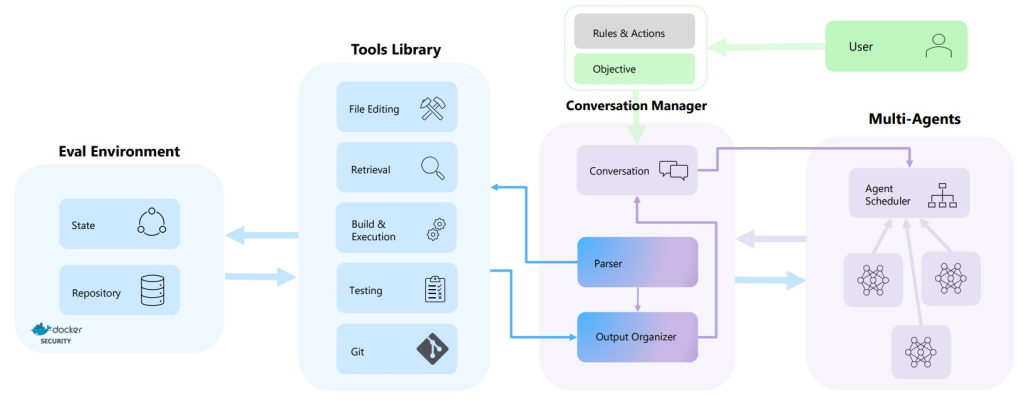

Overview of the AutoDev framework. Only the green input is provided by humans. / Image Credit: Microsoft

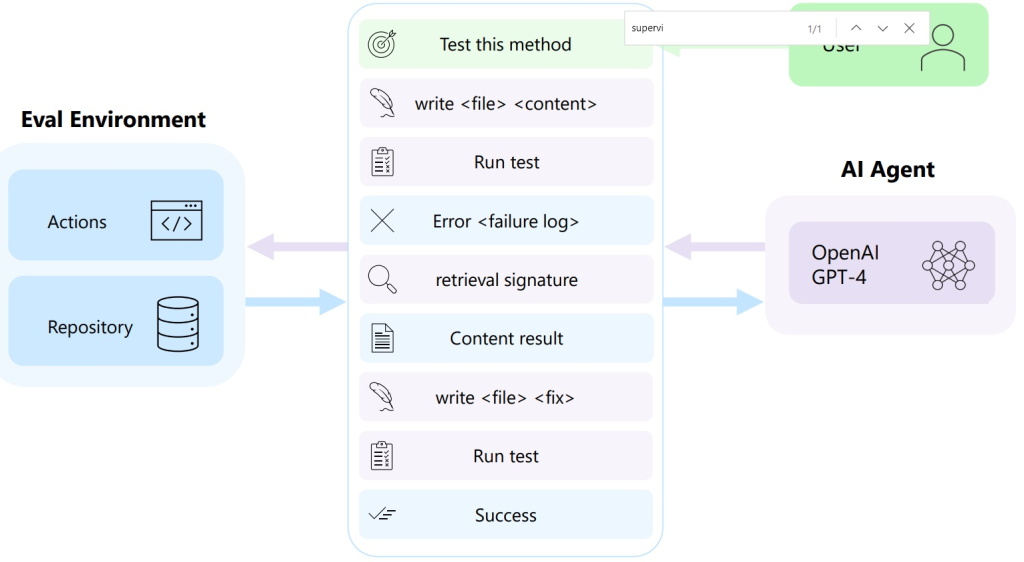

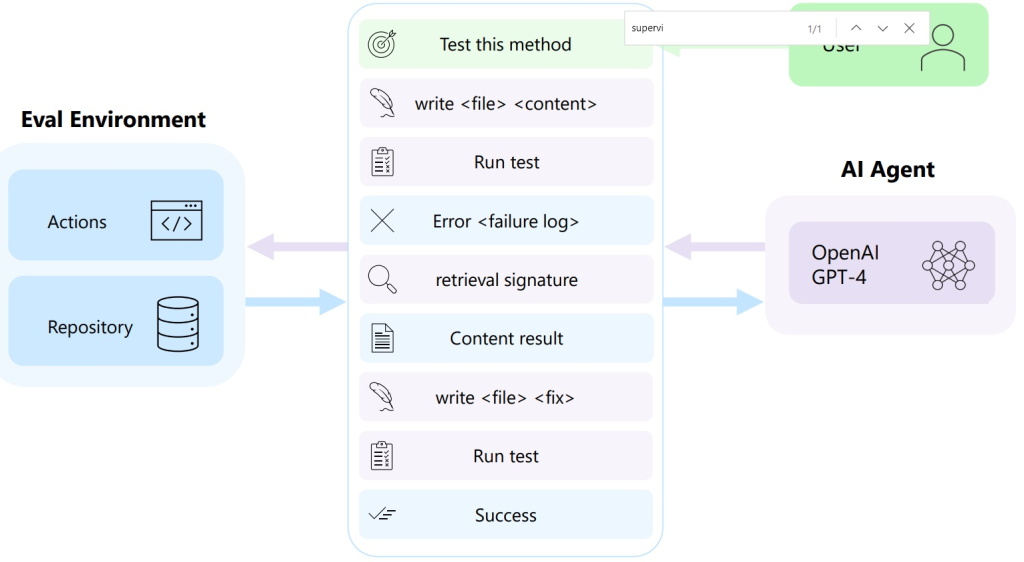

AutoDev workflow, outlining all of the actions that AI agents can perform on their own in pursuit of the desired output. / Image Credit: Microsoft

“We’ve shifted the responsibility of extracting relevant context for software engineering tasks and validating AI-generated code from users (mainly developers) to the AI agents themselves.”
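To make the idea of agents validating their own output more concrete, here is a minimal sketch of a propose-and-validate loop. It is not AutoDev’s actual implementation: the names `Conversation`, `run_task`, `stub_coder` and `stub_validator` are hypothetical, and both "agents" are plain stand-in functions rather than language models. The shared log stands in for the ongoing conversation that a human supervisor could observe.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple


@dataclass
class Conversation:
    """Shared log a human supervisor could read (hypothetical name)."""
    messages: List[str] = field(default_factory=list)

    def post(self, sender: str, text: str) -> None:
        self.messages.append(f"{sender}: {text}")


def run_task(
    propose: Callable[[Optional[str]], str],
    validate: Callable[[str], Optional[str]],
    log: Conversation,
    max_rounds: int = 3,
) -> Tuple[Optional[str], int]:
    """Ask for candidates until one passes validation or rounds run out."""
    feedback: Optional[str] = None
    for round_no in range(1, max_rounds + 1):
        candidate = propose(feedback)
        log.post("coder", f"round {round_no}: proposed a candidate")
        feedback = validate(candidate)  # None means the checks passed
        if feedback is None:
            log.post("validator", "checks passed")
            return candidate, round_no
        log.post("validator", f"checks failed: {feedback}")
    return None, max_rounds


def stub_coder(feedback: Optional[str]) -> str:
    """Stand-in for a code-writing agent: buggy first, fixed after feedback."""
    if feedback is None:
        return "def add(a, b):\n    return a - b\n"
    return "def add(a, b):\n    return a + b\n"


def stub_validator(source: str) -> Optional[str]:
    """Stand-in for a testing agent. A real system would isolate this step."""
    namespace: dict = {}
    exec(source, namespace)  # acceptable only because the input is our own stub
    result = namespace["add"](2, 3)
    return None if result == 5 else f"add(2, 3) returned {result}, expected 5"


if __name__ == "__main__":
    log = Conversation()
    code, rounds = run_task(stub_coder, stub_validator, log)
    print(f"accepted after {rounds} round(s)")
    print("\n".join(log.messages))
```

In this toy run, the human never touches the code. They only read the log and could step in with feedback if the loop went in the wrong direction.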

But if that’s the case, do we need human developers anymore? And what sort of skills should they have or acquire to remain useful in this AI-enabled workplace?

Will machines require soft skills in the future?

The conclusion to this evolutionary process may be unsettling to many, particularly to those highly talented but reclusive software engineers who prefer working alone and dread social interaction.

Well, as it happens, social skills may soon be required to… interact with machines.

Since all conversational models are essentially mimicking human communication, AI tools will require similar skills of their users as other humans would.

Image Credit: VisualGeneration / depositphotos

Fortunately, nobody is planning to equip computers with human emotions, so at least this aspect of teamwork is unlikely to become a problem. But many developers who today simply write code will now have to specialise in explaining what needs to be done rather than doing it themselves.

This certainly wasn’t a challenge that most techies foresaw when they entered the field, but it may very soon become a do-or-die situation for them.

If you’re not an effective supervisor, instructing machines to do the right things, your value in most companies may go down and not up, despite your highly specialised knowledge.

The software engineering path has just become less predictable

There will still be jobs for human programmers, of course, but they are more likely to be available in the companies that make the technology underpinning AI. After all, some future development and maintenance will have to be done by humans, if only as a safety measure.

That said, the pool of vacancies for software engineering experts will drain quickly unless you are also a competent communicator with a dose of managerial skill, able to run your own team of AI agents.

Perhaps the worst thing of all is that the creeping of AI into the field makes it very unpredictable as to what skills you should master as a future developer.

This is because we’re still looking for an answer to the fundamental question—if AI replaces most of us, will there be any humans left competent enough to modify the code if things go wrong in the future?

We have some examples of that in ancient IT systems written in outdated languages that few people know well enough to manage, not to mention update.

Since the demand for expertise in languages such as COBOL has gone down with time, there simply aren’t enough people to tackle the problems of old financial or government systems, which often hold millions of critical records of customers and citizens.

One could easily see how AI could have a similar impact, just on a far larger scale.

It’s a chicken or egg situation: what comes first? You need development skills to understand what AI is doing but how do you learn if AI is doing everything?

If you no longer need to master hard programming skills in any field, how many people will be left to fix things if they go wrong and we’re overly dependent on artificial intelligence?

The problem isn’t just that retaining skills most companies no longer need makes you a less attractive candidate. It is a fundamental lack of practice, since those skills would only be called on in rare emergencies.

You can’t be good at something you rarely do.

How do you plan for a career in tech?

It used to be simple: you specialised in a specific field, mastered the required tools and languages, continuously updated your skill set as the technology advanced, and you could expect to eventually become a well-paid, sought-after professional.

But now the question of how much your technical expertise is worth, compared with the ability to juggle AI bots that come up with solutions on their own, turns it all on its head.

Experts with years of practice are likely to remain in demand (a bit like an old mechanic still fixing modern cars today: the cars may be different, but many fundamentals remain the same, and his experience can’t easily be gained anymore). But young students in computer science will have a tough nut to crack in choosing between hard skills and competency in using AI tools to achieve the same or better outcome.

There will be some jobs for highly specialised experts and many jobs for those simply interacting with chatbots. But those stuck in the middle will soon have to pick their future between the two.

The question is whether you have what it takes to climb to the top, or whether you will descend to compete with Zoomers getting their AI-enabled coding lessons on TikTok.

Featured Image Credit: 123RF
