venturebeat.com

Meet Prompt Poet: The Google-acquired tool revolutionizing LLM prompt engineering

Michael Trestman

August 8, 2024 5:32 PM

Image Credit: Logitech

In the age of artificial intelligence, prompt engineering is an important new skill for harnessing the full potential of large language models (LLMs). This is the art of crafting complex inputs to extract relevant, useful outputs from AI models like ChatGPT. While many LLMs are designed to be friendly to non-technical users, and respond well to natural-sounding conversational prompts, advanced prompt engineering techniques offer another powerful level of control. These techniques are useful for individual users, and absolutely essential for developers seeking to build sophisticated AI-powered applications.

The Game-Changer: Prompt Poet

Prompt Poet is a groundbreaking tool developed by Character.ai, a platform and makerspace for personalized conversational AIs, which was recently acquired by Google. Prompt Poet potentially offers a look at the future direction of prompt context management across Google’s AI projects, such as Gemini.

Prompt Poet offers several key advantages, and its simplicity and focus set it apart from other frameworks such as LangChain:

Low Code Approach: Simplifies prompt design for both technical and non-technical users, unlike more code-intensive frameworks.

Template Flexibility: Uses YAML and Jinja2 to support complex prompt structures.

Context Management: Seamlessly integrates external data, offering a more dynamic and data-rich prompt creation process.

Efficiency: Reduces time spent on engineering string manipulations, allowing users to focus on crafting optimal prompt text.

This article focuses on the critical concept of context in prompt engineering, specifically the components of instructions and data. We’ll explore how Prompt Poet can streamline the creation of dynamic, data-rich prompts, enhancing the effectiveness of your LLM applications.

The Importance of Context: Instructions and Data

Customizing an LLM application, such as a chatbot, often means giving it specific instructions about how to act. This might mean describing a certain personality type, situation, or role, or even a specific historical or fictional person. For example, when asking for help with a moral dilemma, you can ask the model to answer in the style of someone specific, which will strongly influence the type of answer you get. Try variations of the following prompt to see how the details (like the people you pick) matter:

Simulate a panel discussion with the philosophers Aristotle, Karl Marx, and Peter Singer. Each should provide individual advice, comment on each other's responses, and conclude. Suppose they are very hungry.

The question: The pizza place gave us an extra pie, should I tell them or should we keep it?
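One way to try such variations systematically is to treat the prompt as a function of its details. The sketch below is only an illustration: the names `join_names` and `panel_prompt`, their parameters, and the alternative panelists are hypothetical choices, not part of any library.

```python
def join_names(names):
    """Join names as 'A and B' or 'A, B, and C'."""
    if len(names) <= 2:
        return " and ".join(names)
    return ", ".join(names[:-1]) + ", and " + names[-1]


def panel_prompt(panelists, question, condition=""):
    """Build the panel-discussion prompt for a given cast and question."""
    instructions = (
        f"Simulate a panel discussion with the philosophers {join_names(panelists)}. "
        "Each should provide individual advice, comment on each other's "
        "responses, and conclude."
    )
    if condition:
        instructions += f" {condition}"
    return f"{instructions}\n\nThe question: {question}"


question = (
    "The pizza place gave us an extra pie, "
    "should I tell them or should we keep it?"
)

# The prompt from the article.
original = panel_prompt(
    ["Aristotle", "Karl Marx", "Peter Singer"],
    question,
    condition="Suppose they are very hungry.",
)

# A variation with a different (arbitrarily chosen) cast and no extra condition.
variation = panel_prompt(["Immanuel Kant", "Simone de Beauvoir"], question)

print(original)
print()
print(variation)
```

Swapping the panelists or dropping the hunger condition changes only the arguments, which makes it easy to compare how each detail shifts the model's answer.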

Details matter. Effective prompt engineering also involves creating a specific, customized data context. This means providing the model with relevant facts, like personal user data, real-time information or specialized knowledge, which it wouldn’t have access to otherwise. This approach allows the AI to produce output far more relevant to the user’s specific situation than would be possible for an uninformed generic model.

Efficient Data Management with Prompt Templating

Data can be loaded in manually, just by typing it into ChatGPT. If you ask for advice about how to install some software, you have to tell it about your hardware. If you ask for help crafting the perfect resume, you have to tell it your skills and work history first. This is OK for personal use, but it does not scale to application development, where the data must be supplied programmatically for every user and every request. Even for personal use, manually inputting data for each interaction can be tedious and error-prone.

This is where prompt templating comes into play. Prompt Poet uses YAML and Jinja2 to create flexible and dynamic prompts, significantly enhancing LLM interactions.
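To make the idea concrete, here is a minimal sketch of YAML-plus-Jinja2 templating that uses the `jinja2` and PyYAML packages directly. It illustrates the general render-then-parse approach and is not Prompt Poet's own implementation; the template text, the `render_parts` helper, and the sample data are all assumptions made for illustration.

```python
import jinja2
import yaml  # provided by the PyYAML package

# Hypothetical template: YAML gives the prompt its structure (a list of
# named parts, each with a role), and Jinja2 placeholders mark where data goes.
RAW_TEMPLATE = """
- name: instructions
  role: system
  content: |
    You are a resume coach helping {{ user_name }}.
- name: user facts
  role: system
  content: |
    Known skills:
    {% for skill in skills %}
    - {{ skill }}
    {% endfor %}
"""


def render_parts(raw_template, data):
    """Fill in the Jinja2 placeholders, then parse the result as YAML."""
    # trim_blocks/lstrip_blocks keep the {% ... %} lines from leaving
    # stray blank lines and indentation in the rendered text.
    env = jinja2.Environment(trim_blocks=True, lstrip_blocks=True)
    rendered = env.from_string(raw_template).render(**data)
    return yaml.safe_load(rendered)


parts = render_parts(
    RAW_TEMPLATE,
    {"user_name": "Ada", "skills": ["Python", "SQL"]},
)

# Convert the parsed parts into chat-style messages.
messages = [{"role": part["role"], "content": part["content"]} for part in parts]

for message in messages:
    print(message["role"], "->", repr(message["content"]))
```

The same template can be reused for any user by changing only the data dictionary. Note that this naive version would break if a value contained newlines, since the inserted text would no longer match the YAML block's indentation.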

Example: Daily Planner

To illustrate the power of Prompt Poet, let’s work through a simple example: a daily planning assistant that will remind the user of upcoming events and provide contextual information to help prepare for their day, based on real-time data.

For example, you might want output like this:

Good morning! It looks like you have virtual meetings in the morning and an afternoon hike planned. Don't forget water and sunscreen for your hike since it's sunny outside.

Here are your schedule and current conditions for today:

- **09:00 AM:** Virtual meeting with the marketing team

- **11:00 AM:** One-on-one with the project manager

- **07:00 PM:** Afternoon hike at Discovery Park with friends

It's currently 65°F and sunny. Expect good conditions for your hike. Be aware of a bridge closure on I-90, which might cause delays.

To do that, we’ll need to provide at least two different pieces of context to the model: 1) customized instructions about the task, and 2) the data required to define the factual context of the user interaction.

Prompt Poet gives us some powerful tools for handling this context. We’ll start by creating a template to hold the general form of the instructions, and filling it in with specific data at the time when we want to run the query. For the above example, we might use the following Python code to create a `raw_template` and the `template_data` to fill it, which are the components of a Prompt Poet `Prompt` object.

    raw_template = """
    - name: system instructions
      role: system
      content: |
        You are a helpful daily planning assistant. Use the following information about the user's schedule and conditions in their area to provide a detailed summary of the day. Remind them of upcoming events and bring any warnings or unusual conditions to their attention, including weather, traffic, or air quality warnings. Ask if they have any follow-up questions.

    - name: realtime data
      role: system
      content: |
        Weather in {{ user_city }}, {{ user_country }}:
        - Temperature: {{ user_temperature }}°C
        - Description: {{ user_description }}

        Traffic in {{ user_city }}:
        - Status: {{ traffic_status }}

        Air Quality in {{ user_city }}:
        - AQI: {{ aqi }}
        - Main Pollutant: {{ main_pollutant }}

        Upcoming Events:
        {% for event in events %}
        - {{ event.start }}: {{ event.summary }}
        {% endfor %}
    """

The code below uses Prompt Poet’s `Prompt` class to populate data from multiple data sources into a template to form a single, coherent prompt. This allows us to invoke a daily planning assistant to provide personalized, context-aware responses. By pulling in weather data, traffic updates, AQI information and calendar events, the model can offer detailed summaries and reminders, enhancing the user experience.

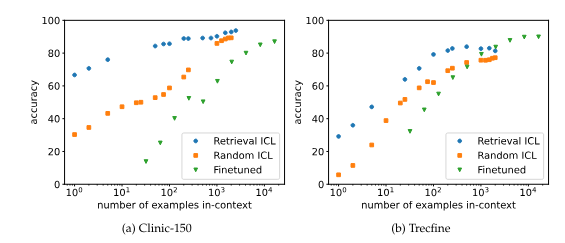

You can clone and experiment with the full working code example, which also implements few-shot learning, a powerful prompt engineering technique that involves presenting the models with a small set of training examples.

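    import openai
    from prompt_poet import Prompt

    # User data. The get_*_info helpers, user_city, user_country, and
    # calendar_events are assumed to be defined elsewhere, as in the full example.
    user_weather_info = get_weather_info(user_city)
    traffic_info = get_traffic_info(user_city)
    aqi_info = get_aqi_info(user_city)
    events_info = get_events_info(calendar_events)

    # Map each template variable to its value.
    template_data = {
        "user_city": user_city,
        "user_country": user_country,
        "user_temperature": user_weather_info["temperature"],
        "user_description": user_weather_info["description"],
        "traffic_status": traffic_info,
        "aqi": aqi_info["aqi"],
        "main_pollutant": aqi_info["main_pollutant"],
        "events": events_info,
    }

    # Create the prompt using Prompt Poet
    prompt = Prompt(
        raw_template=raw_template,
        template_data=template_data,
    )

    # Get response from OpenAI.
    # openai.ChatCompletion is the interface of the pre-1.0 openai SDK; with
    # openai>=1.0 the equivalent call is OpenAI().chat.completions.create(...).
    model_response = openai.ChatCompletion.create(
        model="gpt-4",
        messages=prompt.messages,
    )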
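The helper functions called in the code above are not shown in this article. To make the expected data shapes concrete, the sketch below uses hypothetical stand-ins that return hard-coded placeholder values. The function names match the calls above, but their bodies, return values, and the sample city are assumptions for illustration only; a real version would query weather, traffic, air quality, and calendar services.

```python
def get_weather_info(city):
    # Stub: a real implementation would call a weather service for `city`.
    return {"temperature": 18, "description": "sunny"}


def get_traffic_info(city):
    # Stub: returns a short status string, as the template expects.
    return "Bridge closure on I-90"


def get_aqi_info(city):
    # Stub: placeholder air-quality values.
    return {"aqi": 42, "main_pollutant": "pm2.5"}


def get_events_info(calendar_events):
    # Keep only the fields the template reads: event.start and event.summary.
    return [
        {"start": event["start"], "summary": event["summary"]}
        for event in calendar_events
    ]


# Placeholder inputs (hypothetical).
user_city = "Seattle"
user_country = "US"
calendar_events = [
    {"start": "09:00 AM", "summary": "Virtual meeting with the marketing team", "id": 1},
    {"start": "11:00 AM", "summary": "One-on-one with the project manager", "id": 2},
]

user_weather_info = get_weather_info(user_city)
aqi_info = get_aqi_info(user_city)

template_data = {
    "user_city": user_city,
    "user_country": user_country,
    "user_temperature": user_weather_info["temperature"],
    "user_description": user_weather_info["description"],
    "traffic_status": get_traffic_info(user_city),
    "aqi": aqi_info["aqi"],
    "main_pollutant": aqi_info["main_pollutant"],
    "events": get_events_info(calendar_events),
}

print(template_data)
```

Every key in `template_data` corresponds to a placeholder in the template, and each event is a mapping with `start` and `summary` entries, which is what the `{% for event in events %}` loop reads.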

Conclusion

Mastering the fundamentals of prompt engineering, particularly the roles of instructions and data, is crucial for maximizing the potential of LLMs. Prompt Poet stands out as a powerful tool in this field, offering a streamlined approach to creating dynamic, data-rich prompts.

Prompt Poet’s low-code, flexible template system makes prompt design accessible and efficient. By integrating external data that was not part of an LLM’s training data, data-filled prompt templates help make AI responses accurate and relevant to the user.

By using tools like Prompt Poet, you can elevate your prompt engineering skills and develop innovative AI applications that meet diverse user needs with precision. As AI continues to evolve, staying proficient in the latest prompt engineering techniques will be essential.